Stopping Spark through Ambari

Labels: Apache Ambari, Apache Spark

Created 02-04-2016 04:14 PM

Hi,

Just wondering what happens when the Spark service is selected in Ambari and stopped. Which services are stopped behind the scenes? To my understanding, when Spark is installed through Ambari, it installs spark-client, thrift-server and history-server. When I stop Spark through Ambari, what action is invoked? Is it only the spark-client that is stopped, or all three?

Alternatively, if I have to stop spark-client through the CLI, how should it be done?

Please correct me in case my understanding is wrong in any of the above.

Thanks

Created on 02-04-2016 04:22 PM - edited 08-19-2019 03:06 AM

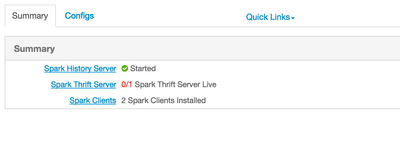

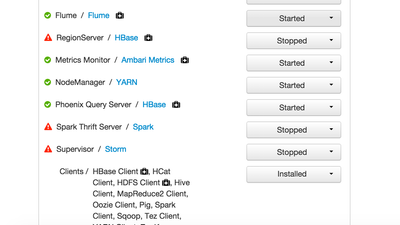

This is what it looks like.

When you stop Spark through Ambari, it stops all of the components shown.

If you just want to start or stop a particular component, click that component and take the action there.

For example, click Spark Thrift Server and you can start or stop just that.

I clicked Thrift Server and I can start it from there.

Created 02-04-2016 04:16 PM

@Greenhorn Techie Stopping Spark via Ambari stops all Spark-related services. I wouldn't recommend stopping an Ambari-managed service via the shell.
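
For scripted use, the same stop action can be sent to Ambari's REST API instead of the UI, so Ambari stays aware of the service state. The following is only a sketch: the host, cluster name, user and the `SPARK` service name are assumptions that must be replaced with your own values. In Ambari's API a stopped service is one whose desired state is `INSTALLED`.

```shell
# Sketch: build (and optionally send) the Ambari REST call that stops a service.
# Every value below is a placeholder, not something taken from this thread.
AMBARI_URL="${AMBARI_URL:-http://ambari.example.com:8080}"  # hypothetical host
CLUSTER="${CLUSTER:-mycluster}"                              # hypothetical cluster name
SERVICE="${SERVICE:-SPARK}"                                  # assumed service name

# JSON body: state INSTALLED means "stopped", STARTED means "running".
stop_service_payload() {
  printf '{"RequestInfo":{"context":"Stop %s via REST"},"Body":{"ServiceInfo":{"state":"INSTALLED"}}}' "$1"
}

# URL of the service resource inside the cluster.
service_url() {
  printf '%s/api/v1/clusters/%s/services/%s' "$AMBARI_URL" "$CLUSTER" "$1"
}

# Prints the request by default; set RUN=1 to actually send it with curl.
stop_service() {
  url="$(service_url "$1")"
  payload="$(stop_service_payload "$1")"
  if [ "${RUN:-0}" = "1" ]; then
    # Ambari rejects modifying requests without the X-Requested-By header.
    curl -u "${AMBARI_USER:-admin}" -H 'X-Requested-By: ambari' \
      -X PUT -d "$payload" "$url"
  else
    echo "PUT $url"
    echo "$payload"
  fi
}

stop_service "$SERVICE"
```

Running it as-is only prints the request, which makes it easy to check the URL and body before sending anything to a real cluster.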

Created 02-04-2016 04:20 PM

@Artem Ervits Thanks for the quick response. I have two Spark clients at the moment: one is Ambari-managed and the other is outside Ambari, set up manually. So, if I have to stop the manually managed one, how do I do it?

Thanks

Created 02-04-2016 04:29 PM

@Neeraj Sabharwal, Thanks. Please see my follow-up query below.

Created 02-04-2016 04:48 PM

Please see this; you can use that landing page for all your future questions on manual install/use.

Now, going back to your requirement: please take a few minutes to read this: http://spark.apache.org/docs/latest/spark-standalone.html

- `sbin/start-all.sh` - Starts both a master and a number of slaves as described above.
- `sbin/stop-master.sh` - Stops the master that was started via the `sbin/start-master.sh` script.
- `sbin/stop-slaves.sh` - Stops all slave instances on the machines specified in the `conf/slaves` file.
- `sbin/stop-all.sh` - Stops both the master and the slaves as described above.

Created 02-04-2016 05:33 PM

@Neeraj Sabharwal I believe these apply to Spark standalone mode; correct me if I'm wrong. However, I wanted to understand how it works in YARN mode. Specifically, my question is the following:

@Artem mentioned that 'it' would stop immediately after app execution from a shell. My question is: what is 'it' here? Is it the Spark client, the Spark driver, or the app itself? Alternatively, what does Ambari show as running that can be stopped through the UI? I presume it's the spark-client. If so, what needs to be done to stop the spark-client, similar to what is done through Ambari?

Created 02-04-2016 05:38 PM

@Greenhorn Techie The Spark client is just a client, so there is no start or stop.

As you can see from my screenshot, when you stop Spark, it stops all those components.

Now, for example, when you run the following, it launches a Spark job and shuts the job down once it finishes.

    # Run on a YARN cluster
    export HADOOP_CONF_DIR=XXX
    # --master can also be `yarn-client` for client mode
    ./bin/spark-submit \
      --class org.apache.spark.examples.SparkPi \
      --master yarn-cluster \
      --executor-memory 20G \
      --num-executors 50 \
      /path/to/examples.jar \
      1000
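
If a job submitted this way has to be stopped before it finishes by itself, the usual route is through YARN rather than through anything Spark-specific. A minimal sketch, assuming the `yarn` CLI is on the path and using a made-up application ID (the helper names `run`, `list_running_spark_apps` and `kill_spark_app` are illustrative only):

```shell
# Sketch: stop a Spark application on YARN before it finishes on its own.
# Dry run by default: commands are printed. Set DRY_RUN=0 to execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Show running applications of type SPARK, together with their application IDs.
list_running_spark_apps() {
  run yarn application -list -appStates RUNNING -appTypes SPARK
}

# Ask the ResourceManager to kill one application by its ID.
kill_spark_app() {
  run yarn application -kill "$1"
}

list_running_spark_apps
kill_spark_app "application_0000000000000_0001"  # placeholder ID, not a real one
```

In `yarn-cluster` mode the driver runs inside the cluster, so interrupting the local `spark-submit` process does not necessarily stop the application; killing it by application ID does.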

Created 02-04-2016 05:51 PM

@Neeraj Sabharwal Thanks. I agree with all the responses given so far. Here is my understanding, to summarise.

Only spark-thrift-server and spark-history-server can be stopped, either through Ambari or through the CLI.

spark-client can only be installed (which puts the required libraries in the specified machine's directory) or uninstalled. There is no such thing as stopping or starting it. The same is the case through Ambari.

When using spark-shell or spark-submit, the application runs interactively or is submitted to the cluster, and once the application finishes running, the Spark driver program ends.
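
For a manually installed Spark (not managed by Ambari), the CLI side of this summary could look roughly like the sketch below. It relies on the stop scripts that ship in Spark's `sbin` directory; the `SPARK_HOME` path is an assumption, and the scripts should be run as the same user that started the daemons.

```shell
# Sketch: stop the two long-running Spark daemons from the CLI.
# SPARK_HOME is an assumed location; point it at your own install.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"

# Dry run by default: print the command. Set DRY_RUN=0 to execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Each daemon has its own stop script under sbin/.
stop_thrift_server()  { run "$SPARK_HOME/sbin/stop-thriftserver.sh"; }
stop_history_server() { run "$SPARK_HOME/sbin/stop-history-server.sh"; }

stop_thrift_server
stop_history_server

# There is no equivalent for spark-client: it is only libraries and
# configuration on disk, so there is no process to stop.
```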

Created 02-04-2016 05:53 PM

@Greenhorn Techie Thanks for being so specific ....Perfect!! 🙂