Suggestions to handle high volume streaming data in NiFi

Labels: Apache NiFi

Created 01-12-2017 03:54 AM

Hi guys,

I have a use case where we need to load near-real-time streaming data into HDFS. The incoming data is high volume, about 1,500 messages per second. In my NiFi dataflow, a ListenTCP processor ingests the streaming data, but we are required to check that each incoming message has the required structure. So messages from ListenTCP go to a custom processor that does the structure check, and only messages with the right structure move on to MergeContent and then PutHDFS. Right now the validation processor has become a bottleneck, and the backpressure from it is causing messages to queue up on the source system (the one sending the messages).

Since the validation processor cannot keep up with the incoming data, I'm thinking of writing the messages from ListenTCP to the file system first, and then letting the validation processor pick them up from there and continue forward. Is this the right approach, or are there better alternatives?

Thanks in advance.

Created 01-12-2017 05:15 AM

Add more nodes to your NiFi cluster, or add RAM. Move to a bigger box (more RAM, more CPU cores).

1,500 messages per second is not a lot for NiFi.

You should be able to process 10k per second easily.

What are your JVM settings?

This one is important: https://community.hortonworks.com/articles/30424/optimizing-performance-of-apache-nifis-network-lis....

See:

https://community.hortonworks.com/articles/9782/nifihdf-dataflow-optimization-part-1-of-2.html

https://dzone.com/articles/apache-nifi-10-cheatsheet

https://community.hortonworks.com/articles/68375/nifi-cluster-and-load-balancer.html

Check whether something is failing, or where the flow is slow.

Created 01-12-2017 05:19 AM

See above comments.

The main fix is to increase your JVM memory. If you give it 12-16 GB you should be awesome.

If it's a VM environment, give the node 16-32 or more cores. If that's not enough, go to multiple nodes in the cluster.

One node should scale to 10k/sec easy. How big are these files? Anything failing? Errors in the logs?

https://nifi.apache.org/docs/nifi-docs/html/administration-guide.html
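For reference, NiFi's JVM heap is set in `conf/bootstrap.conf` through the `java.arg.2` and `java.arg.3` entries. A minimal sketch, assuming the 12 GB figure suggested above; the values are illustrative only, and the right size depends on how much RAM the node actually has:

```
# conf/bootstrap.conf
# Illustrative values (assumption): initial and maximum heap both set to 12 GB
java.arg.2=-Xms12g
java.arg.3=-Xmx12g
```

NiFi has to be restarted for a change to this file to take effect.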

Created on 01-12-2017 02:39 PM - edited 08-19-2019 03:06 AM

Thanks a lot @Timothy Spann I'm going to work with our Admin about the JVM settings and about the # of cores we have.

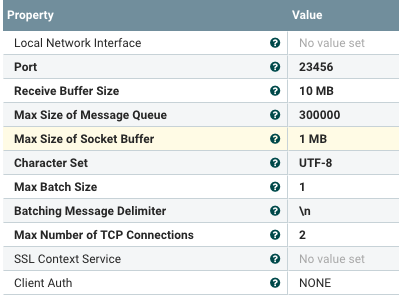

The flowfiles are small, about 5 KB each or less. ListenTCP processor is throwing these errors - "Internal queue at maximum capacity, could not queue event"; and messages are queuing on the source system side. Below are the memory settings for the ListenTCP that I set.

Created 01-12-2017 02:40 PM

Also, I'm in the process of having the Socket buffer (for ListenTCP) increased to 4 MB (the max the Unix admins can change it to).

Created 01-12-2017 03:01 PM

The single biggest performance improvement for ListenTCP will be increasing the "Max Batch Size" from 1 to something like 1000, or maybe even more. The reason is that it will drastically reduce the number of flow files produced by ListenTCP, which in turn drastically reduces the amount of I/O to the internal NiFi repositories.

The downside is that you won't have a single message per flow file anymore, so your validation needs to work differently. If you can change your custom processor to stream in a flow file, read each line, and write only the validated lines to the output stream, then it should work well. If the validation processor is still the bottleneck, you could increase the concurrent tasks of this processor slightly so that it keeps up with the batches coming out of ListenTCP.
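The line-by-line filtering described above could look roughly like the following. This is a minimal sketch in plain Java, not the poster's actual processor: the class name `BatchLineFilter`, the method `filterValidLines`, and the pipe-delimited check in `hasThreeFields` are all hypothetical stand-ins, and it assumes messages are newline-delimited UTF-8 text. In a real custom processor, the body of `filterValidLines` would typically run inside the stream callback passed to `ProcessSession.write`, which supplies the input and output streams.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.io.OutputStreamWriter;
import java.nio.charset.StandardCharsets;
import java.util.function.Predicate;

class BatchLineFilter {

    /**
     * Streams newline-delimited messages from in to out, copying only the
     * lines accepted by isValid. Returns the number of lines written.
     * The whole batch is never held in memory, only one line at a time.
     */
    static long filterValidLines(InputStream in, OutputStream out,
                                 Predicate<String> isValid) throws IOException {
        BufferedReader reader =
                new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8));
        BufferedWriter writer =
                new BufferedWriter(new OutputStreamWriter(out, StandardCharsets.UTF_8));
        long kept = 0;
        String line;
        while ((line = reader.readLine()) != null) {
            if (isValid.test(line)) {
                writer.write(line);
                writer.write('\n');
                kept++;
            }
        }
        // Flush but do not close: the caller owns both streams.
        writer.flush();
        return kept;
    }

    /** Hypothetical structure check: exactly three non-empty pipe-delimited fields. */
    static boolean hasThreeFields(String line) {
        String[] parts = line.split("\\|", -1);
        if (parts.length != 3) {
            return false;
        }
        for (String part : parts) {
            if (part.isEmpty()) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        // A small batch standing in for one flow file produced by ListenTCP.
        String batch = "a|b|c\nbroken line\nd|e|f\n";
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long kept = filterValidLines(
                new ByteArrayInputStream(batch.getBytes(StandardCharsets.UTF_8)),
                out,
                BatchLineFilter::hasThreeFields);
        System.out.println(kept + " valid lines kept");
        System.out.print(new String(out.toByteArray(), StandardCharsets.UTF_8));
    }
}
```

Because invalid lines are simply skipped, one batched flow file in produces one (possibly smaller) flow file out, so the repositories still see only a few flow files per second.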

Created 01-12-2017 04:56 PM

Thanks for the suggestion @Bryan Bende; I'll try that approach, in addition to the JVM and core changes that Timothy mentioned.

I was thinking: if we batch messages at ListenTCP, could we split them back into individual flow files with SplitText (the messages are text) before passing them to our custom processor, to minimize the rework in the custom processor? Do you see any performance hit with this idea?

Thanks.

Created 01-12-2017 06:51 PM

You can, but you are just shifting the problem downstream from ListenTCP to SplitText. SplitText now has to produce thousands/millions of flow files that would have been coming out of ListenTCP. It is slightly better though because it gives ListenTCP a chance to keep up with the source.

It would be most efficient to avoid splitting to the individual flow files if possible. Since you are merging things together before HDFS, it shouldn't matter if you are merging many flow files with one message each, or a few flow files containing thousands of messages each.

It just comes down to whether you want to rewrite some of the logic in your custom processor.
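To put rough numbers on that trade-off, here is a back-of-the-envelope sketch using the figures from this thread (about 1,500 messages per second and a "Max Batch Size" of 1000). It assumes every batch is completely full, which is optimistic since the batch size is only an upper bound, and the class and method names are hypothetical.

```java
class FlowFileRateEstimate {

    /** Flow files created per second if every flow file carries batchSize messages. */
    static double flowFilesPerSecond(double messagesPerSecond, int batchSize) {
        if (batchSize < 1) {
            throw new IllegalArgumentException("batchSize must be >= 1");
        }
        return messagesPerSecond / batchSize;
    }

    public static void main(String[] args) {
        double rate = 1500; // messages per second, from the original question
        int batch = 1000;   // "Max Batch Size" value suggested in the thread

        // One message per flow file: ListenTCP creates every flow file itself.
        double unbatched = flowFilesPerSecond(rate, 1);
        // Batched and validated as a stream: only the batches are created.
        double batched = flowFilesPerSecond(rate, batch);
        // Batched, then SplitText: the batches plus one split per message.
        double batchedThenSplit = batched + unbatched;

        System.out.println("one message per flow file: " + unbatched + " flow files/s");
        System.out.println("batched, no split:         " + batched + " flow files/s");
        System.out.println("batched, then SplitText:   " + batchedThenSplit + " flow files/s");
    }
}
```

Under these assumptions, splitting creates about as many flow files overall as not batching at all; the difference is that SplitText creates them rather than ListenTCP.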

Created 01-12-2017 08:30 PM

Thanks for clarifying @Bryan Bende, it makes sense.