Cloudera Community: Support Questions

The Pig Script Hangs While Running

Created 05-02-2016 07:56 AM

Hi all,

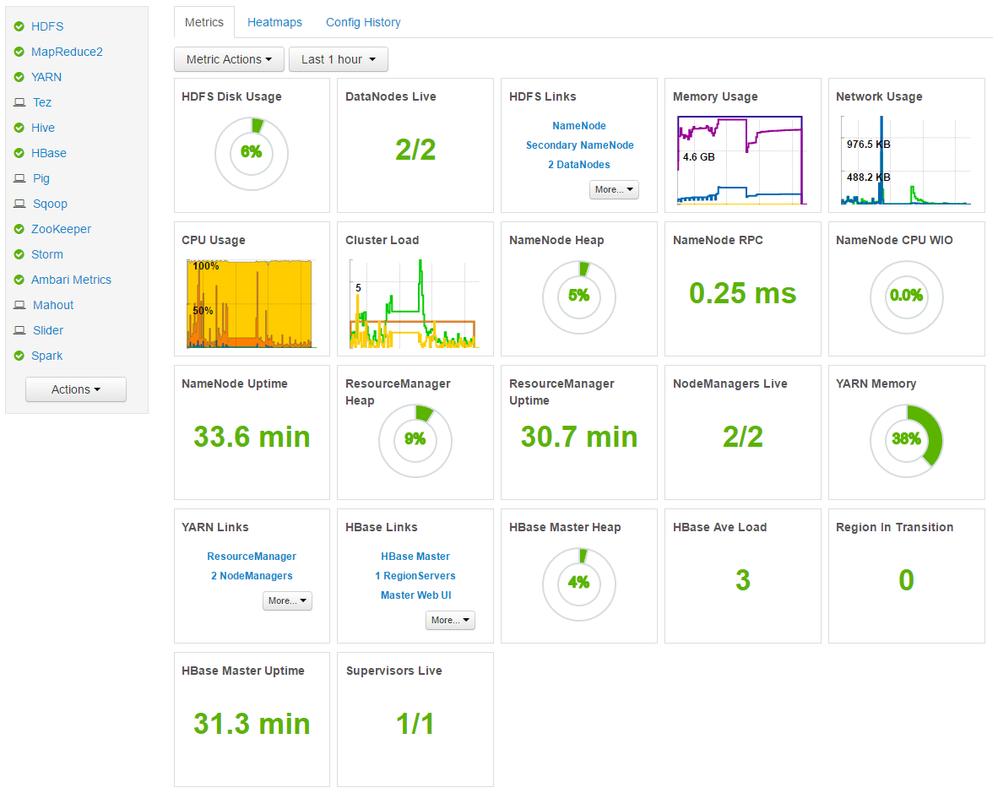

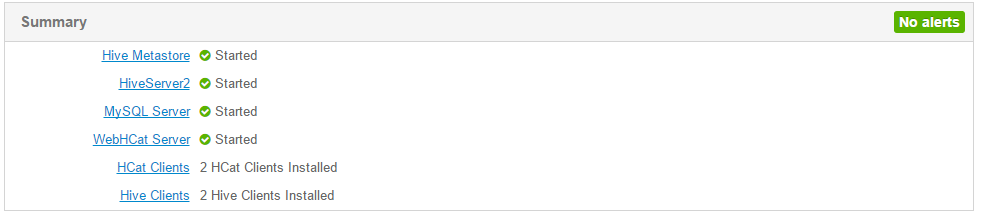

I have created a 2-node cluster (2 cores and 8 GB RAM on each node) and started following the tutorial on this page. Whenever I try to execute the example Pig script (Step 3.4: Execute Pig Script on Tez), the script hangs and its status stays at "Running". As the dashboard screenshot shows, all my services seem to be up and running.

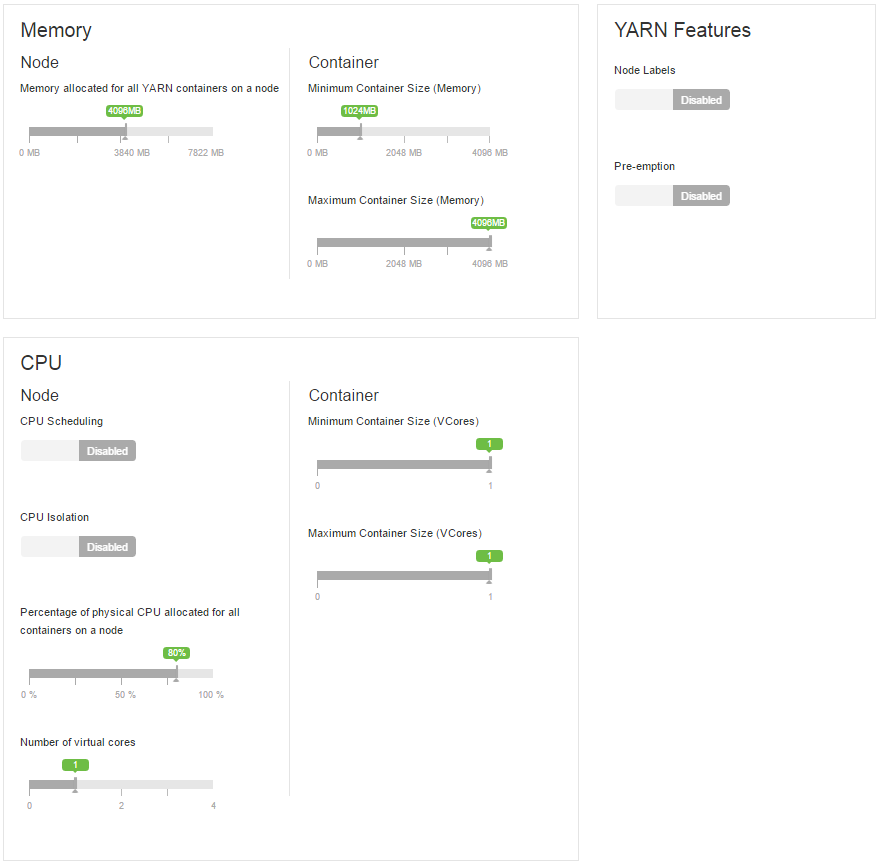

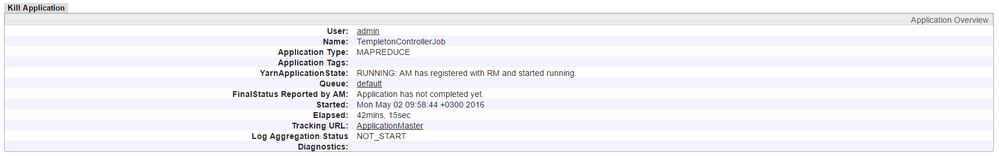

I have also attached screenshots of the basic YARN config screen and of the details for the specific MapReduce job.

What might be the problem in my case?

Thank you.

Created 05-04-2016 07:27 AM

Considering your last comment and the information provided, I had a look at:

https://cwiki.apache.org/confluence/display/Hive/HCatalog+LoadStore#HCatalogLoadStore-HCatStorer

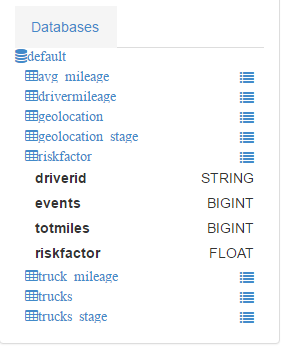

Can you confirm that the prerequisites are met in your case? Does the table 'riskFactor' exist (with the correct schema)?

Also, is Hive up and running?

Are you running your script on a node where Hive is installed? Are you in a clustered environment?

Created 05-04-2016 07:48 AM

Thank you for your quick feedback. I can confirm that the table 'riskfactor' exists with the correct schema (I used the HiveQL statement from the tutorial). Hive is up and running. I am in a 2-node cluster environment.

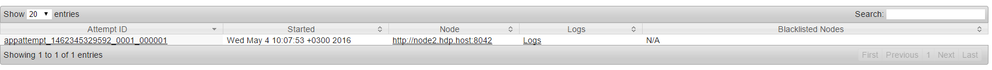

The only thing I am not sure about is whether I am running my script where Hive is installed. I can confirm that services such as Hive Metastore and HiveServer2 are installed on the second node of my cluster, and the MapReduce job I see in the ResourceManager UI shows that it runs on the second node (screenshot also attached).

Created 05-04-2016 08:48 AM

Are you running the Pig script from the Pig view in Ambari, as described in the tutorial? If so, could you get the logs from the Pig view?

Created 05-04-2016 11:26 AM

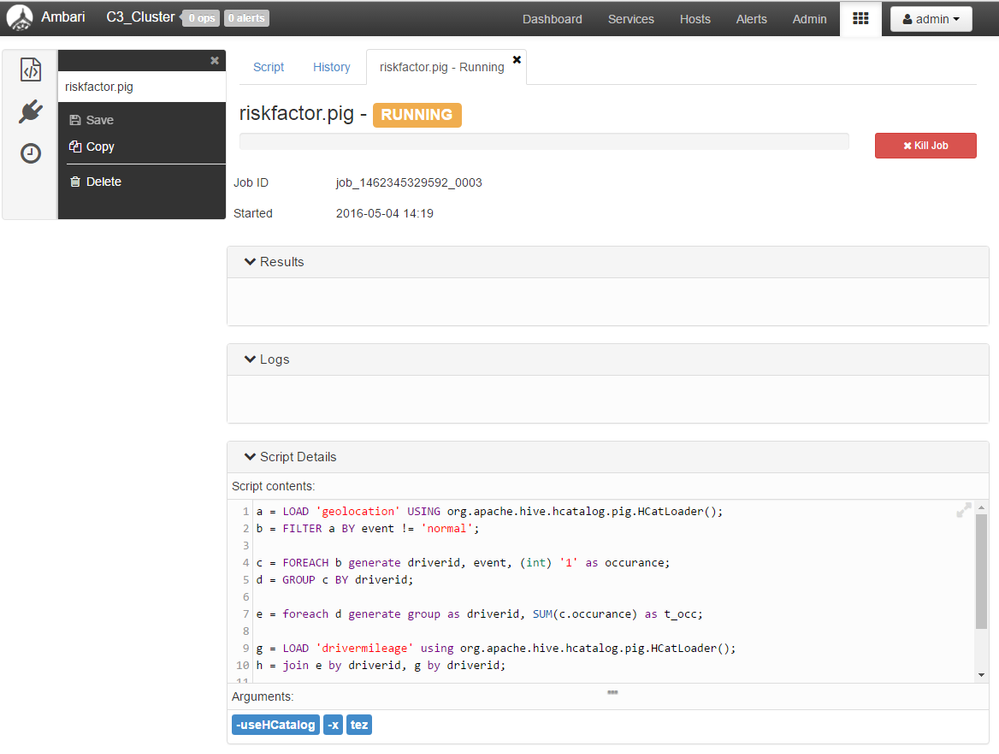

Yes, I run the Pig script from the Pig view in Ambari. However, since it constantly stays in the "Running" status, no further log output is displayed in the view's "Logs" panel.

By the way, to test the scenario you mentioned, I changed the table name in the store operation to a nonexistent table, and it immediately threw an error saying the table was not found. So we can assume that the script sees the "riskfactor" table but somehow gets stuck performing the store operation.

Created 05-04-2016 11:30 AM

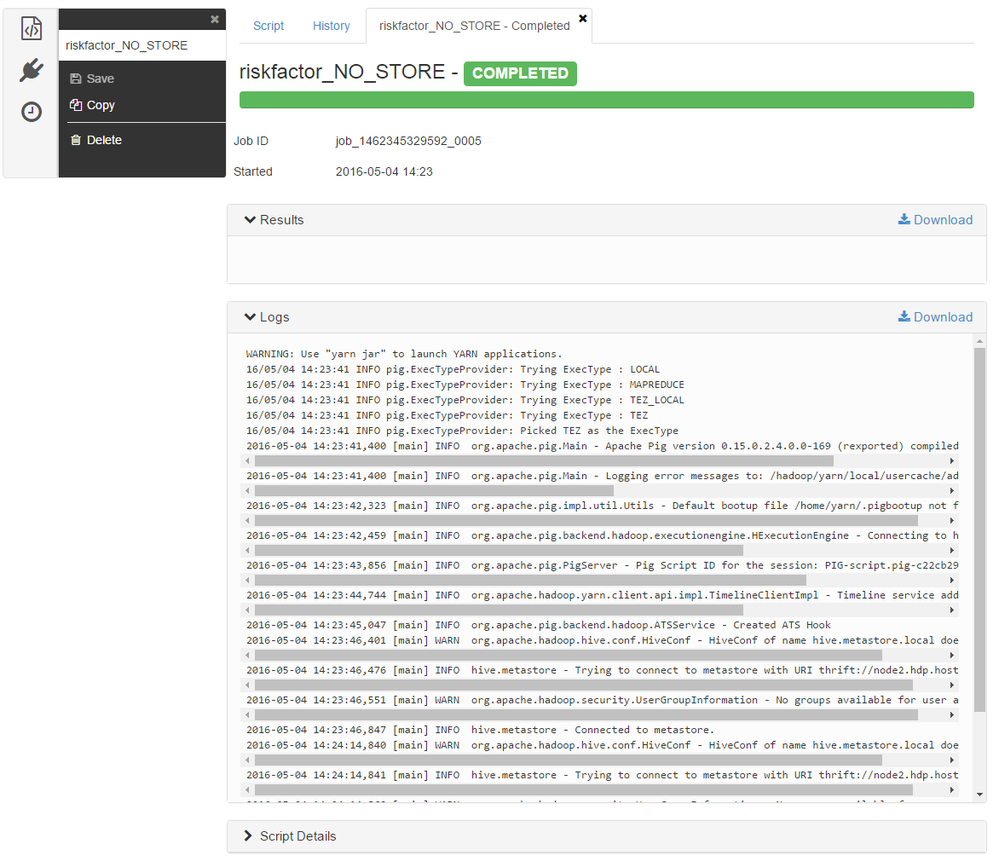

I am also adding a screenshot of the scenario where I do not use the "Store" operation.

Created 05-04-2016 11:38 AM

@Muhammed Yetginbal One option would be to test launching the Pig script from the console (https://wiki.apache.org/pig/RunPig). Using the client (Grunt), you could try executing the same commands. It might give you more details about what is happening while the script is "running".
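A minimal sketch, in Python, of launching the same script from the console instead of the Ambari Pig view. It assumes the `pig` client is on the PATH of a node that has the Hive/HCatalog client configuration, and that the script is saved locally as `riskfactor.pig` (a hypothetical file name). `-x tez` selects the Tez execution engine, matching the "Execute on Tez" option in the view, and `-useHCatalog` adds the HCatalog jars needed by HCatStorer. The helper names `build_pig_command` and `run_pig` are illustrative only.

```python
import shutil
import subprocess


def build_pig_command(script_path, use_tez=True, use_hcatalog=True):
    """Build the pig CLI argument list for running a script file in batch mode."""
    cmd = ["pig"]
    if use_tez:
        # Select the Tez execution engine (the "Execute on Tez" option in the Pig view).
        cmd += ["-x", "tez"]
    if use_hcatalog:
        # Put the HCatalog jars on the classpath so HCatLoader/HCatStorer resolve.
        cmd.append("-useHCatalog")
    cmd.append(script_path)
    return cmd


def run_pig(script_path):
    """Run the script, letting Pig's console output stream to this terminal."""
    if shutil.which("pig") is None:
        raise FileNotFoundError("pig client not found on PATH")
    # stdout/stderr are inherited, so log lines show up as the job progresses.
    return subprocess.call(build_pig_command(script_path))
```

For example, `run_pig("riskfactor.pig")` returns Pig's exit code. To step through the statements interactively in Grunt instead, run `pig -x tez -useHCatalog` without a script argument and enter the statements one at a time.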

Created 05-04-2016 12:51 PM

Created 05-04-2016 05:04 PM

Honestly... I don't know 🙂 I'd suggest digging into the logs (Ambari, MapReduce, YARN, Hive, etc.) to find an explanation. But maybe someone else on HCC will have an idea 🙂
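A minimal sketch of one place to start on the YARN side, assuming the ResourceManager web address is `http://<rm-host>:8088` (8088 is the default port; the host is a placeholder). It queries the ResourceManager REST API endpoint `/ws/v1/cluster/apps` for applications in the `ACCEPTED` or `RUNNING` state. An application that stays in `ACCEPTED` has been accepted by the scheduler but has not started its ApplicationMaster yet, which on a small cluster often points to insufficient container resources. The helper names `fetch_apps`, `summarize`, and `stuck_in_accepted` are hypothetical.

```python
import json
import urllib.request


def fetch_apps(rm_url, states=("ACCEPTED", "RUNNING")):
    """Query the ResourceManager REST API for applications in the given states."""
    url = f"{rm_url}/ws/v1/cluster/apps?states={','.join(states)}"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def summarize(payload):
    """Return (id, name, state, runningContainers) for each app in the response."""
    # The ResourceManager returns {"apps": null} when nothing matches the filter.
    apps = (payload.get("apps") or {}).get("app", [])
    return [
        (a["id"], a.get("name", ""), a["state"], a.get("runningContainers", 0))
        for a in apps
    ]


def stuck_in_accepted(payload):
    """IDs of applications YARN has accepted but not yet started running."""
    return [app_id for app_id, _, state, _ in summarize(payload) if state == "ACCEPTED"]
```

For example, `stuck_in_accepted(fetch_apps("http://rm-host:8088"))` lists the application IDs worth inspecting (`rm-host` is a placeholder). For any of those IDs, `yarn logs -applicationId <id>` retrieves the aggregated container logs once they are available.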

Created 05-04-2016 06:07 PM

Thank you, Pierre 🙂 You've helped me a lot, much appreciated 🙂 I'll accept your answer, since it was the only way I was able to execute the script.

Created 12-06-2017 01:52 PM

Hello, I'm facing the same issue while following the same tutorial:

https://hortonworks.com/tutorial/hadoop-tutorial-getting-started-with-hdp/section/4/.

Did you find a solution to this issue? I'd be grateful for any help.
