Thoughts on NiFi error handling

Labels: Apache NiFi

Created on 11-22-2018 02:39 PM - edited 08-17-2019 04:28 PM

Hey guys!

Hope you are having a good day!

I have a couple of questions regarding error handling in NiFi. I found the following links very useful for learning more about it:

- https://community.hortonworks.com/questions/77336/nifi-best-practices-for-error-handling.html

- https://community.hortonworks.com/articles/76598/nifi-error-handling-design-pattern-1.html

- https://pierrevillard.com/2017/05/11/monitoring-nifi-introduction/

So regarding error handling there seem to be two general ways to go:

- flows that you want to retry

- flows that you don't want to retry

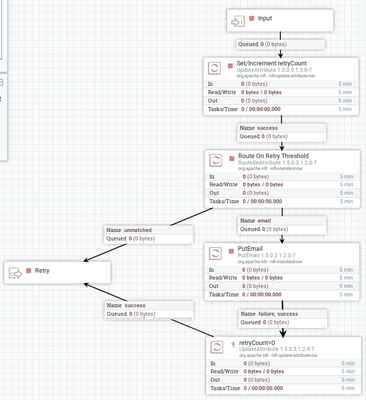

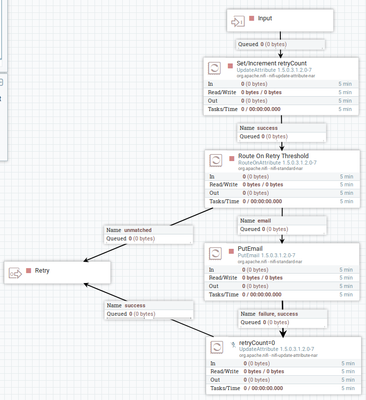

Flows that you want to retry

Count the number of retries and, once a certain number of retries is reached, send an email to the admin. After that, let the flowfile wait in the queue in front of a disabled processor:

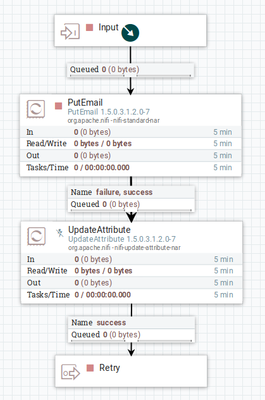
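To make the counting logic concrete, here is a minimal Python sketch of the decision the retry branch makes. It only models the logic and is not NiFi code; in a flow this would typically be expressed with processors and flowfile attributes. The attribute name `retry.count`, the limit of 3, and the relationship names `retry` and `alert_and_park` are assumptions chosen for illustration.

```python
from typing import Dict, Tuple

MAX_RETRIES = 3  # assumed limit, pick whatever fits the flow


def route_failed_flowfile(
    attributes: Dict[str, str], max_retries: int = MAX_RETRIES
) -> Tuple[str, Dict[str, str]]:
    """Decide what to do with a flowfile that arrived on a failure relationship.

    Returns the (hypothetical) relationship name and the updated attributes.
    """
    updated = dict(attributes)  # do not mutate the caller's attributes
    count = int(updated.get("retry.count", "0")) + 1
    updated["retry.count"] = str(count)
    if count <= max_retries:
        # Send the flowfile back to the failing processor.
        return "retry", updated
    # Limit exceeded: notify the admin and queue the flowfile
    # in front of a disabled processor so no data is dropped.
    return "alert_and_park", updated
```

With the assumed limit of 3, a flowfile is sent back three times and is parked, with an alert, on the fourth failure.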

Flows that you don't want to retry

No need to count here: simply send an email and wait for the admin to fix the problem.

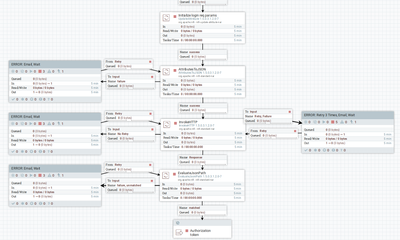

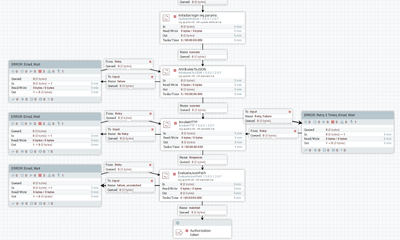

In both cases, we do not want to drop data that is routed to e.g. failure. Instead, we route the data to a disabled processor so that it stays queued. After fixing the problem, the admin can enable the processor and the data can be processed again. Here is a full example (a flow to retrieve an authentication token).

What are your thoughts on that? Do you think this kind of error handling is correct, or can it be improved? What bothers me is that the ERROR process group will be all over the place. However, I do not see any way to centralize it (since we have to deal with each failure flow individually in order not to lose data).

Created 11-27-2018 07:53 AM

Hi @Artjom Zab

I'm facing the same issue and followed the same links as you. Because I'm quite new to NiFi my opinion might not be relevant, but here are my thoughts on error handling.

Similar to your description, I have built a process group that handles the logic of retrying 3 times. Because the output port has to be tied to the original processor, I don't see any other solution than connecting this process group to every retryable action/processor.

Because NiFi Registry is available on my installation, I built the process group once and import it from the registry wherever it is needed. But if this process group changes one day, there will be a lot of work to do updating the version of this very frequently used process group everywhere.

BTW: In my first concrete case, data loss for flowfiles which failed (with or without retry) is not a concern. Once the administrator has been informed about the details of the problematic data and has solved the problem, current data always has to be requested again from the source systems for the rerun.

I would be glad to get some tips for a better solution. Bye!

Created 11-28-2018 05:55 PM

Hey Justen! You're correct: if you do not care about losing the data, then you can just drop the flowfiles. Another way to structure your error handling would be to create an error output port and do the error handling outside of the process group. However, this only makes sense if you don't want to route the data back to the failing processor.

Another approach, described as "log tailing" (here https://pierrevillard.com/2017/05/11/monitoring-nifi-introduction/), can be used, too. There could be another NiFi flow that analyses the log files and sends out emails when it detects specific log entries. This approach could centralize at least the error reporting, right? However, for error handling without losing data, I currently don't see any way to centralize it. Cheers!
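To illustrate the log tailing idea outside of NiFi, here is a small Python sketch that scans application log lines and yields the ERROR entries. The line layout (timestamp, level, thread in brackets, logger, message) is an assumption about the logging pattern in use and has to be adapted to the actual logback configuration; the sample lines in the usage note are invented.

```python
import re
from typing import Iterable, Iterator, NamedTuple, Tuple

# Assumed layout: "<date> <time>,<ms> <LEVEL> [<thread>] <logger> <message>"
LOG_LINE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<level>[A-Z]+) "
    r"\[(?P<thread>[^\]]*)\] "
    r"(?P<logger>\S+) "
    r"(?P<message>.*)$"
)


class LogEvent(NamedTuple):
    timestamp: str
    level: str
    logger: str
    message: str


def find_errors(
    lines: Iterable[str], levels: Tuple[str, ...] = ("ERROR",)
) -> Iterator[LogEvent]:
    """Yield one LogEvent per line whose level is in `levels`.

    Lines that do not match the assumed layout (e.g. stack traces) are skipped.
    """
    for line in lines:
        match = LOG_LINE.match(line.rstrip("\n"))
        if match and match["level"] in levels:
            yield LogEvent(
                match["timestamp"], match["level"], match["logger"], match["message"]
            )
```

Usage could look like `for event in find_errors(open("nifi-app.log")): ...`, with the email (or any other alert) sent from the loop body. This only centralizes reporting; the failed flowfiles still have to be kept in their own queues.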

Created on 11-29-2018 02:20 PM - edited 08-17-2019 04:28 PM

Hi @Artjom Zab!

So a fundamental difference in error handling is whether the flowfiles have to be processed again after the error has been corrected or not.

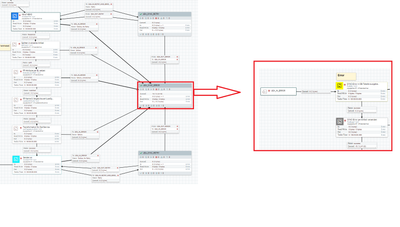

OK, but apart from this aspect, one always has to find the appropriate error information depending on where the error occurs. My aim is still to have a centralized process group for uniform error handling that logs the information to a DB table to point support in the right direction, as the excerpt of my flow shows.

So I have to verify where a flowfile comes from. IMHO there are three different situations:

1. Processors like InvokeHTTP which deliver suitable information about an error as a flowfile attribute

OK, possible to handle.

2. Processors which send the flowfile to the failure queue WITHOUT setting flowfile attributes or creating entries in the bulletin board, e.g. SplitJson, JoltTransformJSON

I don't think it is wise to put an UpdateAttribute processor after every failure queue and hardcode the component information into attributes. If I look at the data provenance, I see componentID and componentName in the details, but I don't see them on the flowfile in the failure queue. Why?

3. Processors which generate an entry in the bulletin board, e.g. InvokeHTTP on time-out or ExecuteSQL on time-out, and send the flowfile to the failure queue

Thanks for the link on configuring reporting tasks. I tested it on my local installation (I have no permission on the customer's DEV system), but at the moment I don't see how to embed it in my flows. I think this would be a parallel monitoring activity and not part of my flows.

If I always knew the componentID, I assume I could handle ALL these cases with the REST API (e.g. http://127.0.0.1:8080/nifi-api/processors/{id} or http://127.0.0.1:8080/nifi-api/flow/bulletin-board), either checking the concrete component or checking the bulletin board for messages about this component.
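As a rough sketch of that idea, the following Python snippet builds a bulletin-board request for one component and pulls the messages out of the JSON response. The `sourceId` and `limit` query parameters and the `bulletinBoard` / `bulletins` / `bulletin` response fields reflect my understanding of the NiFi REST API and should be verified against the REST API documentation for the version in use. It also assumes an unsecured local instance; a secured one needs authentication on top.

```python
import json
from typing import Any, Dict, List
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://127.0.0.1:8080/nifi-api"  # local, unsecured instance as in the post


def bulletin_board_url(base_url: str, source_id: str, limit: int = 10) -> str:
    """Build the bulletin-board URL, filtered by the originating component id."""
    query = urlencode({"sourceId": source_id, "limit": limit})
    return f"{base_url}/flow/bulletin-board?{query}"


def extract_messages(payload: Dict[str, Any]) -> List[str]:
    """Return 'LEVEL: message' strings from an (assumed) bulletin-board response."""
    bulletins = payload.get("bulletinBoard", {}).get("bulletins", [])
    return [
        f'{entry["bulletin"]["level"]}: {entry["bulletin"]["message"]}'
        for entry in bulletins
        if "bulletin" in entry  # skip entries that carry no readable details
    ]


def fetch_messages(source_id: str, base_url: str = BASE_URL) -> List[str]:
    """Query the running instance; needs network access to NiFi."""
    with urlopen(bulletin_board_url(base_url, source_id), timeout=10) as response:
        return extract_messages(json.load(response))
```

Calling `fetch_messages("<componentID>")` from an error-handling flow (or a script next to it) would return the recent bulletins for that component, which could then be written to the DB table. Bulletins are only kept for a short time, so this is a best-effort lookup rather than a reliable audit trail.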

Maybe my idea is absurd - I am sure hundreds of you have solved this problem already. Any kind of help is very welcome!

Using NiFi 1.6.0

Created 01-30-2019 02:45 PM

Hey @Justen! Check out https://pierrevillard.com/2018/08/29/monitoring-driven-development-with-nifi-1-7/ It seems like a great way to decouple the core flow from monitoring/reporting, don't you think?

Created 02-04-2019 09:44 AM

@Artjom Zab

Hi Artjom, thanks for keeping this subject in mind and providing this link to me!

I had a quick look at it and noticed that I will have to catch up on some points mentioned there (e.g. Record Reader/Writer). But I will try it.

Created 01-31-2019 01:44 AM

One thing I tell people is to always put a rate limit (e.g. a ControlRate processor) in front of PutEmail. If you don't, you will eventually send yourself 100000 emails in one second and get your NiFi boxes blacklisted by your SMTP server 🙂. Actually, the best solution in this case might be to feed PutEmail from a MonitorActivity processor.
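The rate-limiting idea, stripped of NiFi specifics, looks roughly like the Python sketch below: allow one alert per time window and only count the rest. This is an illustration of the principle, not how any NiFi processor is implemented; the class name `AlertThrottle` and the 60-second window in the usage note are made up for the example.

```python
import time
from typing import Callable, Optional


class AlertThrottle:
    """Allow at most one alert per `min_interval` seconds and count the rest."""

    def __init__(
        self, min_interval: float, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self.min_interval = min_interval
        self.clock = clock  # injectable so the behaviour can be tested
        self.last_sent: Optional[float] = None
        self.suppressed = 0

    def should_send(self) -> bool:
        """Return True if an alert may go out now, otherwise count it as suppressed."""
        now = self.clock()
        if self.last_sent is None or now - self.last_sent >= self.min_interval:
            self.last_sent = now
            return True
        self.suppressed += 1
        return False
```

With `throttle = AlertThrottle(60.0)`, a burst of failures produces one email per minute, and `throttle.suppressed` can be included in the next message so the admin still sees how many failures occurred.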

This is a tough problem. I am skeptical that there is a practically generalizable solution. Right now I feel that monitoring and alerting need to be flow-specific, but I am interested to see what others are doing.

Created 02-04-2019 09:56 AM

Hi David, you are right about being careful not to send too many emails, and that there will be situations where monitoring/error handling needs to be adjusted individually. But I want a standard process for the majority of recurring errors. You know, not reinventing the wheel every time.

And once my processes have been developed further, no emails will be sent anymore. Everything will just be logged, and support will get some kind of graphical interface (dashboard) - that's the plan.