Unable to insert data in MySQL using ConvertJSONToSQL processor through NiFi

- Labels: Apache NiFi

Created on 07-08-2016 12:24 PM - edited 08-19-2019 03:36 AM

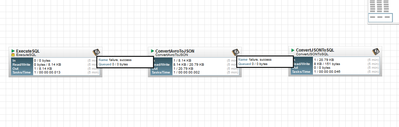

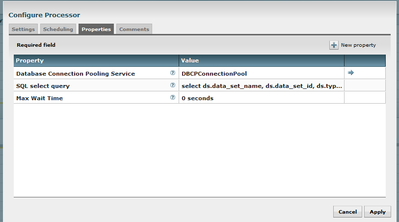

Hi, I want to load data from a Hive table into a MySQL table using HDF (NiFi). I have created the dataflow shown in the screenshots.

After the dataflow runs, NiFi shows that 151 bytes were written to the destination table. However, there is no data in the destination table.

Please let me know if there is a configuration issue.

Created 07-08-2016 04:29 PM

Hi @Prasanta Sahoo,

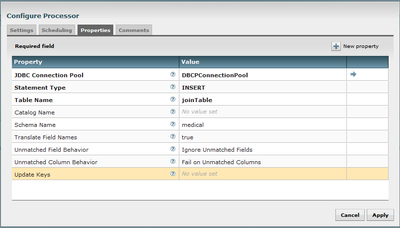

Please add a PutSQL processor (with a DBCPConnectionPool for MySQL) after the ConvertJSONToSQL processor and try again.

Thanks,

Jobin

Created 07-08-2016 05:52 PM

The ConvertJSONToSQL processor[1] merely converts a JSON object into a SQL query that can be executed. After it is converted, you haven't sent the command anywhere yet. You need another processor after that in order to actually send the command to be executed by a system. The PutSQL processor[2] (as suggested by @Jobin George) executes the SQL UPDATE or INSERT command that is in the contents of the incoming FlowFile.
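To make the distinction concrete, here is a minimal Python sketch of the two steps. It is not NiFi code: `json_to_insert` is a hypothetical helper standing in for the conversion step, the `people` table and its columns are made up, and an in-memory SQLite database is used in place of MySQL so the example runs on its own.

```python
import json
import sqlite3


def json_to_insert(table, flat_json):
    """Build a parameterized INSERT from a flat JSON object (illustrative only)."""
    record = json.loads(flat_json)
    columns = list(record)
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    return sql, [record[c] for c in columns]


# In-memory SQLite stands in for the MySQL target (assumption for the sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (id INTEGER, name TEXT)")

# Step 1: conversion only produces a statement and its parameters.
sql, params = json_to_insert("people", '{"id": 1, "name": "alice"}')
rows_after_convert = conn.execute("SELECT COUNT(*) FROM people").fetchone()[0]

# Step 2: executing the statement (the role PutSQL plays) inserts the row.
conn.execute(sql, params)
conn.commit()
rows_after_execute = conn.execute("SELECT COUNT(*) FROM people").fetchone()[0]

print(sql)
print(rows_after_convert, rows_after_execute)
```

The table stays empty after the conversion step and only gains a row once the statement is executed, which matches the symptom in the original question: bytes were produced by the flow, but nothing had been sent to the database.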

Created 07-11-2016 06:33 AM

As suggested by @Jobin George, I added a PutSQL processor, but it inserted only one record. The ExecuteSQL processor (which runs the query: select * from table_name) returns 100 records, and I want to insert all 100. Please let me know if any additional configuration is required for this.

Created on 07-11-2016 07:00 AM - edited 08-19-2019 03:35 AM

Hi @Prasanta Sahoo,

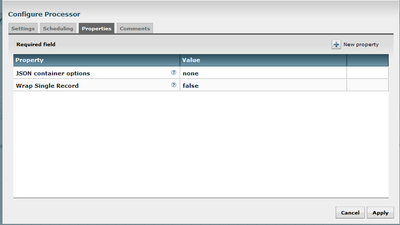

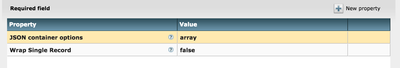

Can you try the "ConvertAvroToJSON" processor with the configuration below?

For "JSON container options", try "array" instead of "none".

That option determines whether the stream of records is written as an array or as a sequence of single objects.

Thanks,

Jobin
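As a rough illustration of what the "JSON container options" setting changes, the Python sketch below builds both output shapes by hand. It assumes, based on the processor documentation as I understand it, that "none" writes each record as its own JSON object on a new line while "array" wraps all records in one JSON array. The sample records and the `parse_as_single_document` helper are hypothetical.

```python
import json

# Two made-up records standing in for rows returned by the SELECT query.
records = [{"id": 1, "name": "alice"}, {"id": 2, "name": "bob"}]

# "none": one JSON object per line, with no enclosing container.
none_output = "\n".join(json.dumps(r) for r in records)

# "array": all records wrapped in a single JSON array.
array_output = json.dumps(records)


def parse_as_single_document(text):
    """Parse text as one JSON document; return None if it is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


parsed_none = parse_as_single_document(none_output)
parsed_array = parse_as_single_document(array_output)

print(parsed_none)        # the "none" shape is not a single valid JSON document
print(len(parsed_array))  # the "array" shape parses to a list of records
```

A consumer that expects one well-formed JSON document can read every record from the array form, whereas the line-per-object form is not a single document. That may be why only one record reached the table in the flow above, though the exact behavior depends on the NiFi version and downstream processors.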