Unable to make connection from Ranger Admin to Yarn work with SSL enabled

Labels: Apache Ranger, Apache YARN

Created 12-09-2017 01:41 PM

Hello everyone.

I have configured a 6-node (2 master + 4 worker nodes) kerberized HDP 2.5.6 cluster with LDAP (Ambari, Ranger) + SSSD (Linux, group mapping) authentication and HTTPS in Ambari, and everything was working OK.

I enabled SSL on every Hadoop/HDP component following Chapter 4 (Data Protection: Wire Encryption), using my own CA (managed with XCA) and generating a certificate for every server node (CN=hostname) plus a common certificate (CN=hadoopclient) for client connections. I had some issues with some Ambari Views mixing Kerberos + SSL, but I was able to fix them using information from this forum and other internet sources.

Then I proceeded to (re)configure Ranger Admin for SSL (working OK) and the Ranger plugins for SSL, first on the Ambari service side in the corresponding ranger-xxxx-policymgr-ssl and ranger-xxxx-plugin properties, by defining the client JKS keystore/password (for the CN=hadoopclient certificate), the truststore/password (with the common trusted CA certificate) and common.name.for.certificate (hadoopclient), following "Ranger Plugins for SSL" in the Security manual.

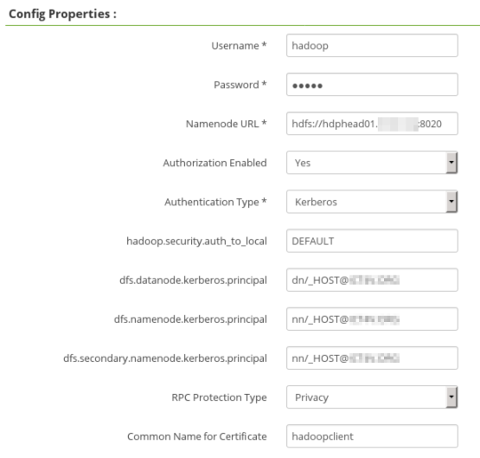

I have also configured each service repository definition (mycluster_hadoop, mycluster_yarn, mycluster_hbase, etc.) by setting "Common Name for Certificate" to the same value, and by setting other values not mentioned in the documentation (which is outdated, confusing and incomplete on these topics) to match the service definitions (RPC Protection Type, Kerberos principals, SSL URLs).

With all this in place, the Ranger plugins for the services I have tested (HDFS, HBase and YARN) are connecting to the SSL-enabled Ranger Admin and updating their policies OK (as shown in the Ranger Audit tab and the Ranger plugin logs). Also, from the Ranger Admin side, in the repo definitions for Hadoop/HDFS and HBase I'm able to test the connection (with SSL + Kerberos) and it works with no errors.

But in the YARN service policy repo definition the test keeps failing and when I try to test the connection I get the following message:

Connection Failed.

Unable to retrieve any files using given parameters, You can still save the repository and start creating policies, but you would not be able to use autocomplete for resource names. Check ranger_admin.log for more info.

Every time I test the connection I get the following errors (edited) in the ranger/xa_portal.log logs:

2017-12-09 03:40:31,882 [timed-executor-pool-0] ERROR apache.ranger.services.yarn.client.YarnClient$1$1 (YarnClient.java:178) - Exception while getting Yarn Queue List. URL : https://hdphead01.xxxx.com:8090/ws/v1/cluster/scheduler
com.sun.jersey.api.client.ClientHandlerException: javax.net.ssl.SSLException: java.lang.RuntimeException: Unexpected error: java.security.InvalidAlgorithmParameterException: the trustAnchors parameter must be non-empty
at com.sun.jersey.client.urlconnection.URLConnectionClientHandler.handle(URLConnectionClientHandler.java:131)
...
Caused by: javax.net.ssl.SSLException: java.lang.RuntimeException: Unexpected error: java.security.InvalidAlgorithmParameterException: the trustAnchors parameter must be non-empty
at sun.security.ssl.Alerts.getSSLException(Alerts.java:208)
...

Searching the web and this site, I have found that the "trustAnchors parameter must be non-empty" error seems to be related to not having a properly defined truststore for the connection. But that doesn't make much sense to me, because the other services are supposed to be using the same truststore (as defined for Ranger Admin) and are working OK.

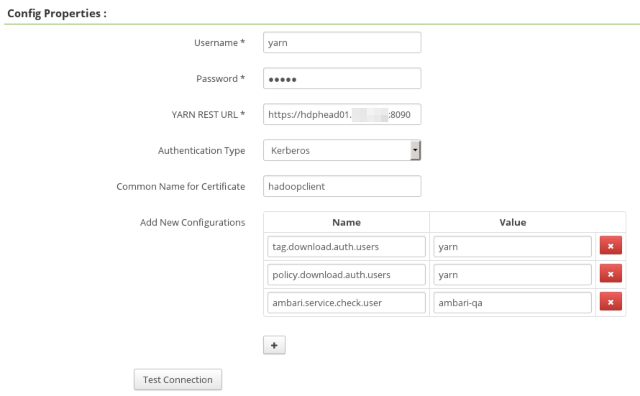

Something else I find strange is that the definition for the YARN policy repo in Ranger lacks many fields compared with the HDFS and HBase definitions (it only has YARN REST URL: https://...:8090, Auth Type: kerberos, and Common Name: hadoopclient). I suppose that is because it inherits the configuration from the Hadoop repo, but I'm not sure if this is the case.

I have searched and tried a lot of different possibilities without luck so far, so I'm now sharing the problem with the HDP community. Has anyone experienced this problem, or does anyone have a clue on how to fix it?

I'm also including the capture for configuration of the Ranger YARN repo (not working) and Ranger HDFS repo (working) as a reference.

Best Regards.

Created 12-09-2017 02:45 PM

I'm also including the capture for configuration of the Ranger YARN repo (not working) and Ranger HDFS repo (working) as a reference.

Created on 12-09-2017 08:59 PM - edited 08-17-2019 07:10 PM

Finally, after writing out the problem to post the question, I was able to find the cause myself, and I will describe it here in case it happens to someone else.

The problem was there are a couple of extra properties not documented here:

https://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.5.6/bk_security/content/configure_ambari_ranger...

used to define the truststore to be used for the Ranger Admin service when connecting to other services.

These are located at the end of the "Advanced ranger-admin-site" section, as shown here, and should be changed to point to your system truststore (which must include the CA certificate used to sign the Hadoop services' certificates).

Ranger -> Advanced ranger-admin-site
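Before pointing Ranger Admin at a truststore, it may help to confirm that the file actually contains trusted certificate entries. The following is only a minimal Python sketch of that check: it assumes the JDK `keytool` command is on the PATH, and the helper names (`keytool_list_command`, `count_trusted_entries`, `check_truststore`) are made up for illustration. It simply runs `keytool -list` and counts the entries reported as `trustedCertEntry`.

```python
import subprocess


def keytool_list_command(truststore_path, password):
    """Build the keytool invocation that lists the entries of a keystore file."""
    return ["keytool", "-list", "-keystore", truststore_path, "-storepass", password]


def count_trusted_entries(keytool_output):
    """Count the entries that keytool reports as trusted certificates."""
    return sum(
        1 for line in keytool_output.splitlines() if "trustedCertEntry" in line
    )


def check_truststore(truststore_path, password):
    """Run keytool (assumed to be on the PATH) and return the number of
    trusted certificate entries found in the given truststore."""
    result = subprocess.run(
        keytool_list_command(truststore_path, password),
        capture_output=True,
        text=True,
        check=True,  # raises CalledProcessError if keytool cannot read the file
    )
    return count_trusted_entries(result.stdout)
```

For example, `check_truststore("/etc/security/serverKeys/truststore.jks", "<password>")` could be run on the Ranger Admin host. A count of zero, or a keytool failure because the path does not exist, would be consistent with the "trustAnchors parameter must be non-empty" error, although I have not verified every way that error can be triggered.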

So, in order to make HTTPS and SSL work for Ranger Admin and the Ranger plugins in both directions, you have to set all of the following fields correctly, pointing each one to the proper keystore (including the private key) or truststore (including the signing CA, or the certificate of the service you are going to connect to):

- In Ranger -> Advanced ranger-admin-site

ranger.https.attrib.keystore.file = /etc/security/serverKeys/keystore.jks
ranger.service.https.attrib.keystore.pass = ******
... other ranger.service.https.* related properties
// Not documented in Security manual
ranger.truststore.file = /etc/security/serverKeys/truststore.jks
ranger.truststore.password = *******

- In Ranger -> Advanced ranger-admin-site (this seems to be the same property as the one above, so I suspect they come from different software versions and only one is necessary, but both are mentioned in the documentation, so who knows?)

ranger.service.https.attrib.keystore.file = /etc/security/serverKeys/keystore.jks

- In Service (HDFS/YARN) -> Advanced ranger-hdfs-policymgr-ssl (also set the properties in Advanced ranger-hdfs-plugin-properties to match the certificate common name)

// Keystore with the client certificate cn=hadoopclient,...

xasecure.policymgr.clientssl.keystore = /etc/security/clientKeys/hadoopclient.jks
...
xasecure.policymgr.clientssl.truststore = /etc/security/clientKeys/truststore.jks
...
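As a quick sanity check of the settings listed above, here is a small Python sketch that scans a plain `key = value` dump of the configuration and reports which of the properties named in this post are missing or empty. The dump format is an assumption (Ambari does not necessarily export configs this way), and the function and constant names are hypothetical. The property names themselves are the ones mentioned in this thread.

```python
# Property names taken from this post; the grouping is only for illustration.
RANGER_ADMIN_REQUIRED = [
    "ranger.https.attrib.keystore.file",
    "ranger.service.https.attrib.keystore.file",
    "ranger.service.https.attrib.keystore.pass",
    "ranger.truststore.file",
    "ranger.truststore.password",
]
PLUGIN_REQUIRED = [
    "xasecure.policymgr.clientssl.keystore",
    "xasecure.policymgr.clientssl.truststore",
]


def parse_properties(text):
    """Parse 'key = value' lines into a dict, skipping blanks and comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "//")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def missing_properties(props, required):
    """Return the required property names that are absent or set to ''."""
    return [name for name in required if not props.get(name)]
```

Calling `missing_properties(parse_properties(dump_text), RANGER_ADMIN_REQUIRED)` on a dump that lacks `ranger.truststore.file` would list that name, which is the situation that caused the failed YARN connection test in my case.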