Unable to run multiple pyspark sessions

Labels: Apache Spark

Created on 04-07-2018 01:43 PM - edited 09-16-2022 06:04 AM

I am new to Cloudera. I have installed Cloudera Express on a CentOS 7 VM and created a cluster with 4 nodes (another 4 VMs). I ssh to the master node and run: pyspark

This works but only for one session. If I open another console and run pyspark I will get the following error:

WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

It gets stuck there and does nothing until I close the other session running pyspark! Any idea why this is happening and how I can fix it so that multiple sessions/users can run pyspark? Am I missing some configuration somewhere?

Thanks in advance for your help.

Created 04-15-2018 11:17 PM

@hedy thanks for sharing.

The workaround you received makes sense if you are not using a cluster manager (is that the case?).

Local mode ( --master local[N], where N is the number of worker threads ) is generally used when you want to test or debug something quickly: only one JVM is launched, on the node where you run pyspark, and that JVM acts as driver, executor, and master all in one. The trade-off is that with local mode you lose the scalability and resource management that a cluster manager provides. If you want to debug why simultaneous Spark shells are not working with Spark on YARN, we need to diagnose this from the YARN perspective (troubleshooting steps shared in the last post). Let us know.
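To make the difference concrete, here is a minimal sketch of the two ways of launching the shell. The helper `pyspark_command` is hypothetical (it only assembles a command line, it is not part of Spark), and the resource values (2 executors, 1g, 1 core) are illustrative assumptions. The flags themselves (`--master`, `--num-executors`, `--executor-memory`, `--executor-cores`, `--conf spark.ui.port=...`) are standard spark-submit options that pyspark accepts. Whether capping resources like this is enough for a second shell to start depends on your YARN queue and pool capacity, which we have not seen yet.

```python
import shlex


def pyspark_command(master, ui_port=None, executors=None,
                    executor_memory=None, executor_cores=None):
    """Assemble an argv list for the pyspark shell (hypothetical helper)."""
    argv = ["pyspark", "--master", master]
    if ui_port is not None:
        # Pin the Spark UI port instead of letting Spark probe 4040, 4041, ...
        argv += ["--conf", f"spark.ui.port={ui_port}"]
    if master == "yarn":
        # Explicit caps so one shell does not claim everything YARN offers.
        if executors is not None:
            argv += ["--num-executors", str(executors)]
        if executor_memory is not None:
            argv += ["--executor-memory", executor_memory]
        if executor_cores is not None:
            argv += ["--executor-cores", str(executor_cores)]
    return argv


# Local mode: a single JVM with 2 worker threads, no cluster manager involved.
local_cmd = pyspark_command("local[2]")

# Spark on YARN with small, explicit resource requests (illustrative values).
yarn_cmd = pyspark_command("yarn", executors=2,
                           executor_memory="1g", executor_cores=1)

print(shlex.join(local_cmd))
print(shlex.join(yarn_cmd))
```

Each printed line can be pasted into a separate console on the master node. If the second YARN shell still stays in the ACCEPTED state, that points to the queue not having room for another application master, which is what the YARN troubleshooting steps are meant to confirm.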

Created 03-08-2019 05:10 AM

I am facing the same issue. Can anyone please suggest how to resolve it? When I run two Spark applications, one stays in the ACCEPTED state while the other is running.

What configuration is needed for this to work?

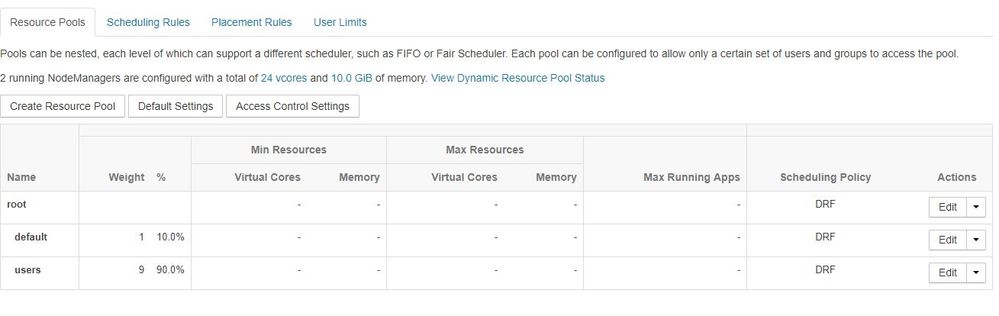

The following is the dynamic resource pool configuration:

Please help!
