Unable to start Metrics Monitor on Name Node : Heart Beat Lost

- Labels: Apache Ambari

Created 08-04-2017 10:58 AM

The issue started with an Alert on Hive Metastore Service:

Metastore on dh01.int.belong.com.au failed (Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/alerts/alert_hive_metastore.py", line 183, in execute

timeout=int(check_command_timeout) )

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 154, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 158, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 121, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 238, in action_run

tries=self.resource.tries, try_sleep=self.resource.try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 70, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 92, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 140, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 291, in _call

raise Fail(err_msg)

Fail: Execution of 'export HIVE_CONF_DIR='/usr/hdp/current/hive-metastore/conf/conf.server' ; hive --hiveconf hive.metastore.uris=thrift://dh01.int.belong.com.au:9083 --hiveconf hive.metastore.client.connect.retry.delay=1 --hiveconf hive.metastore.failure.retries=1 --hiveconf hive.metastore.connect.retries=1 --hiveconf hive.metastore.client.socket.timeout=14 --hiveconf hive.execution.engine=mr -e 'show databases;'' returned 5. Java HotSpot(TM) 64-Bit Server VM warning: INFO: os::commit_memory(0x00000002c0000000, 977797120, 0) failed; error='Cannot allocate memory' (errno=12)

Unable to determine Hadoop version information.

'hadoop version' returned:

Java HotSpot(TM) 64-Bit Server VM warning: INFO: os::commit_memory(0x00000002c0000000, 977797120, 0) failed; error='Cannot allocate memory' (errno=12)

    #
    # There is insufficient memory for the Java Runtime Environment to continue.
    # Native memory allocation (mmap) failed to map 977797120 bytes for committing reserved memory.
    # An error report file with more information is saved as:
    # /home/ambari-qa/hs_err_pid4858.log

)
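To put the failed allocation in perspective, here is a minimal sketch that pulls the requested size out of the HotSpot warning quoted above and converts it to MiB. The regular expression and the function name `parse_commit_failure` are illustrative assumptions that match only the message format shown in this alert.

```python
import re

# Matches the HotSpot warning quoted in the alert above (assumed format).
COMMIT_FAILURE = re.compile(
    r"os::commit_memory\((0x[0-9a-fA-F]+), (\d+), \d+\) failed; "
    r"error='([^']*)' \(errno=(\d+)\)"
)


def parse_commit_failure(line):
    """Return (requested_bytes, error_text, errno) or None if the line does not match."""
    match = COMMIT_FAILURE.search(line)
    if match is None:
        return None
    return int(match.group(2)), match.group(3), int(match.group(4))


warning = (
    "Java HotSpot(TM) 64-Bit Server VM warning: INFO: "
    "os::commit_memory(0x00000002c0000000, 977797120, 0) failed; "
    "error='Cannot allocate memory' (errno=12)"
)

requested, error_text, errno_value = parse_commit_failure(warning)
requested_mib = requested / (1024.0 * 1024.0)
print("JVM asked for {:.1f} MiB; kernel said: {} (errno {})".format(
    requested_mib, error_text, errno_value))
```

The JVM was asking for roughly 932.5 MiB in one allocation, so the failure says the host could not provide that much memory at that moment rather than pointing at Hive itself.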

I tried launching Hive from the command prompt (sudo hive); it errored out with a Java Runtime Environment exception.

Then I looked at memory utilization, which showed that swap had run out.

    ]$ free -m
                 total       used       free     shared    buffers     cached
    Mem:         64560      63952        607          0         77        565
    -/+ buffers/cache:      63309       1251
    Swap:         1023       1023          0
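The same check on the free -m figures can be scripted. The sketch below parses the older procps layout shown above, the one with a "-/+ buffers/cache" row, and reports whether swap is exhausted. The function name `parse_free_m` is hypothetical, and newer versions of free print different columns, so treat this as an assumption-laden example rather than a general parser.

```python
def parse_free_m(text):
    """Parse `free -m` output into {row label: [integer columns]}."""
    rows = {}
    for line in text.splitlines():
        label, sep, rest = line.partition(":")
        if not sep:
            continue  # header and prompt lines contain no colon
        rows[label.strip()] = [int(value) for value in rest.split()]
    return rows


# Output copied from the post above.
SAMPLE = """\
             total       used       free     shared    buffers     cached
Mem:         64560      63952        607          0         77        565
-/+ buffers/cache:      63309       1251
Swap:         1023       1023          0
"""

rows = parse_free_m(SAMPLE)
swap_total, swap_used, swap_free = rows["Swap"]
# Second value of the "-/+ buffers/cache" row: memory free once caches are discounted.
free_for_apps_mb = rows["-/+ buffers/cache"][1]

print("swap used: {} of {} MB".format(swap_used, swap_total))
print("free for applications: {} MB".format(free_for_apps_mb))
if swap_free == 0:
    print("swap is exhausted")
```

With only about 1251 MB free for applications and no swap left, any sizeable new JVM allocation would be expected to fail on this host.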

I tried to restart the Hive Metastore service from Ambari, but the operation hung for over 30 minutes without printing anything to the stdout and stderr logs. At this point I involved the server administrator in the investigation, and it turned out that the following process had reserved up to 40 GB. This seemed strange (I am not sure what the normal memory utilization pattern is for the Ambari Agent/Monitor):

root 3424 3404 14 2016 ? 52-22:05:00 /usr/bin/python2 /usr/lib/python2.6/site-packages/ambari_agent/main.py start

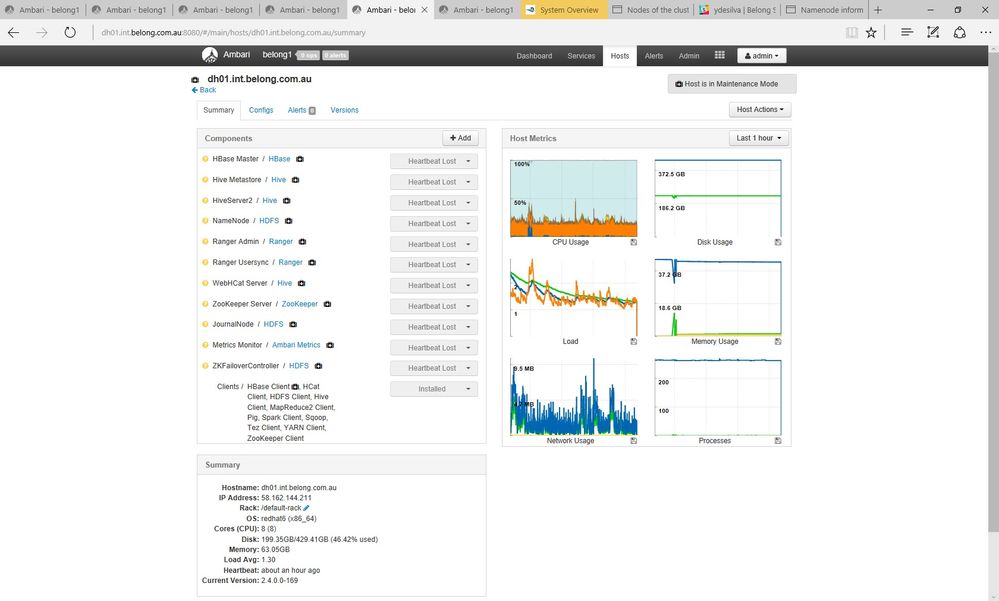

At this point I tried to restart the Ambari Metrics service on the name node from Ambari; the operation timed out and then the heartbeat from the node stopped, as can be seen in the image above.

I was not able to restart the Ambari Metrics service on the name node from the Ambari console, as the option was disabled. I tried to do a rolling restart of all Ambari Monitor services, but the Monitor service on the name node did not start.

At this point we decided to do two things: add more swap space (the admin added 1 GB more), and then I stopped and started the Ambari services as follows:

    # The stop operation did not succeed at the first attempt, so I had to kill the PID
    sudo su - ams -c '/usr/sbin/ambari-metrics-monitor --config /etc/ambari-metrics-monitor/conf stop'
    sudo su - ams -c '/usr/sbin/ambari-metrics-monitor --config /etc/ambari-metrics-monitor/conf start'
    # I looked at the agent status
    sudo ambari-agent status
    # The agent was not running, so I started it
    sudo ambari-agent start

After the agent started, the monitor on this node came up and was reflected in Ambari. The only issue I have now is that NameNode CPU WIO shows N/A on the Ambari dashboard. It would be helpful to know how to get this back.

Also, I intend to review the HiveServer2 and Metastore heap sizes, which currently stand at the values below. Could these settings cause swap to run out like this? This has not happened before.

    HiveServer2 Heap Size = 20480 MB
    Metastore Heap Size = 12288 MB

Environment Information:

- Hadoop 2.7.1.2.4.0.0-169
- hive-meta-store - 2.4.0.0-169
- hive-server2 - 2.4.0.0-169
- hive-webhcat - 2.4.0.0-169
- Ambari 2.2.1.0
- RAM: 64 GB
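As a rough sanity check on whether the heap settings could contribute, the figures quoted in this post can be added up. This is a worst-case sketch with stated assumptions: the heap sizes are ceilings rather than actual resident memory, the agent figure is the approximate "up to 40 GB" reported by the administrator, and every other process on the node is ignored.

```python
# Figures taken from the post; all values in MB.
ram_mb = 64560               # "Mem: total" from free -m
swap_mb = 1023               # swap total at the time of the failure
hiveserver2_heap_mb = 20480  # configured maximum heap, not actual usage
metastore_heap_mb = 12288    # configured maximum heap, not actual usage
agent_mb = 40 * 1024         # approximate: "up to 40 GB" for the Ambari agent

hive_heap_mb = hiveserver2_heap_mb + metastore_heap_mb
worst_case_mb = hive_heap_mb + agent_mb
capacity_mb = ram_mb + swap_mb
overcommit_mb = worst_case_mb - capacity_mb

print("Hive heap ceilings: {} MB".format(hive_heap_mb))
print("Hive + agent worst case: {} MB".format(worst_case_mb))
print("RAM + swap: {} MB".format(capacity_mb))
print("worst case exceeds capacity by {} MB".format(overcommit_mb))
```

On these numbers the two Hive heaps alone fit within half of the RAM, and it is only with the agent's roughly 40 GB added that the total exceeds RAM plus swap.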

Helpful links:

https://community.hortonworks.com/questions/15862/how-can-i-start-my-ambari-heartbeat.html

https://cwiki.apache.org/confluence/display/AMBARI/Metrics

Created 08-04-2017 11:06 AM

Which process was consuming the most memory on your operating system when you noticed that the new process died with the following error?

# Native memory allocation (mmap) failed to map 977797120 bytes for committing reserved memory.

What version of Ambari are you using?

Did you find that the Ambari agent process was consuming a lot of memory? Some older versions of the Ambari agent had a memory leak, as described in https://issues.apache.org/jira/browse/AMBARI-17065. This causes the Ambari agent to consume many GB of memory over a period of 2-3 days and to keep growing.
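One way to confirm growth of this kind is to sample the agent's resident memory periodically. The sketch below reads the VmRSS field from /proc/<pid>/status on Linux. The helper names `parse_vm_rss_kb` and `read_rss_kb` are hypothetical, and the sample status text is made up purely to show the parsing.

```python
import re


def parse_vm_rss_kb(status_text):
    """Return the VmRSS value in kB from /proc/<pid>/status text, or None if absent."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def read_rss_kb(pid):
    """Read the resident set size of a live process (Linux only)."""
    with open("/proc/{}/status".format(pid)) as handle:
        return parse_vm_rss_kb(handle.read())


# Hypothetical status excerpt, for illustration only (not taken from the affected host).
sample_status = "Name:\tpython2\nVmPeak:\t 2048000 kB\nVmRSS:\t 1024000 kB\nThreads:\t4\n"
sample_rss_kb = parse_vm_rss_kb(sample_status)
print("sample RSS: {:.1f} MiB".format(sample_rss_kb / 1024.0))
```

Calling read_rss_kb with the agent's PID from cron or a loop and logging the result over a few days would show whether the process keeps growing as the Jira describes.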

Created 08-04-2017 12:38 PM

@Jay SenSharma

Thanks for getting back on this. The Ambari agent details are below:

    ]$ ambari-agent --version
    2.2.1.0
    ]$ rpm -qa|grep ambari-agent
    ambari-agent-2.2.1.0-161.x86_64

It does seem that the issue described in the Jira is relevant to what occurred. So far this has happened only once, but migrating to a newer version looks like a good option to avoid it in future.

Also, I had mentioned that NameNode CPU WIO was N/A; after a few hours I am able to see the metric on the dashboard.