Unable to upload file into HDFS

Labels: Apache Ambari, Apache Hadoop, HDFS

Created 07-20-2020 04:02 PM

Hello,

I'm trying to upload a 73 GB TSV file into HDFS through Ambari's Files View. The progress bar gets stuck at 3%-4% and doesn't proceed further. I had to cancel the upload after waiting an hour for the progress bar to move. What can I do?

Created 07-20-2020 05:26 PM

An alternative way to upload a local file to HDFS is the command-line tool distcp. The basic command is as follows:

hadoop distcp file:///<path to local file> /<path on hdfs>

This will generate and submit a MapReduce job to upload your data to HDFS piece by piece.
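
As a rough sketch of the command above, the helper below builds the DistCp command line for a local source and prints it for review rather than running it. The function name `distcp_upload_cmd` and the example paths are illustrative assumptions, not part of DistCp. Keep in mind that DistCp's map tasks run on cluster nodes, so on a multi-node cluster a file:// source generally has to be readable at the same path on the nodes that run the tasks; on a single-node sandbox this is not an issue.

```shell
#!/usr/bin/env bash
# distcp_upload_cmd LOCAL_PATH HDFS_PATH
# Prints a DistCp command that copies LOCAL_PATH into HDFS_PATH.
# Both arguments are placeholders supplied by the caller.
distcp_upload_cmd() {
  local src="$1" dst="$2"
  # A file:// URI needs an absolute path, so reject relative ones.
  case "$src" in
    /*) ;;
    *) echo "source must be an absolute path: $src" >&2; return 1 ;;
  esac
  # "file://" + "/abs/path" yields the file:///abs/path form used above.
  printf 'hadoop distcp file://%s %s\n' "$src" "$dst"
}

# Hypothetical usage (prints the command; run it yourself once it looks right):
#   distcp_upload_cmd /home/me/big.tsv /user/me/
```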

Created 07-21-2020 04:56 AM

My guess is that you are running out of memory. How much memory do you have? Note that copying local files to HDFS with the put or copyFromLocal commands does not launch a MapReduce job; the Hadoop client libraries stream the data directly into HDFS. The Ambari view upload, however, runs inside a JVM, so a file of this size may well exhaust its memory.

Another alternative is DistCp (distributed copy), a tool used for large inter/intra-cluster copying. It uses MapReduce to effect its distribution, error handling and recovery, and reporting. It expands a list of files and directories into input to map tasks, each of which will copy a partition of the files specified in the source list.

DistCp can run out of memory on big datasets. If the number of individual files/directories being copied from the source path(s) is extremely large, DistCp might run out of memory while determining the list of paths to copy. This is not unique to the new DistCp implementation. To get around this, consider raising the -Xmx JVM heap-size parameter, as follows:

$ export HADOOP_CLIENT_OPTS="-Xms64m -Xmx1024m"

$ hadoop distcp /source /target

Hope that helps.

Created 07-21-2020 07:32 AM

Initially, I was unable to load data into my guest OS, so I used Ambari's GUI to load data into HDFS directly from my host OS. Now that I have figured out WinSCP, I have been able to copy my file from the host OS to the guest OS, and I have used the put command to copy files into HDFS. However, I have run out of disk space on my virtual machine. I have increased my VDI size but am still figuring out how to make HDFS use the newly allocated storage. Once I figure that out, I think loading the data won't be a problem anymore. I did read the distcp documentation yesterday. Thank you for giving a summary here anyway. I may not need to use distcp. Let me see how it goes.

Created 07-22-2020 08:56 PM

Increasing the VDI size doesn't mean the partitions/filesystems inside the guest VM will grow.

You will need to resize the partitions/PVs/VGs/LVs too, and possibly move some partitions.

(Example disk layout: | / FS | swap | free space |. Here you need to swapoff and delete the swap, resize the / FS, then recreate the swap and swapon, etc.)

Use gparted or CLI tools (pvresize, lvresize for the LV, resize2fs for the FS) to grow the filesystems.

It is important that you understand how to partition disks in Linux. You may lose data if you're not sure what you are doing.

Example of what you may need to do if using LVM:

sudo pvresize /dev/sdb   # if your VDI is seen as a second disk inside your VM (here it's used as an LVM PV)

sudo lvresize -l +100%FREE /dev/mapper/datavg-data   # resize the LV to the maximum available

sudo e2fsck -f /dev/mapper/datavg-data   # check the filesystem first (run this with the filesystem unmounted)

sudo resize2fs /dev/mapper/datavg-data   # resize the FS inside the LV to the maximum available
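
After resizing, it may be worth confirming that the grown filesystem actually has room for the file before retrying the upload. The sketch below compares a file's size with the free space reported by df. The function name `has_room` and the directory in the usage comment are hypothetical (the DataNode data directory on your cluster is whatever dfs.datanode.data.dir points to). HDFS stores one copy per replica, so the optional third argument multiplies the requirement by the replication factor.

```shell
#!/usr/bin/env bash
# has_room FILE DIR [REPLICATION]
# Succeeds if the filesystem holding DIR has at least
# size(FILE) * REPLICATION free (REPLICATION defaults to 1).
has_room() {
  local file="$1" dir="$2" repl="${3:-1}"
  local size_bytes need_kb avail_kb
  size_bytes=$(wc -c < "$file") || return 2
  # Round up to whole KiB, then scale by the replication factor.
  need_kb=$(( (size_bytes + 1023) / 1024 * repl ))
  # df -Pk prints one POSIX-format data line; column 4 is the available KiB.
  avail_kb=$(df -Pk "$dir" | awk 'NR==2 {print $4}')
  [ "$avail_kb" -ge "$need_kb" ]
}

# Hypothetical usage:
#   has_room /home/me/big.tsv /hadoop/hdfs/data 1 && echo "enough space"
```

This only looks at the local filesystem. What HDFS itself reports (configured capacity, DFS remaining) can be checked with hdfs dfsadmin -report.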

Created 07-22-2020 09:15 PM

"Increasing VDI size doesn't mean partitions/filesystems inside Guest VM will be increased": I figured this out. Anyway, I have been able to successfully increase the capacity of HDFS. I'm now facing problems loading a 73 GB file into HDFS. See my most recent post (before this one) for more details.

Created 07-22-2020 09:24 PM

The Ambari Files View (the same problem applies to the Hue File Browser) is not a good tool if you want to upload very big files.

It runs in a JVM, and uploading big files will use more memory (you will hit the maximum available memory very quickly and cause performance issues for other users while you are uploading).

BTW, it's possible to add other Ambari Server views to improve performance (they may be dedicated to particular teams/projects).

For very big files, prefer CLI tools: scp to an edge node with a large filesystem, then hdfs dfs -put; or distcp; or an object store accessible from your Hadoop cluster with good network bandwidth.
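
As a variant of the scp + hdfs dfs -put route, the file can be streamed over ssh straight into HDFS, which avoids staging a second copy on the edge node's disk. This relies on hdfs dfs -put reading from standard input when the source is given as `-`. The function name `stream_to_hdfs`, the host name, and the paths below are hypothetical; the SSH variable is only there so the ssh command can be swapped out.

```shell
#!/usr/bin/env bash
# stream_to_hdfs FILE EDGE_HOST HDFS_PATH
# Sends FILE over ssh and writes it to HDFS_PATH from the edge node.
stream_to_hdfs() {
  local file="$1" edge="$2" dst="$3"
  # The remote command reads the file contents from stdin ("-").
  # HDFS_PATH is single-quoted for the remote shell, so it must not
  # itself contain single quotes.
  "${SSH:-ssh}" "$edge" "hdfs dfs -put - '$dst'" < "$file"
}

# Hypothetical usage:
#   stream_to_hdfs /home/me/big.tsv edge01 /user/me/big.tsv
```

A plain scp followed by hdfs dfs -put is easier to resume if the connection drops, so streaming is mainly useful when the edge node is short on disk.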

Created on 07-22-2020 08:01 PM - edited 07-22-2020 08:02 PM

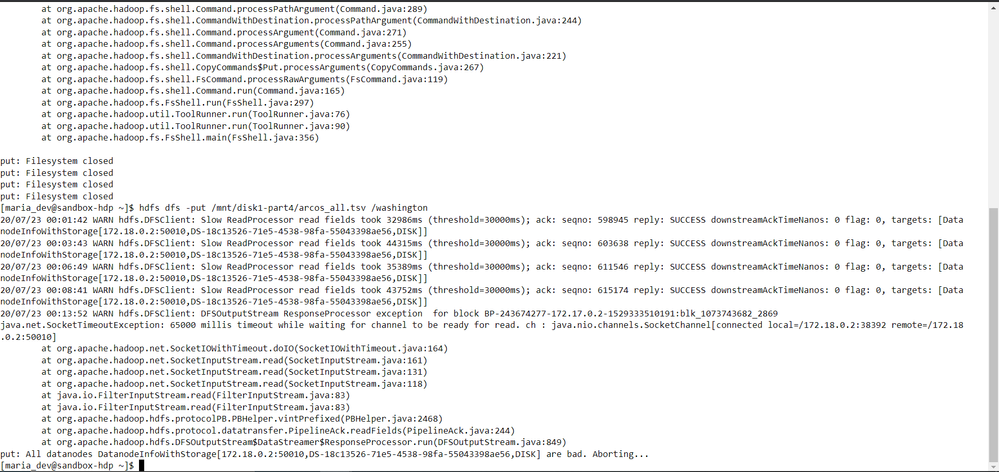

@aakulov @Shelton Using hdfs dfs -put has given me the error shown in the screenshot. Could it be because my virtual machine (and the Hadoop cluster running on top of it) and the VDI file all reside on an external hard drive? It is not an SSD, and its read/write speeds aren't very fast. I believe I could get better performance if everything ran off my machine's own internal drive (which is an SSD).

Would using distcp help in this scenario?