Unusual data placement on file rollover in Nifi - HDFS

Labels: Apache Hadoop, Apache Kafka, Apache NiFi

Created on 05-03-2017 04:56 PM - edited 08-17-2019 07:37 PM


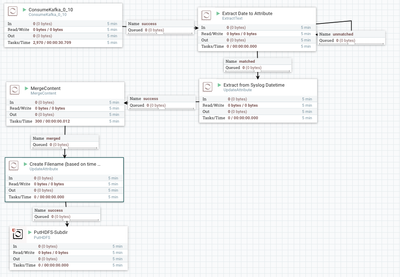

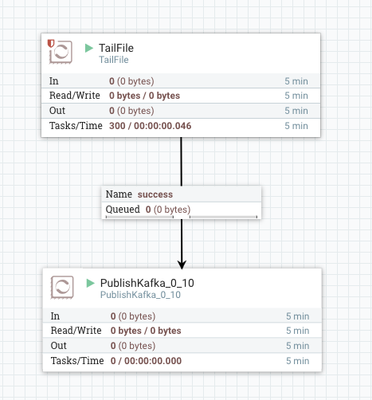

So here is our setup.

Server 1: TailFile -> PublishKafka

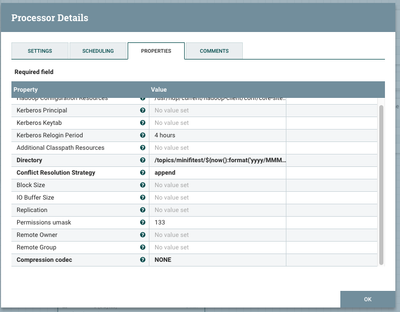

Server 2: ConsumeKafka -> ExtractText -> UpdateAttribute -> MergeContent -> UpdateAttribute (create filename) -> PutHDFS

We currently parse the timestamp out of each message with the ExtractText processor and save its parts as attributes, which we then use to build the filename and the HDFS directories in this format:

Examples: May_03_16_39 (May 3rd at 16:39)

May_03_16_40 (May 3rd at 16:40)

May_03_16_41 (May 3rd at 16:41)

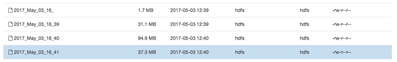

Our directory structure goes down to the minute: 2017/May/03/16/39

What we see is that during the file rollover, a few seconds of data from the end of one file and a few seconds from the beginning of the next file are written to a file named May_03_16_ (that is, a filename with the minute missing).

Please see the screenshots of the file structure output, the PutHDFS config, and the UpdateAttribute (create filename) config. If anything else would help, let me know.

We are using the append function with PutHDFS to put all files of the same minute into a specific file.
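The intended per-minute grouping can be sketched outside NiFi. This is only an illustration in Python, not the flow itself: the line layout (`YYYY-MM-DD HH:MM:SS,mmm` followed by the message) is assumed from the date format example given later in this thread, and `minute_key` and `bucket_by_minute` are hypothetical helper names.

```python
from collections import defaultdict
from datetime import datetime


def minute_key(line: str) -> str:
    """Return a bucket name such as 'May_03_16_39' from the line's leading timestamp."""
    # Assumed layout: the first 23 characters are 'YYYY-MM-DD HH:MM:SS,mmm'.
    stamp = datetime.strptime(line[:23], "%Y-%m-%d %H:%M:%S,%f")
    return stamp.strftime("%b_%d_%H_%M")


def bucket_by_minute(lines):
    """Append every line to the bucket of the minute found in its own timestamp."""
    buckets = defaultdict(list)
    for line in lines:
        buckets[minute_key(line)].append(line)
    return dict(buckets)


sample = [
    "2017-05-03 16:39:58,120 first",
    "2017-05-03 16:39:59,990 second",
    "2017-05-03 16:40:00,004 third",
]
buckets = bucket_by_minute(sample)
for name, members in buckets.items():
    print(name, len(members))
```

With this grouping, every line lands in exactly one minute bucket, so a file without a minute in its name should never appear if each message carries all of its timestamp attributes.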


Created 05-03-2017 05:07 PM


It looks like you are using $now rather than the syslog datetime for the rollover. Is there a reason for that? I would expect you to use the source (syslog) timestamp for the rollover, so that each HDFS file contains only matching timestamps.

Created 05-03-2017 07:42 PM


Thanks for the idea. I corrected that but am still seeing the same behavior.

Created 05-03-2017 07:47 PM


Can you post the full date format used?

Created on 05-04-2017 06:24 PM - edited 08-17-2019 07:37 PM


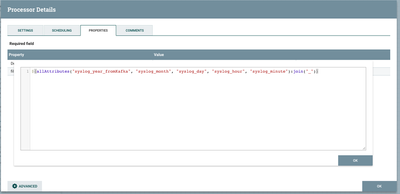

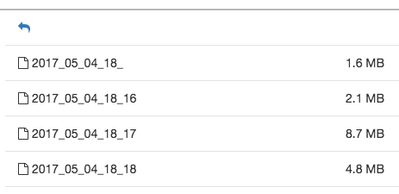

Are you referring to the string used to separate the date in PutHDFS?

/topics/minifitest/${allAttributes("syslog_year", "syslog_month", "syslog_day", "syslog_hour"):join("/")}

Here is our date format example: 2017-05-04 17:15:14,655

We split it up: 2017 into syslog_year, 05 into syslog_month, 04 into syslog_day, 17 into syslog_hour, 15 into syslog_minute, and so on.

Ultimately we use this string to generate the filename:

${allAttributes("syslog_year", "syslog_month", "syslog_day", "syslog_hour", "syslog_minute"):join("_")}

Everything parses into directories correctly, but over a three-minute run the data ends up in three correctly named folders (see screenshot), with chunks missing from the per-minute files and written instead to a file whose name lacks the minute.
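To make the naming scheme concrete, here is a minimal Python sketch of the same split-and-join logic. It is an analogy, not NiFi Expression Language: the regular expression and the helpers `extract_attributes` and `join_attributes` are hypothetical, and treating a missing attribute as an empty string is an assumption of this sketch rather than verified NiFi behavior.

```python
import re

# Hypothetical pattern mirroring the attribute names used in the flow.
TIMESTAMP = re.compile(
    r"(?P<syslog_year>\d{4})-(?P<syslog_month>\d{2})-(?P<syslog_day>\d{2}) "
    r"(?P<syslog_hour>\d{2}):(?P<syslog_minute>\d{2})"
)

DIR_PARTS = ["syslog_year", "syslog_month", "syslog_day", "syslog_hour"]
FILE_PARTS = DIR_PARTS + ["syslog_minute"]


def extract_attributes(line):
    """Return the timestamp parts as a dict, or an empty dict if nothing matches."""
    match = TIMESTAMP.search(line)
    return match.groupdict() if match else {}


def join_attributes(attrs, names, sep):
    """Join the named attributes; a missing one becomes '' (assumption of this sketch)."""
    return sep.join(attrs.get(name, "") for name in names)


attrs = extract_attributes("2017-05-04 17:15:14,655 some message")
directory = "/topics/minifitest/" + join_attributes(attrs, DIR_PARTS, "/")
filename = join_attributes(attrs, FILE_PARTS, "_")

# Same message, but with the minute attribute absent.
partial = {k: v for k, v in attrs.items() if k != "syslog_minute"}
truncated = join_attributes(partial, FILE_PARTS, "_")

print(directory)  # /topics/minifitest/2017/05/04/17
print(filename)   # 2017_05_04_17_15
print(truncated)  # 2017_05_04_17_
```

In this sketch, a name ending in a trailing underscore appears only when the minute attribute is absent at the moment the filename is built. Whether that is what happens inside the flow is a hypothesis, but it matches the shape of the stray filename.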

Created 05-04-2017 06:46 PM


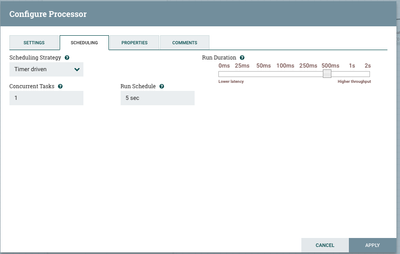

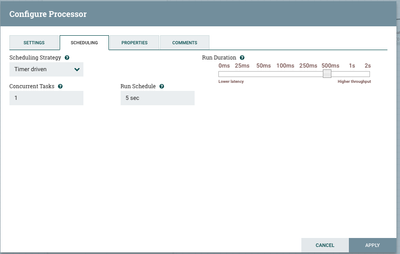

I suspect that there is a connection between the number of messages being sent and the Run Duration in our ExtractText processor (see screenshot).

This is why:

At 10,000 messages per second sent to the Kafka topic (1,000,000 in total), we always see the displaced data in the file whose name lacks the minute, regardless of whether the Run Duration is 500 ms, 1 s, or 2 s. (We had also raised the Run Duration from its lowest value because that setting was causing intermittent data loss.)

At 1,000 messages per second (100,000 in total), if we set the Run Duration to 1 s, the files are perfect, exactly the way we want them.

Our ultimate use case is to send considerably more than 10,000 messages per second, so maybe this will help shed some light.


Created 05-04-2017 07:07 PM


Changing the Concurrent Tasks in ExtractText to 3 and reducing the Run Duration to 500 ms fixed the problem.