Upgrading HDP 3.1.0 to 3.1.4 : remove old version

I have a question about upgrading HDP 3.1.0.0-78 to 3.1.4.0-315 on Ubuntu 18, and about removing the old version once the upgrade is finished.

I can see only the new version in Ambari under Stack and Versions / Versions, but I would like to know whether I have to remove the binaries of the old version from the servers or do something else.

I read the post https://community.cloudera.com/t5/Support-Questions/How-to-remove-an-old-HDP-version/td-p/116161, which says that there is an API call that removes the old HDP version.

Thanks in advance

Created 07-28-2020 02:47 PM

Created 07-29-2020 06:01 AM

Created 07-29-2020 06:45 AM

@Stephbat

Did you follow steps 1 through 3 of the workaround? I see you have a similar error in

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py"

1) Go to each ambari-agent node and edit the file remove_previous_stacks.py:

# vi /var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py

2) Go to line 77 and change the line from

all_installed_packages = self.pkg_provider.all_installed_packages()

to

all_installed_packages = self.pkg_provider.installed_packages()

3) Retry the operation via curl, substituting your own values for Ambari_host and <cluster_name>:

curl 'http://Ambari_host:8080/api/v1/clusters/<cluster_name>/requests' -u admin:admin -H "X-Requested-By: ambari" -X POST -d '{"RequestInfo":{"context":"remove_previous_stacks", "action" : "remove_previous_stacks", "parameters" : {"version":"3.1.0.0-78"}}, "Requests/resource_filters": [{"hosts":"Ambari_host"}]}'

Please report back with the result.
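For anyone who wants to script the retry, the same request can be put together in Python. This is only a sketch using the standard library: the function name `build_remove_request` and its parameters are made up for illustration, while the endpoint, header, and payload mirror the curl command above. The host, cluster name, and admin:admin credentials are placeholders.

```python
import base64
import json
import urllib.request


def build_remove_request(ambari_host, cluster_name, version, target_host,
                         user="admin", password="admin", port=8080):
    """Build (but do not send) the POST request from the curl example.

    Every argument is a placeholder to be replaced with real values.
    """
    url = f"http://{ambari_host}:{port}/api/v1/clusters/{cluster_name}/requests"
    payload = {
        "RequestInfo": {
            "context": "remove_previous_stacks",
            "action": "remove_previous_stacks",
            "parameters": {"version": version},
        },
        "Requests/resource_filters": [{"hosts": target_host}],
    }
    # Same effect as curl's -u user:password (HTTP Basic authentication).
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {
        "X-Requested-By": "ambari",
        "Authorization": f"Basic {token}",
    }
    return urllib.request.Request(
        url, data=json.dumps(payload).encode(), headers=headers, method="POST"
    )


if __name__ == "__main__":
    req = build_remove_request("Ambari_host", "my_cluster", "3.1.0.0-78", "Ambari_host")
    print(req.get_method(), req.full_url)
    print(req.data.decode())
    # To actually send it: urllib.request.urlopen(req)
```

Building the request separately from sending it makes it easy to inspect the URL and JSON body before anything is posted to the cluster.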

Created 07-29-2020 06:53 AM

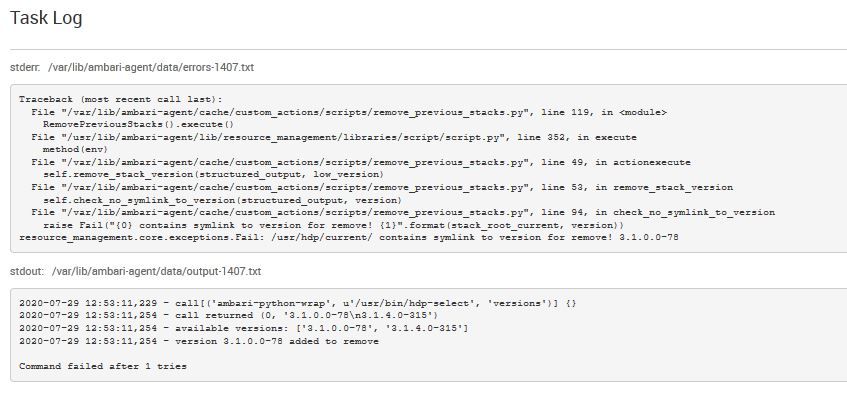

Yes, I tried this workaround, and I still get this error:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py", line 118, in <module>

RemovePreviousStacks().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute

method(env)

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py", line 49, in actionexecute

self.remove_stack_version(structured_output, low_version)

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py", line 53, in remove_stack_version

self.check_no_symlink_to_version(structured_output, version)

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py", line 93, in check_no_symlink_to_version

raise Fail("{0} contains symlink to version for remove! {1}".format(stack_root_current, version))

resource_management.core.exceptions.Fail: /usr/hdp/current/ contains symlink to version for remove! 3.1.0.0-78

It seems to fail because there are still symlinks to the old version in the directory /usr/hdp/current (at least on the server where ambari-server is hosted):

root@server-01:~# ls -l /usr/hdp/current | grep 3.1.0.0-78

lrwxrwxrwx 1 root root 25 Jul 28 06:27 atlas-server -> /usr/hdp/3.1.0.0-78/atlas

lrwxrwxrwx 1 root root 31 Jul 28 06:27 hadoop-hdfs-datanode -> /usr/hdp/3.1.0.0-78/hadoop-hdfs

root@server-01:~# ls -l /usr/hdp/current | grep 3.1.4.0-315

lrwxrwxrwx 1 root root 26 Jul 28 10:44 atlas-client -> /usr/hdp/3.1.4.0-315/atlas

lrwxrwxrwx 1 root root 27 Jul 28 10:51 hadoop-client -> /usr/hdp/3.1.4.0-315/hadoop

lrwxrwxrwx 1 root root 32 Jul 28 10:51 hadoop-hdfs-client -> /usr/hdp/3.1.4.0-315/hadoop-hdfs
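The same check as the `ls -l | grep` above can be scripted so it is easy to repeat on every host. A minimal sketch, assuming the layout shown in the listing (symlinks in one directory whose targets contain the version as a path component); the function name `links_to_version` is hypothetical, and the demo runs against a throwaway directory rather than the real /usr/hdp/current.

```python
import os
import tempfile


def links_to_version(current_dir, version):
    """Return {link_name: target} for symlinks whose target path contains `version`."""
    stale = {}
    for entry in os.scandir(current_dir):
        if entry.is_symlink():
            target = os.readlink(entry.path)
            # Compare whole path components so a shorter version string
            # cannot match by accident as a prefix of a longer one.
            if version in target.split(os.sep):
                stale[entry.name] = target
    return stale


if __name__ == "__main__":
    # Demo with dangling symlinks in a temporary directory.
    with tempfile.TemporaryDirectory() as root:
        current = os.path.join(root, "current")
        os.mkdir(current)
        os.symlink("/usr/hdp/3.1.0.0-78/atlas", os.path.join(current, "atlas-server"))
        os.symlink("/usr/hdp/3.1.4.0-315/atlas", os.path.join(current, "atlas-client"))
        print(links_to_version(current, "3.1.0.0-78"))
```

On a real node the call would presumably be `links_to_version("/usr/hdp/current", "3.1.0.0-78")`; an empty result would mean no symlink still points at the old version.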

Created 07-29-2020 03:25 PM

Bizarre, all the symlinks should point to the newer version 3.1.4.0-315. You should recreate the symlinks so that they point to the new version.

Then re-run the steps.

Created 07-30-2020 05:37 AM

To remove or modify the symlinks that point to the old version, I executed the command '/usr/bin/hdp-select set all 3.1.4.0-315' on all servers of the cluster. After that, I relaunched the API request and got a new error, which I think I can fix with the workaround suggested earlier in this thread:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py", line 119, in <module>

RemovePreviousStacks().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute

method(env)

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py", line 49, in actionexecute

self.remove_stack_version(structured_output, low_version)

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py", line 54, in remove_stack_version

packages_to_remove = self.get_packages_to_remove(version)

File "/var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py", line 77, in get_packages_to_remove

all_installed_packages = self.pkg_provider.all_installed_packages()

AttributeError: 'AptManager' object has no attribute 'all_installed_packages'
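The AttributeError above says that the package-manager object used on this Ubuntu host has no `all_installed_packages` method, which is what the line-77 edit from the workaround addresses. The sketch below reproduces the situation with a stand-in class; `FakeAptManager`, `list_installed`, and the sample package names are hypothetical and are not Ambari code. Only the two method names come from this thread.

```python
class FakeAptManager:
    """Hypothetical stand-in that exposes installed_packages() only."""

    def installed_packages(self):
        # Made-up sample data, not real package-manager output.
        return ["hadoop-3-1-0-0-78", "hadoop-3-1-4-0-315"]


def list_installed(pkg_provider):
    """Call whichever of the two method names the provider actually has."""
    for name in ("all_installed_packages", "installed_packages"):
        method = getattr(pkg_provider, name, None)
        if callable(method):
            return method()
    raise AttributeError("provider has neither package-listing method")


if __name__ == "__main__":
    provider = FakeAptManager()
    try:
        provider.all_installed_packages()
    except AttributeError as exc:
        print("direct call fails:", exc)
    print("fallback returns:", list_installed(provider))
```

Editing line 77 by hand, as described in the workaround, has the same effect as the fallback here for a provider that only has `installed_packages`.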

Created 07-30-2020 06:20 AM

After applying the workaround to the file /var/lib/ambari-agent/cache/custom_actions/scripts/remove_previous_stacks.py, the API request executed successfully, but it had no effect: the directory of the old version has not been removed on the servers of the cluster, and the results of the API queries GET http://localhost/api/v1/clusters/<cluster_name>/stack_versions and GET http://localhost/api/v1/clusters/<cluster_name>/stack_versions/<id_oldversion> are unchanged.
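One way to confirm on each host whether the old version's directory is still present is to list the version directories under the stack root. A minimal sketch, assuming the layout seen in this thread (version directories such as /usr/hdp/3.1.0.0-78 next to /usr/hdp/current); the function name `installed_stack_versions` is hypothetical and the demo uses a temporary directory.

```python
import os
import tempfile


def installed_stack_versions(stack_root):
    """Return sorted names of version-like directories under stack_root.

    Skips "current" and anything that is not a real directory.
    """
    versions = []
    for entry in os.scandir(stack_root):
        if entry.name == "current":
            continue
        # Version directories are assumed to start with a digit.
        if entry.is_dir(follow_symlinks=False) and entry.name[0].isdigit():
            versions.append(entry.name)
    return sorted(versions)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        for name in ("3.1.0.0-78", "3.1.4.0-315", "current"):
            os.mkdir(os.path.join(root, name))
        remaining = installed_stack_versions(root)
        print(remaining)
        print("old version still present:", "3.1.0.0-78" in remaining)
```

On a cluster node the stack root would presumably be /usr/hdp; if the old version still shows up there after the request reports success, the removal did not take effect on that host.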