
User not allowed to do 'DECRYPT_EEK' despite the group to which the user belongs having proper access

Created 08-10-2017 12:45 PM

Hi All,

I have created an encryption zone, but I am not able to copy data into it as USER_1, which belongs to GROUP_1. I get the error below:

copyFromLocal: User:USER_1 not allowed to do 'DECRYPT_EEK' on 'key1'

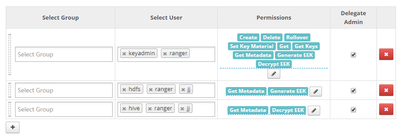

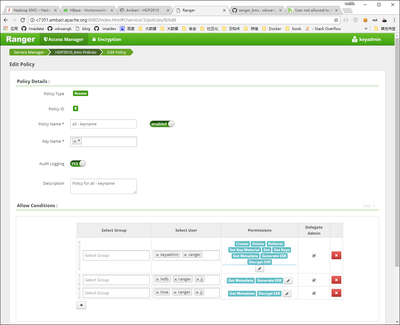

In the Ranger KMS policies I have given full access to the group GROUP_1, but I still face this issue. Do group-level policies not apply in Ranger KMS, or is there some configuration I have to tweak to make this work?

Please help me understand this issue. Any clue or suggestion is appreciated.

FYI, the cluster is kerberized.

Thanks in advance.

Created 08-10-2017 01:09 PM

Have you created a keytab for USER_1? Check in:

$ ls -al /etc/security/keytabs

Did USER_1 obtain a valid Kerberos ticket? As USER_1, run:

$ klist

Do you get any output?

USER_1 might have been blacklisted by the property hadoop.kms.blacklist.DECRYPT_EEK in Ambari. That is the most probable reason why you are unable to decrypt as USER_1.

Did you give USER_1 read permission on that encryption zone?
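The ticket check above, plus a look at how the user's groups resolve, could be bundled into a small script. This is only a sketch: the function names `in_group_list` and `check_group_access` are made up for illustration, and the group lookup goes beyond what the reply asks for. `hdfs groups` reports groups as the NameNode resolves them, which may differ from what the KMS host sees, so treat a match as a hint rather than proof.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if group $2 appears in the group listing $1.
# Accepts `hdfs groups` output ("user : g1 g2") or `id -Gn` output ("g1 g2").
in_group_list() {
  case " ${1#*:} " in
    *" $2 "*) return 0 ;;
    *)        return 1 ;;
  esac
}

# Hypothetical wrapper around the manual checks. Run it as the affected user
# on a host that has the Kerberos and Hadoop clients installed.
check_group_access() {
  user=$1
  group=$2
  # klist fails when there is no readable ticket cache.
  klist || { echo "no Kerberos ticket cache found; run kinit first" >&2; return 1; }
  # Ask the NameNode which groups it resolves for this user.
  line=$(hdfs groups "$user") || return 1
  if in_group_list "$line" "$group"; then
    echo "$user resolves to $group"
  else
    echo "$user does NOT resolve to $group: $line" >&2
    return 1
  fi
}

# Example: check_group_access USER_1 GROUP_1
```

If the group is missing from the listing, a group-level policy cannot match the user no matter how the policy itself is written.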

Created 08-10-2017 01:54 PM

"Have you created a keytab for USER_1?"

I am not using a keytab for access.

"Did USER_1 obtain a valid Kerberos ticket?"

Yes, I have created a proper ticket using the kinit command.

"USER_1 might have been blacklisted by the property hadoop.kms.blacklist.DECRYPT_EEK in Ambari."

No. I looked at the Ranger KMS configs, and only the hdfs user is blacklisted.

"Did you give USER_1 read permission on that encryption zone?"

What do you mean by this?

==============================================================

FYI, as soon as I add USER_1 to the access list, it works just fine. It only fails when access is given at the group level.

Attaching some logs which might help you understand the problem better:

# hadoop fs -copyFromLocal test3 /test/1234
copyFromLocal: User:USER_1 not allowed to do 'DECRYPT_EEK' on '1234'
17/08/10 13:48:45 WARN retry.RetryInvocationHandler: Exception while invoking ClientNamenodeProtocolTranslatorPB.complete over null. Not retrying because try once and fail.
org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.LeaseExpiredException): No lease on /test/1234/test3._COPYING_ (inode 329412): File does not exist. Holder DFSClient_NONMAPREDUCE_-196143005_1 does not have any open files.
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkLease(FSNamesystem.java:3521)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.completeFileInternal(FSNamesystem.java:3611)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.completeFile(FSNamesystem.java:3578)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.complete(NameNodeRpcServer.java:905)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.complete(ClientNamenodeProtocolServerSideTranslatorPB.java:544)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:640)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2313)
    at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2309)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:415)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2307)
    at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1552)
    at org.apache.hadoop.ipc.Client.call(Client.java:1496)
    at org.apache.hadoop.ipc.Client.call(Client.java:1396)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233)
    at com.sun.proxy.$Proxy16.complete(Unknown Source)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.complete(ClientNamenodeProtocolTranslatorPB.java:501)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:278)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:194)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:176)
    at com.sun.proxy.$Proxy17.complete(Unknown Source)
    at org.apache.hadoop.hdfs.DFSOutputStream.completeFile(DFSOutputStream.java:2361)
    at org.apache.hadoop.hdfs.DFSOutputStream.closeImpl(DFSOutputStream.java:2338)
    at org.apache.hadoop.hdfs.DFSOutputStream.close(DFSOutputStream.java:2303)
    at org.apache.hadoop.hdfs.DFSClient.closeAllFilesBeingWritten(DFSClient.java:947)
    at org.apache.hadoop.hdfs.DFSClient.closeOutputStreams(DFSClient.java:979)
    at org.apache.hadoop.hdfs.DistributedFileSystem.close(DistributedFileSystem.java:1192)
    at org.apache.hadoop.fs.FileSystem$Cache.closeAll(FileSystem.java:2852)
    at org.apache.hadoop.fs.FileSystem$Cache$ClientFinalizer.run(FileSystem.java:2869)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)
    at java.util.concurrent.FutureTask.run(FutureTask.java:262)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
    at java.lang.Thread.run(Thread.java:745)

Created on 08-17-2017 08:49 AM - edited 08-17-2019 09:13 PM

I hit the same problem:

$ hdfs dfs -put ./ca.key /tmp/webb

put: User:jj not allowed to do 'DECRYPT_EEK' on 'zonekey1'

I fixed the problem by adding permissions for user 'jj'.

Created 08-17-2017 06:01 PM

I am not really looking for this kind of solution. It works when you give permission at the user level, but I want it to work at the group level. I think I have explained the question well; if not, let me know what I can do to make it clearer.

Thanks anyway for your reply.

Created 08-17-2017 09:12 AM

So it was a permissions issue, as I stated earlier! Can you validate the same on your cluster?

Created 08-17-2017 06:01 PM

No, @Geoffrey Shelton Okot, this is not the solution. I want to give the permission at the group level.

Created 08-17-2017 07:59 PM

KMS has an ACL file named "kms-acls.xml". Can you paste its contents into this thread, or attach them as a file?

Created 08-18-2017 05:02 AM

I checked the kms-acls.xml file, and the value of every property is set to *. Is there any property I need to change to make it work?
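To confirm exactly what is set rather than eyeballing the file, the DECRYPT_EEK entries can be pulled out with a few lines of shell. This is a sketch only: `kms_acl_values` is a made-up helper, the parsing assumes each `<name>` and `<value>` element sits on a single line with the name before its value, and the file location varies by install, so the path in the usage comment is a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: print "name=value" for every property in a Hadoop-style
# XML config file ($1) whose name contains the substring $2.
kms_acl_values() {
  awk -v pat="$2" '
    /<name>/ {
      # Remember the most recent property name.
      name = $0
      sub(/.*<name>/, "", name)
      sub(/<\/name>.*/, "", name)
    }
    /<value>/ {
      # Pair the value with the name seen just before it.
      value = $0
      sub(/.*<value>/, "", value)
      sub(/<\/value>.*/, "", value)
      if (index(name, pat) > 0) print name "=" value
    }
  ' "$1"
}

# Example (placeholder path): kms_acl_values /path/to/kms-acls.xml DECRYPT_EEK
```

A value of `*` on an ACL property means everyone is allowed at that level, so if every entry printed is `*`, the denial most likely comes from somewhere other than this file.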

Created on 08-17-2017 11:45 PM - edited 08-17-2019 09:13 PM

My KMS is newly installed. I checked kms-acls.xml, and every setting is at its default (almost all are "*"). I changed the policy, and it worked.