I'm using Cloudera Manager 7.4.4 and running a Spark (2.4.4) application. I get the warning below, and the application goes into an infinite loop.

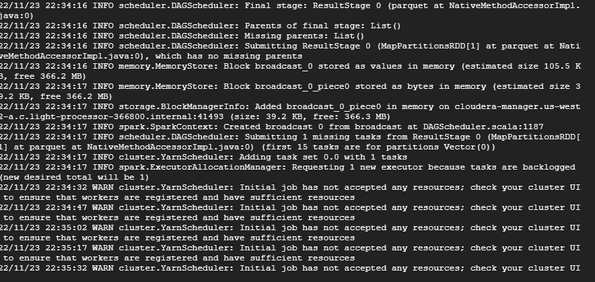

`WARN cluster.YarnScheduler: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources`

I'm using a simple spark-submit command: `spark-submit <filename.py>`

My Python version is 2.7.

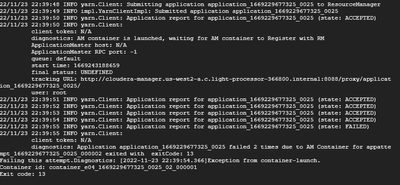

When I run the command below, I get many other errors:

`spark-submit --master yarn --deploy-mode cluster --driver-memory 5g --executor-memory 5g --num-executors 3 --executor-cores 2 <filename.py>`
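
For reference, here is a rough sketch of how much memory that command asks YARN for. It assumes Spark's default memory overhead of max(384 MiB, 10% of the heap) per container and that `spark.executor.memoryOverhead` and `spark.driver.memoryOverhead` have not been overridden on this cluster. The helper names `container_mb` and `total_request_mb` are made up for illustration. YARN may also round each container up to its allocation increment, so the real figure could be somewhat higher.

```python
# Rough estimate of the YARN memory requested by the spark-submit command above.
# Assumption: default overhead of max(384 MiB, 10% of the heap) per container.

def container_mb(heap_gb, overhead_percent=10, min_overhead_mb=384):
    """Heap plus the assumed default memory overhead, in MiB."""
    heap_mb = heap_gb * 1024
    overhead_mb = max(min_overhead_mb, heap_mb * overhead_percent // 100)
    return heap_mb + overhead_mb


def total_request_mb(driver_gb, executor_gb, num_executors):
    """Driver container (cluster deploy mode) plus all executor containers."""
    return container_mb(driver_gb) + num_executors * container_mb(executor_gb)


requested = total_request_mb(driver_gb=5, executor_gb=5, num_executors=3)
print("%d MiB requested in total" % requested)
```

If the total is larger than what the NodeManagers offer (`yarn.nodemanager.resource.memory-mb`), or a single container exceeds `yarn.scheduler.maximum-allocation-mb`, that might explain why the job never receives resources.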

These are the errors: