Warning: fs.defaultFS is not set

- Labels: Cloudera Manager, HDFS

Created 06-11-2017 03:44 AM

Hello,

I have an 8-node Cloudera cluster: three master nodes with the master daemons up and running, three slave nodes with all slave daemons up and running, and two edge nodes for accessing Hadoop services. Hue is installed on both edge nodes. The cluster is managed from Cloudera Manager.

I have deployed the client configuration across all nodes. The environment variables are set correctly, and I have checked the Hadoop configuration on my edge nodes. However, I am unable to access Hadoop services from the edge nodes with the HDFS shell commands. The endpoints are configured properly and Hue works fine. I can run all HDFS shell commands on my master and slave nodes. It is a very basic question. I have checked everywhere I could think of on the server, but I can't locate the problem. Could you please help me resolve the issue?

    sudo -u hdfs hadoop fs -ls /
    Warning: fs.defaultFS is not set when running "ls" command.
    Found 21 items
    -rw-r--r-- 1 root root 0 2017-06-07 18:31 /.autorelabel
    -rw-r--r-- 1 root root 0 2017-05-16 19:55 /1
    dr-xr-xr-x - root root 28672 2017-06-10 06:16 /bin
    dr-xr-xr-x - root root 4096 2017-05-16 20:03 /boot
    drwxr-xr-x - root root 3040 2017-06-07 18:31 /dev
    drwxr-xr-x - root root 8192 2017-06-10 07:22 /etc
    drwxr-xr-x - root root 56 2017-06-10 07:22 /home
    dr-xr-xr-x - root root 4096 2017-06-10 06:15 /lib
    dr-xr-xr-x - root root 32768 2017-06-10 06:15 /lib64
    drwxr-xr-x - root root 6 2016-11-05 15:38 /media
    drwxr-xr-x - root root 35 2017-06-07 01:57 /mnt
    drwxr-xr-x - root root 45 2017-06-10 06:14 /opt
    dr-xr-xr-x - root root 0 2017-06-08 11:04 /proc
    dr-xr-x--- - root root 4096 2017-06-10 06:23 /root
    drwxr-xr-x - root root 860 2017-06-10 06:16 /run
    dr-xr-xr-x - root root 20480 2017-06-07 19:47 /sbin
    drwxr-xr-x - root root 6 2016-11-05 15:38 /srv
    dr-xr-xr-x - root root 0 2017-06-08 11:04 /sys
    drwxrwxrwt - root root 4096 2017-06-11 10:32 /tmp
    drwxr-xr-x - root root 167 2017-06-07 19:43 /usr
    drwxr-xr-x - root root 4096 2017-06-07 19:46 /var

Best Regards,

RK.

Created 06-13-2017 12:21 PM

Hey rkkrishnaa,

Has the edge node been assigned the "HDFS Gateway" role? You can confirm this in Cloudera Manager by clicking on "Hosts" and expanding the roles for that host.

Cheers
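To see why the Gateway role matters here: the listing in the question shows the local root directory (/bin, /boot, /etc ...), which is consistent with the client falling back to Hadoop's built-in default of `file:///` when `fs.defaultFS` is absent from its configuration. A rough way to check what the edge node's client configuration contains is sketched below. The helper name `get_default_fs` is made up for illustration, `/etc/hadoop/conf` is only assumed to be the client configuration directory on your host, and the sed-based XML parsing is a simplification rather than a real XML parser.

```shell
#!/bin/sh
# Hypothetical helper: print the fs.defaultFS value found in a core-site.xml,
# or file:/// (Hadoop's built-in default) if the file or property is missing.
get_default_fs() {
  conf_file="$1"
  value=""
  if [ -f "$conf_file" ]; then
    # Join the file into one line so <name> and <value> can sit on separate
    # lines, then capture the <value> that directly follows the property name.
    value=$(tr -d '\n' < "$conf_file" \
      | sed -n 's:.*<name>fs\.defaultFS</name>[[:space:]]*<value>\([^<]*\)</value>.*:\1:p')
  fi
  printf '%s\n' "${value:-file:///}"
}

# Assumed location of the deployed client configuration; adjust as needed.
get_default_fs "${HADOOP_CONF_DIR:-/etc/hadoop/conf}/core-site.xml"
```

If this prints `file:///` on the edge node but an `hdfs://` URI on a master node, the client configuration was probably never deployed to the edge node. On a host with the Hadoop client installed, `hdfs getconf -confKey fs.defaultFS` reports the value the client actually resolves.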

Created 02-15-2018 09:39 AM

Created 08-09-2018 08:02 AM

What's the resolution?

Created 09-30-2018 05:13 AM

Brother

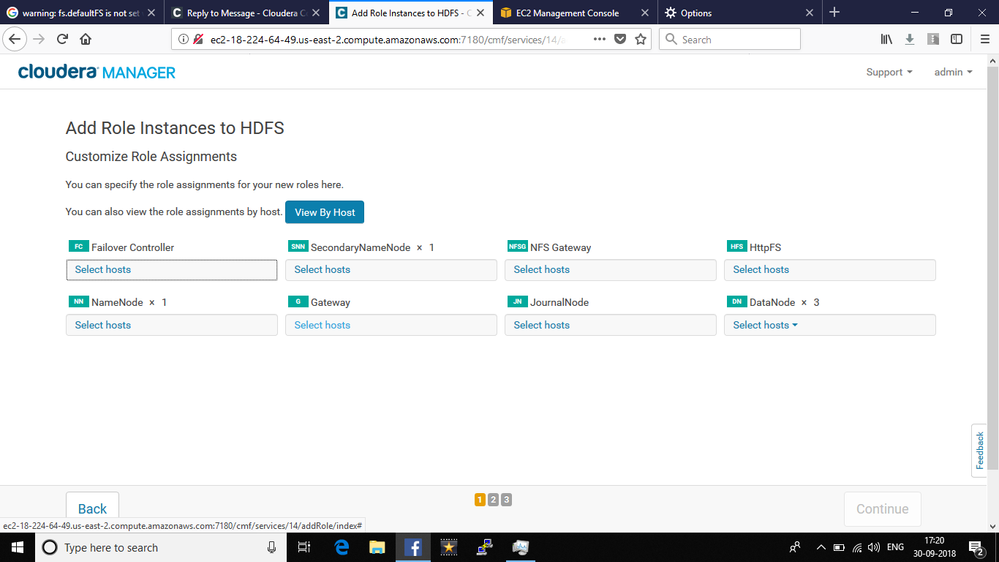

Just go to HDFS --> Instances --> Add Role Instances, then select and configure the "Gateway" role on the host.

Your problem will be resolved... in-sha-ALLAH.

Created 08-13-2019 12:43 AM

This can vary from case to case. If the node was previously part of a different cluster, the /opt/cloudera/parcels/CDH/lib/hadoop/etc/hadoop directory may not have been cleared before the server was added to the new cluster. The leftover files from the old cluster may not work as expected in the new one, because the parameters in the configuration files can differ from cluster to cluster. In that situation we need to forcefully remove the contents of that directory and replace them manually with the contents from another node of the same cluster.
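Before removing anything, it may help to confirm that the directory really differs from a known-good node. The sketch below assumes you have already copied the configuration directory from a working node to a local path. The helper name `list_conf_differences` and the `/tmp/good-node-conf` path are hypothetical, used only for illustration.

```shell
#!/bin/sh
# Hypothetical helper: list files that differ between a reference config
# directory (copied from a working node) and the suspect node's directory.
# Exit status follows diff: 0 if identical, 1 if differences were found.
list_conf_differences() {
  reference_dir="$1"
  suspect_dir="$2"
  # -r recurses into subdirectories, -q reports only which files differ.
  diff -rq "$reference_dir" "$suspect_dir"
}

# Example invocation (paths are assumptions, adjust to your environment):
# list_conf_differences /tmp/good-node-conf /opt/cloudera/parcels/CDH/lib/hadoop/etc/hadoop
```

If the output lists core-site.xml or hdfs-site.xml as differing, or shows files present on only one side, that supports replacing the stale contents as described above. Taking a backup of the old directory first is a cheap precaution.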