What are the important metrics to notice for each Stage in Spark UI?

Labels: Apache Spark

Created on 11-21-2016 05:50 PM - edited 08-19-2019 04:06 AM

I am running a Spark job on an HDFS file of size 182.4 GB. This is the config I passed to get the fastest computing time, which was around 4 mins.

spark-submit --master yarn-cluster --executor-memory 64G --num-executors 30 --driver-memory 4g --executor-cores 4 --queue xxx test.jar

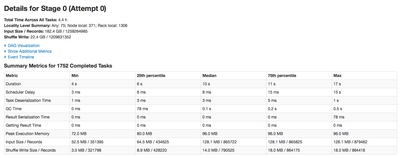

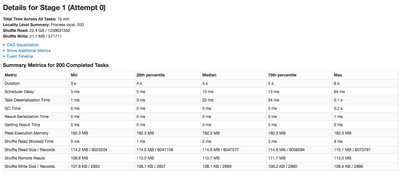

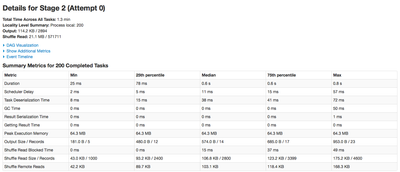

The screenshots show all the metrics reported by the Spark UI for each stage after the job completed. I want to know which factors I should be looking at when comparing these metrics across the Min, 25th percentile, Median, 75th percentile and Max columns.

------------------------------------------------------------------------------------------------------------------------------------------------------------------------

- STAGE 0

------------------------------------------------------------------------------------------------------------------------------------------------------------------------

- STAGE 1

------------------------------------------------------------------------------------------------------------------------------------------------------------------------

- STAGE 2

------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Created 11-22-2016 07:33 PM

To add to what @Scott Shaw said, the biggest thing we'd be looking for initially is data skew. There are a couple of things we can look at to help determine this.

The first is the input size. Here we can ignore the min entirely and look at the 25th percentile, median and 75th percentile. In your job they are fairly close together, and the max is never dramatically more than the median. If the max and 75th percentile were much larger than the median, that would point to data skew.

Another indicator of data skew is the task duration. Again, ignore the minimum; we're inevitably going to get a small partition for one reason or another. Focus on the 25th percentile, median, 75th percentile and max. In a perfect world the separation between the four would be tiny. So 6s, 10s, 11s and 17s may seem significantly different, but they're actually relatively close. The only cause for concern would be when the 75th percentile and max are quite a bit greater than the 25th percentile and median. When I say significant, I'm talking about most tasks taking ~30s and the max taking 10 mins. That would be a clear indicator of data skew.
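If you want to run the same comparison outside the UI, here is a minimal Python sketch of the idea. It assumes you have copied the per-task values (durations or input sizes) into a list yourself; the function names, the sample numbers and the `max_ratio` threshold are all made up for illustration and are not Spark settings.

```python
import statistics

def summarize(values):
    """Return (min, p25, median, p75, max) for a list of per-task values."""
    ordered = sorted(values)
    # With n=4, statistics.quantiles returns the three quartile cut points.
    p25, median, p75 = statistics.quantiles(ordered, n=4, method="inclusive")
    return ordered[0], p25, median, p75, ordered[-1]

def looks_skewed(values, max_ratio=5.0):
    """Flag skew when the max is much larger than the median.

    max_ratio is an arbitrary illustrative threshold, not a Spark setting.
    """
    _, _, median, _, largest = summarize(values)
    return median > 0 and largest / median > max_ratio

# Hypothetical task durations in seconds.
balanced = [6, 9, 10, 10, 11, 11, 12, 17]      # spread like 6s / 10s / 11s / 17s
skewed = [28, 30, 30, 31, 32, 29, 30, 600]     # most tasks ~30s, one takes 10 mins

print(summarize(balanced), looks_skewed(balanced))
print(summarize(skewed), looks_skewed(skewed))
```

The minimum is computed but deliberately left out of the skew check, for the same reason given above: a tiny partition is expected and tells you nothing.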

Created 11-21-2016 06:29 PM

Hi @Adnan Alvee. Having already narrowed performance issues down to a particular job, the primary thing you will want to look for within each stage is skew. Skew is when a small number of tasks take significantly longer to execute than others. You'll want to look specifically at task runtime to see if something is wrong.

As you drill down into the slower-running tasks, you'll want to focus on where they are slow. Is the slowness in writing data, reading data, or computation? The answer can narrow things down to a problem with a particular node, or show that you don't have enough disks to handle the scratch space for shuffles.
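As a rough illustration of that read / compute / write question, here is a small Python sketch. It assumes you have already split one slow task's time into three buckets by hand from the task metrics; the key names and the numbers are hypothetical and do not correspond to actual Spark UI column names.

```python
def dominant_phase(task):
    """Return the phase that takes the most time in one task, and its share of the total."""
    total = sum(task.values())
    phase = max(task, key=task.get)
    return phase, task[phase] / total

# Hypothetical breakdown of one slow task, in seconds.
slow_task = {"read_s": 4.0, "compute_s": 6.0, "write_s": 30.0}

phase, share = dominant_phase(slow_task)
print(phase, round(share, 2))
```

If the same phase dominates only for tasks on one node, that points at the node; if it dominates everywhere, it is more likely a capacity or configuration question.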

Keep in mind that Spark scales linearly. Your processing may be slow simply because there is not enough hardware. You'll want to focus on how much memory and CPU you've allocated to your executors as well as how many disks you have in each node.

It also looks as if your number of executors is quite large. Consider having fewer executors with more resources per executor. Also, the executor memory setting is memory per executor, and the number you have is a bit large. Try playing with those numbers and see if it makes a difference.
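To put numbers on that, here is a back-of-the-envelope Python sketch using the values from the `spark-submit` command in the question. It only multiplies the flags out; it ignores any memory overhead added on top of `--executor-memory` and says nothing about what the cluster can actually grant. The function name is just for illustration.

```python
def requested_resources(num_executors, executor_memory_gb, executor_cores):
    """Multiply out the per-executor settings into job-wide totals."""
    return {
        "total_memory_gb": num_executors * executor_memory_gb,
        "total_cores": num_executors * executor_cores,
        "memory_per_core_gb": executor_memory_gb / executor_cores,
    }

# Values taken from the command in the question:
# --num-executors 30 --executor-memory 64G --executor-cores 4
req = requested_resources(num_executors=30, executor_memory_gb=64, executor_cores=4)
input_gb = 182.4  # HDFS input size from the question

print(req)
print("requested memory / input size:", round(req["total_memory_gb"] / input_gb, 1))
```

Re-running this with different executor counts and sizes is a quick way to compare candidate configurations before trying them on the cluster.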

Hope this helps.
