What happens when the Spark component is added through Ambari?

Created 02-25-2016 04:56 PM

Hi there,

I am trying to install Spark on a 16-node HDP cluster. When I select the Spark component in Ambari (on the Add components page), what happens? Does it install the Spark libraries on all the nodes and a Spark server on the master node?

Previously I ran Spark in local mode, but I want to know how the installation works under the hood.

Regards.

Created on 02-25-2016 05:04 PM - edited 08-18-2019 05:08 AM

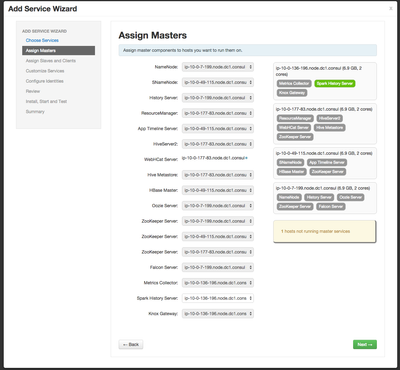

@Smart Solutions you will have to select where you want the Spark History Server to run and which machines to install Spark clients on. A later page in the wizard lets you pick which nodes get which components. For example, on the Sandbox, clicking either URL below takes me to sandbox.hortonworks.com; on your cluster, the URLs will point to whatever servers you defined.

Created 02-25-2016 06:13 PM

Thanks. Does that mean that, when configuring through Ambari on HDP, we install just the Spark clients and nothing related to master processes or a server?

Created 02-25-2016 06:26 PM

No, you install master services as well. The Spark History Server, for example, is a service. I don't have access to an installation right now, otherwise I would have shown you the install steps. Basically, components are categorized as clients and master services. You check and uncheck which machines will serve as what. @Smart Solutions

Created 02-25-2016 05:06 PM

Take a look at this:

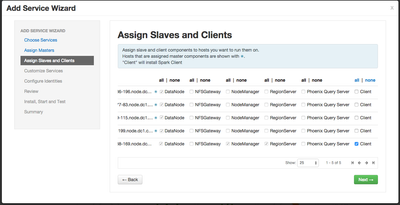

You can pick nodes where you want your clients.

When you install Spark, the following directories will be created:

- /usr/hdp/current/spark-client for submitting Spark jobs
- /usr/hdp/current/spark-history for launching Spark master processes, such as the Spark History Server
- /usr/hdp/current/spark-thriftserver for the Spark Thrift Server
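To confirm what landed on a given node after the install, a small check like the one below may help. It is only a sketch: the three directory names come from the list above, while the function name `check_spark_dirs` and the `base` parameter are made up for illustration. It also assumes that a directory may be absent on nodes where the matching component was not selected, which I have not verified on a real cluster.

```python
from pathlib import Path

# Directory names taken from the list above; the descriptions are only labels
# for the printed report.
SPARK_DIRS = {
    "spark-client": "submitting Spark jobs",
    "spark-history": "Spark master processes such as the Spark History Server",
    "spark-thriftserver": "the Spark Thrift Server",
}


def check_spark_dirs(base="/usr/hdp/current"):
    """Return {directory name: True/False} for each expected Spark directory.

    `base` is a parameter (hypothetical helper, not part of Ambari) so the
    check can also be pointed at a scratch directory.
    """
    base_path = Path(base)
    return {name: (base_path / name).exists() for name in SPARK_DIRS}


if __name__ == "__main__":
    # Run this on a cluster node to see which directories are present there.
    for name, present in check_spark_dirs().items():
        status = "present" if present else "missing"
        print(f"{name:20s} {status:8s} ({SPARK_DIRS[name]})")
```

Running it on a client-only node and on the History Server node should make the difference between the two roles visible.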

Created on 02-26-2016 12:09 AM - edited 08-18-2019 05:08 AM

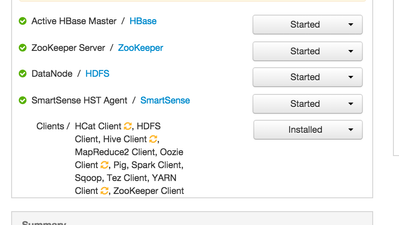

@Smart Solutions See this.

You need master services and clients.

You can see that I have the Spark client on 3 nodes.

When I click Spark clients and then click one of the nodes, you can see all the clients installed on that node.

See: Clients
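The per-node view described here is just the wizard's "component to hosts" assignment turned around. As a rough illustration (this is not Ambari code; the function name `components_by_host` and the host names are hypothetical), the same inversion can be sketched like this:

```python
from collections import defaultdict


def components_by_host(assignment):
    """Invert {component: [hosts]} into {host: [components]}.

    Hypothetical helper for illustration only; it mirrors what the
    per-node view shows, not how Ambari stores its data.
    """
    by_host = defaultdict(list)
    for component, hosts in assignment.items():
        for host in hosts:
            by_host[host].append(component)
    # Sort hosts and components so the output is stable.
    return {host: sorted(components) for host, components in sorted(by_host.items())}


if __name__ == "__main__":
    # Example layout with made-up host names: one master service, three clients.
    layout = {
        "Spark History Server": ["node1.example.com"],
        "Spark Client": [
            "node1.example.com",
            "node2.example.com",
            "node3.example.com",
        ],
    }
    for host, components in components_by_host(layout).items():
        print(host, "->", ", ".join(components))
```

With this layout, node1 carries both the master service and a client, while node2 and node3 carry only the client.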

Created on 03-03-2016 05:24 PM - edited 08-18-2019 05:07 AM

When you add Spark through Ambari, you are asked to choose where to deploy the master service (the Spark History Server).

Next, you choose where to deploy the client services.

Finally, you are asked to set several properties.