Support Questions

What is a good approach for splitting a 100 GB file into multiple files?

Labels: Apache NiFi

Created 10-26-2016 04:31 PM

Hi ,

I have a 100 GB file that I need to split into multiple files (maybe 1000) based on the value of "Tagname" in the sample data below, and then write them into HDFS.

Tagname,Timestamp,Value,Quality,QualityDetail,PercentGood
ABC04.PI_B04_EX01_A_STPDATTRG.F_CV,2/18/2015 1:03:32 AM,627,Good,NonSpecific,100
ABC04.PI_B04_EX01_A_STPDATTRG.F_CV,2/18/2015 1:03:33 AM,628,Good,NonSpecific,100
ABC05.X4_WET_MX_DDR.F_CV,2/18/2015 12:18:00 AM,12,Good,NonSpecific,100
ABC05.X4_WET_MX_DDR.F_CV,2/18/2015 12:18:01 AM,4,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 1:04:19 AM,3979,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 9:35:23 PM,4018,Good,NonSpecific,100
ABC04.PI_B04_FDR_A_STPDATTRG.F_CV,2/18/2015 9:35:24 PM,4019,Good,NonSpecific,100

In reality, the same Tagname repeats on consecutive lines (maybe 10K+ lines) until its value changes. I need to create one file for each tag.
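Because the rows for each Tagname arrive as one contiguous run, the split itself never needs more than one run in memory. As an illustration outside NiFi, here is a minimal Python sketch; `tag_runs` is a hypothetical helper, and it assumes the first line is the header and the Tagname is the first comma-separated field:

```python
import itertools
from typing import Iterable, Iterator, List, Tuple


def tag_runs(lines: Iterable[str]) -> Iterator[Tuple[str, List[str]]]:
    """Yield (Tagname, rows) for each contiguous run of rows sharing a Tagname.

    Hypothetical helper: only the current run is held in memory, so a very
    large input can be streamed as long as no single run is enormous.
    """
    stripped = (line.rstrip("\n") for line in lines)
    next(stripped, None)  # skip the "Tagname,Timestamp,..." header line
    rows = (line for line in stripped if line)  # drop blank lines
    # groupby only merges *adjacent* rows with the same key, matching contiguous runs.
    for tag, run in itertools.groupby(rows, key=lambda line: line.split(",", 1)[0]):
        yield tag, list(run)
```

To write the files, append each yielded run to a file named after its tag (append mode, in case the same tag shows up again later in the input).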

Do I have to split the file into smaller files (maybe 20 files of 5 GB each) using SplitFile? If I do, will it split exactly at the end of lines? Do I have to read line by line using ExtractText, or is there a better approach?

Can I use ConvertCSVToAvro, then ConvertAvroToJSON, and then split the JSON file by tag using SplitJson?

Do I have to change any default NiFi settings for this?

Regards,

Sai

Created on 10-26-2016 07:10 PM - edited 08-18-2019 05:54 AM

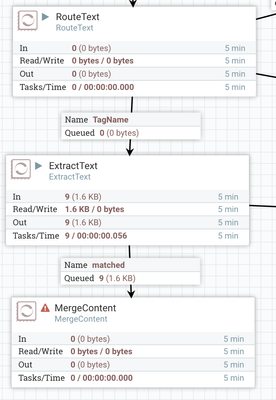

I agree that you may still need to split your very large incoming FlowFile into smaller FlowFiles to better manage heap memory usage, but you should be able to use the RouteText and ExtractText processors as follows to accomplish what you want:

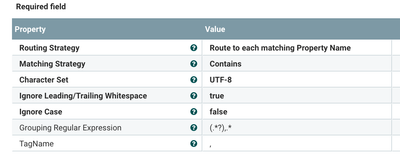

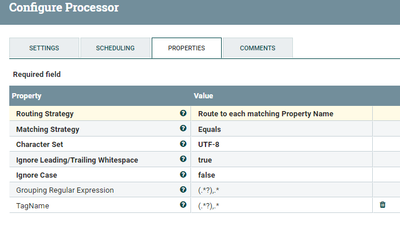

RouteText configured as follows:

All lines that belong to the same group will be routed together to the "TagName" relationship as a new FlowFile.
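Conceptually, the Grouping Regular Expression makes RouteText bundle the lines sent to a relationship by the value of the regex's capture group, so each distinct Tagname in an incoming FlowFile becomes one outgoing FlowFile. The exact regex from the screenshot is not preserved, so the pattern below is an assumption, and the Python code only models the behavior; it is not NiFi's implementation:

```python
import re
from typing import Dict, List

# Assumed grouping pattern: capture the Tagname (text before the first comma).
GROUPING_REGEX = re.compile(r"^([^,]+),")


def group_lines(lines: List[str]) -> Dict[str, List[str]]:
    """Bundle lines by the captured Tagname, one bundle per distinct value.

    Models RouteText's grouping: each bundle would become one outgoing FlowFile.
    Lines the pattern does not match are keyed by "" here (a choice of this sketch).
    """
    groups: Dict[str, List[str]] = {}  # dicts keep insertion order (Python 3.7+)
    for line in lines:
        match = GROUPING_REGEX.match(line)
        key = match.group(1) if match else ""
        groups.setdefault(key, []).append(line)
    return groups
```

Unlike a plain streaming split, this gathers all lines with the same Tagname within one incoming FlowFile, even when they are not adjacent.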

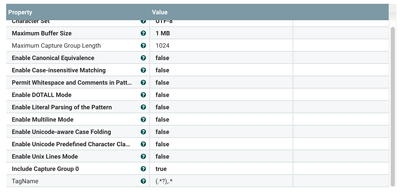

These FlowFiles feed into an ExtractText processor configured as follows:

This extracts the TagName as an attribute on the FlowFile, which you can then use as the Correlation Attribute Name in the MergeContent processor that follows.
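The ExtractText step amounts to running a regex with one capture group against the FlowFile content and storing the captured text as an attribute. Here is a minimal Python model, assuming a dynamic property named `TagName` with the pattern `^([^,]+),` (both assumptions, since the screenshot is not preserved) and a toy `FlowFile` class that is not NiFi's API:

```python
import re
from dataclasses import dataclass, field
from typing import Dict

# Assumed ExtractText-style dynamic property: TagName = ^([^,]+),
TAGNAME_REGEX = re.compile(r"^([^,]+),")


@dataclass
class FlowFile:
    """Toy stand-in for a NiFi FlowFile: attributes plus text content."""
    content: str
    attributes: Dict[str, str] = field(default_factory=dict)


def extract_tagname(ff: FlowFile) -> FlowFile:
    """Copy the first capture group of TAGNAME_REGEX into the 'TagName' attribute.

    Every line in a RouteText group shares the same Tagname, so matching the
    start of the content is enough; no attribute is set if nothing matches.
    """
    match = TAGNAME_REGEX.match(ff.content)
    if match:
        ff.attributes["TagName"] = match.group(1)
    return ff
```

MergeContent would then bin FlowFiles that carry the same `TagName` value.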

Thanks,

Matt

Created 10-26-2016 04:47 PM

You may consider using the RouteText processor to route the individual lines from your source FlowFile to relationships based on your various Tagnames, and then use MergeContent processors to merge those lines back into a single FlowFile.

Created 10-26-2016 05:11 PM

Also, you should chain a series of SplitText processors rather than use just one: the first could split into 100,000-line chunks or so, the next into 1,000-line chunks, and the next into single lines. Those numbers (and the number of SplitText processors) can be tuned for your dataset, but this should prevent any single processor from hanging or running out of memory.
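A quick back-of-the-envelope check shows why chaining helps: what matters is how many pieces a single input produces in one step. The Python sketch below models SplitText's line-count splitting; the helper names are hypothetical, and the 1.25 billion line figure is an assumption (100 GB at roughly 80 bytes per line, similar to the sample rows):

```python
from typing import Iterator, List, Sequence


def split_lines(lines: Sequence[str], line_count: int) -> List[Sequence[str]]:
    """One SplitText-like step: cut an input into pieces of at most line_count lines."""
    return [lines[i:i + line_count] for i in range(0, len(lines), line_count)]


def staged_split(lines: Sequence[str], stages: Sequence[int]) -> Iterator[Sequence[str]]:
    """Apply split_lines once per stage, e.g. [100_000, 1_000, 1]."""
    if not stages:
        yield lines
        return
    for piece in split_lines(lines, stages[0]):
        yield from staged_split(piece, stages[1:])


def max_fan_out(total_lines: int, stages: Sequence[int]) -> List[int]:
    """Largest number of pieces any single input produces at each stage."""
    fan_outs: List[int] = []
    size = total_lines
    for count in stages:
        fan_outs.append(-(-size // count))  # ceiling division
        size = min(size, count)  # the next stage sees pieces of at most `count` lines
    return fan_outs


# Assumed input size: ~1.25 billion lines.
print(max_fan_out(1_250_000_000, [1]))                   # one SplitText to single lines
print(max_fan_out(1_250_000_000, [100_000, 1_000, 1]))   # chained SplitTexts
```

With one SplitText set to 1 line, a single input would fan out into 1,250,000,000 pieces in one step; with the 100,000 / 1,000 / 1 chain, no single input produces more than 12,500.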

Created 10-26-2016 05:31 PM

The same holds true for the MergeContent side of this flow. Have one MergeContent merge the first 10,000 FlowFiles, and a second MergeContent merge multiple 10,000-line FlowFiles into even larger merged FlowFiles. Again, this will help prevent running into OOM (out-of-memory) errors.
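The staged merge can be sketched the same way. In this hypothetical Python model, each step concatenates at most `max_entries` inputs per bin (roughly what MergeContent's Maximum Number of Entries bounds); the second-stage value of 100 is an assumption, since the answer above only says "multiple":

```python
from typing import List, Sequence


def merge_step(pieces: Sequence[str], max_entries: int) -> List[str]:
    """One MergeContent-like step: concatenate up to max_entries pieces per bin."""
    return ["".join(pieces[i:i + max_entries]) for i in range(0, len(pieces), max_entries)]


def staged_merge(pieces: Sequence[str], stages: Sequence[int]) -> List[str]:
    """Run merge_step once per stage, e.g. [10_000, 100]: 10,000 lines per bin,
    then 100 of those bins per larger bin. No bin ever holds more than
    max_entries inputs, which keeps each step's bookkeeping small."""
    result = list(pieces)
    for max_entries in stages:
        result = merge_step(result, max_entries)
    return result
```

Merging in stages yields the same bytes as one giant merge; it only bounds how many inputs each step has to track.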

Created on 10-26-2016 05:48 PM - edited 08-18-2019 05:55 AM

Hi @mclark,

There will be thousands of tag names; do I have to specify each of them as a relationship? I hope you are talking about the following approach, which I tried on a smaller file. It split the file into 5 files based on the grouping regex, but it routed all the split files to the unmatched relationship. I think that is fine; I can drive the rest of my process from unmatched.

Now, will it work if the file is huge (100 GB)? Does it need to read the whole file before it splits it into groups?

RouteText properties:

Created 10-26-2016 05:57 PM

Sorry, I didn't see your reply.

Maybe I should use the above approach with SplitText. So it would be a combination of multiple SplitText and RouteText processors? How do I extract the tag name (first column) from the text so that I can use it in the merge process?

Regards,

Sai

Created 10-26-2016 06:11 PM

Use multiple SplitText processors just to get each FlowFile down to a manageable number of lines (not 1 as I suggested above, but not the whole file either). Then use RouteText with the Grouping Regular Expression the way you have it, plus multiple dynamic properties (similar to your TagName above), each with the value you want to match:

Tag1 with value ABC04.PI_B04_EX01_A_STPDATTRG.F_CV

Tag2 with value ABC05.X4_WET_MX_DDR.F_CV

...etc.

Once you apply the changes and reopen the dialog, you should see relationships like Tag1 and Tag2, which you can then route to the appropriate branch of the flow. In each branch, you may need multiple MergeContent processors, as @mclark describes above, to incrementally build up larger files. At the end of each branch, you should have a FlowFile full of entries with the same tag name.
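Routing by dynamic properties can be modeled as follows. This Python sketch assumes RouteText's "Route to each matching Property Name" routing strategy with a "Starts With" style comparison; `route_lines` and the property table are illustrative only, with values taken from the sample data:

```python
from typing import Dict, List

# Hypothetical dynamic properties: relationship name -> value to match.
DYNAMIC_PROPERTIES = {
    "Tag1": "ABC04.PI_B04_EX01_A_STPDATTRG.F_CV",
    "Tag2": "ABC05.X4_WET_MX_DDR.F_CV",
}


def route_lines(lines: List[str], properties: Dict[str, str]) -> Dict[str, List[str]]:
    """Send each line to every relationship whose value it starts with, else 'unmatched'.

    Matching on value + ',' (a choice of this sketch) keeps a tag from also
    matching a longer tag name that happens to share its prefix.
    """
    routed: Dict[str, List[str]] = {name: [] for name in properties}
    routed["unmatched"] = []
    for line in lines:
        hits = [name for name, value in properties.items() if line.startswith(value + ",")]
        for name in hits:
            routed[name].append(line)
        if not hits:
            routed["unmatched"].append(line)
    return routed
```

Each tag needs its own property and relationship, which is why this becomes unwieldy with thousands of tags.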

An alternative is to use SplitText down to one FlowFile per line, then ExtractText to put the tag name in an attribute, then RouteOnAttribute to route the FlowFiles, then MergeContent to build up a single file with all the lines that share the same tag name. This seems slower to me, so I'm hoping the other solution works.

Created 10-26-2016 06:38 PM

If I have 5K tags, it would be difficult to write dynamic properties for all of them. I am trying to see how I can get the tag name dynamically from RouteText; the configuration I posted above is not doing it.

Created on 10-26-2016 07:33 PM - edited 08-18-2019 05:54 AM

You can actually cut the ExtractText processor out of this flow. I forgot that the RouteText processor generates a "RouteText.Group" FlowFile attribute. You can just use that attribute as the "Correlation Attribute Name" in the MergeContent processor.
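The resulting flow (SplitText, then RouteText with the grouping regex, then MergeContent correlated on `RouteText.Group`) can be modeled in a few lines of Python. This is a conceptual sketch, not NiFi code: the grouping regex is an assumption (the original screenshot is not preserved), and the helper names are hypothetical. It shows that a tag whose lines straddle a SplitText chunk boundary still ends up in a single merged result:

```python
import re
from typing import Dict, List, Tuple

# Assumed grouping pattern: the Tagname is everything before the first comma.
GROUPING_REGEX = re.compile(r"^([^,]+),")

FlowFile = Tuple[Dict[str, str], List[str]]  # toy model: (attributes, lines)


def route_text_grouped(chunk: List[str]) -> List[FlowFile]:
    """Model RouteText with grouping: one FlowFile per group, tagged with RouteText.Group."""
    groups: Dict[str, List[str]] = {}
    for line in chunk:
        match = GROUPING_REGEX.match(line)
        if match:  # this sketch ignores lines without a Tagname
            groups.setdefault(match.group(1), []).append(line)
    return [({"RouteText.Group": key}, lines) for key, lines in groups.items()]


def merge_by_attribute(flowfiles: List[FlowFile], attribute: str) -> Dict[str, List[str]]:
    """Model MergeContent with a Correlation Attribute Name: bin by that attribute's value."""
    bins: Dict[str, List[str]] = {}
    for attrs, lines in flowfiles:
        bins.setdefault(attrs[attribute], []).extend(lines)
    return bins


def split_group_merge(lines: List[str], chunk_size: int) -> Dict[str, List[str]]:
    """SplitText -> RouteText -> MergeContent, with no ExtractText step in between."""
    routed: List[FlowFile] = []
    for start in range(0, len(lines), chunk_size):
        routed.extend(route_text_grouped(lines[start:start + chunk_size]))
    return merge_by_attribute(routed, "RouteText.Group")
```

A real MergeContent emits a bin when its entry, size, or Max Bin Age thresholds are reached, so one tag may still come out as several merged files unless those settings are tuned.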