Support Questions


Which big data framework should I use to collect data from FTP/SFTP?

- Labels: Apache Flume, Apache Hadoop, Apache NiFi, HDFS


Hello everyone, I am new to big data frameworks. I am currently working on collecting many types of files from an FTP/SFTP server and putting them into HDFS. I have looked into Apache NiFi and it seems good to go, but the interface does not look good for monitoring because all the flows are in the same place. If anyone has more experience with this, could you recommend the best framework for this task?

Thanks,

Created 12-10-2021 04:25 AM


Hi,

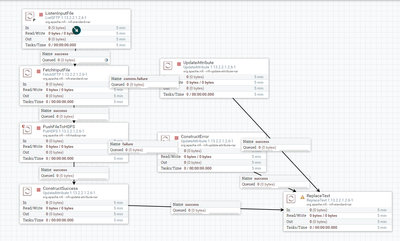

Here is a sample flow that uses the ListSFTP and FetchSFTP processors to put files into a target HDFS path.

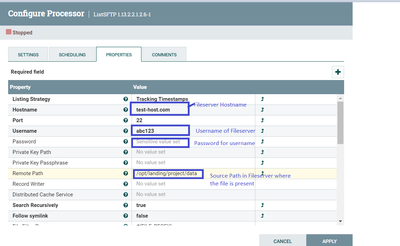

1. ListSFTP processor: keeps polling the input folder on the file-share server, for example /opt/landing/project/data. When a new file arrives, ListSFTP takes only the name of the file (not its content) and passes it to the FetchSFTP processor, which fetches the file from the source folder.

The properties to set in the ListSFTP processor are highlighted in the screenshot.

2. Once ListSFTP has identified the latest file, the FetchSFTP processor fetches that file from the source path.

Properties to configure in the FetchSFTP processor:

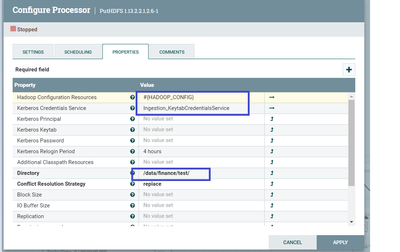

3. In the PutHDFS processor, configure the highlighted values for your project and the required target folder.

If your cluster is Kerberos-enabled, add a Kerberos controller service so that NiFi can access HDFS.

4. The success and failure relationships of the PutHDFS processor can be used to monitor the flow status, and the status can be stored in HBase for querying.
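To make the List/Fetch split in steps 1 and 2 concrete, here is a minimal Python sketch of the same pattern outside NiFi. It is only an illustration under stated assumptions: `RemoteEntry`, `IncrementalLister`, and `run_once` are hypothetical names, the SFTP server and HDFS are replaced by caller-supplied `fetch` and `put` functions, and the timestamp-based state is a simplified approximation of the idea behind ListSFTP, not NiFi's actual implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Tuple


@dataclass(frozen=True)
class RemoteEntry:
    """Hypothetical stand-in for one file seen in the SFTP input folder."""
    path: str
    filename: str
    mtime: int  # modification time in epoch seconds


class IncrementalLister:
    """Simplified ListSFTP-style listing: emit each file once, tracked by timestamp."""

    def __init__(self) -> None:
        self.last_mtime = -1                  # newest modification time emitted so far
        self.names_at_last: Set[str] = set()  # files already emitted at exactly last_mtime

    def list_new(self, entries: List[RemoteEntry]) -> List[Dict[str, str]]:
        new = [e for e in entries
               if e.mtime > self.last_mtime
               or (e.mtime == self.last_mtime and e.filename not in self.names_at_last)]
        if new:
            newest = max(e.mtime for e in new)
            if newest > self.last_mtime:
                self.last_mtime, self.names_at_last = newest, set()
            self.names_at_last |= {e.filename for e in new if e.mtime == newest}
        # Like ListSFTP, pass on attributes only (path + filename), no file content
        return [{"path": e.path, "filename": e.filename} for e in new]


def run_once(lister: IncrementalLister, entries: List[RemoteEntry],
             fetch: Callable[[str], bytes],
             put: Callable[[str, bytes], None]) -> Tuple[List[str], List[str]]:
    """List -> fetch -> put, routing each file to a success or failure list."""
    success: List[str] = []
    failure: List[str] = []
    for attrs in lister.list_new(entries):
        remote_path = f"{attrs['path']}/{attrs['filename']}"
        try:
            put(attrs["filename"], fetch(remote_path))  # FetchSFTP, then PutHDFS
            success.append(attrs["filename"])
        except OSError:
            failure.append(attrs["filename"])           # like the "failure" relationship
    return success, failure


if __name__ == "__main__":
    landing = "/opt/landing/project/data"
    sftp_files = {f"{landing}/a.csv": b"1,2\n", f"{landing}/b.csv": b"3,4\n"}
    hdfs: Dict[str, bytes] = {}  # in-memory stand-in for the HDFS target folder

    def fetch(path: str) -> bytes:
        if path not in sftp_files:
            raise FileNotFoundError(path)
        return sftp_files[path]

    def put(name: str, data: bytes) -> None:
        hdfs[name] = data

    lister = IncrementalLister()
    listing = [RemoteEntry(landing, "a.csv", 100), RemoteEntry(landing, "b.csv", 100)]
    print(run_once(lister, listing, fetch, put))  # first run picks up both files
    print(run_once(lister, listing, fetch, put))  # second run: nothing new to list
```

In this sketch the lister only remembers the newest timestamp, so a file that appears later with an older modification time would be skipped. That trade-off is worth checking against how files actually land in your input folder.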

Created 11-24-2021 11:58 AM


Hi,

We currently use NiFi to pull files from the SFTP server and put them into HDFS with the ListSFTP and FetchSFTP processors. We can track whether each flow run succeeds or fails, and the ingestion status can be stored in persistent storage, for example HBase or HDFS, so we can query the ingestion status at any time.
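The status tracking described above can be sketched as an append-only log of ingestion events that is queried for the latest status per file. This is a hypothetical Python example: it writes JSON lines to a local file as a stand-in for HBase or HDFS, and the function names and field names (`flow`, `filename`, `status`, `ts`) are illustrative, not a NiFi or HBase schema.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict


def record_status(log: Path, flow: str, filename: str, status: str) -> None:
    """Append one ingestion event to a JSON-lines status log (hypothetical schema)."""
    event = {
        "flow": flow,
        "filename": filename,
        "status": status,  # e.g. "success" or "failure", mirroring PutHDFS relationships
        "ts": datetime.now(timezone.utc).isoformat(),
    }
    with log.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(event) + "\n")


def latest_status(log: Path, flow: str) -> Dict[str, str]:
    """Return the most recent status per file for one flow; later lines win."""
    result: Dict[str, str] = {}
    if not log.exists():
        return result
    with log.open(encoding="utf-8") as fh:
        for line in fh:
            event = json.loads(line)
            if event["flow"] == flow:
                result[event["filename"]] = event["status"]
    return result


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log = Path(tmp) / "ingestion_status.jsonl"  # hypothetical log location
        record_status(log, "sftp_to_hdfs", "a.csv", "failure")
        record_status(log, "sftp_to_hdfs", "a.csv", "success")  # retry succeeded
        record_status(log, "sftp_to_hdfs", "b.csv", "failure")
        print(latest_status(log, "sftp_to_hdfs"))  # {'a.csv': 'success', 'b.csv': 'failure'}
```

Keeping every event and letting the latest one win preserves the retry history while still answering "what is the current status of each file?".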

Another option, which we used in previous projects, was to pull the files from NAS storage to the local file system (an edge node or gateway node) with an SFTP copy Unix command, and then put them into HDFS using hdfs commands. The whole process was done in a shell script scheduled through Control-M.
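The edge-node approach can also be sketched in Python (the post describes a shell script). Assumptions: the NAS share is mounted on the edge node so files can be copied directly, in place of the SFTP copy step; `stage_and_put`, the folder names, and the HDFS target `/data/project/landing` are hypothetical; and `dry_run=True` only returns the `hdfs dfs -put` commands instead of running them.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path
from typing import List


def stage_and_put(nas_dir: Path, staging_dir: Path, hdfs_target: str,
                  dry_run: bool = True) -> List[List[str]]:
    """Copy files from a (mounted) NAS folder to an edge-node staging folder, then put them in HDFS.

    Assumes the NAS share is mounted locally. With dry_run=True the
    `hdfs dfs -put` commands are only returned, not executed.
    """
    staging_dir.mkdir(parents=True, exist_ok=True)
    commands: List[List[str]] = []
    for src in sorted(p for p in nas_dir.iterdir() if p.is_file()):
        local = staging_dir / src.name
        shutil.copy2(src, local)  # step 1: NAS -> edge-node local file system
        cmd = ["hdfs", "dfs", "-put", "-f", str(local), hdfs_target]  # step 2: local -> HDFS
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, check=True)  # non-zero exit raises CalledProcessError
    return commands


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        nas = Path(tmp) / "nas"  # stand-in for the mounted NAS share
        nas.mkdir()
        (nas / "a.csv").write_text("1,2\n")
        # "/data/project/landing" is a hypothetical HDFS target folder
        for cmd in stage_and_put(nas, Path(tmp) / "staging", "/data/project/landing"):
            print(" ".join(cmd))
```

Left uncaught, a `CalledProcessError` makes the script exit with a non-zero status, which a scheduler such as Control-M can treat as a failed job.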

By the way, what do you mean by "all flows in the same place"?

We can develop a single NiFi flow that pulls the files from the SFTP server and puts them into the target path in the Hadoop file system.

Created 11-25-2021 01:38 AM


Thanks for the reply. Do you have an example of building an FTP/SFTP flow in NiFi?
