What's the best way to read a multiline CSV and transpose it to columns

Labels: Apache Hadoop, Apache Spark

Created on 06-25-2016 08:39 PM - edited 08-18-2019 05:14 AM

I have a requirement where I need to ingest a multiline CSV file with semi-structured records: some rows need to be converted to columns, and some rows need to become both rows and columns.

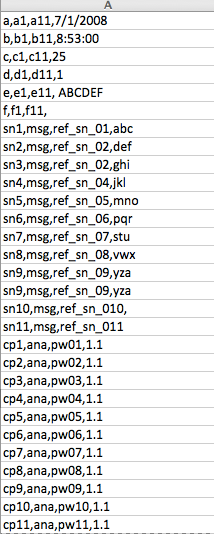

Below is what the input CSV file looks like:

a,a1,a11,7/1/2008

b,b1,b11,8:53:00

c,c1,c11,25

d,d1,d11,1

e,e1,e11, ABCDEF

f,f1,f11,

sn1,msg,ref_sn_01,abc

sn2,msg,ref_sn_02,def

sn3,msg,ref_sn_02,ghi

sn4,msg,ref_sn_04,jkl

sn5,msg,ref_sn_05,mno

sn6,msg,ref_sn_06,pqr

sn7,msg,ref_sn_07,stu

sn8,msg,ref_sn_08,vwx

sn9,msg,ref_sn_09,yza

sn9,msg,ref_sn_09,yza

sn10,msg,ref_sn_010,

sn11,msg,ref_sn_011

cp1,ana,pw01,1.1

cp2,ana,pw02,1.1

cp3,ana,pw03,1.1

cp4,ana,pw04,1.1

cp5,ana,pw05,1.1

cp6,ana,pw06,1.1

cp7,ana,pw07,1.1

cp8,ana,pw08,1.1

cp9,ana,pw09,1.1

cp10,ana,pw10,1.1

cp11,ana,pw11,1.1

Below is the expected output:

Please let me know the best way to read it and load it into HDFS/Hive.

Created 06-26-2016 05:18 AM

This is quite a custom requirement: you are converting some rows to columns and other rows to both rows and columns. You'll have to write a lot of your own code, but you can take advantage of the pivot functionality in Spark. Check the following link.

https://databricks.com/blog/2016/02/09/reshaping-data-with-pivot-in-apache-spark.html

sc.parallelize(rdd.collect.toSeq.transpose)

See the link here for more details.
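
To make the transpose idea concrete, here is a minimal plain-Python sketch of what the one-liner above does once the data has been collected: rows become columns. It is only an illustration, not the Spark code itself. The helper name `transpose` and the choice to pad short rows with an empty string are assumptions. Padding matters here because the sample input contains a three-field row (`sn11,msg,ref_sn_011`), and as far as I know Scala's `transpose` rejects rows of unequal length.

```python
from itertools import zip_longest


def transpose(rows, fill=""):
    """Turn a list of rows into a list of columns.

    Short rows are padded with `fill` (an assumption for this sketch)
    so that ragged input does not silently lose trailing fields.
    """
    return [list(col) for col in zip_longest(*rows, fillvalue=fill)]


rows = [
    ["a", "a1", "a11", "7/1/2008"],
    ["b", "b1", "b11", "8:53:00"],
    ["sn11", "msg", "ref_sn_011"],  # short row taken from the sample input
]

columns = transpose(rows)
for col in columns:
    print(col)
```

Note that `rdd.collect` pulls the whole dataset to the driver, so this style of transpose only suits inputs that fit comfortably in memory on one machine.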

Created 06-27-2016 03:43 PM

Thanks for your response. Yes, it is quite a custom requirement. I thought it was better to check with the community in case anyone has implemented something like this.

I am trying to use either a custom Hadoop InputFormat or Python UDFs to get this done. There seems to be no straightforward way of doing this in Spark. I also cannot use Spark's pivot, as it only supports pivoting on a column as of now, right?
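
For anyone following the Python route, the parsing step might look roughly like the sketch below. It rests on several assumptions, since the expected-output image is not reproduced in the thread: that the rows keyed `a` through `f` are the ones to turn into columns, that the fourth field holds the value to keep, and that every remaining row (`sn*`, `cp*`) stays a row with those header values prepended. The names `HEADER_KEYS` and `reshape` are hypothetical.

```python
import csv
import io

# Assumption: these first-field keys mark the rows that become columns.
HEADER_KEYS = {"a", "b", "c", "d", "e", "f"}


def reshape(text, width=4):
    """Split one file's text into header values and detail rows,
    then prepend the header values to every detail row."""
    header = {}
    details = []
    for fields in csv.reader(io.StringIO(text)):
        if not fields:
            continue  # skip blank lines
        fields = [f.strip() for f in fields]
        fields += [""] * (width - len(fields))  # pad short rows such as sn11
        if fields[0] in HEADER_KEYS:
            header[fields[0]] = fields[3]  # keep the 4th field as the value
        else:
            details.append(fields)
    keys = sorted(header)
    return [[header[k] for k in keys] + row for row in details]


sample = """a,a1,a11,7/1/2008
b,b1,b11,8:53:00
sn1,msg,ref_sn_01,abc
sn11,msg,ref_sn_011
"""

records = reshape(sample)
for record in records:
    print(record)
```

Because the header rows and the detail rows have to be seen together, a function like this needs the whole file at once rather than one line at a time. In PySpark that could mean reading each file whole (for example with `sc.wholeTextFiles`) and applying the function per file, though that wiring is untested here.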