Support Questions

When 200+ indexers are created in the Lily HBase Indexer service, a large number of TIME_WAIT ports appear

- Labels: Apache HBase

Created on 09-28-2018 03:31 PM - edited 08-17-2019 10:10 PM


Version: CDH 5.13.3, corresponding to HBase 1.2.0

Nodes: nine worker nodes, three management nodes

Main role assignment: nine RegionServers, DataNodes, and Solr servers; three of the nodes also run the Lily HBase Indexer

Background: Solr stores the secondary index for HBase, and the index is synchronized automatically through the Lily HBase Indexer

Question:

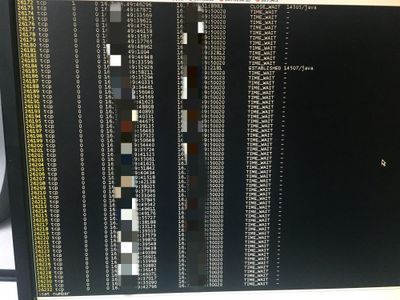

After creating 200+ indexers, we found a large number of ports in TIME_WAIT (almost 30,000), and the RegionServer log contained this message: "Retrying connect to server: xx.xx.com/ipAddress:50020. Already tried 1 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)". When we delete all the indexers, the port count and HBase both return to normal. Our initial suspicion was that multi-WAL in this version of HBase conflicts with the replication functionality. We originally had 3 WALs configured, so we switched to a single WAL and re-created the 200+ indexers. The TIME_WAIT count dropped to about 10,000, but the problem is still not solved. Can you give me some advice?
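To see which remote port the TIME_WAIT sockets point at (for example 50020 from the log line above), one option is to tally them from the kernel's socket table. The following is only a sketch: it assumes a Linux host, where `/proc/net/tcp` (and `/proc/net/tcp6`) list one socket per line with the state in the fourth column and `06` meaning TIME_WAIT. The function name is made up for illustration.

```python
from collections import Counter

# Hex state code that /proc/net/tcp uses for TIME_WAIT sockets.
TIME_WAIT = "06"


def count_time_wait_by_remote_port(proc_net_tcp_text):
    """Count TIME_WAIT sockets per remote port.

    The argument is the full text of /proc/net/tcp or /proc/net/tcp6.
    """
    counts = Counter()
    # The first line is the column header, so skip it.
    for line in proc_net_tcp_text.splitlines()[1:]:
        fields = line.split()
        if len(fields) < 4 or fields[3] != TIME_WAIT:
            continue
        # fields[2] is the remote endpoint as HEXADDR:HEXPORT.
        remote_port = int(fields[2].rsplit(":", 1)[1], 16)
        counts[remote_port] += 1
    return counts
```

On a RegionServer host, passing in the contents of `/proc/net/tcp` and calling `.most_common(10)` on the result shows the ten busiest remote ports. JVM processes often use IPv6 sockets, so `/proc/net/tcp6` may need the same treatment.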

Created 11-27-2018 01:57 PM


Hello,

I've been experiencing a similar problem with a large number of TIME_WAIT sockets. I knew it was related to replication, so I started researching the replication options and found the following:

I had set replication.source.sleepforretries to 1 according to these instructions:

They say that 1 means 1 second, but if you look at the HBase source code, you'll see that the value is in milliseconds, so 1 second should be 1000. After I changed replication.source.sleepforretries from 1 to 1000 with replication enabled, the number of TIME_WAIT sockets dropped back to a normal value.

So check it; maybe you have it set to 1. And to Hortonworks: please fix the docs.
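The check suggested here can be scripted. This is a minimal sketch, assuming the property is set in the usual Hadoop-style `hbase-site.xml` layout (`<property>` elements with `<name>` and `<value>` children). The helper names and the 1000 ms threshold are illustrative choices based on the value reported in this reply, not an official recommendation.

```python
import xml.etree.ElementTree as ET

PROPERTY = "replication.source.sleepforretries"


def read_property(hbase_site_xml, name):
    """Return the value of a property from hbase-site.xml text, or None."""
    root = ET.fromstring(hbase_site_xml)
    for prop in root.iter("property"):
        if prop.findtext("name", "").strip() == name:
            return prop.findtext("value", "").strip()
    return None


def sleep_looks_too_short(hbase_site_xml, min_ms=1000):
    """Flag a retry sleep below min_ms (the value is read as milliseconds)."""
    value = read_property(hbase_site_xml, PROPERTY)
    if value is None:
        # Not set explicitly, so the HBase built-in default applies.
        return False
    return int(value) < min_ms
```

Feeding it the text of the RegionServer's `hbase-site.xml` returns `True` when the property is set to something like 1, which is the case described above.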
