Where are Nifi attributes written?

- Labels: Apache NiFi

Created 12-06-2016 09:10 PM

Some processors' written attributes are readily available in the FlowFile attributes downstream. For example, 'executesql.row.count' is populated after ExecuteSQL. I'm not seeing the same behavior with the attributes of many other processors, such as SplitJson. Are we expected to use a Groovy script or some other custom process to extract these values? A simple example would be appreciated.

Created 12-06-2016 09:31 PM

Each processor is responsible for reading and writing whichever attributes it needs for its own processing, and those attributes are listed in each processor's documentation. SplitJson, for example, writes the following attributes to each output FlowFile:

| Name | Description |
|---|---|
| fragment.identifier | All split FlowFiles produced from the same parent FlowFile will have the same randomly generated UUID added for this attribute |
| fragment.index | A one-up number that indicates the ordering of the split FlowFiles that were created from a single parent FlowFile |
| fragment.count | The number of split FlowFiles generated from the parent FlowFile |
| segment.original.filename | The filename of the parent FlowFile |

These were added to NiFi 1.0.0 (HDF 2.0) under NIFI-2632, so if you are using a version of NiFi/HDF before that, that's why you won't see these attributes populated by SplitJson.
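To make the table concrete, here is a minimal Python sketch that models how such attributes could end up on each split. It is only an illustration of the table above, not NiFi's implementation: the function name `split_json_array` is hypothetical, and the zero-based numbering of `fragment.index` is an assumption, so check the SplitJson documentation for your version.

```python
import json
import uuid


def split_json_array(content, parent_filename):
    """Split a JSON array into one (attributes, content) pair per element.

    Hypothetical model of the attributes in the table above; this is not
    NiFi code.
    """
    elements = json.loads(content)
    if not isinstance(elements, list):
        raise ValueError("expected a JSON array")

    # One identifier shared by every split produced from the same parent.
    fragment_id = str(uuid.uuid4())
    count = len(elements)

    splits = []
    for index, element in enumerate(elements):
        attributes = {
            "fragment.identifier": fragment_id,
            # Assumption: numbering starts at 0; verify against the docs.
            "fragment.index": str(index),
            "fragment.count": str(count),
            "segment.original.filename": parent_filename,
        }
        splits.append((attributes, json.dumps(element)))
    return splits


if __name__ == "__main__":
    for attrs, body in split_json_array('[{"id": 1}, {"id": 2}]', "rows.json"):
        print(attrs["fragment.index"], attrs["fragment.count"], body)
```

Attribute values are stored as strings in this sketch because FlowFile attributes are string key/value pairs.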

Created 12-06-2016 09:18 PM

You access all attributes using the Expression Language, typically to use attributes and values derived from them as property values in a processor:

- https://docs.hortonworks.com/HDPDocuments/HDF1/HDF-1.1.1/bk_HDF_GettingStarted/content/ExpressionLan...

- http://docs.hortonworks.com/HDPDocuments/HDF1/HDF-1.2/bk_ExpressionLanguageGuide/content/ch_expressi...

See this for an excellent overview of attributes, how they change with the lifetime of a flow and how they provide programmatic power in your flows: https://docs.hortonworks.com/HDPDocuments/HDF1/HDF-1.1.1/bk_HDF_GettingStarted/content/working-with-...
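As a rough illustration of what an attribute reference does, the Python sketch below substitutes `${name}` references from an attribute map. It is a deliberately simplified model, not the Expression Language itself: the `resolve` function is hypothetical, functions such as `${filename:toUpper()}` are left untouched, and resolving a missing attribute to an empty string is an assumption of this model.

```python
import re

# Matches ${name}, ${'name'} and ${"name"}; anything with a ':' (a function
# call in the real Expression Language) is deliberately not matched.
_REFERENCE = re.compile(
    r"""\$\{\s*
        (?:'([^']*)'
          |"([^"]*)"
          |([^}:'"\s]+)
        )
        \s*\}
    """,
    re.VERBOSE,
)


def resolve(template, attributes):
    """Replace simple attribute references in template with their values."""

    def lookup(match):
        # Exactly one of the three groups is set for any match.
        name = next(g for g in match.groups() if g is not None)
        # Assumption of this model: an unset attribute becomes "".
        return attributes.get(name, "")

    return _REFERENCE.sub(lookup, template)


if __name__ == "__main__":
    attrs = {"executesql.row.count": "42"}
    print(resolve("rows: ${executesql.row.count}", attrs))
    print(repr(resolve("${fragment.index}", attrs)))
```

In this model, a reference to an attribute that no upstream processor has written simply resolves to nothing, which is one way an empty value can appear downstream.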

If this is what you are looking for, let me know by accepting the answer; else, let me know of any gaps or followup questions.

Created on 12-06-2016 09:52 PM - edited 08-19-2019 01:21 AM

Thanks for the prompt reply. I've also tried to address this question in item:

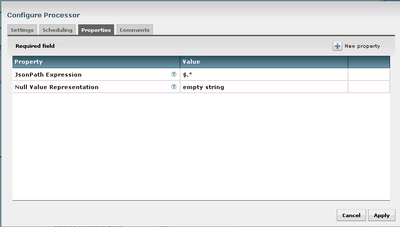

I'm trying to get behavior similar to what can be seen below for 'executesql.row.count' by using a user-defined property (property - iteration : value - ${'fragment.index'}), tried with and without single quotes, and set either before or after 'SplitJson'.

I'm only able to get 'No value set' or 'Empty value set' no matter what I try.

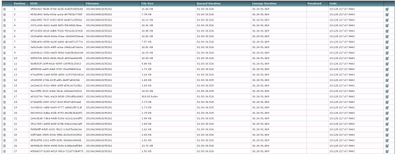

The SplitJson is very straightforward and successfully builds many JSON array objects using the expression below.

All I'm attempting to do is keep track of the queue position, so I can act on the last row to post the last transaction date. The attribute 'queue position' would also be of interest, but it also contains no data.
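For reference, once `fragment.index` and `fragment.count` are populated, identifying the last row reduces to a comparison of the two. The Python sketch below shows that check under stated assumptions: the helper `is_last_fragment` is hypothetical, and `first_index` is a parameter because whether numbering starts at 0 or 1 should be confirmed against the processor documentation.

```python
def is_last_fragment(attributes, first_index=0):
    """Return True if these attributes describe the final split of a parent.

    attributes is a dict of string attribute values. Missing or
    non-numeric values are treated as "not the last fragment".
    """
    try:
        index = int(attributes["fragment.index"])
        count = int(attributes["fragment.count"])
    except (KeyError, ValueError):
        return False
    # The last fragment sits count - 1 positions after the first one.
    return index - first_index == count - 1


if __name__ == "__main__":
    print(is_last_fragment({"fragment.index": "2", "fragment.count": "3"}))
    print(is_last_fragment({}))
```

Note that this identifies the highest-numbered split, which is not necessarily the last one to arrive at a downstream processor.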

Thanks,

~Sean

Created 12-06-2016 09:55 PM

Ah, I see we're at NiFi version 0.5.1.1.1.2.1-34, so that explains why I am not seeing these attributes.

Created 12-09-2016 02:25 PM

For those who may be on a back-level HDF version, as we are, a good workaround is to use SplitContent instead, as it writes many of the attributes Matt has documented above for the SplitJson processor.

Created 12-06-2016 10:13 PM

@Matt Burgess You are correct about my being back level for this support. Can anyone suggest a workaround, such as another processor attribute that could do something similar?