Why can't I run more than 1 query in parallel in Hive?

Labels: Apache Hive

Created on 07-03-2017 02:27 PM - edited 08-17-2019 05:11 PM

I have a small single-node HDP 2.6 cluster (8 CPUs, 32 GB RAM), and I cannot run more than one query at a time, although I was fairly sure I had configured the relevant settings to allow more than one container.

The relevant configs are:

yarn-site/yarn.nodemanager.resource.memory-mb = 27660

yarn-site/yarn.scheduler.minimum-allocation-mb = 5532

yarn-site/yarn.scheduler.maximum-allocation-mb = 27660

mapred-site/mapreduce.map.memory.mb = 5532

mapred-site/mapreduce.reduce.memory.mb = 11064

mapred-site/mapreduce.map.java.opts = -Xmx4425m

mapred-site/mapreduce.reduce.java.opts = -Xmx8851m

mapred-site/yarn.app.mapreduce.am.resource.mb = 11059

mapred-site/yarn.app.mapreduce.am.command-opts = -Xmx8851m -Dhdp.version=${hdp.version}

hive-site/hive.execution.engine = tez

hive-site/hive.tez.container.size = 5532

hive-site/hive.auto.convert.join.noconditionaltask.size = 1546859315

tez-site/tez.runtime.unordered.output.buffer.size-mb = 414

tez-interactive-site/tez.am.resource.memory.mb = 5532

tez-site/tez.am.resource.memory.mb = 5532

tez-site/tez.task.resource.memory.mb = 5532

tez-site/tez.runtime.io.sort.mb = 1351

hive-site/hive.tez.java.opts = -server -Xmx4425m -Djava.net.preferIPv4Stack=true -XX:NewRatio=8 -XX:+UseNUMA -XX:+UseParallelGC -XX:+PrintGCDetails -verbose:gc -XX:+PrintGCTimeStamps

capacity-scheduler/yarn.scheduler.capacity.resource-calculator = org.apache.hadoop.yarn.util.resource.DominantResourceCalculator

yarn-site/yarn.nodemanager.resource.cpu-vcores = 6

yarn-site/yarn.scheduler.maximum-allocation-vcores = 6

mapred-site/mapreduce.map.output.compress = true

hive-site/hive.exec.compress.intermediate = true

hive-site/hive.exec.compress.output = true

hive-interactive-env/enable_hive_interactive = false

If I understand correctly, this gives about 5 GB (5532 MB) per container.

If I run a Hive query, it uses 5 GB and 1 core, leaving about 15 GB and 5 cores for everything else. I do not understand why the next query cannot start at the same time.

Any help would be much appreciated.
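The container arithmetic in the question can be checked with a small sketch. It assumes a simplified rule: YARN rounds each memory request up to a multiple of yarn.scheduler.minimum-allocation-mb and caps it at yarn.scheduler.maximum-allocation-mb, and, because the DominantResourceCalculator is configured, vcores also limit placement at one vcore per container. It ignores the AM limit and queue capacities, and the function names are hypothetical.

```python
# Values copied from the configuration in the question (memory in MB).
NODE_MEMORY_MB = 27660     # yarn.nodemanager.resource.memory-mb
NODE_VCORES = 6            # yarn.nodemanager.resource.cpu-vcores
MIN_ALLOCATION_MB = 5532   # yarn.scheduler.minimum-allocation-mb
MAX_ALLOCATION_MB = 27660  # yarn.scheduler.maximum-allocation-mb


def normalize_request(request_mb: int) -> int:
    """Round a memory request up to a multiple of the minimum allocation
    (ceiling division), then cap it at the maximum allocation."""
    rounded = -(-request_mb // MIN_ALLOCATION_MB) * MIN_ALLOCATION_MB
    return min(rounded, MAX_ALLOCATION_MB)


def containers_that_fit(request_mb: int, vcores_each: int = 1) -> int:
    """Identical containers one node can host; whichever of memory or
    vcores runs out first sets the limit."""
    size_mb = normalize_request(request_mb)
    return min(NODE_MEMORY_MB // size_mb, NODE_VCORES // vcores_each)


def heap_fraction(xmx_mb: int, container_mb: int) -> float:
    """Share of a container's memory given to the JVM heap by -Xmx."""
    return xmx_mb / container_mb


if __name__ == "__main__":
    print(normalize_request(11059))   # 11064: MapReduce AM request, two minimum allocations
    print(containers_that_fit(5532))  # 5: containers of hive.tez.container.size
    print(round(heap_fraction(4425, 5532), 2))  # 0.8
    print(NODE_MEMORY_MB - 2 * normalize_request(5532))  # 16596: left after one Tez AM + one task
```

Under these assumptions the node holds at most five 5532 MB containers, the 11059 MB MapReduce AM request is rounded up to 11064 MB, and the -Xmx values are about 80% of their containers. A Tez query holding one AM and one task container occupies 11064 MB and leaves 16596 MB.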

Created 07-09-2020 04:21 AM

In MapReduce, the reducer output has to wait until all ten mappers have finished. We recommend using Tez.

Created 07-04-2017 06:24 AM

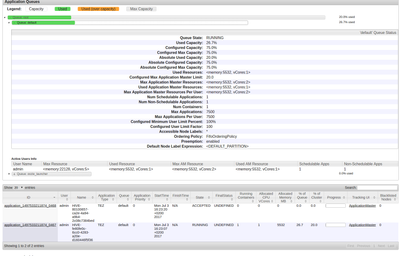

@Guillaume Roger: The issue seems to occur because the maximum ApplicationMaster (AM) resource limit is set to 20%.

Maximum percent of resources in the cluster that can be used to run ApplicationMasters; this controls the number of concurrently active applications. Limits on each queue are directly proportional to their queue capacities and user limits.

Since this limit has been reached, I suspect the scheduler cannot start another ApplicationMaster, so the next query does not run. Can you try increasing yarn.scheduler.capacity.maximum-am-resource-percent (or the per-queue yarn.scheduler.capacity.<queue-path>.maximum-am-resource-percent) to 0.35 (35%) and see if that resolves the issue?
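A back-of-the-envelope sketch of the AM limit described above. It assumes a single queue that owns the whole node (27660 MB), ignores vcores and user limits, and assumes the scheduler always admits at least one AM. Some CapacityScheduler versions round the limit up to a multiple of the minimum allocation, so treat the result as an estimate rather than the scheduler's exact figure. The function names are hypothetical.

```python
def am_limit_mb(cluster_mb: int, am_percent: int) -> int:
    """Memory available to ApplicationMasters. The percentage is a whole
    number (20 means maximum-am-resource-percent = 0.2); integer arithmetic
    avoids floating-point rounding surprises. No rounding up is modelled."""
    return cluster_mb * am_percent // 100


def concurrent_ams(cluster_mb: int, am_percent: int, am_mb: int) -> int:
    """AMs that fit under the limit; assumes at least one is always admitted."""
    return max(1, am_limit_mb(cluster_mb, am_percent) // am_mb)


def percent_needed(cluster_mb: int, am_mb: int, apps: int) -> int:
    """Smallest whole percentage whose AM limit holds `apps` AMs (ceiling division)."""
    return -(-apps * am_mb * 100 // cluster_mb)


if __name__ == "__main__":
    NODE_MB, TEZ_AM_MB = 27660, 5532  # values from the question
    for pct in (20, 35, 40):
        print(pct, am_limit_mb(NODE_MB, pct), concurrent_ams(NODE_MB, pct, TEZ_AM_MB))
    print(percent_needed(NODE_MB, TEZ_AM_MB, 2))
```

In this model, 20% of 27660 MB is exactly 5532 MB, i.e. one Tez AM. 35% (9681 MB) still admits only one 5532 MB AM unless the scheduler rounds the limit up, and two AMs need at least 40%.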

Created 07-04-2017 01:22 PM

@Vani, thanks for your answer.

I do not see an immediate change, but I will keep looking in this direction.

What would be a sensible value for maximum-am-resource-percent?

Currently the AM memory (tez-site/tez.am.resource.memory.mb) is set to the minimum container size (5 GB in my case). Does that make sense?

Created 07-04-2017 02:32 PM

If the AM memory (tez-site/tez.am.resource.memory.mb) is set to the minimum container size, only one application can be launched at any given time. Please tune this property according to your requirements.
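To illustrate how the AM size interacts with the AM limit, here is a sketch under the same assumptions as before: one queue owning 27660 MB, a 20% AM limit, and at least one AM always admitted. It also assumes the AM request is rounded up to a multiple of yarn.scheduler.minimum-allocation-mb, so lowering tez.am.resource.memory.mb below the minimum allocation would not, by itself, let more AMs fit. The 2048 MB AM and the 1024 MB minimum allocation are hypothetical comparison values, and the function names are hypothetical too.

```python
def normalize_mb(request_mb: int, min_allocation_mb: int) -> int:
    """Round a request up to a multiple of the minimum allocation."""
    return -(-request_mb // min_allocation_mb) * min_allocation_mb


def ams_for_am_size(cluster_mb: int, am_percent: int,
                    am_request_mb: int, min_allocation_mb: int) -> int:
    """AMs that fit under the AM limit once the AM request is normalized;
    at least one AM is assumed to be admitted."""
    am_mb = normalize_mb(am_request_mb, min_allocation_mb)
    limit_mb = cluster_mb * am_percent // 100
    return max(1, limit_mb // am_mb)


if __name__ == "__main__":
    # (tez.am.resource.memory.mb, yarn.scheduler.minimum-allocation-mb);
    # 2048 and 1024 are hypothetical comparison values.
    for am_request, min_alloc in [(5532, 5532), (2048, 5532), (2048, 1024)]:
        print(am_request, min_alloc, ams_for_am_size(27660, 20, am_request, min_alloc))
    # prints: 5532 5532 1 / 2048 5532 1 / 2048 1024 2
```

In this model, a smaller AM only helps when the minimum allocation is small enough not to round it back up; note that lowering the minimum allocation changes the rounding for every container, not just AMs.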

Created 07-06-2017 10:44 AM

@Vani, I am trying to understand what this memory is used for. My understanding is that:

- every application requires its own AM

- one AM uses only 1 container

- tez-site/tez.am.resource.memory.mb defines the memory usable by all AMs combined

So logically:

- total AM memory should never be more than half of the available memory (for the worst-case scenario where every application uses only one container)

- I should set tez-site/tez.am.resource.memory.mb to (minimum container size × expected number of applications)

Could you confirm my understanding?

Created 07-10-2017 04:55 AM

I hope the link below helps:

https://community.hortonworks.com/articles/14309/demystify-tez-tuning-step-by-step.html

Created 07-11-2019 12:06 AM

@Guillaume Roger, did you manage to sort your issue out? I am facing the same problem, i.e. I am unable to run 2 concurrent jobs although there is enough memory to spin up new containers.

Any help will be highly appreciated.

Created 07-07-2020 11:37 PM

I am stuck with the same issue on HDP 3.0.1 and am still searching for the correct parameters to set.

The platform is Community Edition on PowerPC.

Created 07-08-2020 01:38 AM

There are two kinds of threads: foreground threads and background threads.

The foreground thread is used for parsing/compilation. hive.server2.async.exec.threads determines how many concurrent threads HiveServer2 can handle; it defaults to 500.

The background thread comes after the compilation/parsing phase, when it runs the Tez jobs. This depends on the number of Tez AMs.

In this case, you need to increase the number of Tez AMs:

hive.server2.tez.sessions.per.default.queue=2

So if you have one HiveServer2 instance, it will run 2 background threads.
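A simplified model of how these limits might combine, for illustration only: a query runs only if a HiveServer2 background thread, a Tez session, and room for that session's AM under the YARN AM limit discussed earlier in the thread are all available. It assumes pre-launched default sessions (hive.server2.tez.initialize.default.sessions=true) and one AM per Tez session. HiveServer2Setup and the helper functions are hypothetical names, not a Hive API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HiveServer2Setup:
    """Hypothetical container for the settings discussed in this thread."""
    instances: int             # number of HiveServer2 instances
    default_queues: list[str]  # hive.server2.tez.default.queues
    sessions_per_queue: int    # hive.server2.tez.sessions.per.default.queue
    async_exec_threads: int    # hive.server2.async.exec.threads (per instance)


def tez_sessions(setup: HiveServer2Setup) -> int:
    """Pre-launched Tez sessions: one pool per instance, per default queue."""
    return setup.instances * len(setup.default_queues) * setup.sessions_per_queue


def max_parallel_queries(setup: HiveServer2Setup, am_mb: int, am_limit_mb: int) -> int:
    """Upper bound on concurrently running queries in this simplified model.
    Each running query needs a background thread and a Tez session, and each
    session's AM must fit under the YARN AM limit (at least one AM is admitted)."""
    threads = setup.async_exec_threads * setup.instances
    ams_that_fit = max(1, am_limit_mb // am_mb)
    return min(threads, tez_sessions(setup), ams_that_fit)


if __name__ == "__main__":
    two_sessions = HiveServer2Setup(1, ["default"], 2, 500)
    four_sessions = HiveServer2Setup(1, ["default"], 4, 500)
    print(max_parallel_queries(two_sessions, 5532, 5532))   # 1
    print(max_parallel_queries(four_sessions, 5532, 5532))  # 1
    print(max_parallel_queries(two_sessions, 5532, 11064))  # 2
```

With one HiveServer2 instance, one default queue, and 2 sessions, the model still allows only one query at a time when the AM limit is 5532 MB; raising the sessions to 4 does not change that, whereas an AM limit of 11064 MB allows two.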

Please refer to the document below for more information:

Created 07-09-2020 02:50 AM

I already have hive.server2.tez.sessions.per.default.queue=4 ...