Support Questions - Cloudera Community

YARN Registry DNS Start failed (Hortonworks 3)

- Labels:
  - Apache Ambari
  - Apache Hive
  - Apache YARN

Created on 10-23-2018 06:28 AM - edited 08-17-2019 07:46 PM

Hi all,

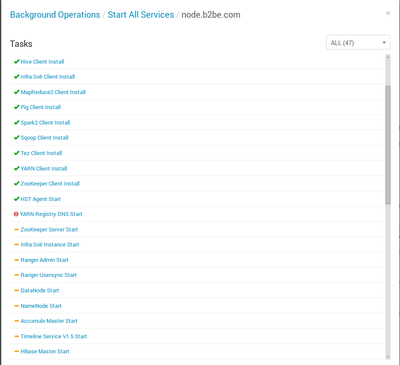

Currently, the YARN Registry DNS component fails to start, and I have no idea why (see the attached screenshot).

Here is the task log:

stderr: /var/lib/ambari-agent/data/errors-224.txt

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/YARN/package/scripts/yarn_registry_dns.py", line 93, in <module>

RegistryDNS().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 351, in execute

method(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/YARN/package/scripts/yarn_registry_dns.py", line 53, in start

service('registrydns',action='start')

File "/usr/lib/ambari-agent/lib/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/YARN/package/scripts/service.py", line 93, in service

Execute(daemon_cmd, user = usr, not_if = check_process)

File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 166, in __init__

self.env.run()

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line 263, in action_run

returns=self.resource.returns)

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 72, in inner

result = function(command, **kwargs)

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 102, in checked_call

tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy, returns=returns)

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 150, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 314, in _call

raise ExecutionFailed(err_msg, code, out, err)

resource_management.core.exceptions.ExecutionFailed: Execution of 'ulimit -c unlimited; export HADOOP_LIBEXEC_DIR=/usr/hdp/3.0.1.0-187/hadoop/libexec && /usr/hdp/3.0.1.0-187/hadoop-yarn/bin/yarn --config /usr/hdp/3.0.1.0-187/hadoop/conf --daemon start registrydns' returned 1. mesg: ttyname failed: Inappropriate ioctl for device

ERROR: Cannot set priority of registrydns process 30580

stdout: /var/lib/ambari-agent/data/output-224.txt

2018-10-23 13:51:36,482 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-23 13:51:36,493 - Using hadoop conf dir: /usr/hdp/3.0.1.0-187/hadoop/conf

2018-10-23 13:51:36,640 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-23 13:51:36,644 - Using hadoop conf dir: /usr/hdp/3.0.1.0-187/hadoop/conf

2018-10-23 13:51:36,646 - Group['livy'] {}

2018-10-23 13:51:36,646 - Group['spark'] {}

2018-10-23 13:51:36,646 - Group['ranger'] {}

2018-10-23 13:51:36,647 - Group['hdfs'] {}

2018-10-23 13:51:36,647 - Group['zeppelin'] {}

2018-10-23 13:51:36,647 - Group['hadoop'] {}

2018-10-23 13:51:36,647 - Group['users'] {}

2018-10-23 13:51:36,647 - Group['knox'] {}

2018-10-23 13:51:36,648 - User['yarn-ats'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,648 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,649 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,650 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,650 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,651 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,652 - User['ranger'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['ranger', 'hadoop'], 'uid': None}

2018-10-23 13:51:36,653 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2018-10-23 13:51:36,653 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['zeppelin', 'hadoop'], 'uid': None}

2018-10-23 13:51:36,654 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,654 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['livy', 'hadoop'], 'uid': None}

2018-10-23 13:51:36,655 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['spark', 'hadoop'], 'uid': None}

2018-10-23 13:51:36,655 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2018-10-23 13:51:36,656 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,656 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop'], 'uid': None}

2018-10-23 13:51:36,657 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,657 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,658 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,659 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-23 13:51:36,659 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'knox'], 'uid': None}

2018-10-23 13:51:36,660 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-10-23 13:51:36,661 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2018-10-23 13:51:36,664 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2018-10-23 13:51:36,665 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}

2018-10-23 13:51:36,665 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-10-23 13:51:36,666 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-10-23 13:51:36,666 - call['/var/lib/ambari-agent/tmp/changeUid.sh hbase'] {}

2018-10-23 13:51:36,670 - call returned (0, '1023')

2018-10-23 13:51:36,671 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1023'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2018-10-23 13:51:36,676 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1023'] due to not_if

2018-10-23 13:51:36,676 - Group['hdfs'] {}

2018-10-23 13:51:36,677 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop', u'hdfs']}

2018-10-23 13:51:36,677 - FS Type: HDFS

2018-10-23 13:51:36,677 - Directory['/etc/hadoop'] {'mode': 0755}

2018-10-23 13:51:36,686 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2018-10-23 13:51:36,686 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2018-10-23 13:51:36,705 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2018-10-23 13:51:36,709 - Skipping Execute[('setenforce', '0')] due to not_if

2018-10-23 13:51:36,709 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}

2018-10-23 13:51:36,710 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}

2018-10-23 13:51:36,711 - Directory['/var/run/hadoop/hdfs'] {'owner': 'hdfs', 'cd_access': 'a'}

2018-10-23 13:51:36,711 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}

2018-10-23 13:51:36,713 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2018-10-23 13:51:36,713 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2018-10-23 13:51:36,718 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2018-10-23 13:51:36,725 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/hadoop-metrics2.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2018-10-23 13:51:36,726 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2018-10-23 13:51:36,726 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}

2018-10-23 13:51:36,728 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop', 'mode': 0644}

2018-10-23 13:51:36,730 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2018-10-23 13:51:36,732 - Skipping unlimited key JCE policy check and setup since it is not required

2018-10-23 13:51:36,957 - Using hadoop conf dir: /usr/hdp/3.0.1.0-187/hadoop/conf

2018-10-23 13:51:36,957 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-23 13:51:36,972 - Using hadoop conf dir: /usr/hdp/3.0.1.0-187/hadoop/conf

2018-10-23 13:51:36,978 - Directory['/var/log/hadoop-yarn'] {'group': 'hadoop', 'cd_access': 'a', 'create_parents': True, 'ignore_failures': True, 'mode': 0775, 'owner': 'yarn'}

2018-10-23 13:51:36,979 - Directory['/var/run/hadoop-yarn'] {'owner': 'yarn', 'create_parents': True, 'group': 'hadoop', 'cd_access': 'a'}

2018-10-23 13:51:36,979 - Directory['/var/run/hadoop-yarn/yarn'] {'owner': 'yarn', 'create_parents': True, 'group': 'hadoop', 'cd_access': 'a'}

2018-10-23 13:51:36,979 - Directory['/var/log/hadoop-yarn/yarn'] {'owner': 'yarn', 'group': 'hadoop', 'create_parents': True, 'cd_access': 'a'}

2018-10-23 13:51:36,980 - Directory['/var/run/hadoop-mapreduce'] {'owner': 'mapred', 'create_parents': True, 'group': 'hadoop', 'cd_access': 'a'}

2018-10-23 13:51:36,980 - Directory['/var/run/hadoop-mapreduce/mapred'] {'owner': 'mapred', 'create_parents': True, 'group': 'hadoop', 'cd_access': 'a'}

2018-10-23 13:51:36,980 - Directory['/var/log/hadoop-mapreduce'] {'owner': 'mapred', 'create_parents': True, 'group': 'hadoop', 'cd_access': 'a'}

2018-10-23 13:51:36,981 - Directory['/var/log/hadoop-mapreduce/mapred'] {'owner': 'mapred', 'group': 'hadoop', 'create_parents': True, 'cd_access': 'a'}

2018-10-23 13:51:36,981 - Directory['/usr/hdp/3.0.1.0-187/hadoop/conf/embedded-yarn-ats-hbase'] {'owner': 'yarn-ats', 'group': 'hadoop', 'create_parents': True, 'cd_access': 'a'}

2018-10-23 13:51:36,987 - Directory['/usr/lib/ambari-logsearch-logfeeder/conf'] {'create_parents': True, 'mode': 0755, 'cd_access': 'a'}

2018-10-23 13:51:36,987 - Generate Log Feeder config file: /usr/lib/ambari-logsearch-logfeeder/conf/input.config-yarn.json

2018-10-23 13:51:36,987 - File['/usr/lib/ambari-logsearch-logfeeder/conf/input.config-yarn.json'] {'content': Template('input.config-yarn.json.j2'), 'mode': 0644}

2018-10-23 13:51:36,988 - XmlConfig['core-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {u'final': {u'fs.defaultFS': u'true'}}, 'owner': 'hdfs', 'configurations': ...}

2018-10-23 13:51:36,993 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/core-site.xml

2018-10-23 13:51:36,993 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/core-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,011 - Writing File['/usr/hdp/3.0.1.0-187/hadoop/conf/core-site.xml'] because contents don't match

2018-10-23 13:51:37,011 - XmlConfig['hdfs-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {u'final': {u'dfs.datanode.failed.volumes.tolerated': u'true', u'dfs.datanode.data.dir': u'true', u'dfs.namenode.http-address': u'true', u'dfs.namenode.name.dir': u'true', u'dfs.webhdfs.enabled': u'true'}}, 'owner': 'hdfs', 'configurations': ...}

2018-10-23 13:51:37,015 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/hdfs-site.xml

2018-10-23 13:51:37,015 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/hdfs-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,045 - XmlConfig['mapred-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'yarn', 'configurations': ...}

2018-10-23 13:51:37,049 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/mapred-site.xml

2018-10-23 13:51:37,049 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/mapred-site.xml'] {'owner': 'yarn', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,074 - Changing owner for /usr/hdp/3.0.1.0-187/hadoop/conf/mapred-site.xml from 1022 to yarn

2018-10-23 13:51:37,075 - XmlConfig['yarn-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {u'hidden': {u'hadoop.registry.dns.bind-port': u'true'}}, 'owner': 'yarn', 'configurations': ...}
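The `yarn-site.xml` entry above marks `hadoop.registry.dns.bind-port` as hidden, so the port Registry DNS is configured to bind never appears in this log. A minimal Python sketch for reading it back from the generated file, assuming the standard Hadoop site-file layout of `<configuration>`, `<property>`, `<name>` and `<value>` elements (the helper name `read_hadoop_property` is hypothetical, not an Ambari or Hadoop API):

```python
import xml.etree.ElementTree as ET
from typing import Optional, Union


def read_hadoop_property(xml_data: Union[str, bytes], name: str) -> Optional[str]:
    """Return the <value> text of the Hadoop property called `name`, or None.

    Assumes the usual Hadoop site-file layout:
    <configuration><property><name>...</name><value>...</value></property></configuration>
    """
    root = ET.fromstring(xml_data)
    # iter() walks all descendants, so the exact nesting depth does not matter.
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            value = prop.findtext("value")
            return value.strip() if value is not None else None
    return None
```

For example, passing the bytes of `/usr/hdp/3.0.1.0-187/hadoop/conf/yarn-site.xml` (path taken from the log) together with `"hadoop.registry.dns.bind-port"` should return the configured port as a string, or `None` if the property is absent.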

2018-10-23 13:51:37,079 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/yarn-site.xml

2018-10-23 13:51:37,079 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/yarn-site.xml'] {'owner': 'yarn', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,174 - XmlConfig['capacity-scheduler.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'yarn', 'configurations': ...}

2018-10-23 13:51:37,180 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/capacity-scheduler.xml

2018-10-23 13:51:37,181 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/capacity-scheduler.xml'] {'owner': 'yarn', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,193 - Changing owner for /usr/hdp/3.0.1.0-187/hadoop/conf/capacity-scheduler.xml from 1019 to yarn

2018-10-23 13:51:37,193 - XmlConfig['hbase-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf/embedded-yarn-ats-hbase', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'yarn-ats', 'configurations': ...}

2018-10-23 13:51:37,199 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/embedded-yarn-ats-hbase/hbase-site.xml

2018-10-23 13:51:37,199 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/embedded-yarn-ats-hbase/hbase-site.xml'] {'owner': 'yarn-ats', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,221 - XmlConfig['resource-types.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'yarn', 'configurations': {u'yarn.resource-types.yarn.io_gpu.maximum-allocation': u'8', u'yarn.resource-types': u''}}

2018-10-23 13:51:37,226 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/resource-types.xml

2018-10-23 13:51:37,226 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/resource-types.xml'] {'owner': 'yarn', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,228 - File['/etc/security/limits.d/yarn.conf'] {'content': Template('yarn.conf.j2'), 'mode': 0644}

2018-10-23 13:51:37,229 - File['/etc/security/limits.d/mapreduce.conf'] {'content': Template('mapreduce.conf.j2'), 'mode': 0644}

2018-10-23 13:51:37,233 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/yarn-env.sh'] {'content': InlineTemplate(...), 'owner': 'yarn', 'group': 'hadoop', 'mode': 0755}

2018-10-23 13:51:37,233 - File['/usr/hdp/3.0.1.0-187/hadoop-yarn/bin/container-executor'] {'group': 'hadoop', 'mode': 02050}

2018-10-23 13:51:37,235 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/container-executor.cfg'] {'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644}

2018-10-23 13:51:37,236 - Directory['/cgroups_test/cpu'] {'group': 'hadoop', 'create_parents': True, 'mode': 0755, 'cd_access': 'a'}

2018-10-23 13:51:37,237 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/mapred-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'mode': 0755}

2018-10-23 13:51:37,239 - Directory['/var/log/hadoop-yarn/nodemanager/recovery-state'] {'owner': 'yarn', 'group': 'hadoop', 'create_parents': True, 'mode': 0755, 'cd_access': 'a'}

2018-10-23 13:51:37,241 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/taskcontroller.cfg'] {'content': Template('taskcontroller.cfg.j2'), 'owner': 'hdfs'}

2018-10-23 13:51:37,241 - XmlConfig['mapred-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'mapred', 'configurations': ...}

2018-10-23 13:51:37,246 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/mapred-site.xml

2018-10-23 13:51:37,247 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/mapred-site.xml'] {'owner': 'mapred', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,271 - Changing owner for /usr/hdp/3.0.1.0-187/hadoop/conf/mapred-site.xml from 1021 to mapred

2018-10-23 13:51:37,272 - XmlConfig['capacity-scheduler.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hdfs', 'configurations': ...}

2018-10-23 13:51:37,276 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/capacity-scheduler.xml

2018-10-23 13:51:37,277 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/capacity-scheduler.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,284 - Changing owner for /usr/hdp/3.0.1.0-187/hadoop/conf/capacity-scheduler.xml from 1021 to hdfs

2018-10-23 13:51:37,284 - XmlConfig['ssl-client.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hdfs', 'configurations': ...}

2018-10-23 13:51:37,290 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/ssl-client.xml

2018-10-23 13:51:37,290 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,294 - Directory['/usr/hdp/3.0.1.0-187/hadoop/conf/secure'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'cd_access': 'a'}

2018-10-23 13:51:37,295 - XmlConfig['ssl-client.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf/secure', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hdfs', 'configurations': ...}

2018-10-23 13:51:37,299 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/secure/ssl-client.xml

2018-10-23 13:51:37,299 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/secure/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,302 - XmlConfig['ssl-server.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hdfs', 'configurations': ...}

2018-10-23 13:51:37,306 - Generating config: /usr/hdp/3.0.1.0-187/hadoop/conf/ssl-server.xml

2018-10-23 13:51:37,306 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/ssl-server.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-23 13:51:37,310 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/ssl-client.xml.example'] {'owner': 'mapred', 'group': 'hadoop', 'mode': 0644}

2018-10-23 13:51:37,310 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/ssl-server.xml.example'] {'owner': 'mapred', 'group': 'hadoop', 'mode': 0644}

2018-10-23 13:51:37,311 - Execute['export HADOOP_LIBEXEC_DIR=/usr/hdp/3.0.1.0-187/hadoop/libexec && /usr/hdp/3.0.1.0-187/hadoop-yarn/bin/yarn --config /usr/hdp/3.0.1.0-187/hadoop/conf --daemon stop registrydns'] {'only_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop-yarn/yarn/hadoop-yarn-root-registrydns.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop-yarn/yarn/hadoop-yarn-root-registrydns.pid', 'user': 'root'}

2018-10-23 13:51:37,317 - Skipping Execute['export HADOOP_LIBEXEC_DIR=/usr/hdp/3.0.1.0-187/hadoop/libexec && /usr/hdp/3.0.1.0-187/hadoop-yarn/bin/yarn --config /usr/hdp/3.0.1.0-187/hadoop/conf --daemon stop registrydns'] due to only_if

2018-10-23 13:51:37,317 - call['! ( ambari-sudo.sh -H -E test -f /var/run/hadoop-yarn/yarn/hadoop-yarn-root-registrydns.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop-yarn/yarn/hadoop-yarn-root-registrydns.pid )'] {'tries': 5, 'try_sleep': 5, 'env': {'HADOOP_LIBEXEC_DIR': '/usr/hdp/3.0.1.0-187/hadoop/libexec'}}

2018-10-23 13:51:37,322 - call returned (0, '')

2018-10-23 13:51:37,322 - Execute['export HADOOP_LIBEXEC_DIR=/usr/hdp/3.0.1.0-187/hadoop/libexec && /usr/hdp/3.0.1.0-187/hadoop-yarn/bin/yarn --config /usr/hdp/3.0.1.0-187/hadoop/conf --daemon stop registrydns'] {'only_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop-yarn/yarn/hadoop-yarn-registrydns.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop-yarn/yarn/hadoop-yarn-registrydns.pid', 'user': 'yarn'}

2018-10-23 13:51:37,325 - Skipping Execute['export HADOOP_LIBEXEC_DIR=/usr/hdp/3.0.1.0-187/hadoop/libexec && /usr/hdp/3.0.1.0-187/hadoop-yarn/bin/yarn --config /usr/hdp/3.0.1.0-187/hadoop/conf --daemon stop registrydns'] due to only_if

2018-10-23 13:51:37,325 - call['! ( ambari-sudo.sh -H -E test -f /var/run/hadoop-yarn/yarn/hadoop-yarn-registrydns.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop-yarn/yarn/hadoop-yarn-registrydns.pid )'] {'tries': 5, 'try_sleep': 5, 'env': {'PATH': '/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin:/var/lib/ambari-agent:/var/lib/ambari-agent', 'HADOOP_LIBEXEC_DIR': '/usr/hdp/3.0.1.0-187/hadoop/libexec'}}

2018-10-23 13:51:37,328 - call returned (0, '')

2018-10-23 13:51:37,329 - Execute['ulimit -c unlimited; export HADOOP_LIBEXEC_DIR=/usr/hdp/3.0.1.0-187/hadoop/libexec && /usr/hdp/3.0.1.0-187/hadoop-yarn/bin/yarn --config /usr/hdp/3.0.1.0-187/hadoop/conf --daemon start registrydns'] {'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop-yarn/yarn/hadoop-yarn-root-registrydns.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop-yarn/yarn/hadoop-yarn-root-registrydns.pid', 'user': 'root'}
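Ambari skips this start command only when its `not_if` guard succeeds, i.e. the pid file `/var/run/hadoop-yarn/yarn/hadoop-yarn-root-registrydns.pid` exists and `pgrep -F` finds a live process with that PID. As far as I know, Hadoop 3's shell daemon wrapper prints "Cannot set priority of registrydns process 30580" when `renice` on the freshly launched PID fails, which usually means that process had already exited. A rough Python equivalent of the guard, useful for confirming whether the daemon is still alive right after a start attempt, is sketched below; the helper names are hypothetical, and `os.kill(pid, 0)` stands in for `pgrep` as a liveness probe:

```python
import os
from pathlib import Path


def pid_is_alive(pid: int) -> bool:
    """Probe a PID with signal 0: nothing is delivered, only existence is checked."""
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False  # no such process
    except PermissionError:
        return True  # process exists but belongs to another user (e.g. root)
    return True


def pidfile_process_running(pidfile: str) -> bool:
    """Approximate `test -f PIDFILE && pgrep -F PIDFILE`."""
    path = Path(pidfile)
    if not path.is_file():
        return False
    try:
        pid = int(path.read_text().split()[0])
    except (IndexError, ValueError):
        return False  # empty or non-numeric pid file
    # Guard against 0 or negative values, which os.kill treats as process groups.
    return pid > 0 and pid_is_alive(pid)
```

Calling `pidfile_process_running("/var/run/hadoop-yarn/yarn/hadoop-yarn-root-registrydns.pid")` a few seconds after the start returning `False` would be consistent with the daemon dying during startup, which points at its `.log`/`.out` files for the real cause.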

2018-10-23 13:51:44,426 - Execute['find /var/log/hadoop-yarn/yarn -maxdepth 1 -type f -name '*' -exec echo '==> {} <==' \; -exec tail -n 40 {} \;'] {'logoutput': True, 'ignore_failures': True, 'user': 'root'}

mesg: ttyname failed: Inappropriate ioctl for device

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-root-registrydns-node.b2be.com.out.4 <==

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-root-registrydns-MYPTJ020DT-Linux.out <==

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-root-registrydns-MYPTJ020DT-Linux.out.2 <==

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-root-registrydns-node.b2be.com.log <==

at sun.nio.ch.DatagramChannelImpl.bind(DatagramChannelImpl.java:691)

at sun.nio.ch.DatagramSocketAdaptor.bind(DatagramSocketAdaptor.java:91)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1014)

... 8 more
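The stack trace in `hadoop-yarn-root-registrydns-node.b2be.com.log` ends inside `DatagramChannelImpl.bind`, called from `RegistryDNS.openUDPChannel`, so Registry DNS could not bind its UDP socket. The root-cause line is cut off in the tail above, but a common reason for such a bind failure is that another service already holds the port. A small Python sketch to test whether a UDP port can be bound on this host; the port you pass in is an assumption on your side (use the value of `hadoop.registry.dns.bind-port`), and ports below 1024 can only be bound as root:

```python
import errno
import socket


def udp_port_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if a UDP socket can bind (host, port), False if the address is in use.

    Other errors (for example PermissionError when binding a port below 1024
    without root) are re-raised so they are not mistaken for "port in use".
    SO_REUSEADDR is deliberately not set, so an existing binding is detected.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.bind((host, port))
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            return False
        raise
    finally:
        sock.close()
    return True
```

If this returns `False` for the configured port when run as root, something else (another DNS server or a local resolver) is probably listening there; `ss -ulnp` shows which process owns it, and the fix would be to stop that service or change `hadoop.registry.dns.bind-port`.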

2018-10-23 13:51:37,810 INFO dns.PrivilegedRegistryDNSStarter (LogAdapter.java:info(51)) - STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting RegistryDNSServer

STARTUP_MSG: host = node.b2be.com/10.0.5.177

STARTUP_MSG: args = []

STARTUP_MSG: version = 3.1.1.3.0.1.0-187

STARTUP_MSG: classpath = /usr/hdp/3.0.1.0-187/hadoop/conf:/usr/hdp/3.0.1.0-187/hadoop/lib/accessors-smart-1.2.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/asm-5.0.4.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/avro-1.7.7.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-beanutils-1.9.3.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-cli-1.2.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-codec-1.11.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-collections-3.2.2.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-compress-1.4.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-configuration2-2.1.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-io-2.5.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-lang-2.6.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-lang3-3.4.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-logging-1.1.3.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-math3-3.1.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/commons-net-3.6.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/curator-client-2.12.0.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/curator-framework-2.12.0.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/curator-recipes-2.12.0.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/gson-2.2.4.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/guava-11.0.2.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/htrace-core4-4.1.0-incubating.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/httpclient-4.5.2.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/httpcore-4.4.4.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jackson-annotations-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jackson-core-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jackson-databind-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jackson-jaxrs-1.9.13.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jackson-xc-1.9.13.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/javax.servlet-api-3.1.0.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jaxb-api-2.2.11.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jaxb-impl-2.2.3-1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jcip-annotations-1.0-1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jersey
-core-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jersey-json-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jersey-server-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jersey-servlet-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jettison-1.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jetty-http-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jetty-io-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jetty-security-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jetty-server-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jetty-servlet-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jetty-util-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jetty-webapp-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jetty-xml-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jsch-0.1.54.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/json-smart-2.3.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jsp-api-2.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jsr305-3.0.0.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jsr311-api-1.1.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/jul-to-slf4j-1.7.25.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-admin-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-client-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-common-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-core-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-crypto-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-identity-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-server-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-simplekdc-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerb-util-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerby-asn1-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerby-config-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerby-pkix-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerby-util-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/kerby-xdr-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/log4j-1.2.17.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/metrics-core-3.2.4.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/netty-3.10.5.Final.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/nimb
us-jose-jwt-4.41.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/paranamer-2.3.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/protobuf-java-2.5.0.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/ranger-hdfs-plugin-shim-1.1.0.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/ranger-plugin-classloader-1.1.0.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/ranger-yarn-plugin-shim-1.1.0.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/re2j-1.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/slf4j-api-1.7.25.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/slf4j-log4j12-1.7.25.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/snappy-java-1.0.5.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/stax2-api-3.1.4.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/token-provider-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/woodstox-core-5.0.3.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/xz-1.0.jar:/usr/hdp/3.0.1.0-187/hadoop/lib/zookeeper-3.4.6.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-annotations-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-annotations.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-auth-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-auth.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-azure-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-azure-datalake-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-azure-datalake.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-azure.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-common-3.1.1.3.0.1.0-187-tests.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-common-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-common-tests.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-common.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-kms-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-kms.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-nfs-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop/.//hadoop-nfs.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/./:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/accessors-smart-1.2.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/asm-5.0.4.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/avro-1.7.7.jar:/usr/h
dp/3.0.1.0-187/hadoop-hdfs/lib/commons-beanutils-1.9.3.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-cli-1.2.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-codec-1.11.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-collections-3.2.2.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-compress-1.4.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-configuration2-2.1.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-daemon-1.0.13.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-io-2.5.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-lang-2.6.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-lang3-3.4.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-logging-1.1.3.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-math3-3.1.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/commons-net-3.6.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/curator-client-2.12.0.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/curator-framework-2.12.0.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/curator-recipes-2.12.0.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/gson-2.2.4.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/guava-11.0.2.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/htrace-core4-4.1.0-incubating.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/httpclient-4.5.2.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/httpcore-4.4.4.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jackson-annotations-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jackson-core-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jackson-databind-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jackson-jaxrs-1.9.13.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jackson-xc-1.9.13.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/javax.servlet-api-3.1.0.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jaxb-api-2.2.11.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jaxb-impl-2.2.3-1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jcip-annotations-1.0-1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/
lib/jersey-core-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jersey-json-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jersey-server-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jersey-servlet-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jettison-1.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-http-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-io-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-security-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-server-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-servlet-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-util-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-util-ajax-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-webapp-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jetty-xml-9.3.19.v20170502.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jsch-0.1.54.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/json-simple-1.1.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/json-smart-2.3.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jsr305-3.0.0.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/jsr311-api-1.1.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-admin-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-client-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-common-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-core-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-crypto-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-identity-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-server-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-simplekdc-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerb-util-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerby-asn1-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerby-config-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerby-pkix-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerby-util-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/kerby-xdr-1.0.1.jar:/usr/h
dp/3.0.1.0-187/hadoop-hdfs/lib/leveldbjni-all-1.8.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/log4j-1.2.17.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/netty-3.10.5.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/netty-all-4.0.52.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/nimbus-jose-jwt-4.41.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/okhttp-2.7.5.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/okio-1.6.0.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/paranamer-2.3.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/protobuf-java-2.5.0.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/re2j-1.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/snappy-java-1.0.5.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/stax2-api-3.1.4.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/token-provider-1.0.1.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/woodstox-core-5.0.3.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/xz-1.0.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/lib/zookeeper-3.4.6.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-3.1.1.3.0.1.0-187-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-client-3.1.1.3.0.1.0-187-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-client-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-client-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-client.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-httpfs-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-httpfs.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-native-client-3.1.1.3.0.1.0-187-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-native-client-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-native-client-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-native-client.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-nfs-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-nfs.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-rbf-3.1.1.3.0.1.0-18
7-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-rbf-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-rbf-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-rbf.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-hdfs/.//hadoop-hdfs.jar::/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//aliyun-sdk-oss-2.8.3.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//aws-java-sdk-bundle-1.11.271.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//azure-data-lake-store-sdk-2.2.7.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//azure-keyvault-core-1.0.0.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//azure-storage-7.0.0.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//gcs-connector-1.9.0.3.0.1.0-187-shaded.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-aliyun-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-aliyun.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-archive-logs-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-archive-logs.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-archives-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-archives.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-aws-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-aws.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-azure-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-azure-datalake-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-azure-datalake.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-azure.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-datajoin-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-datajoin.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-distcp-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-distcp.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-extras-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-extras.
jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-fs2img-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-fs2img.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-gridmix-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-gridmix.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-kafka-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-kafka.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-app-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-app.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-common-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-common.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-core-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-core.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-plugins-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-plugins.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-hs.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-3.1.1.3.0.1.0-187-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-nativetask-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-nativetask.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-shuffle-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-shuffle.jar:/usr/hdp/3.0.1.0-187/hadoop-mapred
uce/.//hadoop-mapreduce-client-uploader-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-client-uploader.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-examples-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-mapreduce-examples.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-openstack-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-openstack.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-resourceestimator-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-resourceestimator.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-rumen-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-rumen.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-sls-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-sls.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-streaming-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//hadoop-streaming.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//jdom-1.1.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//kafka-clients-0.8.2.1.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//lz4-1.2.0.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//netty-buffer-4.1.17.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//netty-codec-4.1.17.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//netty-codec-http-4.1.17.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//netty-common-4.1.17.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//netty-handler-4.1.17.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//netty-resolver-4.1.17.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//netty-transport-4.1.17.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//ojalgo-43.0.jar:/usr/hdp/3.0.1.0-187/hadoop-mapreduce/.//wildfly-openssl-1.0.4.Final.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/./:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/HikariCP-java7-2.4.12.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/aopalliance-1.0.jar:/usr/hdp/3.0.1.0-187/hadoo
p-yarn/lib/dnsjava-2.1.7.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/ehcache-3.3.1.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/fst-2.50.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/guice-4.0.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/guice-servlet-4.0.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/jackson-jaxrs-base-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/jackson-jaxrs-json-provider-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/jackson-module-jaxb-annotations-2.9.5.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/java-util-1.9.0.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/javax.inject-1.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/jersey-client-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/jersey-guice-1.19.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/json-io-2.5.1.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/metrics-core-3.2.4.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/objenesis-1.0.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/snakeyaml-1.16.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/lib/swagger-annotations-1.5.4.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-api-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-api.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-applications-distributedshell-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-applications-distributedshell.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-applications-unmanaged-am-launcher-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-applications-unmanaged-am-launcher.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-client-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-client.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-common-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-common.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-registry-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.
0-187/hadoop-yarn/.//hadoop-yarn-registry.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-applicationhistoryservice-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-applicationhistoryservice.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-common-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-common.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-nodemanager-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-nodemanager.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-resourcemanager-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-resourcemanager.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-router-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-router.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-sharedcachemanager-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-sharedcachemanager.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-tests-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-tests.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-timeline-pluginstorage-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-timeline-pluginstorage.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-web-proxy-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-server-web-proxy.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-services-api-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-services-api.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-services-core-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/hadoop-yarn/.//hadoop-yarn-services-core.jar:/usr/hdp/3.0.1.0-187/tez/hadoop-shim-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/hadoop-shim-2.8-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-api-0.9.1.3.0.1.0-187.jar:/us
r/hdp/3.0.1.0-187/tez/tez-common-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-dag-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-examples-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-history-parser-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-javadoc-tools-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-job-analyzer-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-mapreduce-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-protobuf-history-plugin-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-runtime-internals-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-runtime-library-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-tests-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-yarn-timeline-cache-plugin-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-yarn-timeline-history-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-yarn-timeline-history-with-acls-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-yarn-timeline-history-with-fs-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/RoaringBitmap-0.4.9.jar:/usr/hdp/3.0.1.0-187/tez/lib/async-http-client-1.9.40.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-cli-1.2.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-codec-1.4.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-collections-3.2.2.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-collections4-4.1.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-io-2.4.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-lang-2.6.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-math3-3.1.1.jar:/usr/hdp/3.0.1.0-187/tez/lib/gcs-connector-1.9.0.3.0.1.0-187-shaded.jar:/usr/hdp/3.0.1.0-187/tez/lib/guava-11.0.2.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-aws-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-azure-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-azure-datalake-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-hdfs-client-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-mapreduce-client-common-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-mapredu
ce-client-core-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-yarn-server-timeline-pluginstorage-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/jersey-client-1.19.jar:/usr/hdp/3.0.1.0-187/tez/lib/jersey-json-1.19.jar:/usr/hdp/3.0.1.0-187/tez/lib/jettison-1.3.4.jar:/usr/hdp/3.0.1.0-187/tez/lib/jetty-server-9.3.22.v20171030.jar:/usr/hdp/3.0.1.0-187/tez/lib/jetty-util-9.3.22.v20171030.jar:/usr/hdp/3.0.1.0-187/tez/lib/jsr305-3.0.0.jar:/usr/hdp/3.0.1.0-187/tez/lib/metrics-core-3.1.0.jar:/usr/hdp/3.0.1.0-187/tez/lib/protobuf-java-2.5.0.jar:/usr/hdp/3.0.1.0-187/tez/lib/servlet-api-2.5.jar:/usr/hdp/3.0.1.0-187/tez/lib/slf4j-api-1.7.10.jar:/usr/hdp/3.0.1.0-187/tez/conf:/usr/hdp/3.0.1.0-187/tez/conf_llap:/usr/hdp/3.0.1.0-187/tez/hadoop-shim-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/hadoop-shim-2.8-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib:/usr/hdp/3.0.1.0-187/tez/man:/usr/hdp/3.0.1.0-187/tez/tez-api-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-common-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-dag-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-examples-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-history-parser-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-javadoc-tools-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-job-analyzer-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-mapreduce-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-protobuf-history-plugin-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-runtime-internals-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-runtime-library-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-tests-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-yarn-timeline-cache-plugin-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-yarn-timeline-history-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-yarn-timeline-history-with-acls-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/tez-yarn-timeline-history-with-fs-0.9.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/ui:/usr/h
dp/3.0.1.0-187/tez/lib/async-http-client-1.9.40.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-cli-1.2.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-codec-1.4.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-collections-3.2.2.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-collections4-4.1.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-io-2.4.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-lang-2.6.jar:/usr/hdp/3.0.1.0-187/tez/lib/commons-math3-3.1.1.jar:/usr/hdp/3.0.1.0-187/tez/lib/gcs-connector-1.9.0.3.0.1.0-187-shaded.jar:/usr/hdp/3.0.1.0-187/tez/lib/guava-11.0.2.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-aws-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-azure-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-azure-datalake-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-hdfs-client-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-mapreduce-client-common-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-mapreduce-client-core-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/hadoop-yarn-server-timeline-pluginstorage-3.1.1.3.0.1.0-187.jar:/usr/hdp/3.0.1.0-187/tez/lib/jersey-client-1.19.jar:/usr/hdp/3.0.1.0-187/tez/lib/jersey-json-1.19.jar:/usr/hdp/3.0.1.0-187/tez/lib/jettison-1.3.4.jar:/usr/hdp/3.0.1.0-187/tez/lib/jetty-server-9.3.22.v20171030.jar:/usr/hdp/3.0.1.0-187/tez/lib/jetty-util-9.3.22.v20171030.jar:/usr/hdp/3.0.1.0-187/tez/lib/jsr305-3.0.0.jar:/usr/hdp/3.0.1.0-187/tez/lib/metrics-core-3.1.0.jar:/usr/hdp/3.0.1.0-187/tez/lib/protobuf-java-2.5.0.jar:/usr/hdp/3.0.1.0-187/tez/lib/RoaringBitmap-0.4.9.jar:/usr/hdp/3.0.1.0-187/tez/lib/servlet-api-2.5.jar:/usr/hdp/3.0.1.0-187/tez/lib/slf4j-api-1.7.10.jar:/usr/hdp/3.0.1.0-187/tez/lib/tez.tar.gz

STARTUP_MSG: build = git@github.com:hortonworks/hadoop.git -r 2820e4d6fc7ec31ac42187083ed5933c823e9784; compiled by 'jenkins' on 2018-09-19T11:03Z

STARTUP_MSG: java = 1.8.0_112

************************************************************/

2018-10-23 13:51:37,816 INFO dns.PrivilegedRegistryDNSStarter (LogAdapter.java:info(51)) - registered UNIX signal handlers for [TERM, HUP, INT]

2018-10-23 13:51:37,957 INFO dns.RegistryDNS (RegistryDNS.java:initializeChannels(195)) - Opening TCP and UDP channels on /0.0.0.0 port 53

2018-10-23 13:51:37,962 ERROR dns.PrivilegedRegistryDNSStarter (PrivilegedRegistryDNSStarter.java:init(61)) - Error initializing Registry DNS

java.net.BindException: Problem binding to [node.b2be.com:53] java.net.BindException: Address already in use; For more details see: http://wiki.apache.org/hadoop/BindException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:736)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1016)

at org.apache.hadoop.registry.server.dns.RegistryDNS.addNIOUDP(RegistryDNS.java:925)

at org.apache.hadoop.registry.server.dns.RegistryDNS.initializeChannels(RegistryDNS.java:196)

at org.apache.hadoop.registry.server.dns.PrivilegedRegistryDNSStarter.init(PrivilegedRegistryDNSStarter.java:59)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.commons.daemon.support.DaemonLoader.load(DaemonLoader.java:207)

Caused by: java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.DatagramChannelImpl.bind(DatagramChannelImpl.java:691)

at sun.nio.ch.DatagramSocketAdaptor.bind(DatagramSocketAdaptor.java:91)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1014)

... 8 more
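The trace shows Registry DNS opening TCP and UDP channels on 0.0.0.0 port 53, then failing in `openUDPChannel` with "Address already in use". One way to confirm this outside Ambari is to try the same binds yourself on the Registry DNS host. Below is a minimal Python 3 sketch, assuming you run it as root, since ports below 1024 are privileged on most Linux systems. The helper name `check_bind` is hypothetical and is not part of Ambari or Hadoop. It only binds each socket and never listens on it, so it does not start a service.

```python
import errno
import socket


def check_bind(port, host="0.0.0.0"):
    """Try to bind a TCP and a UDP socket on (host, port), then release them.

    Hypothetical diagnostic helper. It mirrors the two channels Registry DNS
    opens at startup. Returns a dict mapping "tcp" and "udp" to one of
    "free", "in use" or "permission denied".
    """
    results = {}
    for name, kind in (("tcp", socket.SOCK_STREAM), ("udp", socket.SOCK_DGRAM)):
        sock = socket.socket(socket.AF_INET, kind)
        try:
            sock.bind((host, port))
            results[name] = "free"
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                # Another process already holds this address/port.
                results[name] = "in use"
            elif exc.errno == errno.EACCES:
                # Privileged port and we are not root.
                results[name] = "permission denied"
            else:
                raise
        finally:
            sock.close()
    return results


if __name__ == "__main__":
    # Port 53 matches the "Opening TCP and UDP channels ... port 53" log line.
    print(check_bind(53))
```

If `udp` comes back as `in use`, another DNS service on the node (for example dnsmasq, named or systemd-resolved) is probably holding port 53, and `ss -lunp 'sport = :53'` run as root should show which process. The port Registry DNS binds to is, as far as I know, controlled by `hadoop.registry.dns.bind-port` in yarn-site, so the options are to stop the conflicting service or move Registry DNS to a free port.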

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-root-registrydns-node.b2be.com.out <==

==> /var/log/hadoop-yarn/yarn/rm-audit.log <==

2018-10-16 13:21:08,279 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:22:08,275 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:23:08,283 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:24:08,296 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:25:08,279 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:26:08,288 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:27:08,278 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:28:08,287 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:29:08,292 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:30:08,266 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:31:08,266 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:32:08,274 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:33:08,287 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:34:08,296 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:35:08,279 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:36:08,293 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:37:08,266 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:38:08,267 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:39:08,266 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:40:08,275 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:41:08,280 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:42:08,277 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:43:08,270 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:44:08,279 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:45:08,266 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:46:08,266 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:47:08,278 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:48:08,268 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:49:08,268 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:50:08,291 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:51:08,277 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:52:08,266 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:53:08,284 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:54:08,270 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:55:08,269 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:56:08,269 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:57:08,278 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:58:08,302 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 13:59:08,273 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

2018-10-16 14:00:08,266 INFO resourcemanager.RMAuditLogger: USER=yarn OPERATION=Get Applications Request TARGET=ClientRMService RESULT=SUCCESS

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-timelinereader-MYPTJ020DT-Linux.out <==

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 61739

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 32768

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

Oct 16, 2018 8:52:08 AM com.sun.jersey.api.core.PackagesResourceConfig init

INFO: Scanning for root resource and provider classes in the packages:

org.apache.hadoop.yarn.server.timelineservice.reader

org.apache.hadoop.yarn.webapp

org.apache.hadoop.yarn.webapp

Oct 16, 2018 8:52:08 AM com.sun.jersey.api.core.ScanningResourceConfig logClasses

INFO: Root resource classes found:

class org.apache.hadoop.yarn.server.timelineservice.reader.TimelineReaderWebServices

Oct 16, 2018 8:52:08 AM com.sun.jersey.api.core.ScanningResourceConfig logClasses

INFO: Provider classes found:

class org.apache.hadoop.yarn.webapp.YarnJacksonJaxbJsonProvider

class org.apache.hadoop.yarn.webapp.GenericExceptionHandler

Oct 16, 2018 8:52:08 AM com.sun.jersey.server.impl.application.WebApplicationImpl _initiate

INFO: Initiating Jersey application, version 'Jersey: 1.19 02/11/2015 03:25 AM'

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-resourcemanager-MYPTJ020DT-Linux.log <==

2018-10-16 14:00:53,383 INFO resourcemanager.ResourceManager (ResourceManager.java:transitionToStandby(1302)) - Transitioning to standby state

2018-10-16 14:00:53,383 INFO client.SystemServiceManagerImpl (SystemServiceManagerImpl.java:serviceStop(137)) - Stopping org.apache.hadoop.yarn.service.client.SystemServiceManagerImpl

2018-10-16 14:00:53,384 WARN amlauncher.ApplicationMasterLauncher (ApplicationMasterLauncher.java:run(122)) - org.apache.hadoop.yarn.server.resourcemanager.amlauncher.ApplicationMasterLauncher$LauncherThread interrupted. Returning.

2018-10-16 14:00:53,384 INFO ipc.Server (Server.java:stop(3082)) - Stopping server on 8030

2018-10-16 14:00:53,387 INFO ipc.Server (Server.java:run(1185)) - Stopping IPC Server listener on 8030

2018-10-16 14:00:53,388 INFO ipc.Server (Server.java:run(1319)) - Stopping IPC Server Responder

2018-10-16 14:00:53,392 INFO ipc.Server (Server.java:stop(3082)) - Stopping server on 8025

2018-10-16 14:00:53,396 INFO ipc.Server (Server.java:run(1185)) - Stopping IPC Server listener on 8025

2018-10-16 14:00:53,396 INFO ipc.Server (Server.java:run(1319)) - Stopping IPC Server Responder

2018-10-16 14:00:53,397 ERROR event.EventDispatcher (EventDispatcher.java:run(61)) - Returning, interrupted : java.lang.InterruptedException

2018-10-16 14:00:53,396 INFO util.AbstractLivelinessMonitor (AbstractLivelinessMonitor.java:run(156)) - NMLivelinessMonitor thread interrupted

2018-10-16 14:00:53,399 INFO activities.ActivitiesManager (ActivitiesManager.java:run(145)) - org.apache.hadoop.yarn.server.resourcemanager.scheduler.activities.ActivitiesManager thread interrupted

2018-10-16 14:00:53,399 INFO capacity.CapacityScheduler (CapacityScheduler.java:run(611)) - AsyncScheduleThread[Thread-12] exited!

2018-10-16 14:00:53,399 ERROR capacity.CapacityScheduler (CapacityScheduler.java:run(649)) - java.lang.InterruptedException

2018-10-16 14:00:53,399 INFO capacity.CapacityScheduler (CapacityScheduler.java:run(653)) - ResourceCommitterService exited!

2018-10-16 14:00:53,400 INFO monitor.SchedulingMonitor (SchedulingMonitor.java:serviceStop(90)) - Stop SchedulingMonitor (ProportionalCapacityPreemptionPolicy)

2018-10-16 14:00:53,400 INFO monitor.SchedulingMonitorManager (SchedulingMonitorManager.java:silentlyStopSchedulingMonitor(144)) - Sucessfully stopped monitor=SchedulingMonitor (ProportionalCapacityPreemptionPolicy)

2018-10-16 14:00:53,401 INFO event.AsyncDispatcher (AsyncDispatcher.java:serviceStop(155)) - AsyncDispatcher is draining to stop, ignoring any new events.

2018-10-16 14:00:53,402 INFO util.AbstractLivelinessMonitor (AbstractLivelinessMonitor.java:run(156)) - org.apache.hadoop.yarn.server.resourcemanager.rmapp.monitor.RMAppLifetimeMonitor thread interrupted

2018-10-16 14:00:53,402 INFO util.AbstractLivelinessMonitor (AbstractLivelinessMonitor.java:run(156)) - AMLivelinessMonitor thread interrupted

2018-10-16 14:00:53,402 INFO util.AbstractLivelinessMonitor (AbstractLivelinessMonitor.java:run(156)) - AMLivelinessMonitor thread interrupted

2018-10-16 14:00:53,402 INFO util.AbstractLivelinessMonitor (AbstractLivelinessMonitor.java:run(156)) - org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.ContainerAllocationExpirer thread interrupted

2018-10-16 14:00:53,402 ERROR delegation.AbstractDelegationTokenSecretManager (AbstractDelegationTokenSecretManager.java:run(696)) - ExpiredTokenRemover received java.lang.InterruptedException: sleep interrupted

2018-10-16 14:00:53,402 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:stop(210)) - Stopping ResourceManager metrics system...

2018-10-16 14:00:53,405 INFO impl.MetricsSinkAdapter (MetricsSinkAdapter.java:publishMetricsFromQueue(141)) - timeline thread interrupted.

2018-10-16 14:00:53,406 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:stop(216)) - ResourceManager metrics system stopped.

2018-10-16 14:00:53,406 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:shutdown(607)) - ResourceManager metrics system shutdown complete.

2018-10-16 14:00:53,406 INFO event.AsyncDispatcher (AsyncDispatcher.java:serviceStop(155)) - AsyncDispatcher is draining to stop, ignoring any new events.

2018-10-16 14:00:53,407 INFO imps.CuratorFrameworkImpl (CuratorFrameworkImpl.java:backgroundOperationsLoop(821)) - backgroundOperationsLoop exiting

2018-10-16 14:00:53,470 INFO timeline.HadoopTimelineMetricsSink (AbstractTimelineMetricsSink.java:emitMetricsJson(212)) - Unable to POST metrics to collector, http://MYPTJ020DT-Linux:6188/ws/v1/timeline/metrics, statusCode = 404

2018-10-16 14:00:53,470 INFO timeline.HadoopTimelineMetricsSink (AbstractTimelineMetricsSink.java:emitMetricsJson(239)) - Unable to connect to collector, http://MYPTJ020DT-Linux:6188/ws/v1/timeline/metrics

This exceptions will be ignored for next 100 times

2018-10-16 14:00:53,703 INFO zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x16677026a980011 closed

2018-10-16 14:00:53,703 INFO zookeeper.ClientCnxn (ClientCnxn.java:run(524)) - EventThread shut down

2018-10-16 14:00:53,704 INFO resourcemanager.ResourceManager (ResourceManager.java:transitionToStandby(1309)) - Transitioned to standby state

2018-10-16 14:00:53,705 INFO resourcemanager.ResourceManager (LogAdapter.java:info(49)) - SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down ResourceManager at MYPTJ020DT-Linux/127.0.1.1

************************************************************/

==> /var/log/hadoop-yarn/yarn/privileged-root-registrydns-MYPTJ020DT-Linux.out.3 <==

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 61739

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 32768

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop-yarn/yarn/privileged-root-registrydns-MYPTJ020DT-Linux.out.1 <==

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 61739

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 32768

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-root-registrydns-node.b2be.com.out.1 <==

==> /var/log/hadoop-yarn/yarn/privileged-root-registrydns-node.b2be.com.err.4 <==

java.net.BindException: Problem binding to [node.b2be.com:53] java.net.BindException: Address already in use; For more details see: http://wiki.apache.org/hadoop/BindException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:736)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1016)

at org.apache.hadoop.registry.server.dns.RegistryDNS.addNIOUDP(RegistryDNS.java:925)

at org.apache.hadoop.registry.server.dns.RegistryDNS.initializeChannels(RegistryDNS.java:196)

at org.apache.hadoop.registry.server.dns.PrivilegedRegistryDNSStarter.init(PrivilegedRegistryDNSStarter.java:59)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.commons.daemon.support.DaemonLoader.load(DaemonLoader.java:207)

Caused by: java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.DatagramChannelImpl.bind(DatagramChannelImpl.java:691)

at sun.nio.ch.DatagramSocketAdaptor.bind(DatagramSocketAdaptor.java:91)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1014)

... 8 more

Cannot load daemon

Service exit with a return value of 3
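The trace above shows `RegistryDNS.openUDPChannel` failing to bind UDP port 53 with `Address already in use`. The daemon then exits with return value 3. You can confirm that another process already holds the port by repeating the same UDP bind yourself. One possible culprit is another DNS server or cache; a stale Registry DNS process is another.

The Python sketch below is a hypothetical helper. `udp_port_in_use` is not part of Hadoop or Ambari. It assumes you run it as root on the Registry DNS host, because Linux normally requires root to bind a port below 1024, such as 53. Without root, the bind fails with a permission error rather than "address in use", and the helper re-raises that error instead of misreporting it.

```python
import errno
import socket


def udp_port_in_use(host: str, port: int) -> bool:
    """Return True if binding a UDP socket to (host, port) fails with EADDRINUSE.

    Hypothetical helper that mirrors the DatagramChannel.bind() call failing
    in the trace. Any other OSError (for example PermissionError when a
    non-root user tries port 53) is re-raised, not reported as "in use".
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.bind((host, port))
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            return True  # same condition as "Address already in use" in the log
        raise
    finally:
        sock.close()  # release the port again if our bind succeeded
    return False
```

For example, run `udp_port_in_use("node.b2be.com", 53)` as root while Registry DNS is stopped. A result of `True` means something else is bound to that address and port.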

==> /var/log/hadoop-yarn/yarn/hadoop-yarn-root-registrydns-MYPTJ020DT-Linux.out.1 <==

==> /var/log/hadoop-yarn/yarn/hadoop-mapreduce.jobsummary.log <==

==> /var/log/hadoop-yarn/yarn/privileged-root-registrydns-node.b2be.com.out.1 <==

core file size (blocks, -c) 0

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 61748

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 32768

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop-yarn/yarn/privileged-root-registrydns-MYPTJ020DT-Linux.err.3 <==

java.net.BindException: Problem binding to [MYPTJ020DT-Linux:53] java.net.BindException: Address already in use; For more details see: http://wiki.apache.org/hadoop/BindException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:736)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1016)

at org.apache.hadoop.registry.server.dns.RegistryDNS.addNIOUDP(RegistryDNS.java:925)

at org.apache.hadoop.registry.server.dns.RegistryDNS.initializeChannels(RegistryDNS.java:196)

at org.apache.hadoop.registry.server.dns.PrivilegedRegistryDNSStarter.init(PrivilegedRegistryDNSStarter.java:59)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.commons.daemon.support.DaemonLoader.load(DaemonLoader.java:207)

Caused by: java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.DatagramChannelImpl.bind(DatagramChannelImpl.java:691)

at sun.nio.ch.DatagramSocketAdaptor.bind(DatagramSocketAdaptor.java:91)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1014)

... 8 more

Cannot load daemon

Service exit with a return value of 3
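To find out what is already bound to UDP port 53 on this host, run `ss -ulnp` as root. It lists the owning process directly. Where that tool is not available, you can read the same information from `/proc/net/udp`.

The Python sketch below is illustrative only. The names `parse_proc_net_udp`, `udp_sockets_on_port` and `UdpSocket` are hypothetical. It assumes a little-endian Linux host such as x86_64, where the kernel prints each IPv4 address as a raw 32-bit hex value; the port is printed as plain hex, so 53 appears as `0035`. It covers IPv4 only, because IPv6 sockets are listed separately in `/proc/net/udp6`.

```python
import socket
import struct
from typing import List, NamedTuple


class UdpSocket(NamedTuple):
    local_ip: str
    local_port: int
    uid: int
    inode: int


def parse_proc_net_udp(text: str, port: int) -> List[UdpSocket]:
    """Return the IPv4 UDP sockets in /proc/net/udp-style text bound to `port`.

    Assumes a little-endian host: the address hex is the in-memory u32, so it
    is unpacked with "<I" before formatting as dotted quad.
    """
    matches = []
    for line in text.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) < 10:
            continue
        ip_hex, port_hex = fields[1].split(":")  # local_address column
        if int(port_hex, 16) != port:
            continue
        ip = socket.inet_ntoa(struct.pack("<I", int(ip_hex, 16)))
        # fields[7] is the uid column, fields[9] the socket inode
        matches.append(UdpSocket(ip, port, int(fields[7]), int(fields[9])))
    return matches


def udp_sockets_on_port(port: int = 53) -> List[UdpSocket]:
    """Read the live table on a Linux host (hypothetical convenience wrapper)."""
    with open("/proc/net/udp") as fh:
        return parse_proc_net_udp(fh.read(), port)
```

Each result's `inode` can be matched against the `socket:[inode]` links under `/proc/<pid>/fd` to identify the process that holds the port. Reading other users' `fd` directories requires root.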

==> /var/log/hadoop-yarn/yarn/privileged-root-registrydns-MYPTJ020DT-Linux.err.1 <==

java.net.BindException: Problem binding to [MYPTJ020DT-Linux:53] java.net.BindException: Address already in use; For more details see: http://wiki.apache.org/hadoop/BindException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:736)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1016)

at org.apache.hadoop.registry.server.dns.RegistryDNS.addNIOUDP(RegistryDNS.java:925)

at org.apache.hadoop.registry.server.dns.RegistryDNS.initializeChannels(RegistryDNS.java:196)

at org.apache.hadoop.registry.server.dns.PrivilegedRegistryDNSStarter.init(PrivilegedRegistryDNSStarter.java:59)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.commons.daemon.support.DaemonLoader.load(DaemonLoader.java:207)

Caused by: java.net.BindException: Address already in use

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.DatagramChannelImpl.bind(DatagramChannelImpl.java:691)

at sun.nio.ch.DatagramSocketAdaptor.bind(DatagramSocketAdaptor.java:91)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1014)

... 8 more

Cannot load daemon

Service exit with a return value of 3

==> /var/log/hadoop-yarn/yarn/privileged-root-registrydns-MYPTJ020DT-Linux.err.2 <==

java.net.BindException: Problem binding to [MYPTJ020DT-Linux:53] java.net.BindException: Address already in use; For more details see: http://wiki.apache.org/hadoop/BindException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:831)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:736)

at org.apache.hadoop.registry.server.dns.RegistryDNS.openUDPChannel(RegistryDNS.java:1016)

at org.apache.hadoop.registry.server.dns.RegistryDNS.addNIOUDP(RegistryDNS.java:925)