Hi,

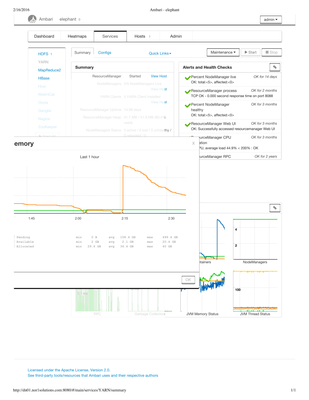

We have a 5-node cluster running HDP 2.0. Recently we noticed that YARN reports its memory usage at 2000%.

We currently allocate 2 GB of memory to YARN, and the metrics show 40 GB in use by our current job. All nodes are still "alive". Will this be a problem? Should we increase the memory allocated to the YARN cluster?
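The arithmetic below is a quick sanity check on the 2000% figure. It assumes the metric is computed as used memory divided by allocated memory. `utilization_percent` is a hypothetical helper, not part of HDP, YARN, or Ambari. The post does not say whether the 2 GB is a cluster-wide total or a per-node setting, so both readings are shown. They are illustrative only.

```python
# Hypothetical helper (not an HDP/YARN API): reproduces a utilization
# percentage from the figures quoted above, assuming usage = used / allocated.
def utilization_percent(used_gb: float, allocated_gb: float) -> float:
    """Return used memory as a percentage of the memory allocated to YARN."""
    if allocated_gb <= 0:
        raise ValueError("allocated_gb must be positive")
    return used_gb / allocated_gb * 100.0


# Assumption A: 2 GB is the cluster-wide YARN total.
print(utilization_percent(40, 2))      # 2000.0

# Assumption B: 2 GB is configured per node on each of the 5 nodes.
print(utilization_percent(40, 2 * 5))  # 400.0
```

Only assumption A reproduces the 2000% in the screenshot. Under that reading, the job is reported as using 20 times the memory YARN was given.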