[Zeppelin]: pyspark is not responding!

Labels: Apache Zeppelin

Created on 05-08-2018 10:44 AM - edited 08-18-2019 01:19 AM

Created 05-08-2018 12:52 PM

@Khouloud Landari The error message is very generic. To help narrow down the cause, please provide the following:

1. Check /var/log/zeppelin/zeppelin-interpreter-spark2-spark-zeppelin-*.log and copy the parts you consider worth sharing.

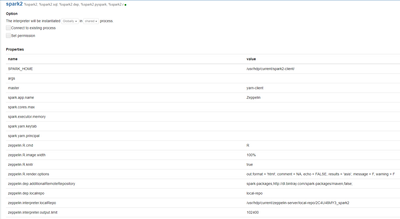

2. In the Zeppelin UI, go to Interpreter, take a screenshot of the spark2 interpreter configuration, and share it. Also try restarting the interpreter and check whether that helps.

3. Run the following command from the Zeppelin host:

SPARK_MAJOR_VERSION=2 pyspark --master yarn --verbose

and copy the console output to this post.

With this information we should be able to draw further conclusions as to what could be causing the issue.
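For step 1, a small script can help pick out the parts of the interpreter log worth sharing. The following is a minimal sketch, assuming each log entry starts with a level followed by a bracketed timestamp and that stack-trace lines follow the entry they belong to; the helper name `collect_problem_entries` is illustrative and not part of Zeppelin:

```python
import glob
import re

# Assumed entry prefix, e.g. "WARN [2018-05-05 06:39:08,446] (...) - message".
ENTRY_START = re.compile(r"^\s*(TRACE|DEBUG|INFO|WARN|ERROR|FATAL)\s+\[")


def collect_problem_entries(pattern, levels=("WARN", "ERROR", "FATAL")):
    """Return log entries at the given levels, each with its continuation lines."""
    entries = []
    for path in sorted(glob.glob(pattern)):
        current = None  # lines of the entry being collected, or None
        with open(path, errors="replace") as handle:
            for line in handle:
                line = line.rstrip("\n")
                match = ENTRY_START.match(line)
                if match:
                    # A new entry begins: flush the previous kept entry, if any.
                    if current:
                        entries.append("\n".join(current))
                    current = [line] if match.group(1) in levels else None
                elif current is not None and line.strip():
                    # Continuation line (e.g. a stack frame) of a kept entry.
                    current.append(line)
        if current:
            entries.append("\n".join(current))
    return entries


if __name__ == "__main__":
    # Path pattern taken from step 1 above.
    log_glob = "/var/log/zeppelin/zeppelin-interpreter-spark2-spark-zeppelin-*.log"
    for entry in collect_problem_entries(log_glob):
        print(entry)
        print("-" * 40)
```

INFO entries are dropped by default; pass `levels=("INFO", "WARN", "ERROR", "FATAL")` to keep them as well.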

Note: If you add a comment to this post, please tag me using @ followed by my name. That way I will be notified that you have added more information.

Created on 05-08-2018 04:44 PM - edited 08-18-2019 01:19 AM

Thank you, @Felix Albani! Actually, besides that error message, anything I try in Zeppelin with pyspark stays pending and never runs.

So, I've tried everything you mentioned:

1. Some of the info I got:

INFO [2018-05-05 05:59:31,823] ({pool-1-thread-1} PySparkInterpreter.java[interrupt]:421) - Sending SIGINT signal to PID : 7627

WARN [2018-05-05 06:39:08,446] ({ResponseProcessor for block BP-32082187-172.17.0.2-1517480669419:blk_1073742956_2146} DFSOutputStream.java[run]:958) - DFSOutputStream ResponseProcessor exception for block BP-32082187-172.17.0.2-1517480669419:blk_1073742956_2146

java.io.EOFException: Premature EOF: no length prefix available

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:2468)

at org.apache.hadoop.hdfs.protocol.datatransfer.PipelineAck.readFields(PipelineAck.java:244)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer$ResponseProcessor.run(DFSOutputStream.java:849)

2. I've tried restarting the interpreter, but it doesn't help!
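When reading entries like the ones quoted in item 1, it can help to split each header into fields and compare timestamps. The following is a minimal sketch, assuming the layout `LEVEL [timestamp] ({thread} File.java[method]:line) - message` seen in those two entries; the `parse_entry` helper and its field names are illustrative:

```python
import re
from datetime import datetime

# Assumed layout: LEVEL [timestamp] ({thread} File.java[method]:line) - message
HEADER = re.compile(
    r"^\s*(?P<level>[A-Z]+)\s+"
    r"\[(?P<timestamp>[^\]]+)\]\s+"
    r"\(\{(?P<thread>[^}]*)\}\s+"
    r"(?P<source>\S+?)\[(?P<method>[^\]]+)\]:(?P<line>\d+)\)\s+-\s+"
    r"(?P<message>.*)$"
)


def parse_entry(line):
    """Split one log header line into a dict of fields, or return None."""
    match = HEADER.match(line)
    if match is None:
        return None  # continuation line (e.g. a stack frame) or unknown format
    fields = match.groupdict()
    fields["timestamp"] = datetime.strptime(
        fields["timestamp"], "%Y-%m-%d %H:%M:%S,%f"
    )
    fields["line"] = int(fields["line"])
    return fields


if __name__ == "__main__":
    sigint = parse_entry(
        "INFO [2018-05-05 05:59:31,823] ({pool-1-thread-1} "
        "PySparkInterpreter.java[interrupt]:421) - Sending SIGINT signal to PID : 7627"
    )
    print(sigint["level"], sigint["source"], sigint["method"], sigint["timestamp"])
```

Subtracting the `timestamp` values of two parsed entries gives the time that passed between them, which makes long gaps in the log easy to spot.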

Created on 05-08-2018 04:51 PM - edited 08-18-2019 01:19 AM

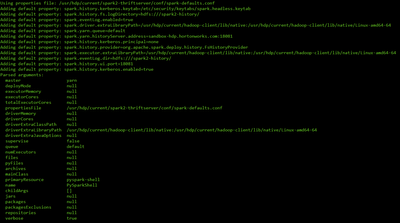

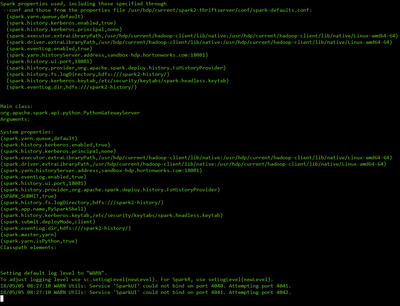

3. The output is:

Created 05-08-2018 05:59 PM

@Khouloud Landari Does it get stuck after the "WARN Service SparkUI could not bind to port 4041" messages? If so, I think the problem may be that pyspark is not able to start an application on YARN. With spark2, pyspark launches a YARN application on your cluster, and I think this is what is probably failing.
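The port warning on its own is usually harmless: by default the Spark UI tries port 4040 and moves on to the next port when that one is taken, which typically just means another Spark driver (for example the one started by the Zeppelin interpreter) is already running on the host. The following is a minimal sketch for checking which of those ports are occupied, assuming it is run on the Zeppelin host; the helper name `is_port_free` and the list of ports are illustrative:

```python
import socket


def is_port_free(port, host="0.0.0.0"):
    """Return True if a TCP socket can currently be bound to host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError:
            # Bind failed: the port is in use (or binding is not permitted).
            return False
        return True


if __name__ == "__main__":
    # 4040 is the default Spark UI port; later ports are tried when it is busy.
    for port in (4040, 4041, 4042):
        print(port, "free" if is_port_free(port) else "in use")
```

If the ports are merely occupied by other Spark sessions, the hang is more likely caused by the YARN application not starting than by the UI port itself.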

Try this command and let me know if this works:

SPARK_MAJOR_VERSION=2 pyspark --master local --verbose

I would also advise you to check the ResourceManager (RM) logs, which can be found on the RM host under /var/log/hadoop-yarn

These will probably show what the problem with YARN is and why your Zeppelin user is not able to start applications on the Hadoop cluster.

HTH