ZooKeeperSaslClient - SASL authentication failed using login context 'Client'

Labels: Apache Spark, Apache YARN, Apache Zookeeper


Hi,

I am facing the issue below while trying to run a Spark Streaming job that reads from Kafka.

ERROR ZooKeeperSaslClient:244 - SASL authentication failed using login context 'Client'

Exception in thread "main" org.I0Itec.zkclient.exception.ZkAuthFailedException: Authentication failure

at org.I0Itec.zkclient.ZkClient.waitForKeeperState(ZkClient.java:946)

at org.I0Itec.zkclient.ZkClient.waitUntilConnected(ZkClient.java:923)

at org.I0Itec.zkclient.ZkClient.connect(ZkClient.java:1230)

at org.I0Itec.zkclient.ZkClient.<init>(ZkClient.java:156)

at org.I0Itec.zkclient.ZkClient.<init>(ZkClient.java:130)

at kafka.utils.ZkUtils$.createZkClientAndConnection(ZkUtils.scala:75)

at org.abbvie.utilities.OffsetManager$.getLastCommittedOffsets(OffsetManager.scala:93)

at org.abbvie.enrollment.PatientEnrollmentDriver$.functionToCreateContext(PatientEnrollmentDriver.scala:194)

at org.abbvie.enrollment.PatientEnrollmentDriver$$anonfun$1.apply(PatientEnrollmentDriver.scala:124)

at org.abbvie.enrollment.PatientEnrollmentDriver$$anonfun$1.apply(PatientEnrollmentDriver.scala:124)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.streaming.StreamingContext$.getActiveOrCreate(StreamingContext.scala:774)

at org.abbvie.enrollment.PatientEnrollmentDriver$.main(PatientEnrollmentDriver.scala:124)

at org.abbvie.enrollment.PatientEnrollmentDriver.main(PatientEnrollmentDriver.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:775)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:119)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

My jaas.conf file is as below.

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

doNotPrompt=false

principal="<principal>"

useKeyTab=true

serviceName="kafka"

keyTab="<keytab_path>"

client=true;

};

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

useTicketCache=false

keyTab="<keytab_path>"

principal="<principal>";

};

I have tried multiple solutions available online. Nothing seems to work.

Can anyone please help me with the root cause?

Regards,

Adarsh K S

Created 10-18-2019 08:06 AM


Hi @Adarsh_ks

Can you try adding serviceName="zookeeper" to the Client section?

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

useTicketCache=false

keyTab="<keytab_path>"

serviceName="zookeeper"

principal="<principal>";

};

Let us know the results.

Thanks,

Manuel.
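Before changing more settings, it can help to confirm what the JVM actually parses from the JAAS file. The sketch below is an illustration, not part of the original reply: the class name `JaasSectionCheck` and its `optionsFor` helper are hypothetical. It loads a JAAS file with the JDK's standard `JavaLoginConfig` type and prints the options of the section the ZooKeeper client will look up (`Client` unless the `zookeeper.sasl.clientconfig` system property overrides it). It only parses the file; it does not attempt a Kerberos login.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.security.NoSuchAlgorithmException;
import java.security.URIParameter;
import java.util.Collections;
import java.util.Map;
import javax.security.auth.login.AppConfigurationEntry;
import javax.security.auth.login.Configuration;

// Hypothetical helper: shows which options the JVM reads from a JAAS section.
class JaasSectionCheck {

    /**
     * Parses the given JAAS file and returns the options of the first login
     * module in the named section, or an empty map if the section is absent.
     */
    static Map<String, ?> optionsFor(Path jaasFile, String section)
            throws NoSuchAlgorithmException {
        // "JavaLoginConfig" is the JDK's file-based JAAS configuration type.
        Configuration config = Configuration.getInstance(
                "JavaLoginConfig", new URIParameter(jaasFile.toUri()));
        AppConfigurationEntry[] entries = config.getAppConfigurationEntry(section);
        if (entries == null || entries.length == 0) {
            return Collections.emptyMap();
        }
        return entries[0].getOptions();
    }

    public static void main(String[] args) throws Exception {
        if (args.length != 1) {
            System.err.println("usage: java JaasSectionCheck <path-to-jaas.conf>");
            return;
        }
        // The ZooKeeper client uses this property to pick the section name.
        String section = System.getProperty("zookeeper.sasl.clientconfig", "Client");
        Map<String, ?> options = optionsFor(Paths.get(args[0]), section);
        if (options.isEmpty()) {
            System.out.println("No '" + section + "' section found");
        } else {
            options.forEach((key, value) -> System.out.println(key + " = " + value));
        }
    }
}
```

If the section prints as expected here but the job still fails, the file the job actually loads (the one named by `java.security.auth.login.config` in the driver JVM) may differ from the one you checked.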

Created 10-31-2019 06:31 AM


Hi Manuel,

I have tried the suggested solution, but it's not working.

Also, I am sharing the details of hbase-site.xml below.

<configuration>

<property>

<name>hbase.rootdir</name>

<value><hbase_rootdir></value>

</property>

<property>

<name>hbase.replication</name>

<value>true</value>

</property>

<property>

<name>hbase.client.write.buffer</name>

<value>2097152</value>

</property>

<property>

<name>hbase.client.pause</name>

<value>100</value>

</property>

<property>

<name>hbase.client.retries.number</name>

<value>35</value>

</property>

<property>

<name>hbase.client.scanner.caching</name>

<value>100</value>

</property>

<property>

<name>hbase.client.keyvalue.maxsize</name>

<value>10485760</value>

</property>

<property>

<name>hbase.ipc.client.allowsInterrupt</name>

<value>true</value>

</property>

<property>

<name>hbase.client.primaryCallTimeout.get</name>

<value>10</value>

</property>

<property>

<name>hbase.client.primaryCallTimeout.multiget</name>

<value>10</value>

</property>

<property>

<name>hbase.fs.tmp.dir</name>

<value>/user/${user.name}/hbase-staging</value>

</property>

<property>

<name>hbase.client.scanner.timeout.period</name>

<value>60000</value>

</property>

<property>

<name>hbase.coprocessor.master.classes</name>

<value>org.apache.hadoop.hbase.security.access.AccessController</value>

</property>

<property>

<name>hbase.coprocessor.region.classes</name>

<value>org.apache.hadoop.hbase.security.access.AccessController,org.apache.hadoop.hbase.security.token.TokenProvider,org.apache.hadoop.hbase.security.access.SecureBulkLoadEndpoint</value>

</property>

<property>

<name>hbase.regionserver.thrift.http</name>

<value>true</value>

</property>

<property>

<name>hbase.thrift.ssl.enabled</name>

<value>false</value>

</property>

<property>

<name>hbase.thrift.support.proxyuser</name>

<value>true</value>

</property>

<property>

<name>hbase.rpc.timeout</name>

<value>60000</value>

</property>

<property>

<name>hbase.snapshot.enabled</name>

<value>true</value>

</property>

<property>

<name>hbase.snapshot.master.timeoutMillis</name>

<value>60000</value>

</property>

<property>

<name>hbase.snapshot.region.timeout</name>

<value>60000</value>

</property>

<property>

<name>hbase.snapshot.master.timeout.millis</name>

<value>60000</value>

</property>

<property>

<name>hbase.security.authentication</name>

<value>kerberos</value>

</property>

<property>

<name>hbase.rpc.protection</name>

<value>authentication</value>

</property>

<property>

<name>zookeeper.session.timeout</name>

<value>60000</value>

</property>

<property>

<name>zookeeper.znode.parent</name>

<value>/hbase</value>

</property>

<property>

<name>zookeeper.znode.rootserver</name>

<value>root-region-server</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value><zookeeper_quorum></value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<name>hbase.master.kerberos.principal</name>

<value>hbase/_HOST@<domain_name></value>

</property>

<property>

<name>hbase.regionserver.kerberos.principal</name>

<value>hbase/_HOST@<domain_name></value>

</property>

<property>

<name>hbase.rest.kerberos.principal</name>

<value>hbase/_HOST@<domain_name></value>

</property>

<property>

<name>hbase.thrift.kerberos.principal</name>

<value>HTTP/_HOST@<domain_name></value>

</property>

<property>

<name>hadoop.security.authorization</name>

<value>true</value>

</property>

<property>

<name>hbase.rest.ssl.enabled</name>

<value>false</value>

</property>

</configuration>

Created 11-04-2019 06:55 AM


Are you facing the same issue after the changes? Kindly share the exception if it differs from the original.

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

useTicketCache=false

keyTab="<keytab_path>"

serviceName="zookeeper"

principal="<principal>";

};

Make sure that the keytab specified is readable by the user running the job, and that the principal matches one stored in that keytab.
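A quick way to rule out a file-access problem is to run a small check as the same OS user that submits the job. The sketch below is only an illustration: the class name `KeytabAccessCheck` and its `check` helper are hypothetical. It tests existence and read permission on the local machine only, and it does not verify which principals the keytab contains (`klist -kt <keytab_path>` is the usual tool for that).

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: reports whether the current user can read a keytab file.
class KeytabAccessCheck {

    /** Returns "ok" or a short description of why the keytab is unusable. */
    static String check(Path keytab) {
        if (!Files.exists(keytab)) {
            return "missing";
        }
        if (!Files.isRegularFile(keytab)) {
            return "not a regular file";
        }
        if (!Files.isReadable(keytab)) {
            return "not readable by user " + System.getProperty("user.name");
        }
        return "ok";
    }

    public static void main(String[] args) {
        if (args.length != 1) {
            System.err.println("usage: java KeytabAccessCheck <keytab_path>");
            return;
        }
        System.out.println(args[0] + ": " + check(Paths.get(args[0])));
    }
}
```

Keep in mind that a job running on YARN may open the keytab on a different host than the one you submit from, so a local "ok" does not prove the path resolves everywhere.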

Created 11-04-2019 02:45 PM


There are many moving parts in your config. To help investigate, could you share the files below? Please redact any site-specific info. Apart from the info already given, can you share your architecture: HDP version, cluster size, and the ZooKeeper and Kafka logs?

- zookeeper_jaas.conf

- kafka_server_jaas.conf

- zookeeper.properties

- server.properties

Are all other Kerberized components functioning normally?

Please revert.

Created 04-22-2023 07:48 PM


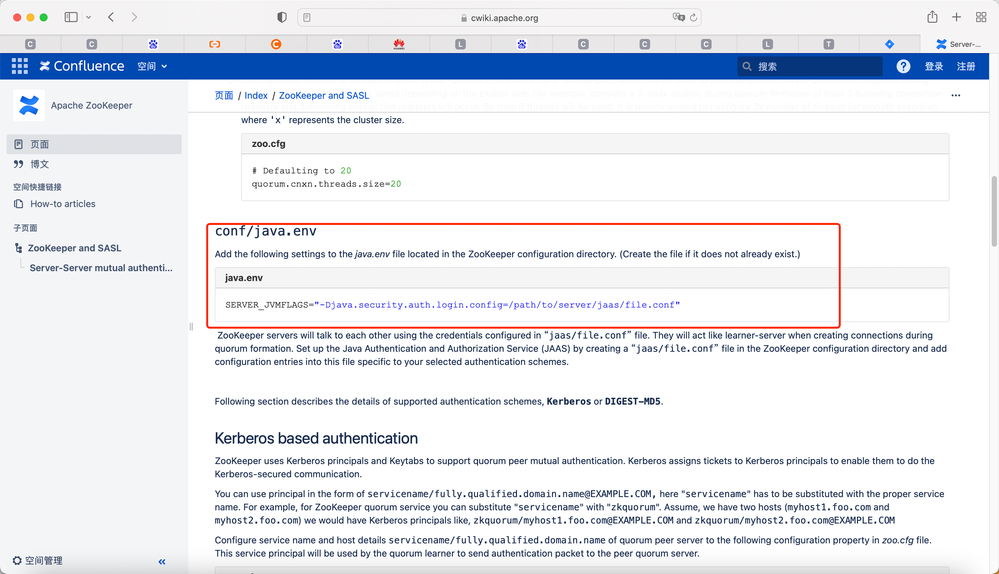

I got it working. You can do it as described here: https://cwiki.apache.org/confluence/display/ZOOKEEPER/Server-Server+mutual+authentication