Linux /etc/hosts

######127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
####::1 localhost localhost.localdomain localhost6 localhost6.localdomain6q
192.168.122.1 master.hadoop master
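The mapping above comes from the guest's /etc/hosts, and Windows keeps its own hosts file, so one thing worth checking from the Windows side is whether `master.hadoop` resolves there at all. A minimal sketch using only the JDK; the class name `HostLookup` is made up for illustration and is not part of Hadoop:

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.Optional;

// Hypothetical helper (not part of Hadoop): asks the local resolver
// (hosts file, then DNS) what a hostname maps to on this machine.
final class HostLookup {

    // Returns the resolved IP address, or empty if the name does not resolve.
    static Optional<String> resolve(String hostname) {
        try {
            return Optional.of(InetAddress.getByName(hostname).getHostAddress());
        } catch (UnknownHostException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        // Defaults to the NameNode hostname used in this question.
        String host = args.length > 0 ? args[0] : "master.hadoop";
        System.out.println(host + " -> " + resolve(host).orElse("(does not resolve)"));
    }
}
```

Running it on both the guest and the Windows host and comparing the two results would show whether the two machines agree on what `master.hadoop` means.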

VirtualBox network:

NAT

The Java code runs successfully on the VirtualBox guest (where Hadoop is installed) but fails when run from the Windows host.

import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class HadoopFileSystemCat {

    public static void main(String[] args) throws IOException {
        String uri = "hdfs://localhost:9000/TES";
        Configuration conf = new Configuration();
        conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        FileSystem fs = FileSystem.get(URI.create(uri), conf);
        FSDataInputStream in = null;
        try {
            in = fs.open(new Path(uri));
            // Print the first block location, then the first DataNode address (host:port) holding it.
            System.out.println(fs.getFileBlockLocations(new Path(uri), 0, 0)[0]);
            System.out.println(fs.getFileBlockLocations(new Path(uri), 0, 0)[0].getNames()[0]);
            // Copy the file contents to stdout.
            IOUtils.copyBytes(in, System.out, 4096, false);
        } finally {
            IOUtils.closeStream(in);
        }
    }
}

Code Output

0,10,localhost
192.168.122.1:9866
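The `192.168.122.1:9866` in the output above is the DataNode address reported for the block. With the VM on NAT, it may be worth checking whether that address accepts TCP connections from the Windows host at all. A minimal sketch using only the JDK; the class name `DataNodeProbe` and the 3-second timeout are arbitrary choices for illustration, not anything from Hadoop:

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper (not part of Hadoop): plain TCP connect test.
final class DataNodeProbe {

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    // Any I/O failure (refused, timed out, unknown host) is reported as false.
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults are the DataNode address printed by the code above.
        String host = args.length > 0 ? args[0] : "192.168.122.1";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9866;
        boolean ok = canConnect(host, port, 3000);
        System.out.println(host + ":" + port + (ok ? " is reachable" : " is NOT reachable"));
    }
}
```

If this prints "is NOT reachable" on Windows but "is reachable" inside the guest, the client can talk to the NameNode but not to the DataNode, which would be consistent with a `BlockMissingException` on read.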

Exception Message

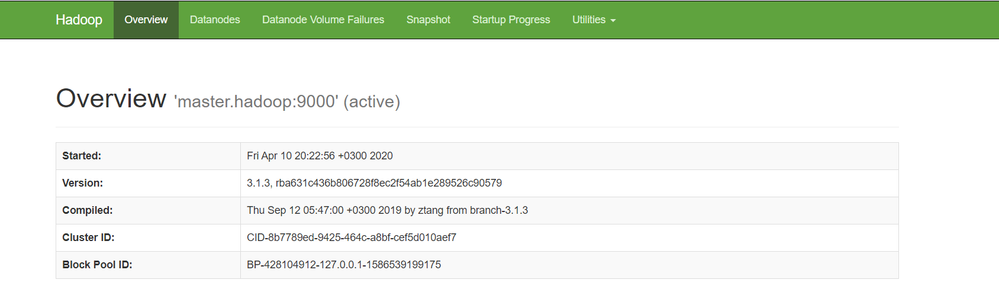

Exception in thread "main" org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-428104912-127.0.0.1-1586539199175:blk_1073741825_1001 file=/DDD
    at org.apache.hadoop.hdfs.DFSInputStream.refetchLocations(DFSInputStream.java:875)
    at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:858)
    at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:837)
    at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:566)
    at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:756)
    at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:825)
    at java.io.DataInputStream.read(DataInputStream.java:100)
    at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:94)
    at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:68)
    at com.mycompany.hdfs_java_api.HadoopFileSystemCat.main(HadoopFileSystemCat.java:39)

The block is actually available, as the terminal shows:

[muneeralnajdi@localhost hadoop]$ bin/hdfs dfs -ls /
Found 1 items
-rw-r--r-- 1 muneeralnajdi supergroup 10 2020-04-10 20:25 /DDD

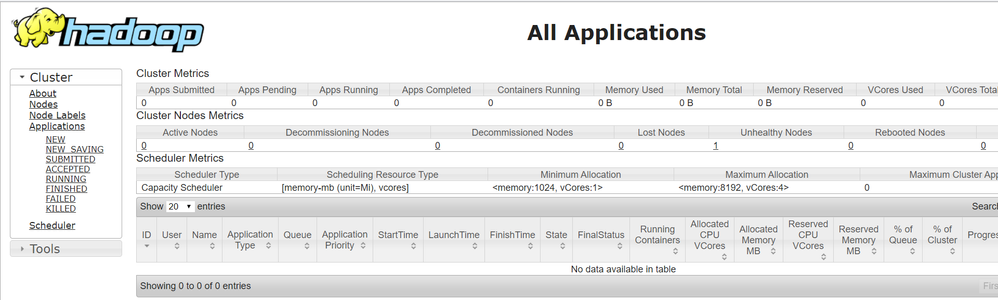

Hadoop Configuration

core-site.xml

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://master.hadoop:9000</value>
  </property>
</configuration>

yarn-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/home/muneeralnajdi/hadoop_store/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/home/muneeralnajdi/hadoop_store/hdfs/datanode</value>
  </property>
  <property>
    <name>dfs.client.use.datanode.hostname</name>
    <value>true</value>
    <description>Whether clients should use datanode hostnames when connecting to datanodes.</description>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>master.hadoop:9870</value>
  </property>
</configuration>

hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/home/muneeralnajdi/hadoop_store/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/home/muneeralnajdi/hadoop_store/hdfs/datanode</value>
  </property>
  <property>
    <name>dfs.client.use.datanode.hostname</name>
    <value>true</value>
    <description>Whether clients should use datanode hostnames when connecting to datanodes.</description>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>master.hadoop:9870</value>
  </property>
</configuration>

Could anyone help me fix this issue?

I went through some answers, for example:

Access to file on Hadoop Virtualbox Cluster

But the Java API still cannot access the DataNode block from Windows.