ambari cluster + both namenode are standby

Labels: Apache Ambari, Apache Hadoop

Created on 12-04-2017 07:00 PM - edited 08-17-2019 08:07 PM


We start the services in our Ambari cluster in the following order (after a reboot):

1. Start ZooKeeper

2. Start the JournalNodes

3. Start the NameNodes (on the master01 and master02 machines)

We noticed that both NameNodes are in standby state.

How can we force one of the nodes to become active?

From the log:

tail -200 hadoop-hdfs-namenode-master03.sys65.com.log

rics to be sent will be discarded. This message will be skipped for the next 20 times.

2017-12-04 18:56:03,649 WARN namenode.FSEditLog (JournalSet.java:selectInputStreams(280)) - Unable to determine input streams from QJM to [152.87.28.153:8485, 152.87.28.152:8485, 152.87.27.162:8485]. Skipping.

java.io.IOException: Timed out waiting 20000ms for a quorum of nodes to respond.

at org.apache.hadoop.hdfs.qjournal.client.AsyncLoggerSet.waitForWriteQuorum(AsyncLoggerSet.java:137)

at org.apache.hadoop.hdfs.qjournal.client.QuorumJournalManager.selectInputStreams(QuorumJournalManager.java:471)

at org.apache.hadoop.hdfs.server.namenode.JournalSet.selectInputStreams(JournalSet.java:278)

at org.apache.hadoop.hdfs.server.namenode.FSEditLog.selectInputStreams(FSEditLog.java:1590)

at org.apache.hadoop.hdfs.server.namenode.FSEditLog.selectInputStreams(FSEditLog.java:1614)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer.doTailEdits(EditLogTailer.java:251)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.doWork(EditLogTailer.java:402)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.access$300(EditLogTailer.java:355)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread$1.run(EditLogTailer.java:372)

at org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:476)

at org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.run(EditLogTailer.java:368)

2017-12-04 18:56:03,650 INFO namenode.FSNamesystem (FSNamesystem.java:writeUnlock(1658)) - FSNamesystem write lock held for 20005 ms via

java.lang.Thread.getStackTrace(Thread.java:1556)

org.apache.hadoop.util.StringUtils.getStackTrace(StringUtils.java:945)

org.apache.hadoop.hdfs.server.namenode.FSNamesystem.writeUnlock(FSNamesystem.java:1658)

org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer.doTailEdits(EditLogTailer.java:285)

org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.doWork(EditLogTailer.java:402)

org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.access$300(EditLogTailer.java:355)

org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread$1.run(EditLogTailer.java:372)

org.apache.hadoop.security.SecurityUtil.doAsLoginUserOrFatal(SecurityUtil.java:476)

org.apache.hadoop.hdfs.server.namenode.ha.EditLogTailer$EditLogTailerThread.run(EditLogTailer.java:368)

Number of suppressed write-lock reports: 0

Longest write-lock held interval: 20005

2017-12-04 19:03:43,792 INFO ha.EditLogTailer (EditLogTailer.java:triggerActiveLogRoll(323)) - Triggering log roll on remote NameNode

2017-12-04 19:03:43,820 INFO ha.EditLogTailer (EditLogTailer.java:triggerActiveLogRoll(334)) - Skipping log roll. Remote node is not in Active state: Operation category JOURNAL is not supported in state standby

2017-12-04 19:03:49,824 INFO client.QuorumJournalManager (QuorumCall.java:waitFor(136)) - Waited 6001 ms (timeout=20000 ms) for a response for selectInputStreams. Succeeded so far:

2017-12-04 19:03:50,825 INFO client.QuorumJournalManager (QuorumCall.java:waitFor(136)) - Waited 7003 ms (timeout=20000 ms) for a response for selectInputStreams. Succeeded so far:

You have mail in /var/spool/mail/root

Created 12-05-2017 10:31 PM


We see the following error in your NameNode log:

2017-12-05 21:46:14,814 FATAL namenode.FSEditLog (JournalSet.java:mapJournalsAndReportErrors(398)) - Error: recoverUnfinalizedSegments failed for required journal (JournalAndStream(mgr=QJM to [10.164.28.153:8485, 10.164.28.152:8485, 10.164.27.162:8485], stream=null))

java.io.IOException: Timed out waiting 120000ms for a quorum of nodes to respond.

at org.apache.hadoop.hdfs.qjournal.client.AsyncLoggerSet.waitForWriteQuorum(AsyncLoggerSet.java:137)

This indicates that the JournalNodes have a problem, which is why the NameNode is not coming up.

This kind of error can be caused by a corrupt 'edits_inprogress_xxxxxxx' file on a JournalNode.

So please check whether the 'edits_inprogress_xxxxxxx' files on the JournalNodes are corrupt; any corrupt files need to be removed.

Please move the corrupt "edits_inprogress" file to /tmp (so that you keep a backup of it), or copy the edits directory ("/hadoop/hdfs/journal/XXXXXXX/current") from a functioning JournalNode to this node, and then restart the JournalNode and NameNode services. You can check the JournalNode logs on all 3 nodes to find out which JournalNode is running without errors.
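The "move the file aside" step could be sketched roughly as below. This is only an illustration: the function name `backup_inprogress_edits` and the backup location are hypothetical, and it assumes the JournalNode on that host has already been stopped and that you have confirmed the file is actually corrupt.

```shell
#!/usr/bin/env bash
# Sketch only: move in-progress edit segments out of a JournalNode directory.
# Assumption: the JournalNode on this host is stopped before running this.

backup_inprogress_edits() {
    local journal_dir="$1"   # the ".../current" directory of the affected JournalNode
    local backup_dir="$2"    # hypothetical backup location, e.g. a directory under /tmp
    local moved=0 f

    mkdir -p "$backup_dir" || return 1
    for f in "$journal_dir"/edits_inprogress_*; do
        [ -e "$f" ] || continue          # the glob matched nothing
        mv -- "$f" "$backup_dir"/ || return 1
        moved=$((moved + 1))
    done
    echo "$moved"                        # number of files moved aside
}
```

It would be called with the journal directory of the affected node and a backup directory of your choice, for example `backup_inprogress_edits /hadoop/hdfs/journal/XXXXXXX/current /tmp/jn-edits-backup`, where XXXXXXX is the journal directory name on your cluster. Finalized `edits_<start>-<end>` segments and other files are left untouched.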


Created 12-05-2017 10:05 PM


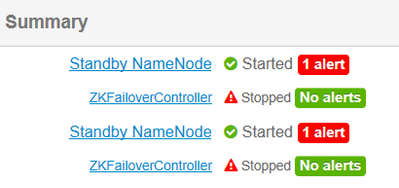

Good to see that both ZKFCs, all 3 JournalNodes and all 4 DataNodes are now running (green).

Regarding both NameNodes being down: we will need to investigate the NameNode logs to find out why they are not running.

So can you please share/attach the complete NameNode logs?
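Before attaching the full logs, it can help to pull out the most severe entries first. A minimal sketch, assuming the log lines start with a timestamp followed by the level, as in the excerpts in this thread (the function name `show_severe_entries` is made up for illustration):

```shell
#!/usr/bin/env bash
# Sketch only: print the last FATAL/ERROR entries from a NameNode log.
# Assumes lines shaped like "2017-12-05 21:46:14,814 FATAL namenode.FSEditLog ...".

show_severe_entries() {
    local logfile="$1"
    local count="${2:-20}"   # how many matching lines to show (default 20)

    grep -E '^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9:,]+ (FATAL|ERROR) ' "$logfile" \
        | tail -n "$count"
}
```

This only prints the first line of each entry; the stack trace lines that follow it in the log are still needed for a full diagnosis.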


Created 12-06-2017 08:55 AM


@Jay well done, your solution is brilliant. Both NameNodes are now up: one is active and the second is standby, as it should be.

Thank you so much for the time you spent on this case.

Created 12-06-2017 09:29 AM


@Jay, can you explain a little about the edits_inprogress_xxxxxxx file?

Created 12-06-2017 10:04 AM


In brief, edits_inprogress_<start transaction ID> is the edit log currently in progress. All transactions starting from <start transaction ID> are in this file, and all new incoming transactions are appended to it. HDFS pre-allocates space in this file in 1 MB chunks for efficiency and then fills it with incoming transactions, so you will probably see the file's size as a multiple of 1 MB. When HDFS finalizes the log segment, it truncates the unused portion that contains no transactions, so the finalized file shrinks.

More details about these files and their function can be found at: https://hortonworks.com/blog/hdfs-metadata-directories-explained/
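To see this on disk, a small sketch like the following lists each in-progress segment with its start transaction ID (taken from the file name) and its size in bytes, so you can compare the size against the 1 MB pre-allocation described above. The function name `list_inprogress_segments` is hypothetical, and the directory you pass in is whatever journal or NameNode "current" directory you want to inspect.

```shell
#!/usr/bin/env bash
# Sketch only: list in-progress edit segments as "<start txid> <size in bytes>".

list_inprogress_segments() {
    local dir="$1" f name size

    for f in "$dir"/edits_inprogress_*; do
        [ -e "$f" ] || continue              # no in-progress segment present
        name=${f##*/}                        # strip the directory part
        size=$(( $(wc -c < "$f") ))          # size in bytes, whitespace-normalized
        echo "${name#edits_inprogress_} $size"
    done
}
```

A size of 1048576 bytes or a multiple of it would be consistent with the pre-allocation behavior; it does not by itself say whether the file is healthy or corrupt.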
