I want to create a flow in NiFi that consumes data from 3 Kafka topics and produces it to 3 different Kafka topics, where each source topic is published to its own destination topic. For example:

- Kafka topic 1 --> produce to topic A
- Kafka topic 2 --> produce to topic B
- Kafka topic 3 --> produce to topic C
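Stated as data, the requirement above is a fixed one-to-one lookup from source topic to destination topic. The Python sketch below is illustrative only and is not NiFi code: `TOPIC_ROUTES` and `destination_topic` are hypothetical names, and `topic1`/`topicA` etc. are placeholders for the real topic names. It shows why one consumer plus one publisher should be enough in principle: each message only needs the name of the topic it was consumed from (exposed in NiFi as the `kafka.topic` attribute) to pick its destination.

```python
# Hypothetical routing table: source topic --> destination topic.
# Topic names are placeholders taken from the example above.
TOPIC_ROUTES = {
    "topic1": "topicA",
    "topic2": "topicB",
    "topic3": "topicC",
}


def destination_topic(source_topic, default="Not_found"):
    """Return the destination topic for a message consumed from source_topic."""
    return TOPIC_ROUTES.get(source_topic, default)


# One consumer reads all three topics; each record carries the topic it came
# from (in NiFi this is the kafka.topic FlowFile attribute).
records = [("topic1", b"a"), ("topic3", b"c"), ("topic2", b"b")]
for source, payload in records:
    print(source, "-->", destination_topic(source))
```

In NiFi the same table could live in an UpdateAttribute expression, or in a lookup service (for example the LookupAttribute processor with a SimpleKeyValueLookupService), so that adding a route does not mean editing a nested expression.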

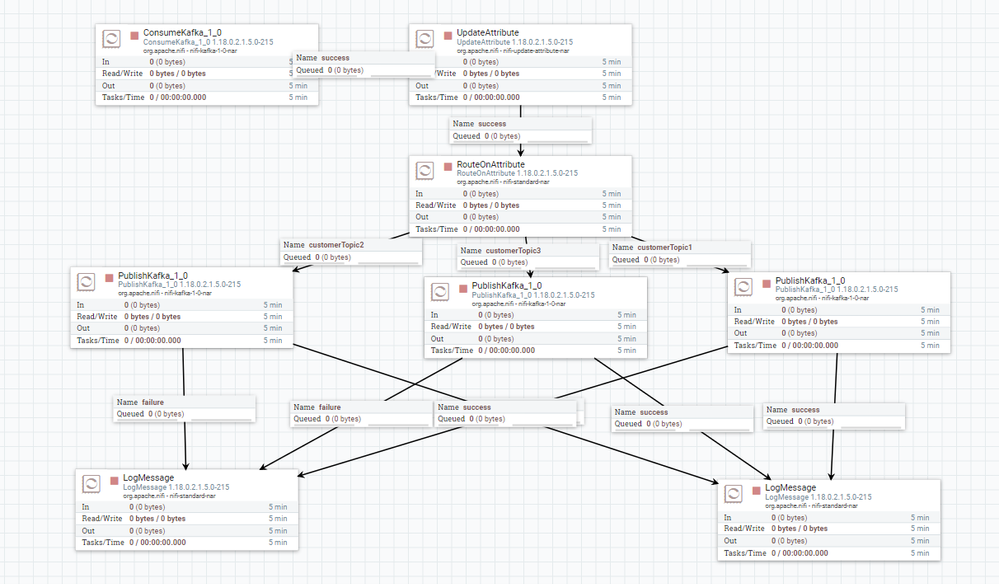

I want to use only one consumer processor and one producer processor. Right now I am using three PublishKafka processors.

Can anyone suggest a better, more optimized approach?

Specifically, how can I reduce the three publish processors to one while still consuming from multiple topics and publishing to multiple topics dynamically, so that topic 1 produces only to topic A, topic 2 only to topic B, and so on?

I tried using Expression Language. `kafka.topic` is the attribute that contains the consumed topic name, so I added if-else conditions for the different source topics, but the output was not the destination topic name; it was the whole string below.

In UpdateAttribute I added a property `customerKafkaTopic` with this value: `${kafka.topic:contains('alerts'):ifElse('${kafkaTopic1}',${kafka.topic:contains('events'):ifElse('${kafkaTopic2}',${kafka.topic:contains('ack'):ifElse('${kafkatopic3}','Not_found')}))}}`

I then used this `customerKafkaTopic` attribute as the topic name in PublishKafka, but it does not work.
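One thing worth checking in the expression above is delimiter pairing: it ends with `)}))}}`, which does not close the three `${ ... ifElse( ... )}` levels in order. The Python sketch below is an assumption-laden helper, not part of NiFi: `build_if_else_chain` and `delimiters_balanced` are hypothetical names, and the balance check is a rough syntactic test, not a NiFi parser. It generates the nested `ifElse` chain from a list of routes so each level is closed as `)}`, and passes the destination attributes as unquoted embedded expressions such as `ifElse(${kafkaTopic1}, ...)` rather than inside quotes. It also assumes the third attribute is named `kafkaTopic3` to match the other two (the post uses `kafkatopic3`, and attribute names are case-sensitive).

```python
def build_if_else_chain(attribute, routes, default):
    """Return a nested NiFi Expression Language ifElse chain as a plain string.

    routes is a list of (substring, destination) pairs checked in order;
    destination is inserted unquoted, e.g. "${kafkaTopic1}", so it is an
    embedded expression rather than a quoted string literal.
    """
    expr = f"'{default}'"  # innermost fallback is a quoted literal
    for substring, destination in reversed(routes):
        # Each level: ${attr:contains('x'):ifElse(<destination>, <rest of chain>)}
        expr = f"${{{attribute}:contains('{substring}'):ifElse({destination}, {expr})}}"
    return expr


def delimiters_balanced(expr):
    """Check that () and {} pair up, ignoring characters inside '...' literals."""
    closers = {")": "(", "}": "{"}
    stack = []
    in_literal = False
    for ch in expr:
        if ch == "'":
            in_literal = not in_literal
        elif in_literal:
            continue
        elif ch in "({":
            stack.append(ch)
        elif ch in closers:
            if not stack or stack.pop() != closers[ch]:
                return False
    return not stack and not in_literal


ROUTES = [
    ("alerts", "${kafkaTopic1}"),
    ("events", "${kafkaTopic2}"),
    ("ack", "${kafkaTopic3}"),  # assumed casing; the post uses kafkatopic3
]

expression = build_if_else_chain("kafka.topic", ROUTES, "Not_found")
print(expression)
print(delimiters_balanced(expression))  # True

# The expression as posted above, for comparison.
posted = "${kafka.topic:contains('alerts'):ifElse('${kafkaTopic1}',${kafka.topic:contains('events'):ifElse('${kafkaTopic2}',${kafka.topic:contains('ack'):ifElse('${kafkatopic3}','Not_found')}))}}"
print(delimiters_balanced(posted))  # False
```

The printed expression would then be the value of the `customerKafkaTopic` property in UpdateAttribute, with PublishKafka's Topic Name set to `${customerKafkaTopic}` (that property supports Expression Language against FlowFile attributes). This only works if `kafkaTopic1` to `kafkaTopic3` resolve to the destination topic names at runtime; the post does not say where they are defined.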

Please help with a working approach.