- Spark Text Analytics - Uncovering Data-Driven Topi...

Created on 02-21-2017 05:48 PM - edited on 02-12-2020 06:02 AM by SumitraMenon

What kind of text or unstructured data are you collecting within your company? Are you able to fully utilize this data to enhance predictive models, build reports/visualizations, and detect emerging trends found within the text?

Hadoop enables distributed, low-cost storage for this growing amount of unstructured data. In this post, I'll show one way to analyze unstructured data using Apache Spark. Spark is advantageous for text analytics because it provides a platform for scalable, distributed computing.

When it comes to text analytics, you have a few options. I like to categorize the techniques like this:

- Text Mining (e.g., text clustering, data-driven topics)

- Categorization (e.g., tagging unstructured data into categories and sub-categories; hierarchies; taxonomies)

- Entity Extraction (e.g., extracting patterns such as phrases, addresses, product codes, phone numbers, etc.)

- Sentiment Analysis (e.g., tagging text as positive, negative, or neutral, with varying levels of sentiment)

- Deep Linguistics (e.g., semantics: understanding causality, purpose, time, etc.)

Which technique you use typically depends on the business use case and the question(s) you are trying to answer. It's also common to combine these techniques.

This post will focus on text mining in order to uncover data-driven text topics.

For this example, I chose to analyze customer reviews of their airline experience. The goal of this analysis is to use statistics to find data-driven topics across a collection of customer airline reviews. Here's the process I followed:

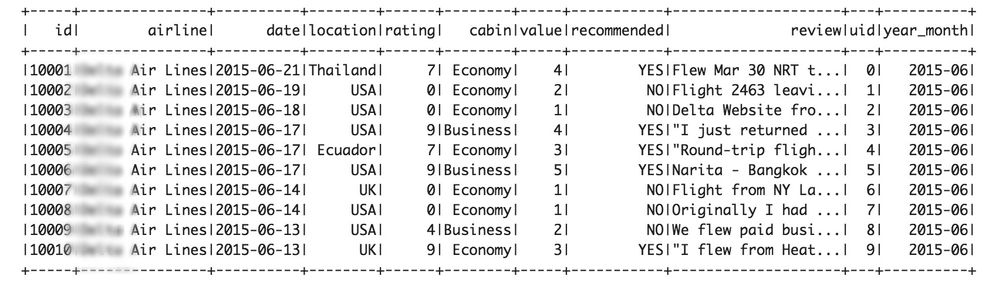

1. Load the Airline Data from HDFS

```python
rawdata = spark.read.load("hdfs://sandbox.hortonworks.com:8020/tmp/airlines.csv", format="csv", header=True)

# Show rawdata (as DataFrame)
rawdata.show(10)
```

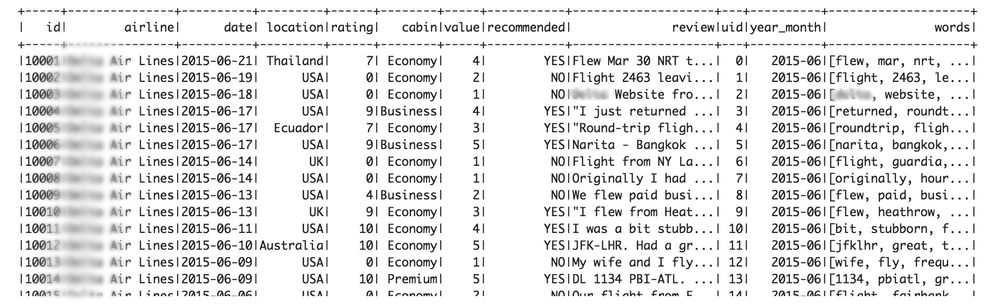

2. Pre-processing and Text Cleanup

I believe this is the most important step in any text analytics process. Here I convert each customer review into a list of words and remove all stopwords (a list of commonly used words that we want excluded from the analysis). I also remove special characters and punctuation, and lowercase the words so that everything is uniform when I create my term frequency matrix. As part of the text pre-processing phase, you may also choose to incorporate stemming or lemmatization (which groups the forms of a word, such as "take", "takes", "took", and "taking"). Depending on your use case, you could also include part-of-speech (POS) tagging, which identifies nouns, verbs, adjectives, and more. These POS tags can be used for filtering and to identify more advanced linguistic relationships.

```python
import re

from pyspark.sql.functions import struct, udf
from pyspark.sql.types import ArrayType, StringType


def cleanup_text(record):
    text = record[8]  # Review text (column position in this dataset)
    uid = record[9]   # Review ID (not used below)
    words = text.split() if text else []  # Guard against null reviews

    # Default list of Stopwords
    stopwords_core = ['a', 'about', 'above', 'after', 'again', 'against', 'all', 'am', 'an', 'and', 'any', 'are', 'arent', 'as', 'at',
        'be', 'because', 'been', 'before', 'being', 'below', 'between', 'both', 'but', 'by',
        'can', 'cant', 'come', 'could', 'couldnt',
        'd', 'did', 'didn', 'do', 'does', 'doesnt', 'doing', 'dont', 'down', 'during',
        'each',
        'few', 'finally', 'for', 'from', 'further',
        'had', 'hadnt', 'has', 'hasnt', 'have', 'havent', 'having', 'he', 'her', 'here', 'hers', 'herself', 'him', 'himself', 'his', 'how',
        'i', 'if', 'in', 'into', 'is', 'isnt', 'it', 'its', 'itself',
        'just',
        'll',
        'm', 'me', 'might', 'more', 'most', 'must', 'my', 'myself',
        'no', 'nor', 'not', 'now',
        'o', 'of', 'off', 'on', 'once', 'only', 'or', 'other', 'our', 'ours', 'ourselves', 'out', 'over', 'own',
        'r', 're',
        's', 'said', 'same', 'she', 'should', 'shouldnt', 'so', 'some', 'such',
        't', 'than', 'that', 'thats', 'the', 'their', 'theirs', 'them', 'themselves', 'then', 'there', 'these', 'they', 'this', 'those', 'through', 'to', 'too',
        'under', 'until', 'up',
        'very',
        'was', 'wasnt', 'we', 'were', 'werent', 'what', 'when', 'where', 'which', 'while', 'who', 'whom', 'why', 'will', 'with', 'wont', 'would',
        'y', 'you', 'your', 'yours', 'yourself', 'yourselves']

    # Custom List of Stopwords - Add your own here
    stopwords_custom = ['']
    stopwords = stopwords_core + stopwords_custom
    stopwords = [word.lower() for word in stopwords]

    # Remove special characters
    text_out = [re.sub('[^a-zA-Z0-9]', '', word) for word in words]
    # Remove stopwords and words of two characters or fewer
    text_out = [word.lower() for word in text_out if len(word) > 2 and word.lower() not in stopwords]
    return text_out


# Wrap the function as a UDF that returns an array of strings
udf_cleantext = udf(cleanup_text, ArrayType(StringType()))

# Pass the whole row as a struct so the function can index columns by position
clean_text = rawdata.withColumn("words", udf_cleantext(struct([rawdata[x] for x in rawdata.columns])))
```

This will create an array of strings (words), which can be used within our Term-Frequency calculation.

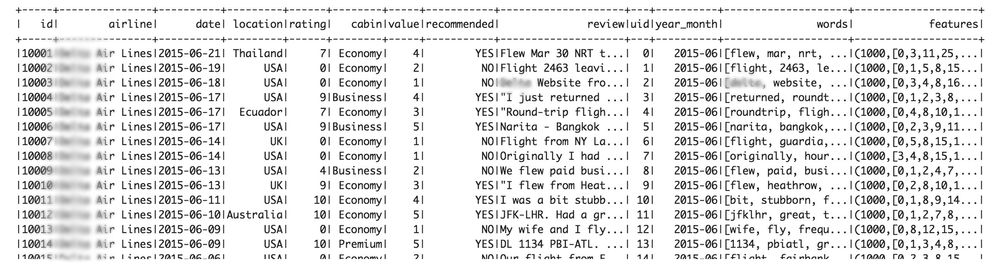
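To see what the cleanup does to a single review, here is a minimal plain-Python sketch of the same two steps (strip non-alphanumeric characters, then drop stopwords and short words). The sample sentence, the abbreviated stopword set, and the helper name `clean_words` are made up for illustration; they are not from the airline dataset.

```python
import re

# Abbreviated, illustrative stopword set (the full list above is much longer)
SAMPLE_STOPWORDS = {"the", "was", "and", "but", "for", "cant"}


def clean_words(text, stopwords=SAMPLE_STOPWORDS, min_len=3):
    """Mirror the two list comprehensions used in cleanup_text."""
    # Step 1: strip everything that is not a letter or digit
    stripped = [re.sub(r"[^a-zA-Z0-9]", "", w) for w in text.split()]
    # Step 2: lowercase, then drop short words and stopwords
    return [w.lower() for w in stripped
            if len(w) >= min_len and w.lower() not in stopwords]


sample = "The flight was delayed, but the crew was GREAT!"
print(clean_words(sample))  # ['flight', 'delayed', 'crew', 'great']
```

Punctuation attached to a word ("delayed,", "GREAT!") is removed before the stopword check, which is why the stopword list contains forms like "cant" rather than "can't".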

3. Generate a TF-IDF (Term Frequency Inverse Document Frequency) Matrix

In this step, I calculate the TF-IDF. Our goal here is to put a weight on each word in order to assess its significance. This algorithm down-weights words that appear in many of the reviews. For example, if the word "airline" appears in every customer review, then it has little power to differentiate one review from another. By contrast, the words "mechanical" and "failure" (as an example) may appear in only a small subset of customer reviews, and are therefore more important in identifying a topic of interest.

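```python
from pyspark.ml.feature import CountVectorizer, IDF  # HashingTF is needed only for Option 1

# Term Frequency Vectorization - Option 1 (HashingTF), left commented out:
#hashingTF = HashingTF(inputCol="words", outputCol="rawFeatures", numFeatures=20)
#featurizedData = hashingTF.transform(clean_text)

# Term Frequency Vectorization - Option 2 (CountVectorizer):
cv = CountVectorizer(inputCol="words", outputCol="rawFeatures", vocabSize=1000)
cvmodel = cv.fit(clean_text)
featurizedData = cvmodel.transform(clean_text)

# Keep the vocabulary so term indices can be mapped back to words later
# (sc is the SparkContext that Zeppelin and the pyspark shell predefine)
vocab = cvmodel.vocabulary
vocab_broadcast = sc.broadcast(vocab)

# Inverse Document Frequency: rescale the raw term counts
idf = IDF(inputCol="rawFeatures", outputCol="features")
idfModel = idf.fit(featurizedData)
rescaledData = idfModel.transform(featurizedData)  # TF-IDF
```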

The TF-IDF step produces a "features" column, which contains a vector of term weights, one for each vocabulary word found in the associated customer review.

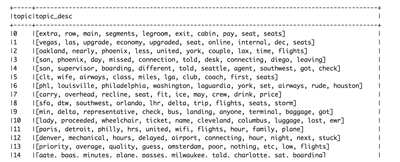
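The down-weighting can be checked by hand. The sketch below computes TF-IDF for three tiny made-up documents in plain Python, assuming the smoothed formula documented for Spark ML's IDF, idf(t) = log((N + 1) / (df(t) + 1)), where N is the number of documents and df(t) is the number of documents containing term t. The documents and the function name `tf_idf` are illustrative only.

```python
import math
from collections import Counter

# Three made-up "reviews", already cleaned into word lists
docs = [
    ["airline", "mechanical", "failure"],
    ["airline", "seat", "legroom"],
    ["airline", "seat", "upgrade"],
]


def tf_idf(docs):
    n_docs = len(docs)
    # Document frequency: number of documents containing each term
    df = Counter(term for doc in docs for term in set(doc))
    idf = {t: math.log((n_docs + 1) / (count + 1)) for t, count in df.items()}
    # Weight = raw term count in the document * idf of the term
    return [{t: tf * idf[t] for t, tf in Counter(doc).items()} for doc in docs]


weights = tf_idf(docs)
for doc_weights in weights:
    print(doc_weights)
```

Here "airline" appears in every document and gets a weight of 0, while "mechanical", which appears in only one document, gets a higher weight than "seat", which appears in two.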

4. Use LDA to Cluster the TF-IDF Matrix

LDA (Latent Dirichlet Allocation) is a topic model that infers topics from a collection of unstructured text. The output of this algorithm is k topics, each described by a weighted set of terms, which correspond to the most significant and distinct themes across your set of customer reviews.

```python
from pyspark.ml.clustering import LDA

# Generate 25 Data-Driven Topics:
# "em" = expectation-maximization
lda = LDA(k=25, seed=123, optimizer="em", featuresCol="features")
ldamodel = lda.fit(rescaledData)

ldamodel.isDistributed()
ldamodel.vocabSize()

ldatopics = ldamodel.describeTopics()

# Show the top 25 Topics
ldatopics.show(25)
```

Based on my airline data, this code produces a table of 25 topics, each listing its top term indices and their weights.

Keep in mind that these topics are data-driven (using statistical techniques) and do not require external datasets, dictionaries, or training datasets to generate. Because of the statistical nature of this process, some business understanding and inference need to be applied to these topics in order to create a clear description for business users. I've relabeled a few of these as an example:

Topic 0: Seating Concerns (more legroom, exit aisle, extra room)

Topic 1: Vegas Trips (including upgrades)

Topic 7: Carryon Luggage, Overhead Space Concerns

Topic 12: Denver Delays, Mechanical Issues
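Labels like these come from reading each topic's top terms. `describeTopics()` reports terms as indices into the CountVectorizer vocabulary, so the indices need to be mapped back to words first. A minimal plain-Python sketch of that lookup follows; the vocabulary, the topic rows, and the helper name `topic_words` are hypothetical stand-ins, not output from the airline data.

```python
# Hypothetical vocabulary, in the order returned by cvmodel.vocabulary
vocab = ["flight", "seat", "legroom", "bag", "overhead", "delay"]

# Hypothetical rows shaped like describeTopics() output:
# (topic, termIndices, termWeights)
topic_rows = [
    (0, [1, 2], [0.12, 0.09]),
    (1, [3, 4], [0.10, 0.07]),
]


def topic_words(rows, vocab):
    """Map each topic's term indices to (word, weight) pairs."""
    return {
        topic: [(vocab[i], w) for i, w in zip(indices, weights)]
        for topic, indices, weights in rows
    }


for topic, terms in topic_words(topic_rows, vocab).items():
    print(topic, terms)
```

With the words in view, a topic whose top terms are "seat" and "legroom" can reasonably be labeled as a seating concern; that final labeling step remains a human judgment.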

So how can you improve these topics?

- Spend time on the text pre-processing phase

- Remove stopwords

- Incorporate stemming

- Consider using part-of-speech tags

- Experiment with k, the number of topics in the LDA algorithm. Too few topics may not give you the granularity you need, while too many can introduce redundant or very similar topics.
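As one illustration of the stemming suggestion, here is a deliberately naive suffix-stripping sketch in plain Python. It is not the Porter algorithm or any library's stemmer; the suffix list and the function name `naive_stem` are assumptions chosen for illustration, and a real pipeline would more likely use an established stemmer or lemmatizer.

```python
# Naive suffix list, checked in order
SUFFIXES = ("ing", "es", "ed", "s")


def naive_stem(word, min_stem=3):
    """Strip the first matching suffix if enough of the word remains."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


words = ["delay", "delays", "delayed", "delaying"]
print([naive_stem(w) for w in words])  # ['delay', 'delay', 'delay', 'delay']
```

Grouping these forms means they share one column in the term-frequency matrix instead of four. Irregular forms such as "took" are not handled by suffix stripping; those need a lemmatizer.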

5. What can you do with these results?

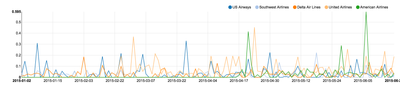

Look for trends in the Data-Driven Topics: You may start to notice an increase or decrease in a specific topic. For example, if you notice a growing trend for one of your topics (e.g., mechanical/maintenance-related issues out of JFK Airport), then you may need to investigate further to find out why. Text analytics can also help to uncover root causes for these mechanical issues (if you can get ahold of technician notes or maintenance logs). Or what if you notice a data-driven topic that discusses rude flight attendants or airline cleanliness? This may indicate an issue with a flight crew. Below, I have included a screenshot showing the trends for Topic 12 (Denver, Mechanical/Maintenance issues). You can see spikes for the various airlines, which indicate when customers were complaining about mechanical or maintenance-related issues:

Reporting & Visualization

As another quick example, below I show how you can use Apache Zeppelin to create a time series for each topic, along with a dropdown box so that an airline (or another attribute of interest) can be selected.
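The counts behind such a time series can be sketched in plain Python. The example assumes each review has already been assigned a month, an airline, and a dominant topic (for instance, the highest-weighted entry in its LDA topic distribution); the records and the function name `topic_trend` are made up for illustration.

```python
from collections import Counter

# Hypothetical (month, airline, dominant_topic) records
reviews = [
    ("2016-01", "Airline A", 12),
    ("2016-01", "Airline A", 0),
    ("2016-02", "Airline A", 12),
    ("2016-02", "Airline A", 12),
    ("2016-02", "Airline B", 12),
]


def topic_trend(records, topic, airline=None):
    """Count reviews per month for one topic, optionally for one airline."""
    counts = Counter(
        month
        for month, carrier, dominant in records
        if dominant == topic and (airline is None or carrier == airline)
    )
    return sorted(counts.items())


print(topic_trend(reviews, topic=12, airline="Airline A"))
# [('2016-01', 1), ('2016-02', 2)]
```

The optional `airline` argument plays the role of the dropdown box: leaving it unset counts the topic across all airlines.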

Enhance Predictive Models with Text

Lastly, and likely most important, these text topics can be used to enhance your predictive models. Since each topic can be its own variable, you are now able to use these within your predictive model. As an example, if you add these new variables to your model, you may find that departure location, airline, and a topic related to mechanical issues are most predictive of whether a customer gives you a high or low rating.
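A minimal sketch of the idea in plain Python: each review's topic weights become named variables next to its existing attributes. It assumes you already have a per-review topic distribution (in Spark ML, `ldamodel.transform()` adds one in a `topicDistribution` column by default); the record, the weights, and the helper name `add_topic_features` are hypothetical.

```python
def add_topic_features(record, topic_distribution, prefix="topic_"):
    """Return a copy of the record with one new variable per topic."""
    enriched = dict(record)  # Copy so the original record is left unchanged
    for i, weight in enumerate(topic_distribution):
        enriched[f"{prefix}{i}"] = weight
    return enriched


# Hypothetical review attributes and a 3-topic distribution
review = {"airline": "Airline A", "departure": "DEN", "rating": 2}
row = add_topic_features(review, [0.1, 0.7, 0.2])
print(row)
```

The enriched rows can then be fed to whatever model you already use, with `rating` as the target and the `topic_*` columns as additional predictors.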

Thanks for reading and please let me know if you have any feedback! I'm also working on additional examples to illustrate categorization, sentiment analytics, and how to use this data to enhance predictive models.

References:

https://spark.apache.org/docs/latest/api/python/pyspark.mllib.html#pyspark.mllib.clustering.LDA

https://spark.apache.org/docs/latest/api/python/pyspark.mllib.html#pyspark.mllib.feature.HashingTF

https://spark.apache.org/docs/2.0.2/api/python/pyspark.ml.html#pyspark.ml.feature.CountVectorizer

https://github.com/zaratsian/PySpark/blob/master/text_analytics_datadriven_topics.json

Created on 04-24-2017 03:48 AM


Could you please share the complete code for this text analysis? I am new to Apache Spark and would like to understand this code step by step by executing myself. You can reach me at rsp378@nyu.edu. Thanks!

Created on 05-08-2017 05:40 PM


The complete code is in the Zeppelin Spark Python notebook referenced here: https://github.com/zaratsian/PySpark/blob/master/text_analytics_datadriven_topics.json