Distributed processing of a DataFrame with PySpark

Labels: Apache Spark

Created 05-13-2016 02:45 PM

Hello,

I would like to know how (with what method or line of code) I can verify that my processing is executed on all the nodes of my cluster with PySpark.

Here is my code:

    from pyspark.sql.types import *
    from pyspark.sql import Row

    # Read the file and split each line into comma-separated fields
    rdd = sc.textFile('hdfs:../personne.txt')
    rdd_split = rdd.map(lambda x: x.split(','))

    # Build one Row per line, then turn the RDD into a DataFrame
    rdd_people = rdd_split.map(lambda x: Row(name=x[0],age=int(x[1]),ca=int(x[2])))
    df_people = sqlContext.createDataFrame(rdd_people)
    df_people.registerTempTable("people")

    # Bring all rows back to the driver
    df_people.collect()

Created on 05-13-2016 08:30 PM - edited 08-18-2019 06:21 AM

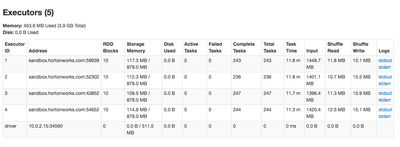

@Andrew Sears's answer is correct. Once you bring up the Spark Web UI (http://{driver-node}:4040 while the application is running), you can navigate to the Executors tab, which shows statistics about the driver and each executor, as in the screenshot above. Note that port 4040 serves the UI of a live application; the Spark History Server, which shows the same pages for completed applications, listens on port 18080 by default. When running Hortonworks Data Platform (HDP), you can reach the History Server from the Spark service page by clicking "Quick Links" and then the "Spark History Server UI" button. Following that, find your specific job under "App ID".
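The same per-executor numbers are also exposed as JSON by the monitoring REST API that ships with the Spark UI (`/api/v1/applications/[app-id]/executors`), which may be handy if you want a scripted check rather than a visual one. The sketch below is only an illustration: the helper names `fetch_executors` and `tasks_per_host` are made up for this example, it assumes Python 3, and it assumes the executor entries carry the `id`, `hostPort` and `totalTasks` fields and that the driver entry has the id `driver` (or `<driver>` on older releases).

```python
import json
from urllib.request import urlopen


def fetch_executors(base_url, app_id):
    """Download the executor list of one application from the Spark REST API.

    base_url is e.g. "http://{driver-node}:4040"; app_id is the application ID
    shown in the UI. Both are placeholders you must fill in yourself.
    """
    url = "{}/api/v1/applications/{}/executors".format(base_url.rstrip("/"), app_id)
    with urlopen(url) as response:
        return json.loads(response.read().decode("utf-8"))


def tasks_per_host(executors):
    """Sum the totalTasks counter per worker host, skipping the driver entry."""
    totals = {}
    for executor in executors:
        if executor.get("id") in ("driver", "<driver>"):
            continue  # the driver schedules tasks, it does not run them
        # hostPort looks like "hostname:port"; keep only the hostname
        host = executor.get("hostPort", "unknown").rsplit(":", 1)[0]
        totals[host] = totals.get(host, 0) + executor.get("totalTasks", 0)
    return totals
```

Calling `tasks_per_host(fetch_executors(base_url, app_id))` after the job has run should then list every worker host that received tasks; a host of the cluster that is missing from the result, or present with a count of 0, did not take part in the processing.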

Created 05-13-2016 07:27 PM

If you are looking for a way to monitor the job and determine which nodes it ran on, how many executors it used, and so on, you can see this in the Spark Web UI, which the driver serves at http://<sparkhost>:4040 while the application is running.

http://spark.apache.org/docs/latest/monitoring.html

http://stackoverflow.com/questions/35059608/pyspark-on-cluster-make-sure-all-nodes-are-used

cheers,

Andrew
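For a check from inside the PySpark shell itself, one possible approach is to have every partition report the hostname of the machine that processed it. This is a minimal sketch, not an official Spark facility: `tag_with_host` and `records_per_host` are hypothetical helper names, and the result only reflects where the partitions of that particular RDD were scheduled.

```python
import socket
from operator import add


def tag_with_host(records):
    """Runs on an executor: yield (hostname, number of records in this partition)."""
    yield (socket.gethostname(), sum(1 for _ in records))


def records_per_host(rdd):
    """Return {hostname: record count} for the partitions of the given RDD."""
    return dict(rdd.mapPartitions(tag_with_host).reduceByKey(add).collect())
```

With the code from the question, `records_per_host(rdd_split)` would return one entry per host that processed at least one partition. Keep in mind that a small input file is split into only a few partitions, so it can legitimately run on fewer nodes than the cluster has; repartitioning the RDD first (for example `rdd_split.repartition(20)`, where 20 is an arbitrary number) gives the scheduler more tasks to spread out.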

Created 05-19-2016 08:04 AM

Thank you very much

Created 05-19-2016 08:05 AM

A very big thank you
