hash issue while using S3 as Storage Backend for HDFS

Labels: Apache Hadoop

Created on 10-05-2018 04:27 PM - edited 08-17-2019 08:18 PM

Hi All,

I am facing the issue below while creating a directory on HDFS with Minio S3 as the storage backend:

com.amazonaws.AmazonClientException: Unable to verify integrity of data upload. Client calculated content hash (contentMD5: 1B2M2Y8AsgTpgAmY7PhCfg== in base 64) didn't match hash (etag: null in hex) calculated by Amazon S3. You may need to delete the data stored in Amazon S3. (metadata.contentMD5: null, md5DigestStream: com.amazonaws.services.s3.internal.MD5DigestCalculatingInputStream@2d6861a6, bucketName: hadoopsa, key: dindi2/): Unable to verify integrity of data upload. Client calculated content hash (contentMD5: 1B2M2Y8AsgTpgAmY7PhCfg== in base 64) didn't match hash (etag: null in hex) calculated by Amazon S3. You may need to delete the data stored in Amazon S3.

I get the error below when adding a file to HDFS:

root@hemant-insa:~# hdfs dfs -put abc.txt s3a://sample/

put: saving output on abc.txt._COPYING_: com.amazonaws.AmazonClientException: Unable to verify integrity of data upload. Client calculated content hash (contentMD5: 1B2M2Y8AsgTpgAmY7PhCfg== in base 64) didn't match hash (etag: null in hex) calculated by Amazon S3. You may need to delete the data stored in Amazon S3. (metadata.contentMD5: 1B2M2Y8AsgTpgAmY7PhCfg==, md5DigestStream: null, bucketName: sample, key: abc.txt._COPYING_): Unable to verify integrity of data upload. Client calculated content hash (contentMD5: 1B2M2Y8AsgTpgAmY7PhCfg== in base 64) didn't match hash (etag: null in hex) calculated by Amazon S3. You may need to delete the data stored in Amazon S3. (metadata.contentMD5: 1B2M2Y8AsgTpgAmY7PhCfg==, md5DigestStream: null, bucketName: sample, key: abc.txt._COPYING_)
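A note on reading the error: the client-side value `1B2M2Y8AsgTpgAmY7PhCfg==` appears in both failures, and it is the base64-encoded MD5 of zero bytes of input. That suggests the request body was empty in both cases (a directory marker for `dindi2/`, and possibly an empty or not-yet-written `abc.txt`). Since the server-side value is reported as `etag: null`, the comparison would fail whatever the content was. The sketch below reproduces the client-side value locally; `content_md5_b64` is a hypothetical helper name, and it assumes `openssl` and `base64` are installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: base64-encoded MD5 of whatever arrives on stdin,
# i.e. the same encoding the error message shows for contentMD5.
content_md5_b64() {
  openssl dgst -md5 -binary | base64
}

# Hash of an empty payload (zero bytes).
printf '' | content_md5_b64   # prints 1B2M2Y8AsgTpgAmY7PhCfg==
```

Running `content_md5_b64 < abc.txt` would show whether the local file itself hashes to the same value, which would mean the file is empty.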

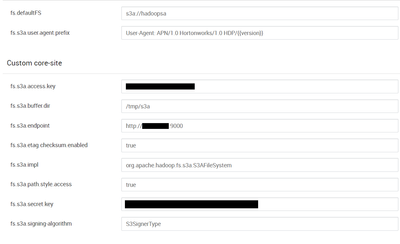

I have configured the properties as attached.

Any hints/solutions - @Predrag Minovic @Jagatheesh Ramakrishnan @Pardeep @Neeraj Sabharwal @Anshul Sisodia

Created 10-08-2018 08:39 AM

Complete list of properties configured in core-site -

- fs.s3a.access.key=
- fs.s3a.secret.key=
- fs.s3a.endpoint=
- fs.s3a.path.style.access=false
- fs.s3a.attempts.maximum=20
- fs.s3a.connection.establish.timeout=5000
- fs.s3a.connection.timeout=200000
- fs.s3a.paging.maximum=5000
- fs.s3a.threads.max=10
- fs.s3a.socket.send.buffer=8192
- fs.s3a.socket.recv.buffer=8192
- fs.s3a.threads.keepalivetime=60
- fs.s3a.max.total.tasks=5
- fs.s3a.multipart.size=100M
- fs.s3a.multipart.threshold=2147483647
- fs.s3a.multiobjectdelete.enable=true
- fs.s3a.buffer.dir=/tmp/s3a
- fs.s3a.block.size=64M
- fs.s3a.impl=org.apache.hadoop.fs.s3a.S3AFileSystem
- fs.AbstractFileSystem.s3a.impl=org.apache.hadoop.fs.s3a.S3A
- fs.s3a.readahead.range=64K
- fs.s3a.etag.checksum=true

Created 10-08-2018 11:36 AM

Try the changes below to your put command; the target path may be what is causing the hash mismatch.

I believe you want to write abc.txt to the sample folder in the s3a bucket hadoopsa, since you have already set hadoopsa as your defaultFS.

So you should use one of the commands below:

hdfs dfs -put abc.txt /sample/ # the sample folder must already exist before you run this command

OR

hdfs dfs -put abc.txt s3a://hadoopsa/sample/

In your command, putting the file directly into s3a://sample/ treats sample as the bucket name and tries to write to the root of that bucket.
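To illustrate the point about bucket versus folder, here is a minimal sketch of how an s3a:// URI splits into a bucket (the part right after the scheme) and an object key (everything after the first slash). `split_s3a_uri` is a hypothetical helper written only for this illustration; it is not part of Hadoop and does not contact any server.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split an s3a:// URI into bucket and key.
# The URI authority is read as the bucket, the path as the object key.
split_s3a_uri() {
  local rest="${1#s3a://}"      # drop the scheme
  local bucket="${rest%%/*}"    # text before the first slash
  local key=""
  case "$rest" in
    */*) key="${rest#*/}" ;;    # everything after the first slash
  esac
  printf 'bucket=%s key=%s\n' "$bucket" "$key"
}

split_s3a_uri "s3a://sample/"            # bucket=sample key=
split_s3a_uri "s3a://hadoopsa/sample/"   # bucket=hadoopsa key=sample/
```

If in doubt about which file system a bare path such as /sample/ resolves against, `hdfs getconf -confKey fs.defaultFS` prints the configured default.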