Support Questions

Hive query error

Labels: Apache Tez

Created on 03-31-2017 02:24 PM - edited 08-18-2019 01:43 AM

I am following this tutorial: https://hortonworks.com/hadoop-tutorial/how-to-refine-and-visualize-sentiment-data/

I am getting an error while executing this query:

```sql
create table IF NOT EXISTS tweets_sentiment stored as orc as
select tweet_id,
       case when sum( polarity ) > 0 then 'positive'
            when sum( polarity ) < 0 then 'negative'
            else 'neutral' end as sentiment
from l3
group by tweet_id;
```

I have added the JAR file with:

```sql
ADD JAR /usr/hdp/2.5.0.0-1245/hive/lib/json-serde-1.3.8-SNAPSHOT-jar-with-dependencies.jar;
```

This is the JSON data in the tmp/tweets_staging directory:

```json
{"tweet_id":847679798029631488,"created_unixtime":1490937587636,"created_time":"Fri Mar 31 05:19:47 +0000 2017","lang":"it","displayname":"CislScuolaOlbia","time_zone":"Rome","msg":"RT DanFrancesconi crescereperilfuturo finito il 2?? congresso Cisl dell Area Metrobo lanuova segreteria con me Schincaglia agg Mochr??? "}
{"tweet_id":847679829088411648,"created_unixtime":1490937595041,"created_time":"Fri Mar 31 05:19:55 +0000 2017","lang":"en","displayname":"Texas_Stella","time_zone":"","msg":"RT GotSanctuary Australia will bar its banks from collective bargaining with Apple AAPL"}
```

Error:

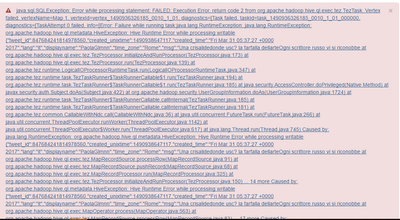

```
java.sql.SQLException: Error while processing statement: FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.tez.TezTask. Vertex failed, vertexName=Map 1, vertexId=vertex_1490936326185_0010_1_01, diagnostics=[Task failed, taskId=task_1490936326185_0010_1_01_000000, diagnostics=[TaskAttempt 0 failed, info=[Error: Failure while running task:java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing writable {"tweet_id":847684241814978560,"created_unixtime":1490938647117,"created_time":"Fri Mar 31 05:37:27 +0000 2017","lang":"it","displayname":"PaolaGlmnn","time_zone":"Rome","msg":"Una crisalidedonde usc? la farfalla dellarteOgni scrittore russo vi si riconobbe
	at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.initializeAndRunProcessor(TezProcessor.java:173)
	at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.run(TezProcessor.java:139)
	at org.apache.tez.runtime.LogicalIOProcessorRuntimeTask.run(LogicalIOProcessorRuntimeTask.java:347)
	at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable$1.run(TezTaskRunner.java:194)
	at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable$1.run(TezTaskRunner.java:185)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)
	at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable.callInternal(TezTaskRunner.java:185)
	at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable.callInternal(TezTaskRunner.java:181)
	at org.apache.tez.common.CallableWithNdc.call(CallableWithNdc.java:36)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
	at java.lang.Thread.run(Thread.java:745)
Caused by: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing writable {"tweet_id":847684241814978560,"created_unixtime":1490938647117,"created_time":"Fri Mar 31 05:37:27 +0000 2017","lang":"it","displayname":"PaolaGlmnn","time_zone":"Rome","msg":"Una crisalidedonde usc? la farfalla dellarteOgni scrittore russo vi si riconobbe
	at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.processRow(MapRecordSource.java:91)
	at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.pushRecord(MapRecordSource.java:68)
	at org.apache.hadoop.hive.ql.exec.tez.MapRecordProcessor.run(MapRecordProcessor.java:325)
	at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.initializeAndRunProcessor(TezProcessor.java:150)
	... 14 more
Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing writable {"tweet_id":847684241814978560,"created_unixtime":1490938647117,"created_time":"Fri Mar 31 05:37:27 +0000 2017","lang":"it","displayname":"PaolaGlmnn","time_zone":"Rome","msg":"Una crisalidedonde usc? la farfalla dellarteOgni scrittore russo vi si riconobbe
	at org.apache.hadoop.hive.ql.exec.MapOperator.process(MapOperator.java:563)
	at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.processRow(MapRecordSource.java:83)
	... 17 more
Caused by: org.apache.hadoop.hive.serde2.SerDeException: Row is not a valid JSON Object - JSONException: Unterminated string at 258 [character 259 line 1]
	at org.openx.data.jsonserde.JsonSerDe.onMalformedJson(JsonSerDe.java:412)
	at org.openx.data.jsonserde.JsonSerDe.deserialize(JsonSerDe.java:174)
	at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.readRow(MapOperator.java:149)
	at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.access$200(MapOperator.java:113)
	at org.apache.hadoop.hive.ql.exec.MapOperator.process(MapOperator.java:554)
	... 18 more
], TaskAttempt 1 failed, info=[Error: Failure while running task:java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing writable {"tweet_id":847684241814978560,"created_unixtime":1490938647117,"created_time":"Fri Mar 31 05:37:27 +0000 2017","lang":"it","displayname":"PaolaGlmnn","time_zone":"Rome","msg":"Una crisalidedonde usc? la farfalla dellarteOgni scrittore russo vi si riconobbe
	at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.initializeAndRunProcessor(TezProcessor.java:173)
	at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.run(TezProcessor.java:139)
	at org.apache.tez.runtime.LogicalIOProcessorRuntimeTask.run(LogicalIOProcessorRuntimeTask.java:347)
	at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable$1.run(TezTaskRunner.java:194)
	at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable$1.run(TezTaskRunner.java:185)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)
	at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable.callInternal(TezTaskRunner.java:185)
	at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable.callInternal(TezTaskRunner.java:181)
	at org.apache.tez.common.CallableWithNdc.call(CallableWithNdc.java:36)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
	at java.lang.Thread.run(Thread.java:745)
Caused by: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing writable {"tweet_id":847684241814978560,"created_unixtime":1490938647117,"created_time":"Fri Mar 31 05:37:27 +0000 2017","lang":"it","displayname":"PaolaGlmnn","time_zone":"Rome","msg":"Una crisalidedonde usc? la farfalla dellarteOgni scrittore russo vi si riconobbe
	at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.processRow(MapRecordSource.java:91)
	at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.pushRecord(MapRecordSource.java:68)
	at org.apache.hadoop.hive.ql.exec.tez.MapRecordProcessor.run(MapRecordProcessor.java:325)
	at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.initializeAndRunProcessor(TezProcessor.java:150)
	... 14 more
Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing writable {"tweet_id":847684241814978560,"created_unixtime":1490938647117,"created_time":"Fri Mar 31 05:37:27 +0000 2017","lang":"it","displayname":"PaolaGlmnn","time_zone":"Rome","msg":"Una crisalidedonde usc? la farfalla dellarteOgni scrittore russo vi si riconobbe
	at org.apache.hadoop.hive.ql.exec.MapOperator.process(MapOperator.java:563)
	at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.processRow(MapRecordSource.java:83)
	... 17 more
Caused by: org.apache.hadoop.hive.serde2.SerDeException: Row is not a valid JSON Object - JSONException: Unterminated string at 258 [character 259 line 1]
	at org.openx.data.jsonserde.JsonSerDe.onMalformedJson(JsonSerDe.java:412)
	at org.openx.data.jsonserde.JsonSerDe.deserialize(JsonSerDe.java:174)
	at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.readRow(MapOperator.java:149)
	at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.access$200(MapOperator.java:113)
	at org.apache.hadoop.hive.ql.exec.MapOperator.process(MapOperator.java:554)
	... 18 more
], TaskAttempt 2 failed
```

Could someone please help me?

Created 03-31-2017 02:34 PM

By any chance, are you copying and pasting the queries into the Hive query editor, or are you typing them manually?

Sometimes extra (non-printable) characters get added to a query during copy and paste, and these can cause parsing issues.

Your error shows a parsing error at character position 258, so please check the query:

```
Caused by: org.apache.hadoop.hive.serde2.SerDeException: Row is not a valid JSON Object - JSONException: Unterminated string at 258 [character 259 line 1]
	at org.openx.data.jsonserde.JsonSerDe.onMalformedJson(JsonSerDe.java:412)
```
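One way to rule out copy-and-paste debris is to scan the query text for characters that are not printable. Below is a minimal sketch using only the Python standard library; `find_nonprintable` is a hypothetical helper, not part of Hive or any Hadoop tool. It flags characters such as no-break spaces and zero-width spaces, which commonly sneak in when pasting from a web page, while allowing ordinary spaces, tabs and line breaks.

```python
import unicodedata


def find_nonprintable(text: str) -> list:
    """Return (0-based index, repr of the character, Unicode name) for every
    character that str.isprintable() rejects, ignoring normal whitespace."""
    allowed_whitespace = {" ", "\t", "\n", "\r"}
    hits = []
    for i, ch in enumerate(text):
        if ch in allowed_whitespace:
            continue  # ordinary layout characters are fine in a query
        if not ch.isprintable():
            # Control characters have no Unicode name, hence the default.
            hits.append((i, repr(ch), unicodedata.name(ch, "UNKNOWN")))
    return hits


# A query with a no-break space pasted in where a normal space should be.
query = "select tweet_id\u00a0from l3;"
for index, char, name in find_nonprintable(query):
    print(index, char, name)
```

An empty result means the pasted text contains only visible characters and normal whitespace, which would point the investigation back at the data rather than the query.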

Created 03-31-2017 02:49 PM

I have tried typing the queries manually, but the error is still there. To be sure, I add the JAR file each time before executing the query.

With Regards

Created 03-31-2017 05:19 PM

Could you help me with another solution?

Created 03-31-2017 08:17 PM

@Sai Deepthi

I can see that a string in the data is not terminated with a closing quote, and that is the reason for the error.

I believe the following value of the "msg" column is not terminated:

"tweet_id":847684241814978560,"created_unixtime":1490938647117,"created_time":"Fri Mar 31 05:37:27 +0000 2017","lang":"it","displayname":"PaolaGlmnn","time_zone":"Rome","msg":"Una crisalidedonde usc?

If that is not the case, could you share your data? An "Unterminated string" error means the parser reached the end of the record (or command) before it found the closing quote.
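The "Unterminated string" message is consistent with a record that was split across physical lines: the JSON SerDe is handed one line of the text file per row, so a raw newline inside the "msg" value leaves the first fragment without its closing quote. A minimal sketch for locating such rows, assuming the data is newline-delimited JSON and using Python's standard `json` module (`find_bad_json_lines` is a hypothetical helper, not part of Hive or Hadoop):

```python
import json


def find_bad_json_lines(lines):
    """Yield (1-based line number, error message) for every non-blank line
    that is not a standalone JSON object."""
    for lineno, line in enumerate(lines, start=1):
        text = line.rstrip("\r\n")  # parse the record without its line terminator
        if not text.strip():
            continue  # blank lines are skipped
        try:
            obj = json.loads(text)
        except json.JSONDecodeError as exc:
            yield lineno, exc.msg
            continue
        if not isinstance(obj, dict):
            yield lineno, "not a JSON object"


# A record whose "msg" value contains a raw newline ends up on two lines.
sample = [
    '{"tweet_id":1,"msg":"ok"}\n',
    '{"tweet_id":2,"msg":"first part\n',
    'second part"}\n',
]
for lineno, error in find_bad_json_lines(sample):
    print(lineno, error)  # reports line 2 (unterminated string) and line 3
```

To check the real staging data, one option is to copy the file out of HDFS with `hadoop fs -get` and pass the opened file object to the function; the file name is whatever your staging file is called.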

Created 04-01-2017 05:51 AM

{"tweet_id":847684224781926402,"created_unixtime":1490938643056,"created_time":"Fri Mar 31 05:37:23 +0000 2017","lang":"it","displayname":"lavoromilano1","time_zone":"","msg":"SAP FI SENIOR CONSULTANT https//tco/1o7bFH92N8 FINCONS Spa Milano Nell???ottica di un potenziamento dell???organico il Gruppo ?? alla ri???"}

{"tweet_id":847684241814978560,"created_unixtime":1490938647117,"created_time":"Fri Mar 31 05:37:27 +0000 2017","lang":"it","displayname":"PaolaGlmnn","time_zone":"Rome","msg":"Una crisalidedonde usc?? la farfalla dellarteOgni scrittore russo vi si riconobbe

Dostoevskij

raccontiGogol https//tco/ygU33PW3uB"}

{"tweet_id":847684247867478021,"created_unixtime":1490938648560,"created_time":"Fri Mar 31 05:37:28 +0000 2017","lang":"it","displayname":"ITnewsNA","time_zone":"Rome","msg":"La Fiera del Baratto e dellUsato torna a Napoli Magazine Pragma https//tco/23W25hV7vn Napoli news"}

This is the data where the error occurs (displayname "PaolaGlmnn"). I am unable to figure out which character is invalid.

@Bala Vignesh N V

Created 04-01-2017 11:12 AM

@Sai Deepthi It seems there is an invisible character. Could you try viewing the file with `hadoop fs -cat filename | cat -v`? That should let you see the invisible characters.
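If you have copied the file to a local machine, a rough Python analogue can make hidden characters visible line by line. The hypothetical `show_invisible` below uses caret notation for control characters (a carriage return shows as `^M`, a tab as `^I`), writes non-ASCII characters as `\uXXXX` escapes, and marks the real end of each line with `$`. This output format is an assumption chosen for illustration; it is not byte-for-byte what `cat -v` prints (for example, `cat -v` uses `M-` notation for non-ASCII bytes and leaves tabs alone).

```python
def show_invisible(line: str) -> str:
    """Make hidden characters visible (loosely modelled on `cat -v`)."""
    out = []
    for ch in line.rstrip("\n"):  # drop the line terminator itself
        code = ord(ch)
        if code < 32:
            out.append("^" + chr(code + 64))  # caret notation: \r -> ^M, \t -> ^I
        elif code == 127:
            out.append("^?")  # DEL
        elif code > 127:
            out.append(f"\\u{code:04x}")  # any non-ASCII character
        else:
            out.append(ch)  # printable ASCII is left unchanged
    return "".join(out) + "$"  # '$' marks where the line really ends


print(show_invisible('"msg":"caff\u00e8\r\n'))  # prints: "msg":"caff\u00e8^M$
```

A record whose `$` appears before the closing `"}` is one that was cut short by a line break inside a value.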

Created on 04-01-2017 11:25 AM - edited 08-18-2019 01:43 AM

Yes, the characters are missing.

Will deleting that JSON file solve my error?

Created 04-01-2017 01:40 PM

@Sai Deepthi You can either delete the file or fix that particular row and reload it. Either way, that will solve your problem.
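For the "fix that particular row" option, one approach is to re-join the physical lines that belong to a single record before reloading the file. This is a minimal sketch under two assumptions: the file is meant to hold one JSON object per line, and the breaks come from raw newlines inside string values. `repair_split_records` is a hypothetical helper; it replaces each break with a space and sets aside any fragment that can never be completed.

```python
import json


def _parses_as_object(text):
    """True if text is exactly one JSON object."""
    try:
        return isinstance(json.loads(text), dict)
    except json.JSONDecodeError:
        return False


def repair_split_records(lines, joiner=" "):
    """Re-join records broken across lines. Returns (records, leftovers)."""
    records, leftovers, buf = [], [], ""
    for raw in lines:
        piece = raw.rstrip("\r\n")
        if not buf:
            if _parses_as_object(piece):
                records.append(piece)  # an intact one-line record
            elif piece.strip():
                buf = piece  # start of a record that continues on later lines
            continue
        candidate = buf + joiner + piece
        if _parses_as_object(candidate):
            records.append(candidate)  # the fragments now form a whole record
            buf = ""
        elif _parses_as_object(piece):
            # The new line is a complete record by itself, so the buffered
            # fragment can never be completed: keep it aside for manual review.
            leftovers.append(buf)
            records.append(piece)
            buf = ""
        else:
            buf = candidate  # still incomplete; keep accumulating
    if buf:
        leftovers.append(buf)
    return records, leftovers
```

The repaired records can be written back one per line and uploaded to the staging directory again (for example with `hadoop fs -put`). Anything in `leftovers` is a truncated record that needs a manual fix or can simply be dropped.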

Created 04-01-2017 05:49 PM

Thank you so much. It got solved.