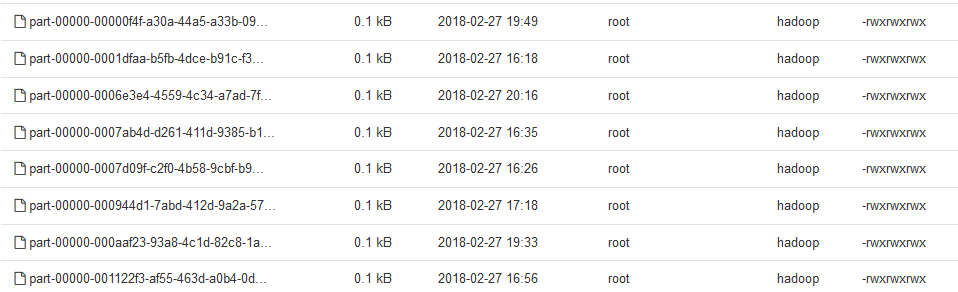

Hi all, I am processing Kafka data with Spark and pushing it into Hive tables. When I insert into the table, I face an issue in the warehouse location: a new part file is created for every insert command. As a result, a single select statement takes more than 30 minutes. Please share a solution to avoid this problem.

import spark.implicits._

// Each time, new data arrives from the Kafka consumer and is assigned to the jsonStr string.

val jsonStr = """{"b_s_isehp" : "false","event_id" : "4.0","l_bsid" : "88.0"}"""

val df = spark.read.json(Seq(jsonStr).toDS)

df.coalesce(1).write.mode("append").insertInto("tablename")
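
For comparison, here is a minimal sketch of one direction that might reduce the number of part files: buffer several Kafka messages and insert them with a single write, so that one part file is produced per batch instead of per message. This is only an assumption about what could help, not a confirmed fix. The object name `BatchedInsert`, the helper `toBatches`, the batch size, and the second sample record are all made up for illustration; `tablename` is the same placeholder as above.

```scala
import org.apache.spark.sql.SparkSession

object BatchedInsert {

  // Hypothetical helper: split buffered messages into groups of at most batchSize.
  def toBatches(messages: Seq[String], batchSize: Int): Seq[Seq[String]] =
    messages.grouped(batchSize).toSeq

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("batched-insert-sketch")
      .enableHiveSupport()
      .getOrCreate()
    import spark.implicits._

    // Assumption: messages from the Kafka consumer are collected here first
    // instead of being written one by one. The second record is invented.
    val buffered: Seq[String] = Seq(
      """{"b_s_isehp" : "false","event_id" : "4.0","l_bsid" : "88.0"}""",
      """{"b_s_isehp" : "true","event_id" : "5.0","l_bsid" : "89.0"}"""
    )

    // One insert per batch rather than one insert per message.
    // The batch size of 1000 is an arbitrary illustrative value.
    toBatches(buffered, 1000).foreach { batch =>
      val df = spark.read.json(batch.toDS)
      df.coalesce(1).write.mode("append").insertInto("tablename")
    }

    spark.stop()
  }
}
```

With this shape, the number of files grows with the number of batches rather than the number of messages, so a larger batch size means fewer files at the cost of data reaching the table later.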