Spark Structured Streaming example with CDE

Created on 08-28-2020 09:44 AM - edited 10-26-2020 09:45 AM

I recently had the opportunity to work with Cloudera Data Engineering to stream data from Kafka. What stood out was that I could deploy code without worrying much about how to configure the back-end components.

Demonstration

This demo pulls from the Twitter API using NiFi and writes the payload to a Kafka topic named "twitter". Spark Structured Streaming on Cloudera Data Engineering (CDE) then pulls from the twitter topic, extracts the text field from the payload (which is the tweet itself), and writes it back to another Kafka topic named "tweet".

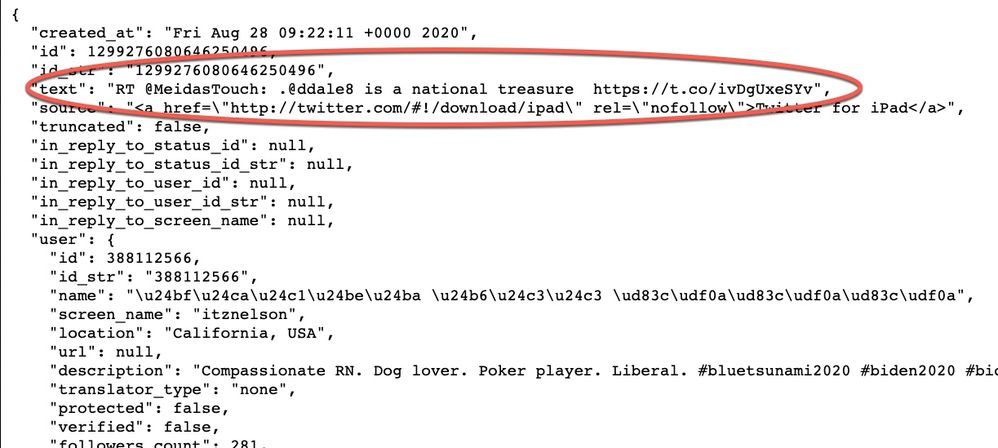

The following is an example of a twitter payload. The objective is to extract only the text field:

What is Cloudera Data Engineering?

Cloudera Data Engineering (CDE) is a serverless service for Cloudera Data Platform that allows you to submit Spark jobs to an auto-scaling cluster. CDE enables you to spend more time on your applications, and less time on infrastructure.

How do I begin with Cloudera Data Engineering (CDE)?

Complete setup instructions here.

Prerequisites

- Access to CDE

- Some understanding of Apache Spark

- Access to a Kafka cluster

  - In this demo, I use the Cloudera DataHub Streams Messaging template for rapid deployment of a Kafka cluster on AWS

- An IDE

  - I use IntelliJ

  - I do provide the jar later on in this article

- Twitter API developer access: https://developer.twitter.com/en/portal/dashboard

- Setting up a Twitter stream

  - I use Apache NiFi deployed via Cloudera DataHub on AWS

Source Code

I posted all my source code here.

If you're not interested in building the jar, that's fine. I've made the job jar available here.

Oct 26, 2020 update - I added source code showing how to connect CDE to Kafka DH, available here. Users should be able to run the code as is, without needing a JAAS file or keytab.

Kafka Setup

This article is focused on Spark Structured Streaming with CDE. I'll be super brief here.

- Create two Kafka topics

  - twitter

    - This topic ingests the firehose data from the Twitter API

  - tweet

    - This topic receives the tweet text extracted by Spark Structured Streaming
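If you prefer to create the two topics from code rather than from a UI or CLI, the following is a minimal sketch using Kafka's `AdminClient`. The object name `CreateDemoTopics`, the helper `topicSpecs`, and the partition and replication counts are assumptions for illustration, not values from the original setup. The broker list reuses the example addresses given later in this article, and a secured DataHub cluster would also need security properties that are omitted here.

```scala
import java.util.Properties
import org.apache.kafka.clients.admin.{AdminClient, AdminClientConfig, NewTopic}
import scala.collection.JavaConverters._

// Hypothetical helper object; requires the kafka-clients library on the classpath.
object CreateDemoTopics {

  // Build one topic definition per name with the same partition/replication settings.
  def topicSpecs(names: Seq[String], partitions: Int, replication: Short): Seq[NewTopic] =
    names.map(name => new NewTopic(name, partitions, replication))

  def main(args: Array[String]): Unit = {
    val props = new Properties()
    // Example broker addresses; replace with your own cluster's brokers.
    props.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "kafka1:9092,kafka2:9092,kafka3:9092")

    val admin = AdminClient.create(props)
    try {
      // 3 partitions and replication factor 1 are assumed values, not recommendations.
      val specs = topicSpecs(Seq("twitter", "tweet"), 3, 1.toShort)
      admin.createTopics(specs.asJava).all().get() // block until both topics exist
    } finally {
      admin.close()
    }
  }
}
```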

NiFi Setup

This article is focused on Spark Structured Streaming with CDE. I'll be super brief here.

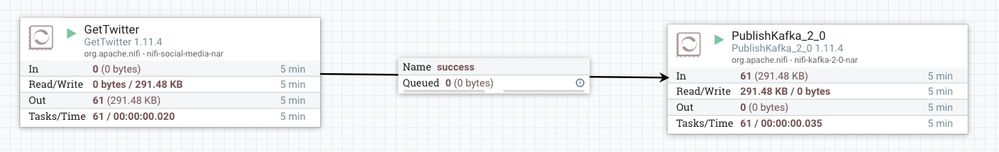

Use the GetTwitter processor (which requires a free Twitter API developer account) and write to the Kafka twitter topic.

Spark Code (Scala)

- Load up the Spark code on your machine from here: https://github.com/sunileman/spark-kafka-streaming

- Run sbt clean package

- A new jar will be available under target: spark-kafka-streaming_2.11-1.0.jar

- The jar is available here
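For orientation, a `build.sbt` that yields a jar with that name could look roughly like the sketch below. The project name, version, and Scala binary version follow from the jar name `spark-kafka-streaming_2.11-1.0.jar`; the exact Scala patch version and the Spark version are assumptions, and the repository's actual build file may differ. Note that `sbt package` does not bundle dependencies, so this assumes the Kafka connector is available on the cluster's classpath.

```scala
// build.sbt (sketch; versions below are assumptions, check the repository)
name := "spark-kafka-streaming"
version := "1.0"
scalaVersion := "2.11.12" // any 2.11.x gives the _2.11 suffix in the jar name

val sparkVersion = "2.4.5" // assumed; match the Spark version of your CDE virtual cluster

libraryDependencies ++= Seq(
  // Spark itself is supplied by the cluster at run time
  "org.apache.spark" %% "spark-sql" % sparkVersion % "provided",
  // Kafka source and sink for Structured Streaming
  "org.apache.spark" %% "spark-sql-kafka-0-10" % sparkVersion
)
```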

What does the code do?

It pulls from the source Kafka topic (twitter), extracts the text value from the payload (which is the tweet itself), and writes it to the target topic (tweet).
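The flow can be sketched roughly as follows. This is not the code from the repository; it is a minimal sketch assuming the payload arrives as a JSON string in the Kafka `value` column with a top-level `text` field, and that the job arguments arrive in the order source topic, target topic, brokers. The object name `KafkaStreamSketch`, the helper `extractTweetText`, and the checkpoint path are hypothetical, and any security settings your Kafka cluster requires are omitted.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, get_json_object}

object KafkaStreamSketch {

  // Keep only the tweet text from each JSON payload.
  // Kafka delivers `value` as bytes, so it is cast to a string first.
  // Payloads without a `text` field are dropped.
  def extractTweetText(payloads: DataFrame): DataFrame =
    payloads
      .select(get_json_object(col("value").cast("string"), "$.text").as("value"))
      .where(col("value").isNotNull)

  def main(args: Array[String]): Unit = {
    require(args.length >= 3, "Usage: <source-topic> <target-topic> <kafka-brokers>")
    val Array(sourceTopic, targetTopic, brokers) = args.take(3)

    val spark = SparkSession.builder.appName("KafkaStreamSketch").getOrCreate()

    // Read the raw Twitter payloads from the source topic.
    val payloads = spark.readStream
      .format("kafka")
      .option("kafka.bootstrap.servers", brokers)
      .option("subscribe", sourceTopic)
      .load()

    // Write the extracted text to the target topic.
    // The Kafka sink expects a `value` column and needs a checkpoint location.
    val query = extractTweetText(payloads).writeStream
      .format("kafka")
      .option("kafka.bootstrap.servers", brokers)
      .option("topic", targetTopic)
      .option("checkpointLocation", "/tmp/kafka-stream-sketch-checkpoint") // hypothetical path
      .start()

    query.awaitTermination()
  }
}
```

Keeping the transformation in its own function means it can be tried on a static DataFrame in a local Spark session before the job is pointed at Kafka.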

CDE

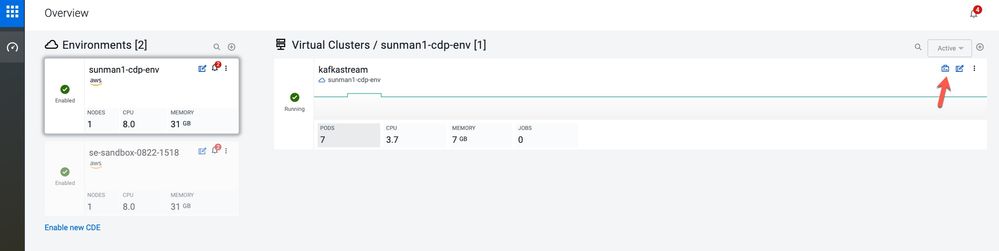

- Assuming CDE access is available, navigate to Virtual Clusters > View Jobs

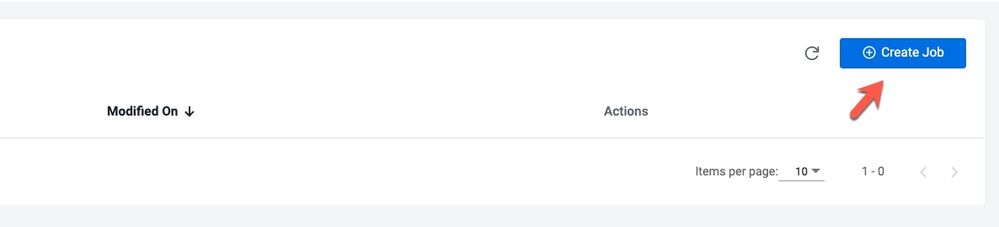

- Click on Create Job:

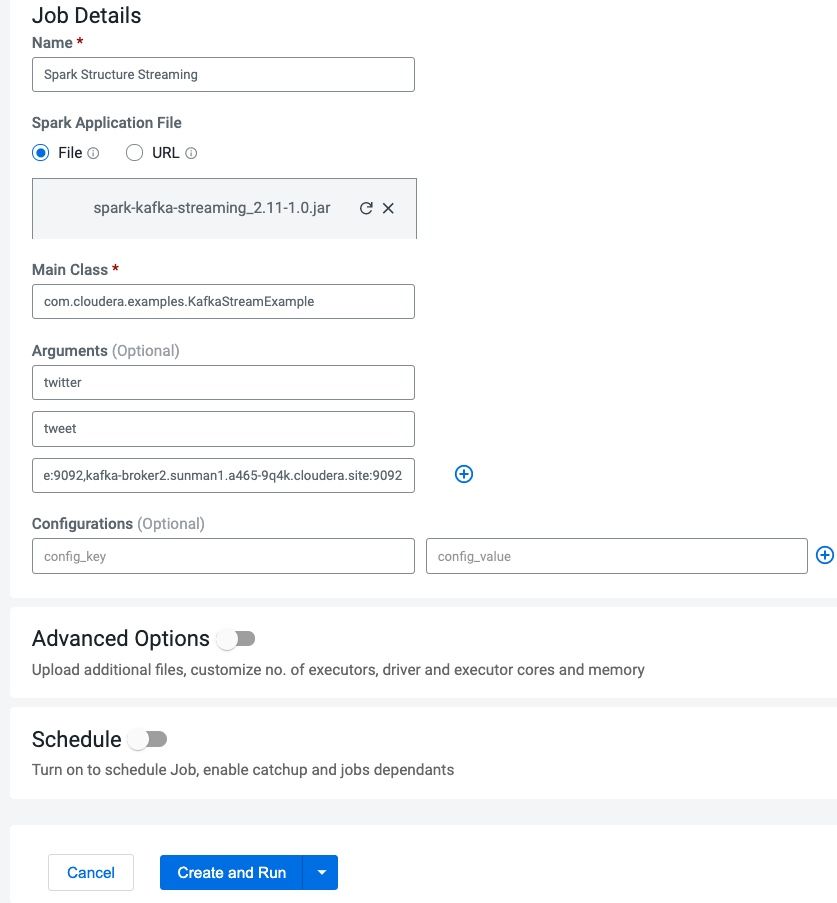

Job Details

- Name

  - Job Name

- Spark Application File

  - This is the jar created from the sbt package: spark-kafka-streaming_2.11-1.0.jar

  - Another option is to simply provide the URL where the jar is available

- Main Class

  - com.cloudera.examples.KafkaStreamExample

- Arguments

  - arg1

    - Source Kafka topic: twitter

  - arg2

    - Target Kafka topic: tweet

  - arg3

    - Kafka brokers: kafka1:9092,kafka2:9092,kafka3:9092
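Because the job takes its arguments positionally, a mistake in their order or format only shows up at run time. The following is a small sketch of how the three arguments could be validated up front. `JobArgs` and its `parse` method are hypothetical names introduced for illustration and are not part of the published job.

```scala
// Hypothetical holder for the three positional job arguments.
final case class JobArgs(sourceTopic: String, targetTopic: String, brokers: String)

object JobArgs {

  // A broker entry is expected to look like host:port.
  private val hostPort = "[^:,\\s]+:\\d+"

  // Returns the parsed arguments, or a message explaining what is wrong.
  def parse(args: Array[String]): Either[String, JobArgs] = args match {
    case Array(source, target, brokers) if brokers.split(",").forall(_.matches(hostPort)) =>
      Right(JobArgs(source, target, brokers))
    case Array(_, _, brokers) =>
      Left(s"Kafka brokers must be a comma-separated host:port list, got: $brokers")
    case _ =>
      Left("Usage: <source-topic> <target-topic> <kafka-brokers>")
  }
}
```

With the values from this article, `JobArgs.parse(Array("twitter", "tweet", "kafka1:9092,kafka2:9092,kafka3:9092"))` yields a `Right`, while a missing or malformed broker list yields a `Left` with an error message.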

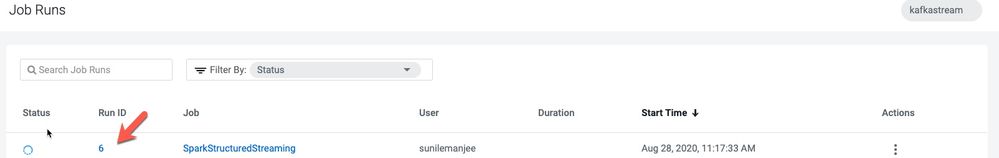

- From here, a job can either be created and run immediately or simply created. Click on Create and Run to view the job run:

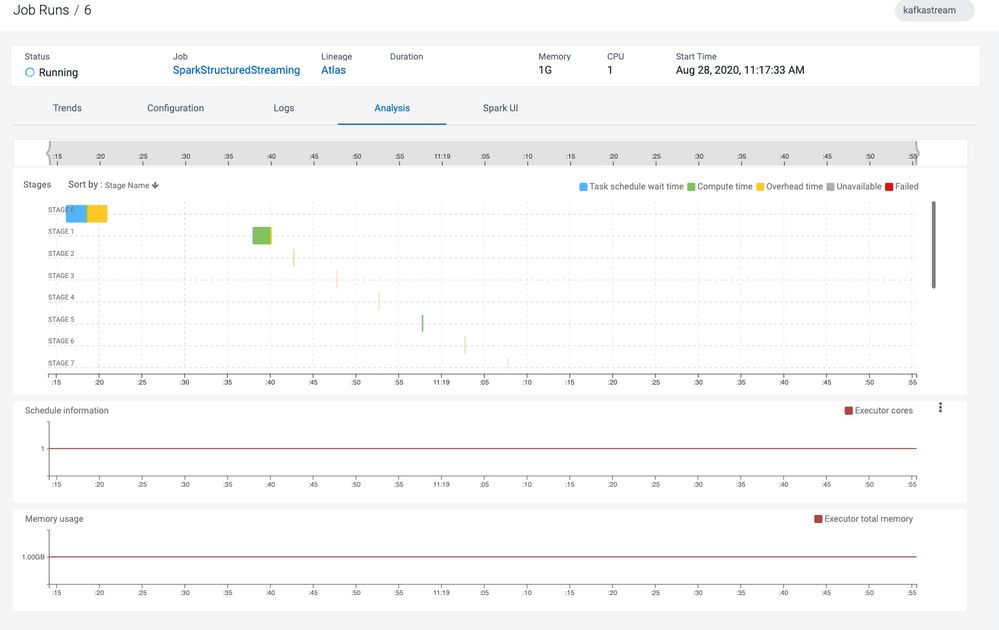

- To view metrics about the streaming job:

At this point, only the text (tweet) from the twitter payload is being written to the tweet Kafka topic.

That's it! You now have a Spark Structured Streaming job running on CDE, fully autoscaled. Enjoy!