- Spark Structured Streaming with Kafka in CDP Data ...


In this article, we'll walk through the steps required to connect a Spark Structured Streaming application to Kafka in CDP Data Engineering Experience (DEX). This article extends the official CDP documentation, Connecting Kafka clients to Data Hub provisioned clusters, to cover Spark applications run in Cloudera Data Engineering Experience.

Steps

- Obtain the FreeIPA certificate of your environment:

- From the CDP home page, navigate to Management Console > Environments

- Locate and select your environment from the list of available environments

- Click Actions

- Select Get FreeIPA Certificate from the drop-down menu. The FreeIPA certificate downloads.

- Add the FreeIPA certificate to a Java keystore: All clients that you want to connect to the Data Hub provisioned cluster need to use the certificate to communicate over TLS. The exact steps for adding the certificate to the truststore depend on the platform and key management software used. For example, you can use the Java keytool command line tool:

keytool -import -keystore [CLIENT_TRUSTSTORE.JKS] -alias [ALIAS] -file [FREEIPA_CERT]
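If you prefer to script this step, the keytool invocation above can be assembled in Python. This is only a minimal sketch: the function names, the alias `freeipa-ca`, and the certificate file name `freeipa.crt` are hypothetical placeholders, and only the keytool flags shown above are used. When run, keytool still prompts interactively for the keystore password and for confirmation that the certificate should be trusted.

```python
import subprocess


def keytool_import_command(truststore, alias, cert_file):
    """Build the argument list for the keytool import command shown above."""
    return [
        "keytool", "-import",
        "-keystore", truststore,
        "-alias", alias,
        "-file", cert_file,
    ]


def import_certificate(truststore, alias, cert_file):
    """Run keytool; it prompts on the terminal for the keystore password."""
    subprocess.run(keytool_import_command(truststore, alias, cert_file), check=True)


if __name__ == "__main__":
    # Hypothetical alias and certificate file name; substitute your own values.
    cmd = keytool_import_command("keystore.jks", "freeipa-ca", "freeipa.crt")
    print(" ".join(cmd))
    # To actually perform the import (requires keytool on the PATH):
    # import_certificate("keystore.jks", "freeipa-ca", "freeipa.crt")
```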

In this case, we will replace [CLIENT_TRUSTSTORE.JKS] with keystore.jks, since we want to name our newly created keystore file keystore.jks.

- Obtain CDP workload credentials: A valid workload username and password must be provided to the client; otherwise, it cannot connect to the cluster. Credentials can be obtained from the Management Console.

- From the CDP Home Page, navigate to Management Console > User Management.

- Locate and select the user account you want to use from the list of available accounts. The user details page displays information about the user.

- Find the Workload Username entry and note down the username.

- Find the Workload Password entry and click Set Workload Password.

- In the dialog box that appears, enter a new workload password, confirm the password and note it down.

- Fill out the Environment text box.

- Click Set Workload Password and wait for the process to finish.

- Click Close.

- Create a pyspark script to stream from a Kafka topic

script.py:

    from pyspark.sql import SparkSession

    # Create (or reuse) the Spark session for this job
    spark = SparkSession \
        .builder \
        .appName("PythonSQL") \
        .getOrCreate()

    # Read from Kafka over SASL_SSL, authenticating with the workload credentials
    df = spark \
        .readStream \
        .format("kafka") \
        .option("kafka.bootstrap.servers", "kafka_broker_hostname:9093") \
        .option("subscribe", "yourtopic") \
        .option("kafka.security.protocol", "SASL_SSL") \
        .option("kafka.sasl.mechanism", "PLAIN") \
        .option("kafka.ssl.truststore.location", "/app/mount/keystore.jks") \
        .option("kafka.ssl.truststore.password", "mypassword") \
        .option("kafka.sasl.jaas.config", 'org.apache.kafka.common.security.plain.PlainLoginModule required username="yourusername" password="mypassword";') \
        .load()

    # Kafka delivers key and value as binary; cast them to strings for display
    df = df.selectExpr("CAST(key AS STRING)", "CAST(value AS STRING)")

    # Print each micro-batch to stdout
    query = df.writeStream \
        .outputMode("append") \
        .format("console") \
        .start()

    # Block until the streaming query is stopped or fails
    query.awaitTermination()

Note: In the above code, we have specified our keystore location as /app/mount/keystore.jks in the kafka.ssl.truststore.location option. This is because when we upload our keystore.jks file to Cloudera Data Engineering Experience later, it will be uploaded to the /app/mount directory.

Note: We have specified our keystore password in the kafka.ssl.truststore.password option. This was the password that we provided for our keystore at the time of its creation.
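The Kafka options in script.py can also be collected in one place so that the credentials are not scattered through the reader chain. The following is a minimal sketch, not part of the original script: the helper names `build_jaas_config` and `build_kafka_options` are hypothetical, and how the values reach the job (for example, job arguments) is left open. The sketch rejects values containing a double quote rather than guessing at how they should be escaped in the JAAS string.

```python
def build_jaas_config(username, password):
    """Return the PlainLoginModule JAAS string used for SASL/PLAIN."""
    for value in (username, password):
        if '"' in value:
            # Escaping rules are not covered here, so refuse instead of guessing.
            raise ValueError("double quotes are not supported in this sketch")
    return (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="{username}" password="{password}";'
    )


def build_kafka_options(bootstrap_servers, topic, username, password,
                        truststore_password,
                        truststore_location="/app/mount/keystore.jks"):
    """Collect the same Kafka source options that script.py sets one by one."""
    return {
        "kafka.bootstrap.servers": bootstrap_servers,
        "subscribe": topic,
        "kafka.security.protocol": "SASL_SSL",
        "kafka.sasl.mechanism": "PLAIN",
        "kafka.ssl.truststore.location": truststore_location,
        "kafka.ssl.truststore.password": truststore_password,
        "kafka.sasl.jaas.config": build_jaas_config(username, password),
    }


if __name__ == "__main__":
    opts = build_kafka_options(
        "kafka_broker_hostname:9093", "yourtopic",
        "yourusername", "mypassword", "mypassword",
    )
    # Show only the option names so that no password is printed.
    for key in opts:
        print(key)
    # In the job itself the dictionary could be passed to the reader with:
    # df = spark.readStream.format("kafka").options(**opts).load()
```

With this approach, the workload password and the truststore password each appear in a single function call instead of being embedded in the option strings.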

Finally, note that we have specified our workload username and password in the kafka.sasl.jaas.config option.

- Create a Cloudera Data Engineering Experience Job

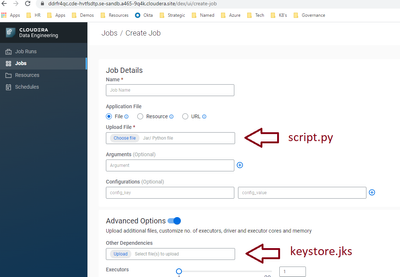

- From the CDP Home Page navigate to CDP Cloudera Data Engineering > Jobs > Create Job

- Upload the script.py file to Cloudera Data Engineering Experience (via the Job Details > Upload File text box) and the keystore.jks file (via the Advanced Options > Other Dependencies text box)

- Name the job using the Job details > Name Textbox

- Unselect Schedule

- Click Run now

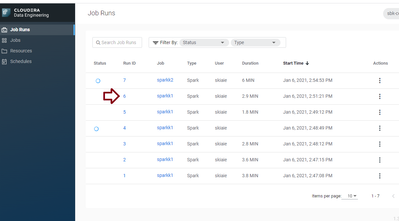

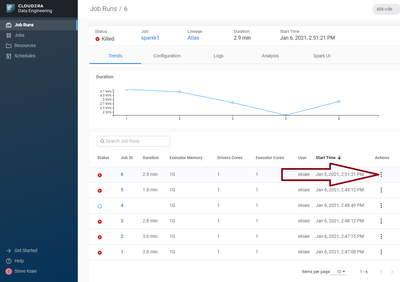

- Validate that the Job is running successfully

- Navigate to CDP Cloudera Data Engineering > Job Runs

- Drill down into the Run ID/Job

- Navigate to Actions > Logs

- Select the stdout tab
