how to force name node to be active

Labels: Apache Ambari, Apache Hadoop

Created on 12-04-2017 11:10 AM - edited 08-17-2019 08:09 PM

In our Ambari cluster, both NameNodes are in standby state.

To force one of them to become active, we ran:

hdfs haadmin -transitionToActive --forceactive master01

but it fails with:

Illegal argument: Unable to determine service address for namenode 'master01'

What does this error indicate, and how can we fix it?
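For context, `hdfs haadmin` expects a NameNode ID as listed in `dfs.ha.namenodes.<nameservice>` in hdfs-site.xml, not a hostname, and passing an ID that is not configured is one common way to hit "Unable to determine service address". Below is a minimal sketch for listing the configured IDs and their current state. The nameservice name `hdfsha` is an assumption (it matches the znode name that appears later in this thread), and `list_nn_states` is a hypothetical helper, not part of Hadoop.

```shell
# list_nn_states NAMESERVICE
# Prints "<namenode-id>: <state>" for every NameNode ID configured for the
# given nameservice. Hypothetical helper; only the hdfs subcommands are real.
list_nn_states() {
  nn_ns="$1"
  # dfs.ha.namenodes.<nameservice> is a comma-separated list of NameNode IDs
  nn_ids="$(hdfs getconf -confKey "dfs.ha.namenodes.${nn_ns}")" || return 1
  for nn_id in $(printf '%s' "$nn_ids" | tr ',' ' '); do
    # -getServiceState prints "active" or "standby" for a reachable NameNode
    printf '%s: %s\n' "$nn_id" "$(hdfs haadmin -getServiceState "$nn_id" 2>&1)"
  done
}

# Example (assumed nameservice name):
#   list_nn_states hdfsha
```

The IDs printed by this helper are the values that `-transitionToActive` takes. When automatic failover is enabled, `haadmin` normally refuses manual transitions unless `--forcemanual` is also given.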

Created 12-04-2017 02:25 PM

When we run namenode -format, we get:

17/12/04 14:23:34 ERROR namenode.NameNode: Failed to start namenode.
java.io.IOException: Timed out waiting for response from loggers
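In a QJM-based HA setup, the "loggers" in this message are the JournalNodes, so a timeout here usually points to JournalNodes that are down or unreachable from this host. A rough sketch for probing them follows. `journalnodes_from_uri` and `check_journalnodes` are hypothetical helpers, and the probe assumes an `nc` that supports `-z` and `-w`.

```shell
# journalnodes_from_uri URI
# Splits a qjournal://h1:p1;h2:p2;h3:p3/<journalId> URI into one host:port per line.
journalnodes_from_uri() {
  jn_uri="${1#qjournal://}"   # strip the scheme
  jn_uri="${jn_uri%%/*}"      # strip the trailing /<journalId>
  printf '%s\n' "$jn_uri" | tr ';' '\n'
}

# check_journalnodes URI
# Tries a TCP connect to each JournalNode (assumes nc supports -z and -w).
check_journalnodes() {
  for jn in $(journalnodes_from_uri "$1"); do
    if nc -z -w 3 "${jn%:*}" "${jn#*:}" >/dev/null 2>&1; then
      echo "reachable:   $jn"
    else
      echo "unreachable: $jn"
    fi
  done
}

# Example:
#   check_journalnodes "$(hdfs getconf -confKey dfs.namenode.shared.edits.dir)"
```

The URI comes from `dfs.namenode.shared.edits.dir`. A majority of the listed JournalNodes must respond for the NameNode to proceed.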

Created 12-04-2017 10:00 PM

Can you attach your NameNode log? What do your /etc/hosts entries look like? For example, IP/hostname:

103.114.28.13 master01.sys4.com
103.114.28.12 master03.sys4.com

or IP/hostname/alias:

103.114.28.13 master01.sys4.com master01
103.114.28.12 master03.sys4.com master03

What is the output of the following?

$ zkCli.sh

[zk: localhost:2181(CONNECTED) 0] ls /hadoop-ha

If this cluster is not critical, then you might have to go through these steps

Created on 12-04-2017 10:07 PM - edited 08-17-2019 08:08 PM

I ran it on the first machine, master01 (this is a standby machine):

[zk: localhost:2181(CONNECTED) 2] ls /hadoop-ha

[hdfsha]

Created 12-04-2017 10:14 PM

My feeling is that no matter which NameNode we start, every NameNode becomes standby, and this is the big problem.

Created 12-04-2017 10:23 PM

Regarding the hosts file: we do not use it. We have a DNS server, and all hosts resolve. We already checked this, and all IPs point to the right hostnames.
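One way to repeat that check from each NameNode host is sketched here. `check_resolution` is a hypothetical helper; `getent hosts` goes through the system resolver, so it reflects whatever nsswitch.conf selects (DNS, /etc/hosts, or both).

```shell
# check_resolution HOST...
# Reports whether each name resolves through the system resolver.
# Returns non-zero if any name fails to resolve.
check_resolution() {
  cr_rc=0
  for cr_host in "$@"; do
    if cr_addr="$(getent hosts "$cr_host")"; then
      # the first field of getent's output is the IP address
      printf 'OK   %s -> %s\n' "$cr_host" "${cr_addr%% *}"
    else
      printf 'FAIL %s does not resolve\n' "$cr_host"
      cr_rc=1
    fi
  done
  return "$cr_rc"
}

# Example (hostnames from this thread):
#   check_resolution master01.sys4.com master03.sys4.com
```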

Created 12-04-2017 10:22 PM

[zk: localhost:2181(CONNECTED) 2] ls /hadoop-ha
[hdfsha]

Next:

[zk: localhost:2181(CONNECTED) 2] get /hadoop-ha/hdfsha/ActiveStandbyElectorLock

What output do you get?

Created 12-04-2017 10:25 PM

[zk: localhost:2181(CONNECTED) 6] ls /hadoop-ha/hdfsha/ActiveStandbyElectorLock
Node does not exist: /hadoop-ha/hdfsha/ActiveStandbyElectorLock
[zk: localhost:2181(CONNECTED) 7] get /hadoop-ha/hdfsha/ActiveStandbyElectorLock
Node does not exist: /hadoop-ha/hdfsha/ActiveStandbyElectorLock
[zk: localhost:2181(CONNECTED) 8] ls /hadoop-ha/hdfsha
[]
[zk: localhost:2181(CONNECTED) 9]
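An empty /hadoop-ha/hdfsha with no ActiveStandbyElectorLock suggests that no failover controller currently holds the election lock. The same check can be scripted, since zkCli.sh accepts a command after the connection options. This is only a sketch: `zk_has_lock` is a hypothetical helper, and the `ZKCLI` variable and the localhost:2181 address are assumptions.

```shell
# Path to the ZooKeeper CLI; override if zkCli.sh is not on PATH (assumption).
ZKCLI="${ZKCLI:-zkCli.sh}"

# zk_has_lock SERVER NAMESERVICE
# Succeeds if ActiveStandbyElectorLock is listed under /hadoop-ha/<nameservice>.
zk_has_lock() {
  "$ZKCLI" -server "$1" ls "/hadoop-ha/$2" 2>/dev/null | grep -q 'ActiveStandbyElectorLock'
}

# Example:
#   zk_has_lock localhost:2181 hdfsha && echo "lock present" || echo "no lock held"
```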

Created 12-04-2017 10:34 PM

Can you delete the entry in ZooKeeper and restart?

[zk: localhost:2181(CONNECTED) 1] rmr /hadoop-ha

Validate that there is no hadoop-ha entry:

[zk: localhost:2181(CONNECTED) 2] ls /

Then restart all components of the HDFS service. This will create a new znode with the correct lock (held by the failover controller).

Also see https://community.hortonworks.com/questions/12942/how-to-clean-up-files-in-zookeeper-directory.html#
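Since the lock znode is created by the ZKFailoverController (ZKFC) that runs alongside each NameNode, it may be worth confirming that a ZKFC process is actually up on both NameNode hosts after the restart. A minimal sketch, assuming `pgrep` is available; `zkfc_running` and `report_zkfc` are hypothetical helpers.

```shell
# zkfc_running: succeeds if a ZKFC JVM is running on this host.
# DFSZKFailoverController is the main class name of the ZKFC daemon.
zkfc_running() {
  pgrep -f 'DFSZKFailoverController' >/dev/null 2>&1
}

# report_zkfc: prints a one-line status for this host
report_zkfc() {
  if zkfc_running; then
    echo "ZKFC is running on $(hostname)"
  else
    echo "ZKFC is NOT running on $(hostname)"
  fi
}

# Example: run on each NameNode host
#   report_zkfc
```

Two related notes: Hadoop's own command for initializing the HA znode is `hdfs zkfc -formatZK`, which is an alternative to removing it by hand, and on ZooKeeper 3.5 and later `rmr` is deprecated in favour of `deleteall`.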

Created 12-04-2017 10:38 PM

We removed /hadoop-ha from the master01 machine and restarted HDFS. I will update soon; from my experience, the restart takes around 20 minutes.

Created on 12-04-2017 10:57 PM - edited 08-17-2019 08:08 PM

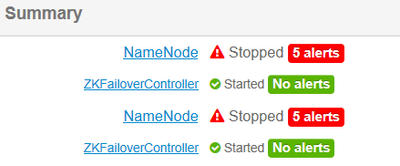

This is the status after a full HDFS restart. As you can see, both NameNodes are still standby :(