How to force a NameNode to be active

Labels: Apache Ambari, Apache Hadoop

Created on 12-04-2017 11:10 AM - edited 08-17-2019 08:09 PM

In our Ambari cluster, both NameNodes are in standby state.

To force one of them to become active, we ran:

hdfs haadmin -transitionToActive --forceactive master01

but we get:

Illegal argument: Unable to determine service address for namenode 'master01'

What does this indicate, and how can we fix it?

Created 12-04-2017 11:16 AM

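The correct syntax should be

hdfs haadmin -failover --forceactive <active NameNode> <standby NameNode>

Note the order of the inputs: the first argument is the currently active NameNode and the second is the standby that should take over.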

Hope that helps
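One likely cause of "Unable to determine service address for namenode 'master01'" is that `hdfs haadmin` expects NameNode IDs (the values listed under `dfs.ha.namenodes.<nameservice>` in hdfs-site.xml), not hostnames. A minimal sketch for listing the configured IDs, assuming the `hdfs` client is on the PATH and reads the cluster's configuration; the function name `list_namenode_ids` is made up for illustration:

```shell
# List "<nameservice> <NameNode ID> <RPC address>" for every HA NameNode.
# Assumption: `hdfs getconf` can read the cluster's hdfs-site.xml.
list_namenode_ids() {
  for ns in $(hdfs getconf -confKey dfs.nameservices | tr ',' ' '); do
    ids=$(hdfs getconf -confKey "dfs.ha.namenodes.${ns}")
    for id in $(echo "$ids" | tr ',' ' '); do
      addr=$(hdfs getconf -confKey "dfs.namenode.rpc-address.${ns}.${id}")
      echo "${ns} ${id} ${addr}"
    done
  done
}
```

If this shows, say, an ID `nn1` for master01 (a hypothetical value), that ID is what goes on the command line, for example `hdfs haadmin -getServiceState nn1`.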

Created 12-04-2017 11:28 AM

We get this:

hdfs haadmin -failover -forceactive master01 master03

Illegal argument: Unable to determine service address for namenode 'master01'

Created 12-04-2017 11:31 AM

# host master01

master01.sys4.com has address 103.114.28.13

# host master03

master03.sys4.com has address 103.114.28.12

Created 12-04-2017 11:39 AM

Why do we get "Unable to determine service address for namenode"?

Created 12-04-2017 12:02 PM

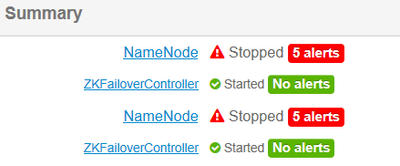

From your screenshot, both NameNodes are down, hence the failure of the failover commands. Since you enabled NameNode HA using Ambari, the ZooKeeper service instances and the ZooKeeper FailoverControllers (ZKFC) need to be up and running.

Just restart the NameNodes, though it is bizarre that neither is marked Active or Standby. Depending on whether the cluster is DEV or Prod, please take the appropriate steps to restart the NameNodes, because your cluster is unusable right now anyway.

In Ambari, use the HDFS "Restart All" command under Service Actions.
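To see what each NameNode reports before and after the restart, a small sketch along these lines may help. It assumes the NameNode IDs are known (they come from `dfs.ha.namenodes.<nameservice>`, not from the hostnames); `report_ha_state` is an illustrative helper name, not part of Hadoop:

```shell
# Print the HA state (active/standby) of each NameNode ID passed in.
# If the NameNode cannot be contacted, the error text is shown instead.
report_ha_state() {
  for nn_id in "$@"; do
    state=$(hdfs haadmin -getServiceState "$nn_id" 2>&1) || state="unreachable ($state)"
    echo "${nn_id}: ${state}"
  done
}
```

With hypothetical IDs `nn1` and `nn2`, the call would be `report_ha_state nn1 nn2`.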

Created 12-04-2017 12:15 PM

@Geoffrey, I already restarted HDFS, but without good results: after the restart the status is the same as before. What are the next steps to resolve this problem?

Created 12-04-2017 12:22 PM

@Geoffrey, given this bad status, what else can we do? (Yesterday we restarted all machines in the cluster and started the services in order, beginning with ZooKeeper and HDFS.) I am really stuck here.

Created 12-04-2017 12:46 PM

We also see this in the logs:

2017-12-04 12:37:33,544 FATAL namenode.FSEditLog (JournalSet.java:mapJournalsAndReportErrors(398)) - Error: recoverUnfinalizedSegments failed for required journal (JournalAndStream(mgr=QJM to [100.14.28.153:8485, 100.14.28.152:8485, 100.14.27.162:8485], stream=null)) java.io.IOException: Timed out waiting 120000ms for a quorum of nodes to respond.

Created 12-04-2017 01:25 PM

If this is a production environment, I would advise you to contact Hortonworks support.

How many nodes are in your cluster?

How many JournalNodes do you have in the cluster? Make sure it is an odd number.

Could you also confirm whether the Active and Standby NameNodes ever functioned at any point after you enabled HA?

Your log message indicates that the NameNode timed out while calling the JournalNodes. The NameNode must successfully reach a quorum of JournalNodes: at least 2 out of 3. So the call timed out on at least 2 of the 3.

This is a fatal condition for the NameNode, so by design it aborts.

There are multiple potential reasons for this timeout. Reviewing the logs from the NameNodes and JournalNodes would likely reveal more details.
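As a first check, it can help to see how many JournalNodes accept connections on the port shown in the log (8485). The sketch below is only an illustration: the helper names are invented, it assumes an `nc` that supports `-z` and `-w`, and an open port does not prove the JournalNode is healthy.

```shell
# Majority quorum for n JournalNodes: floor(n/2) + 1.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# Succeed if host ($1) accepts a TCP connection on port ($2) within 3 seconds.
probe_port() {
  nc -z -w 3 "$1" "$2" >/dev/null 2>&1
}

# Count reachable JournalNodes (given as host:port) and compare to the quorum.
# Returns success only if at least a quorum is reachable.
check_journal_quorum() {
  total=$#
  up=0
  for jn in "$@"; do
    if probe_port "${jn%:*}" "${jn##*:}"; then
      up=$((up + 1))
    else
      echo "unreachable: $jn"
    fi
  done
  need=$(quorum_size "$total")
  echo "reachable: $up/$total, quorum needs $need"
  [ "$up" -ge "$need" ]
}
```

Run from the NameNode host with the addresses from the log line, for example `check_journal_quorum 100.14.28.153:8485 100.14.28.152:8485 100.14.27.162:8485`.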

If it's a non-critical cluster, you can follow the steps below (they reformat HDFS, so existing data is lost).

Stop the HDFS service if it is running.

Start only the JournalNodes (they need to be made aware of the formatting).

On the first NameNode, switch to the hdfs user:

# su - hdfs

Format the NameNode:

$ hdfs namenode -format

Initialize the shared edits (for the JournalNodes):

$ hdfs namenode -initializeSharedEdits -force

Format ZooKeeper (to force ZooKeeper to reinitialize the HA state):

$ hdfs zkfc -formatZK -force

Using Ambari, restart the first NameNode.

On the second NameNode, force a sync with the first NameNode:

$ hdfs namenode -bootstrapStandby -force

On every DataNode, clear the data directory.

Restart the HDFS service.

Hope that helps
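The command-line part of the steps above could be wrapped in a small script that only prints the commands unless told otherwise, which makes it harder to run a destructive step by accident. This is a sketch, not a tested recovery tool: the function names and the `DRY_RUN` variable are invented here, and the Ambari actions (stopping HDFS, starting the JournalNodes, restarting the NameNodes) and the DataNode cleanup still have to be done separately.

```shell
# Run a command, or only print it unless DRY_RUN=0 is set (default: print only).
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    echo "would run: $*"
  fi
}

# First NameNode, as the hdfs user, with HDFS stopped and only the
# JournalNodes started. WARNING: these commands erase HDFS metadata.
reformat_first_namenode() {
  run hdfs namenode -format &&
  run hdfs namenode -initializeSharedEdits -force &&
  run hdfs zkfc -formatZK -force
}

# Second NameNode, after the first NameNode has been restarted.
bootstrap_second_namenode() {
  run hdfs namenode -bootstrapStandby -force
}
```

Calling `reformat_first_namenode` as-is lists the three commands in order; `DRY_RUN=0 reformat_first_namenode` would actually execute them.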