Support Questions

- Cloudera Community

- Support

- Support Questions

- how to implement and deploying our own sqoop fram...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

how to implement and deploying our own sqoop framework to connect databases dynamically(reusing same framework for importing and exporting data from /to different databases like oracle,casandra,mysql etc)

- Labels:

-

Apache Sqoop

Created 11-01-2016 08:09 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i need to use same job for other SQL databases or NOSQL databases

without creation new job only i have passing the parameters in the sqoop job (parameter are connection,driver connection,username,target directory)

sqoop job --create incjob12 --import--connect jdbc:mysql://localhost/test --driver com.mysql.jdbc.Driver --username it1 --password hadoop --table st1 --incremental lastmodified -check-column ts --target-dir sqin -m 1 --merge-key id

what all the thing i highlighted above i have to enter dynamically parameters to connect different databases

please share me how to create sqoop framework by passing our own parameters

please suggest me how to complete this frame work to connect different client connectors

today i need to complete this task please suggest me

thanks in advance

swathi.T

Created 11-01-2016 12:28 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am not sure exactly what your requirements are, but here goes...

1. You can set these parameters as OS environment variables for each of your db connections. For example, MYSQL_CONN=xx, MYSQL_DRIVER=xx, MYSQL_UU=xx, MYSQL_PWD=xx, MYSQL_TARGDIR=xx, ORA_CONN=xx, ORA_DRIVER=xx, ORA_UU=xx, etc.

Set these by using the export command, eg. export MYSQL_CONN=xx

Then you simply call the db params you want on the command line, eg.

sqoop job --create incjob12 --import--connect $MYSQL_CONN --driver $MYSQL_DRIVER --username $MYSQL_UU --password $MYSQL_PWD ...

2. You can do the same thing but with all params related to a db set as a single OS environment variable. Eg.

set $MYSQL="--connect jdbc:mysql://localhost/test --driver com.mysql.jdbc.Driver --username it1 --password hadoop"

and then run your sqoop job as

sqoop job --create incjob12 --import "$MYSQL" --table st1 --incremental lastmodified -check-column ts --target-dirsqin -m 1 --merge-key id

Note the quotes in both setting and invoking the OS environment variable MYSQL. This is because there are spaces in the value.

If I am not understanding your requirements, let me know.

Created 11-01-2016 12:28 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am not sure exactly what your requirements are, but here goes...

1. You can set these parameters as OS environment variables for each of your db connections. For example, MYSQL_CONN=xx, MYSQL_DRIVER=xx, MYSQL_UU=xx, MYSQL_PWD=xx, MYSQL_TARGDIR=xx, ORA_CONN=xx, ORA_DRIVER=xx, ORA_UU=xx, etc.

Set these by using the export command, eg. export MYSQL_CONN=xx

Then you simply call the db params you want on the command line, eg.

sqoop job --create incjob12 --import--connect $MYSQL_CONN --driver $MYSQL_DRIVER --username $MYSQL_UU --password $MYSQL_PWD ...

2. You can do the same thing but with all params related to a db set as a single OS environment variable. Eg.

set $MYSQL="--connect jdbc:mysql://localhost/test --driver com.mysql.jdbc.Driver --username it1 --password hadoop"

and then run your sqoop job as

sqoop job --create incjob12 --import "$MYSQL" --table st1 --incremental lastmodified -check-column ts --target-dirsqin -m 1 --merge-key id

Note the quotes in both setting and invoking the OS environment variable MYSQL. This is because there are spaces in the value.

If I am not understanding your requirements, let me know.

Created 11-01-2016 01:04 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thanks for giving reply

1. but i need to call dynamically this following parameter for oracle/sql means

"--connect jdbc:mysql://localhost/test --driver com.mysql.jdbc.Driver --username it1 --password hadoop" can i able to get the input from the user as like entering the details in the pop up box or as a input parameter

2. also if i has ten tables(t1,t2,t3,...t10) but i need to load only t1, t3, t5, t6, t10 table alone , is their any possibilities to do this

Thanks in advance...

Created 11-01-2016 06:53 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

[answer #2]

In this case I would put your sqoop command in a shell script and then pass parameters to that. It is similar to the question above, but everyone uses the same shell script but passes different parameters to it, which are picked up by the sqoop command text in the script.

Example

Script name: sqoop_job.sh

To run script:

./sqoop_job.sh "$MYSQL" st1 ts sqi

Script body:

sqoop job --create incjob12 --import ${1} --table ${2} --incremental lastmodified -check-column ${3} --target-dir ${4} -m 1 --merge-key idFor more on shell scripting and passing parameters:

Created 11-02-2016 04:20 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thank u greg

but i am not clear about this, if i has ten tables(t1,t2,t3,...t10) but i need to load only t1, t3, t5, t6, t10 table alone from a single script, is their any possibilities to do this

Thanks in advance...

Created on 11-02-2016 08:00 AM - edited 08-18-2019 05:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

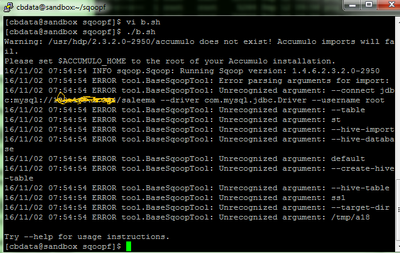

i did the same what u mention

first created the one script vi c1.sh

#!/bin/sh

MYSQL ="--connect jdbc:mysql://xxxxxx/sama --driver com.mysql.jdbc.Driver --username root"

export MYSQL

./c2.sh

then created the vi c2.sh

#!/bin/sh

sqoop import "$MYSQL" --table st1 --target-dir /tmp/sample222 -m 1

and running the ./c1.sh but it shows the folowing error

please tell how to solve this pblm

thanks in advance

Created 11-04-2016 12:18 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

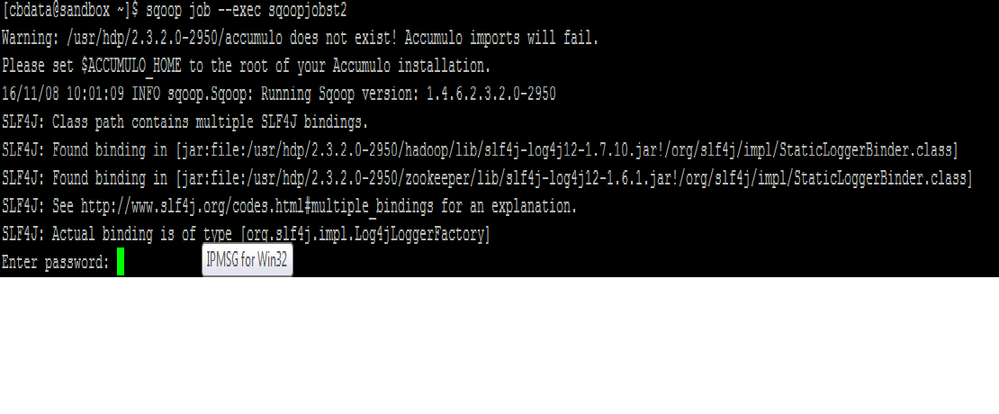

@swathi thukkaraju I have made a minor correction to my code example above. Each input param in the sqoop_job.sh must be ${1}, ${2} etc instead of $1, $2 etc.

So, use

sqoop job --create incjob12 --import ${1} --table ${2} --incremental lastmodified -check-column ${3} --target-dir ${4} --m 1--merge-key idand be sure to pass in "$MYSQL" in quotes because the value has spaces in it.

(I just tested this and it works)

Created 11-09-2016 04:43 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

how to remove password while executing sqoop job ????

Created 11-09-2016 05:01 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try using the option --password-file to remove the possibility of entering / exposing the password. Below is the link for creating the password file (link)