
how to read my hdfs data into nifi cluster

Labels: Apache NiFi

Created 06-25-2016 11:25 AM


I created two separate clusters:

1) a two-node NiFi cluster
2) a three-node Hadoop (HDP) cluster

I want to load my HDFS data into the NiFi cluster. Please explain which configurations need to change and how to connect the NiFi and Hadoop clusters.

Created on 06-25-2016 06:30 PM - edited 08-18-2019 05:15 AM


Hi Kishore,

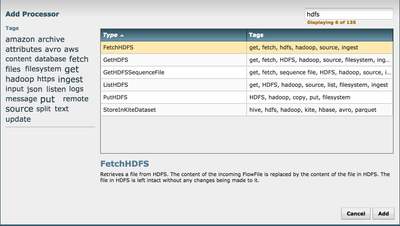

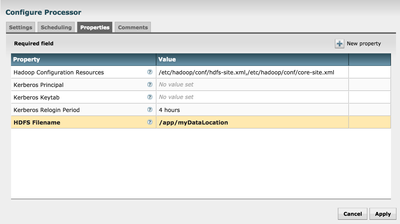

You could drop below processors as per your requirement on to your NiFi UI and configure them properly to pull the data from HDFS.

and to configure them, add hdfs, core property files location saved on to the local file system on nifi node(s), can use Ambari to download these from HDP cluster. pls find sample conf screenshot below:

Thanks!
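A minimal sketch, assuming Python 3 is available on a NiFi node: before pointing the HDFS processors at the copied files, you can check that core-site.xml really names the HDP NameNode by reading its `fs.defaultFS` property. The sample XML, the host `nn1.example.com`, and port 8020 are hypothetical placeholders, and the helper names are illustrative only, not part of NiFi or Hadoop.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Tuple
from urllib.parse import urlparse


def read_hadoop_property(xml_text: str, name: str) -> Optional[str]:
    """Return the <value> of the named <property> in a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    # Hadoop site files are a flat list of <property><name/><value/></property>.
    for prop in root.findall("property"):
        if prop.findtext("name") == name:
            return prop.findtext("value")
    return None


def namenode_address(core_site_xml: str) -> Tuple[Optional[str], Optional[int]]:
    """Split fs.defaultFS (e.g. hdfs://host:port) into host and port."""
    default_fs = read_hadoop_property(core_site_xml, "fs.defaultFS")
    if default_fs is None:
        raise ValueError("fs.defaultFS not found in core-site.xml")
    uri = urlparse(default_fs)
    # port is None when the URI has no explicit port (e.g. an HA nameservice).
    return uri.hostname, uri.port


# Hypothetical core-site.xml contents; real files would come from Ambari.
SAMPLE_CORE_SITE = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://nn1.example.com:8020</value>
  </property>
</configuration>"""

print(namenode_address(SAMPLE_CORE_SITE))  # ('nn1.example.com', 8020)
```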


Created 06-27-2016 04:53 PM


Hi Jobin,

1) I installed NiFi outside of Hadoop. Should I put the core-site.xml and hdfs-site.xml files in the NiFi bin folder or in some other folder? And which path should I enter when configuring the processor (for example /nifi/bin/core-site.xml or /etc/hadoop/conf/core-site.xml)?

2) How does it work when the data is streamed? Should it all go to the first NiFi node? If I have 100 MB/s of data, how is the load shared between these two systems? Do I need a load balancer?

Please explain how to resolve these issues.

Created 06-27-2016 05:29 PM


Split your ingest across different NiFi nodes depending on their location. From there, do some initial cleanup and send the data to Kafka, which can deliver it to your remote Hadoop cluster for landing in HDFS.
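To illustrate the first step, here is a hypothetical Python sketch that assigns each data source to the NiFi node in the same location, falling back to a default node when no local node exists. The source names, locations, and hostnames are made up; in practice this placement comes from where you deploy NiFi nodes and point your sources, not from code like this.

```python
from collections import defaultdict
from typing import Dict, List


def assign_sources_to_nodes(
    sources: Dict[str, str], nodes: Dict[str, str], fallback: str
) -> Dict[str, List[str]]:
    """Group data sources by the NiFi node that should ingest them.

    sources:  source name -> location of that source
    nodes:    location -> NiFi node hostname at that location
    fallback: node used when a source's location has no local NiFi node
    """
    plan: Dict[str, List[str]] = defaultdict(list)
    for source, location in sorted(sources.items()):
        plan[nodes.get(location, fallback)].append(source)
    return dict(plan)


# Hypothetical sources, locations, and node names.
sources = {"sensor-a": "east", "sensor-b": "west", "sensor-c": "east", "sensor-d": "south"}
nodes = {"east": "nifi-east", "west": "nifi-west"}
print(assign_sources_to_nodes(sources, nodes, fallback="nifi-east"))
# {'nifi-east': ['sensor-a', 'sensor-c', 'sensor-d'], 'nifi-west': ['sensor-b']}
```

Each node would then do its initial cleanup and publish to Kafka, with the Hadoop side consuming from Kafka and writing to HDFS.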

Created 06-27-2016 06:17 PM


Hi Timothy,

My NiFi cluster and my Hadoop cluster are separate, so how do I connect the two clusters, and where should I save the XML files on the NiFi side?

Created 06-27-2016 06:27 PM


You can save the config files in any directory on the NiFi node(s) and provide that path in the processor configuration.
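As a sketch of what that processor setting looks like, assuming both files are kept in one directory: the HDFS processors' Hadoop Configuration Resources property takes a comma-separated list of files, and the hypothetical helper below builds that value from a directory and fails early if a file is missing.

```python
import os
from typing import Sequence

# The two files discussed in this thread.
DEFAULT_FILES = ("core-site.xml", "hdfs-site.xml")


def hadoop_config_resources(conf_dir: str, files: Sequence[str] = DEFAULT_FILES) -> str:
    """Build a comma-separated list of config file paths for an HDFS processor.

    Raises FileNotFoundError if any file is missing, so a wrong directory is
    caught before the processor is started.
    """
    paths = [os.path.join(conf_dir, name) for name in files]
    missing = [p for p in paths if not os.path.isfile(p)]
    if missing:
        raise FileNotFoundError(f"missing Hadoop config files: {missing}")
    return ",".join(paths)
```

For example, if both files were copied to /etc/hadoop/conf, `hadoop_config_resources("/etc/hadoop/conf")` would return `/etc/hadoop/conf/core-site.xml,/etc/hadoop/conf/hdfs-site.xml`. The directory is only an example; any path readable by the user NiFi runs as should work.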

Created 06-27-2016 07:05 PM


Thanks, Jobin 🙂

Created 06-27-2016 07:08 PM


How does it work when the data is streamed? Should it all go to the first NiFi node? If I have 100 MB/s of data, how is the load shared between these two systems? Do I need a load balancer?


Created 06-28-2016 05:51 AM


Hi Kishore,

If it's a cluster, you create your flows in the NCM (NiFi Cluster Manager) UI, and those flows run on all the nodes in the cluster. Since you have only two nodes in the cluster (possibly just one worker node plus the NCM), there may not be much to load balance. Still, you can simulate a load balancer with the NiFi Site-to-Site protocol.

You can find more information on Site-to-Site protocol load balancing here:

https://community.hortonworks.com/questions/509/site-to-site-protocol-load-balancing.html

Thanks!
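To put rough numbers on the 100 MB/s question: if the NCM does not process data itself, a two-node setup may leave a single worker carrying the whole stream. The Python model below assumes data arrives as 1 MB batches dealt out round-robin; it is a simplification, not NiFi's actual Site-to-Site peer-selection logic, and the node names are hypothetical.

```python
from itertools import cycle
from typing import Dict, List


def round_robin(batches: List[float], nodes: List[str]) -> Dict[str, float]:
    """Send each batch (size in MB) to the next node in turn; return MB per node."""
    totals = {node: 0.0 for node in nodes}
    # zip stops when the batches run out; cycle repeats the node list forever.
    for size, node in zip(batches, cycle(nodes)):
        totals[node] += size
    return totals


# One second of a 100 MB/s stream, modeled as 100 batches of 1 MB.
one_second = [1.0] * 100
print(round_robin(one_second, ["node1", "node2"]))  # {'node1': 50.0, 'node2': 50.0}
print(round_robin(one_second, ["node1"]))           # {'node1': 100.0}
```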