nifi connector broken

- Labels: Apache NiFi

Created 08-31-2016 03:33 PM

I am following the tutorial linked below. I imported the template successfully, but when I try to run it, it throws this error:

"port INFO logs not in valid state."

The tutorial I am following is:

http://hortonworks.com/hadoop-tutorial/how-to-refine-and-visualize-server-log-data/

By the way, the tutorial also asks me to download a Python file, "generate_logs.py", but I don't see where it is used.

Created on 08-31-2016 05:51 PM - edited 08-19-2019 02:41 AM

I am not saying it will not work as is; however, without a defined path forward from those two output ports, the data will just queue. Looking at your screenshot, it does look like the dataflow is producing FlowFiles.

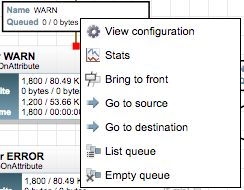

If you look at the stats on the process groups "log Generator" and "Data Enrichment", you will see data being produced and queued. The problem is that none of the components inside "Data Enrichment" are running.

If you double-click the "Data Enrichment" process group, you will be taken inside it. There you will see the stopped components, the invalid components, and the ~20,000 queued FlowFiles. You will need to start all the valid stopped components in this process group to get your data flowing all the way to the two PutHDFS processors outside the group.

Two output ports in the "Data Enrichment" process group are invalid. They are not necessary for this tutorial to work. I suggest you stop the "Filter WARN" and "Filter INFO" processors and delete the connections feeding these invalid output ports. If you have already run this flow and data is queued on the connections you want to delete, you will first need to right-click each connection and select "Empty queue"; only then can it be deleted.
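If you would rather script the cleanup than click through the UI, the steps above can be sketched roughly as follows. This is only a sketch under assumptions: it assumes the NiFi 1.x REST API (the endpoints may differ or be absent in the NiFi version shipped with the tutorial sandbox), and every ID, revision number, and the base URL are hypothetical placeholders that you would look up in your own instance. The function only builds the ordered list of requests; it does not send anything.

```python
from typing import Dict, List, Optional, Tuple

# (HTTP method, URL, JSON body or None)
Request = Tuple[str, str, Optional[dict]]


def build_cleanup_plan(
    api: str,
    group_id: str,
    filter_processors: Dict[str, int],
    dead_connections: Dict[str, int],
) -> List[Request]:
    """Build the ordered requests for the cleanup; performs no network I/O.

    api               -- base URL of the REST API (assumed NiFi 1.x layout)
    group_id          -- ID of the "Data Enrichment" process group
    filter_processors -- {processor ID: current revision version} for the
                         "Filter WARN" and "Filter INFO" processors
    dead_connections  -- {connection ID: current revision version} for the
                         connections feeding the invalid output ports
    """
    plan: List[Request] = []

    # 1. Stop the processors that feed the invalid output ports.
    for proc_id, version in filter_processors.items():
        plan.append((
            "PUT",
            f"{api}/processors/{proc_id}/run-status",
            {"revision": {"version": version}, "state": "STOPPED"},
        ))

    # 2. Empty each queue, then delete the connection.
    #    The drop request is asynchronous, so a real client should wait
    #    for it to finish before issuing the DELETE.
    for conn_id, version in dead_connections.items():
        plan.append(("POST", f"{api}/flowfile-queues/{conn_id}/drop-requests", None))
        plan.append(("DELETE", f"{api}/connections/{conn_id}?version={version}", None))

    # 3. Ask NiFi to start the components in the process group.
    plan.append((
        "PUT",
        f"{api}/flow/process-groups/{group_id}",
        {"id": group_id, "state": "RUNNING"},
    ))
    return plan


if __name__ == "__main__":
    # Hypothetical IDs and revision versions, for illustration only.
    for method, url, body in build_cleanup_plan(
        "http://localhost:8080/nifi-api",
        "data-enrichment-group-id",
        {"filter-warn-id": 0, "filter-info-id": 0},
        {"warn-connection-id": 0, "info-connection-id": 0},
    ):
        print(method, url, body)
```

Keeping the plan as plain data makes it easy to review the order of operations before pointing any HTTP client at a live instance.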

These examples were not published by Apache. I will try to find the person who wrote this tutorial and see if I can get them to update it.

Thanks,

Matt

Created 08-31-2016 06:47 PM

awesome solution mclark
