Support Questions (Cloudera Community)

PutHDFS is writing slowly

Labels: Apache NiFi

Created 08-15-2017 01:25 PM

I am reading data with GetHDFS, doing some processing, and writing the results back to HDFS.

Everything up to PutHDFS processes quickly, but PutHDFS writes the data to HDFS slowly.

Could you please let me know how to improve the write speed?

Created 08-15-2017 01:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

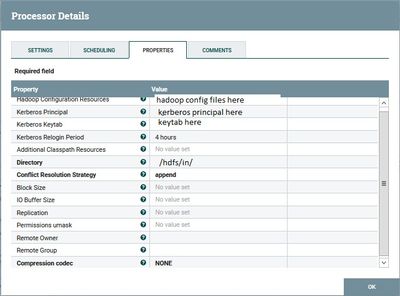

Please share your PutHDFS processor configuration with us.

How large are the individual files that are being written to HDFS?

Thanks,

Matt

Created on 08-15-2017 02:36 PM - edited 08-17-2019 07:31 PM

Each file is only 1-2 KB.

Configuration: Concurrent Tasks is set to 1; all other properties are left at their defaults.

Thank you

Created 08-15-2017 06:14 PM

It is unlikely you will see the same performance from Hadoop for writes as for reads. The Hadoop architecture is designed to favor many readers and few writers.

Increasing the number of concurrent tasks may help performance, since multiple files will then be written concurrently.
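A minimal Python sketch of why more concurrent tasks can help when each file is tiny: if every write is dominated by a fixed per-file round trip, running several writes at once overlaps that waiting. `write_file` and the 10 ms latency are hypothetical stand-ins, not NiFi or HDFS APIs; in NiFi the equivalent knob is the processor's Concurrent Tasks setting.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Assumption: each small-file write is dominated by a fixed per-file round
# trip (create, write, close). We model that cost with a short sleep.
PER_FILE_LATENCY_S = 0.01


def write_file(name: str) -> str:
    """Hypothetical stand-in for one PutHDFS write; not a real HDFS call."""
    time.sleep(PER_FILE_LATENCY_S)
    return name


def write_all(names: list[str], concurrent_tasks: int) -> tuple[list[str], float]:
    """Write all files using `concurrent_tasks` threads; return (written names, elapsed seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_tasks) as pool:
        # pool.map yields results in input order, regardless of completion order
        written = list(pool.map(write_file, names))
    return written, time.perf_counter() - start


if __name__ == "__main__":
    files = [f"part-{i:04d}" for i in range(100)]
    for tasks in (1, 4):
        written, elapsed = write_all(files, tasks)
        print(f"concurrent tasks = {tasks}: {len(written)} files in {elapsed:.2f} s")
```

The model only captures latency-bound writes; once the cluster itself (NameNode or DataNode I/O) becomes the bottleneck, adding tasks stops helping.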

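Files of 1-2 KB are very small and do not make optimal use of your Hadoop architecture: every file, however small, carries its own NameNode metadata and its own create/close round trip. Commonly, NiFi is used to merge bundles of files together into a size better suited to storage in Hadoop. I believe 64 MB is a reasonable target; that was the default HDFS block size in Hadoop 1.x, and Hadoop 2.x and later default to 128 MB.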
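To put the file-size point in numbers, here is a short Python calculation of how many HDFS files a fixed volume of data turns into at different per-file sizes. The 1 GiB volume is an assumption chosen only for illustration; the file count is a rough proxy for per-file overhead, since each file is tracked by the NameNode and needs at least one create/close round trip.

```python
# Assumption: 1 GiB of incoming data, chosen only for illustration.
DATA_BYTES = 1024 ** 3


def file_count(total_bytes: int, file_bytes: int) -> int:
    """Files needed to hold total_bytes at file_bytes each (ceiling division)."""
    return -(-total_bytes // file_bytes)


# Candidate per-file sizes: the 1-2 KB files from the question, then larger merge targets.
SIZES = {
    "1.5 KiB": 1536,
    "64 KiB": 64 * 1024,
    "64 MiB": 64 * 1024 ** 2,
    "128 MiB": 128 * 1024 ** 2,
}

for label, size in SIZES.items():
    # Each file implies one NameNode entry and at least one create/close round trip.
    print(f"{label:>8}: {file_count(DATA_BYTES, size):>9,} files")
```

For 1 GiB this gives 699,051 files at 1.5 KiB, 16,384 at 64 KiB, 16 at 64 MiB, and 8 at 128 MiB.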

You can remove some of the per-connection overhead by merging files into larger files with the MergeContent processor before writing to Hadoop.
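A minimal Python sketch of the idea behind MergeContent's Bin-Packing Algorithm strategy: greedily concatenate small payloads into bundles that stay under a size cap. This is a simplified illustration, not NiFi's implementation; `bin_pack` and `max_group_size` are hypothetical names, and the sketch assumes plain binary concatenation with no header, footer, or demarcator. The real processor also honors properties such as Minimum Group Size, Minimum/Maximum Number of Entries, and Max Bin Age.

```python
def bin_pack(payloads: list[bytes], max_group_size: int) -> list[bytes]:
    """Greedily concatenate small payloads into bundles of at most max_group_size bytes.

    A single payload larger than max_group_size becomes its own bundle.
    Simplified illustration only: NiFi's MergeContent also applies minimum
    sizes, entry counts, Max Bin Age, and optional header/footer/demarcator.
    """
    bundles: list[bytes] = []
    current: list[bytes] = []
    current_size = 0
    for payload in payloads:
        # Close the current bundle if adding this payload would exceed the cap.
        if current and current_size + len(payload) > max_group_size:
            bundles.append(b"".join(current))
            current, current_size = [], 0
        current.append(payload)
        current_size += len(payload)
    if current:  # flush the final, partially filled bundle
        bundles.append(b"".join(current))
    return bundles


if __name__ == "__main__":
    small_files = [b"x" * 1500 for _ in range(100)]  # 100 files of ~1.5 KB each
    merged = bin_pack(small_files, max_group_size=64 * 1024)
    print(f"{len(small_files)} files -> {len(merged)} bundles")
```

With a 64 KiB cap, 43 payloads of 1,500 bytes fit in each bundle, so the 100 inputs become 3 writes instead of 100. In NiFi, Max Bin Age plays the role of a timeout so that a partially filled bundle is still written when input slows down.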

Thanks,

Matt

Created 10-13-2017 10:34 AM

Thank you.

Depending on the write frequency, I have merged the files into bundles of 64 KB.

Sorry for the late reply.

Created 10-13-2017 04:01 PM

If merging FlowFiles and adding more concurrent tasks to your PutHDFS processor helped with your performance issue here, please take a moment to click "accept" on the above answer to close out this thread.

Thank you,

Matt