
py function in pyspark shell

Created 04-25-2022 10:13 PM


I wrote a Python function and ran it in the PySpark shell on Cloudera, but it throws an error there. When I run the same function in my local PySpark shell, it works perfectly fine.

Complete Code:

    from pyspark.sql.functions import *

    df = spark.createDataFrame([
        ('America/New_York', '2020-02-01 10:00:00'),
        ('Europe/Lisbon', '2020-02-01 10:00:00'),
        ('Europe/Madrid', '2020-02-01 10:00:00'),
        ('Europe/London', '2020-02-01 10:00:00'),
        ('America/Sao_Paulo', '2020-02-01 10:00:00'),
    ], ["OriginTz", "Time"])

    df2 = spark.createDataFrame([
        ('Africa/Nairobi', '2020-02-01 10:00:00'),
        ('Asia/Damascus', '2020-02-01 10:00:00'),
        ('Asia/Singapore', '2020-02-01 10:00:00'),
        ('Atlantic/Bermuda', '2020-02-01 10:00:00'),
        ('Canada/Mountain', '2020-02-01 10:00:00'),
        ('Pacific/Tahiti', '2020-02-01 10:00:00'),
    ], ["OriginTz", "Time"])

    df.createOrReplaceTempView("test")
    df2.createOrReplaceTempView("test2")

    tables = ["test", "test2"]
    frames = list(range(0, 2))

    def hive_read_func(tables, frames):
        for table, frame in zip(tables, frames):
            globals()["dtf" + str(frame)] = eval(f'spark.sql("select * from {table}")')

    hive_read_func(tables, frames)


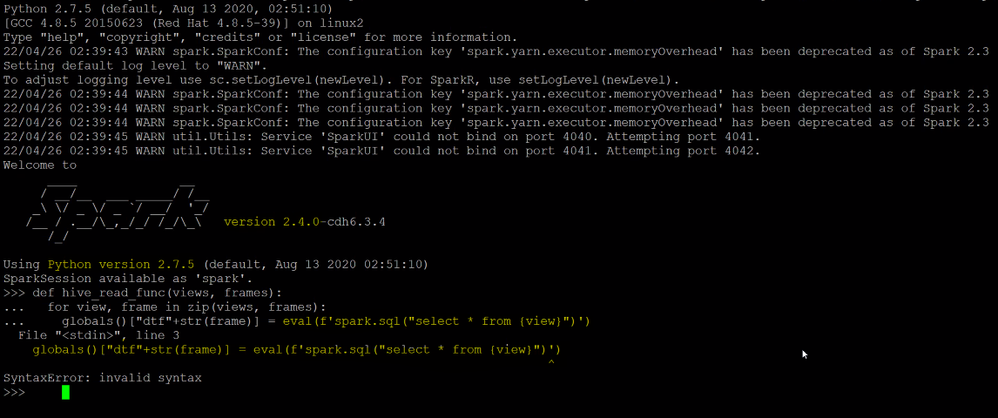

When I run the function hive_read_func on Cloudera, I get an error (see the screenshot).

Can someone please look into this and help me solve it?

Created 04-26-2022 02:42 AM


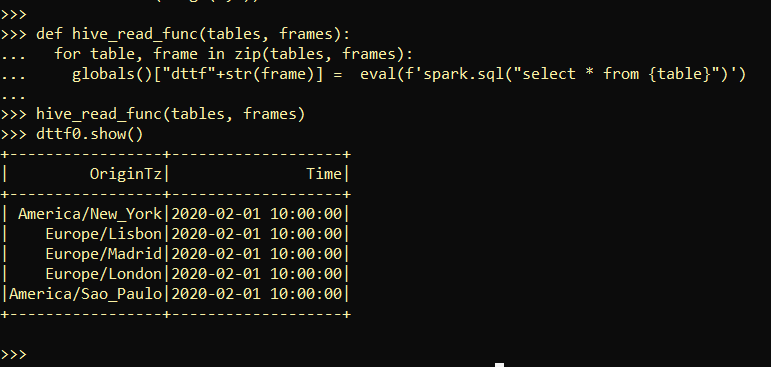

I found the answer myself: it was a Python version issue. f-strings are only available from Python 3.6 onward, but I was running the code under Python 2.6, so the f-string caused the error. I have now rewritten the function without the f-string:

    def hive_read_func(tables, frames):
        for table, frame in zip(tables, frames):
            inner_code = "select * from" + " " + str(table)
            globals()["dttf" + str(frame)] = spark.sql(inner_code)
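As an alternative to writing into globals(), the same loop could collect its results in a dictionary. This is only a minimal sketch, not the code from the post above: read_tables and run_sql are hypothetical names, and run_sql is assumed to be a callable such as spark.sql. It builds the query with str.format and an explicit index ({0}), which should work on Python 2.6 as well as on Python 3.

```python
def read_tables(run_sql, tables):
    """Run 'select * from <table>' for each table and return the results.

    run_sql is assumed to be a callable that takes a SQL string, for
    example spark.sql in a PySpark shell (hypothetical parameter name).
    """
    results = {}
    for table in tables:
        # "{0}" with an explicit index works on Python 2.6+, unlike
        # f-strings, which need Python 3.6+.
        query = "select * from {0}".format(table)
        results[table] = run_sql(query)
    return results


# Possible usage in a PySpark shell (assumes the temp views exist):
#   dataframes = read_tables(spark.sql, ["test", "test2"])
#   dataframes["test"].show()
```

Keying the results by table name avoids generated variable names like dttf0 and dttf1, so a typo in the prefix cannot silently create a differently named variable.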
