"Error: Java heap space" caused using distcp to copy data from HDFS to S3

Labels: Apache Hadoop

Created on 03-22-2017 03:17 AM - edited 08-18-2019 03:54 AM

I encountered an "Error: Java heap space" problem when using distcp to copy data from HDFS to S3. Can someone tell me how to solve it?

File size on HDFS: 1.5GB.

Command:

hadoop distcp -D fs.s3a.fast.upload=true 'hdfs://<address>:8020/tmp/tpcds-generate/10/web_sales' s3a://<address>/tpcds-generate/web_sales

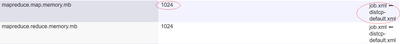

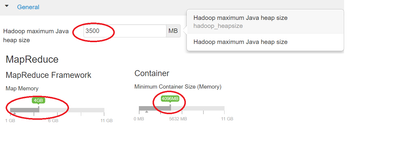

I found that the Hadoop job runs with mapreduce.map.memory.mb=1024, which might have caused the problem.

However, I have already set the memory in the Ambari UI. The attached capture shows the relevant parameters.

Created 03-22-2017 01:54 PM

@Lifeng Ai - Regarding your question: "I solved the problem by removing "-D fs.s3a.fast.upload=true" but still don't know the reason. Does anyone know why "fs.s3a.fast.upload=true" causes a Java heap space problem?"

Yes, fs.s3a.fast.upload=true can cause heap-related issues.

Read the below document:

HADOOP-13560 fixes the problem by introducing the property fs.s3a.fast.upload.buffer

However, this fix is not yet part of a released HDP version; it should be released in HDP 2.6.

Hope this clarifies the issue!

Created 03-22-2017 03:30 AM

I solved the problem by removing "-D fs.s3a.fast.upload=true" but still don't know the reason. Does anyone know why "fs.s3a.fast.upload=true" causes a Java heap space problem? Thanks

Created 03-22-2017 06:23 AM

When the data size is too large, you should increase the client memory settings in the shell where you run distcp, something like the following:

export HADOOP_CLIENT_OPTS="-Xms4096m -Xmx4096m"
hadoop distcp /source /target

Alternatively, define 'HADOOP_CLIENT_OPTS' in hadoop-env.sh.

Created 03-24-2017 11:19 AM

Yes, I'm afraid that fast upload can overload the buffers in HDP 2.5, as it uses the JVM heap to store blocks while it uploads them. The bigger the mismatch between the rate at which data is generated (i.e. how fast things can be read) and the upload bandwidth, the more heap you need. On a long-haul upload you usually have limited bandwidth, and the more distcp workers there are, the more the bandwidth is divided between them, the bigger the mismatch a

In HDP 2.5 you can get away with tuning the fast uploader to use less heap. It's tricky enough to configure that in the HDP 2.5 docs we chose not to mention the fs.s3a.fast.upload option at all: it was just too confusing, and we couldn't come up with good defaults that would work reliably. That is why I rewrote it completely for HDP 2.6. The block output stream in HDP 2.6/Apache Hadoop 2.8 (already in HDCloud) can buffer on disk (the default) or in byte buffers as well as on the heap, and tries to do better queueing of writes.
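As a rough sketch of what that looks like on HDP 2.6/Apache Hadoop 2.8, the buffering mode can be chosen with the fs.s3a.fast.upload.buffer property (accepted values, to my knowledge: disk, array, bytebuffer). The paths are the placeholders from the original question, and the snippet only prints the command rather than running it.

```shell
# Buffer mode for the rewritten block output stream (Hadoop 2.8+ / HDP 2.6 only):
#   disk       - buffer blocks on local disk (the default)
#   array      - buffer blocks on the JVM heap
#   bytebuffer - buffer blocks in off-heap byte buffers
BUFFER=disk

# Source and destination are placeholders; replace <address> before running.
CMD="hadoop distcp -D fs.s3a.fast.upload=true -D fs.s3a.fast.upload.buffer=${BUFFER} hdfs://<address>:8020/tmp/tpcds-generate/10/web_sales s3a://<address>/tpcds-generate/web_sales"

# Dry run: print the command so it can be reviewed before use.
echo "${CMD}"
```

Disk buffering avoids holding pending blocks on the heap, which is the part that hurts on a slow link.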

For HDP 2.5, the tuning options are mentioned in the Hadoop 2.7 docs. Essentially, a lower value of fs.s3a.threads.core and fs.s3a.threads.max keeps the number of buffered blocks down, while setting fs.s3a.multipart.size to something like 10485760 (10 MB) and fs.s3a.multipart.threshold to the same value reduces how much is buffered before the uploads begin.
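A minimal sketch of passing those options to distcp on the command line. The thread count of 4 and the -m value of 2 are hypothetical starting points, not recommendations, and the paths are the placeholders from the original question. The snippet prints the command instead of running it.

```shell
# Hypothetical starting values; tune them for your cluster and bandwidth.
THREADS=4               # assumed value for fs.s3a.threads.core and fs.s3a.threads.max
PART_BYTES=10485760     # 10 MB, the multipart size/threshold suggested above

S3A_OPTS="-D fs.s3a.fast.upload=true \
-D fs.s3a.threads.core=${THREADS} \
-D fs.s3a.threads.max=${THREADS} \
-D fs.s3a.multipart.size=${PART_BYTES} \
-D fs.s3a.multipart.threshold=${PART_BYTES}"

# Dry run: print the full command. Remove the leading "echo" (and fill in
# <address>) to actually launch the copy. -m limits the number of distcp workers.
echo hadoop distcp ${S3A_OPTS} -m 2 \
  'hdfs://<address>:8020/tmp/tpcds-generate/10/web_sales' \
  's3a://<address>/tpcds-generate/web_sales'
```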

Like I warned, you can end up spending time tuning, because the heap consumed rises with the threads.max value and falls as the multipart threshold and size values are lowered. And over a remote connection, the more workers you have in the distcp operation (controlled by the -m option), the less bandwidth each one gets, so again: more heap overflows.
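To get a feel for that trade-off, here is a back-of-the-envelope estimate. It assumes, as a simplification, that each active upload thread holds one multipart-sized block on the heap; queued blocks and everything else in the JVM are ignored, so treat the result as a floor rather than a prediction. The function name and the sample settings are made up for illustration.

```shell
# Rough floor on heap used for upload buffers in one map task.
# Assumption: one multipart-sized block per active upload thread.
estimate_heap_mb() {
  threads_max=$1   # value of fs.s3a.threads.max
  part_bytes=$2    # value of fs.s3a.multipart.size, in bytes
  echo $(( threads_max * part_bytes / 1048576 ))
}

# Hypothetical settings for comparison.
echo "4 threads x 10 MB parts:   $(estimate_heap_mb 4 10485760) MB"
echo "20 threads x 100 MB parts: $(estimate_heap_mb 20 104857600) MB"
```

Under these assumptions the first setting needs about 40 MB and the second about 2000 MB, which on its own would not fit in a map task configured with mapreduce.map.memory.mb=1024 as in the original question.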

And you will invariably find out on the big uploads that there are limits.

As a result, in HDP 2.5 I'd recommend avoiding the fast upload except in one special case: you have a very high speed connection to an S3 server in the same infrastructure, and you use it for code that generates data, rather than for big distcp operations, which can read data as fast as it can be streamed off multiple disks.