"Path does not exist" error message received when trying to load data from HDFS

- Labels: Apache Spark, Apache Zeppelin

Created on 12-10-2019 07:09 PM - edited 12-10-2019 07:14 PM

I am running the following commands in Zeppelin. First I created a Hive context with the following code:

val hiveContext = new org.apache.spark.sql.SparkSession.Builder().getOrCreate()

Then I tried to load a file from HDFS with the following code:

val riskFactorDataFrame = spark.read.format("csv").option("header", "true").load("hdfs:///tmp/data/riskfactor1.csv")

But I get the following error message: "org.apache.spark.sql.AnalysisException: Path does not exist: hdfs://sandbox-hdp.hortonworks.com:8020/tmp/data/riskfactor1.csv;"

I am quite new to Hadoop. Please help me figure out what I am doing wrong.

Created 12-17-2019 05:48 AM

Follow these steps:

sandbox-hdp login: root

root@sandbox-hdp.hortonworks.com's password:

.....

[root@sandbox-hdp ~]# mkdir -p /tmp/data

[root@sandbox-hdp ~]# cd /tmp/data

You should now be in /tmp/data. To verify, run:

[root@sandbox-hdp data]# pwd

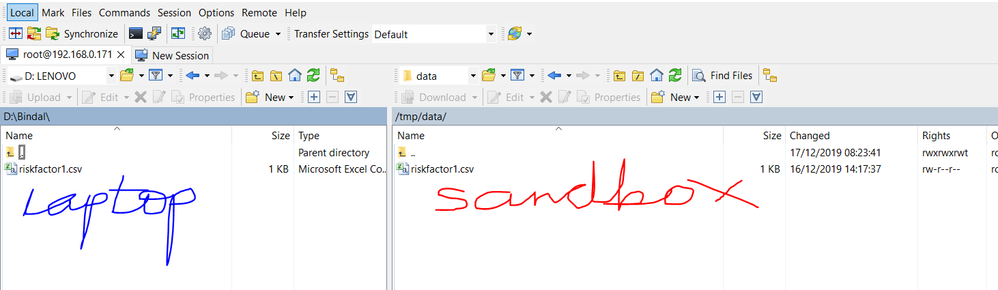

Copy your riskfactor1.csv to this directory using a tool such as WinSCP or MobaXterm; see my screenshot using WinSCP.

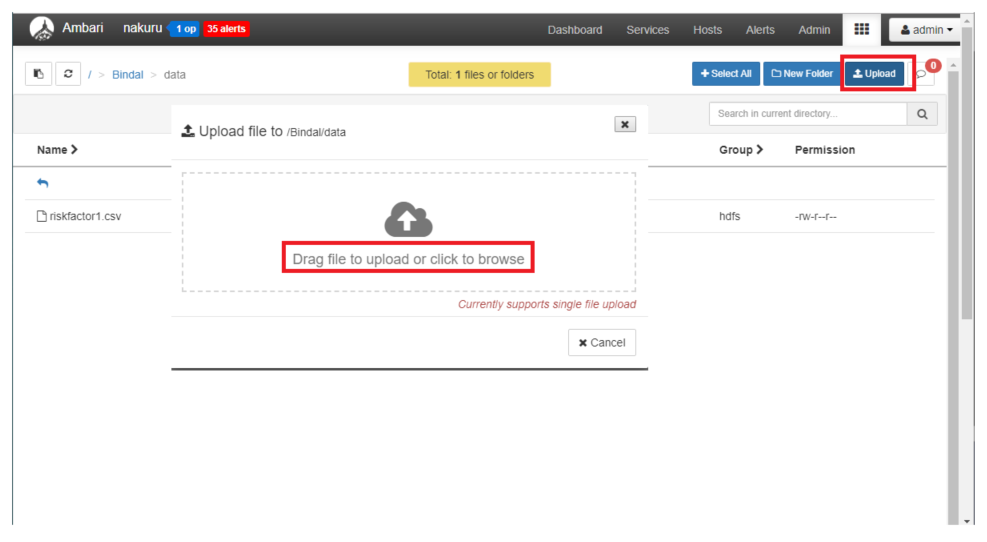

My question is: where is the riskfactor1.csv file located? If that's not clear, you can upload it using the Ambari view: first navigate to /bindal/data, then select Upload. Please see the attached screenshot for uploading the file from your laptop.

After the upload succeeds, you can run your Zeppelin job. Keep me posted.

Created 12-11-2019 10:50 AM

Spark expects riskfactor1.csv to be in the HDFS path /tmp/data/, but it seems to me that you have the file on your local filesystem in /tmp/data. I ran the steps below on a sandbox.

Please follow the steps below to resolve the "Path does not exist" error. Log on to the CLI on your sandbox as user root, then:

Switch user to hdfs

[root@sandbox-hdp ~]# su - hdfs

Check the current hdfs directory

[hdfs@sandbox-hdp ~]$ hdfs dfs -ls /

Found 13 items

drwxrwxrwt+ - yarn hadoop 0 2019-10-01 18:34 /app-logs

drwxr-xr-x+ - hdfs hdfs 0 2018-11-29 19:01 /apps

drwxr-xr-x+ - yarn hadoop 0 2018-11-29 17:25 /ats

drwxr-xr-x+ - hdfs hdfs 0 2018-11-29 17:26 /atsv2

drwxr-xr-x+ - hdfs hdfs 0 2018-11-29 17:26 /hdp

drwx------+ - livy hdfs 0 2018-11-29 17:55 /livy2-recovery

drwxr-xr-x+ - mapred hdfs 0 2018-11-29 17:26 /mapred

drwxrwxrwx+ - mapred hadoop 0 2018-11-29 17:26 /mr-history

drwxr-xr-x+ - hdfs hdfs 0 2018-11-29 18:54 /ranger

drwxrwxrwx+ - spark hadoop 0 2019-11-24 22:41 /spark2-history

drwxrwxrwx+ - hdfs hdfs 0 2018-11-29 19:01 /tmp

drwxr-xr-x+ - hdfs hdfs 0 2019-09-21 13:32 /user

A directory like this is usually created in HDFS under /user/xxxx, depending on the user, but here we create /tmp/data and give it open permissions (777) so that any user can run the Spark job.

Create directory in hdfs

$ hdfs dfs -mkdir -p /tmp/data/

Change permissions

$ hdfs dfs -chmod 777 /tmp/data/

Now copy riskfactor1.csv from the local filesystem to HDFS. Here I am assuming the file is in /tmp on the local filesystem.

[hdfs@sandbox-hdp tmp]$ hdfs dfs -copyFromLocal /tmp/riskfactor1.csv /tmp/data

The above copies riskfactor1.csv from the local /tmp to the HDFS location /tmp/data. You can validate this by running the command below:

[hdfs@sandbox-hdp tmp]$ hdfs dfs -ls /tmp/data

Found 1 items

-rw-r--r-- 1 hdfs hdfs 0 2019-12-11 18:40 /tmp/data/riskfactor1.csv

Now you can run your Spark code in Zeppelin; it should succeed.

Please report back!
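The copy steps above can be wrapped in one small shell function. This is only a sketch under assumptions: `upload_to_hdfs` is a made-up helper name (not a Hadoop command), it assumes the `hdfs` client is on the PATH and that the current user may write to the target directory. It adds the `-f` flag to `-copyFromLocal` so an existing copy is overwritten, and finishes with `hdfs dfs -test -e`, which exits 0 only when the path exists in HDFS.

```shell
# Sketch: copy one local file into an HDFS directory and confirm it arrived.
# upload_to_hdfs is a hypothetical helper name, not part of Hadoop.
upload_to_hdfs() {
  src="$1"        # path on the local (Linux) filesystem
  dest_dir="$2"   # directory in HDFS

  # The source must be a regular file on the local filesystem.
  if [ ! -f "$src" ]; then
    echo "local file not found: $src" >&2
    return 1
  fi

  # Create the HDFS directory if needed, then copy (-f overwrites).
  hdfs dfs -mkdir -p "$dest_dir" || return 1
  hdfs dfs -copyFromLocal -f "$src" "$dest_dir/" || return 1

  # Exit status 0 only if the file now exists in HDFS.
  hdfs dfs -test -e "$dest_dir/$(basename "$src")"
}

# Example, run as a user with write access to the target (e.g. hdfs):
#   upload_to_hdfs /tmp/riskfactor1.csv /tmp/data && hdfs dfs -ls /tmp/data
```

If the function prints "local file not found", the file is not where you think it is on the Linux side, which is a different problem from the HDFS path being wrong.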

Created 12-15-2019 06:55 PM

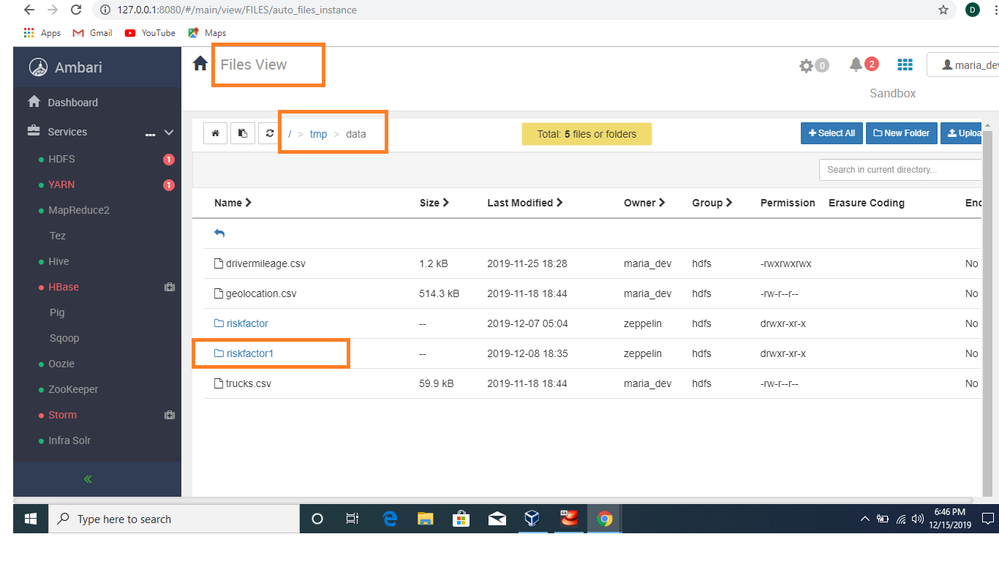

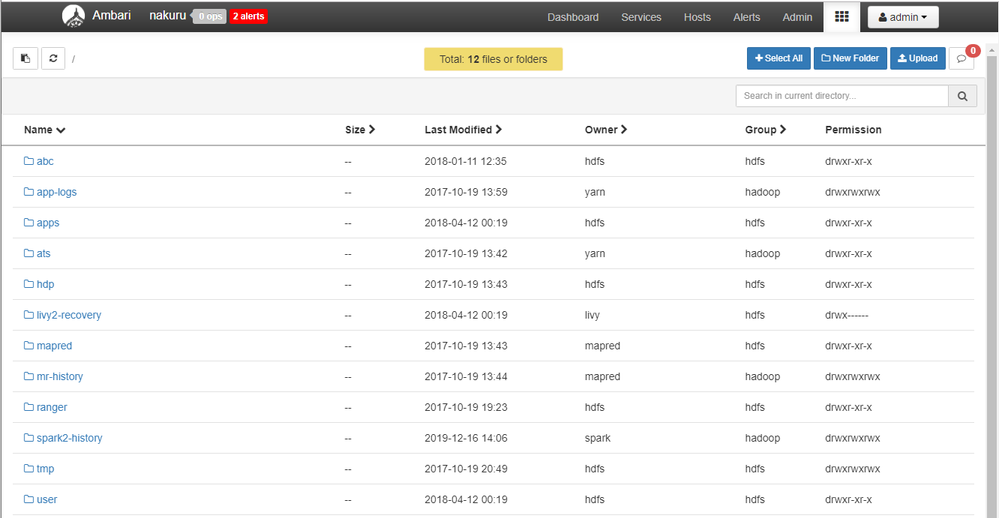

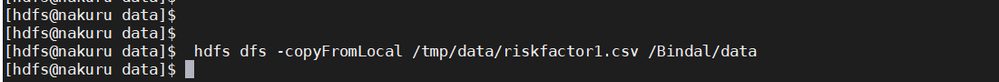

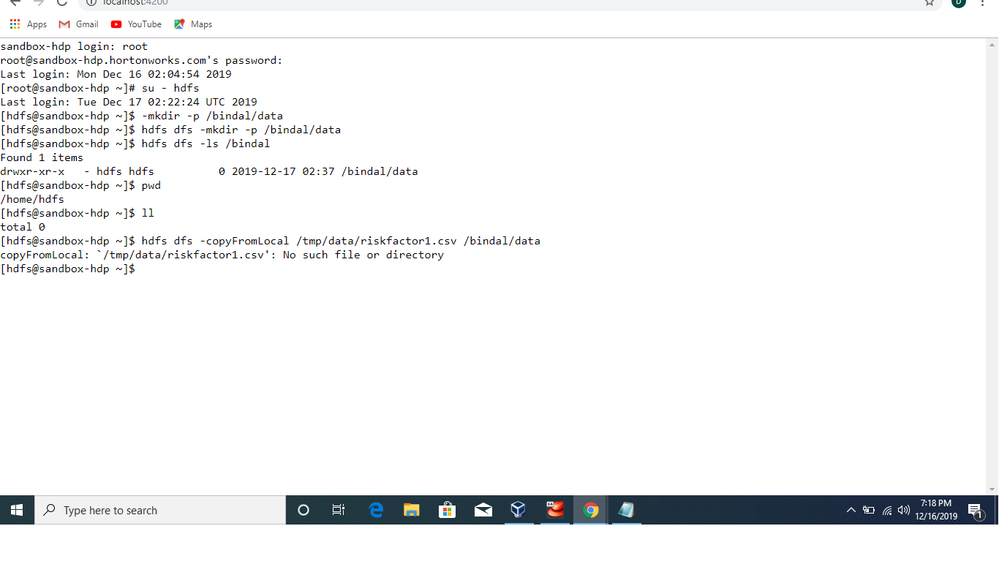

Great, thanks for your detailed response. I have added a screenshot of the Ambari 'File Views'. I want to know whether it shows the local filesystem or the HDFS filesystem. I ran the commands you suggested, but when I tried to run "hdfs dfs -copyFromLocal /tmp/data/riskfactor1.csv /tmp/data", I got the message "copyFromLocal: `/tmp/data/riskfactor1.csv': No such file or directory". I am not sure what I am doing wrong. Thanks again for your help. Eagerly waiting for your response.
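The "No such file or directory" message from copyFromLocal refers to the source path on the local Linux filesystem, so a first check is whether the file exists there at all. A minimal sketch, assuming a made-up helper name `find_local_file` and a search root that defaults to /tmp:

```shell
# Sketch: look for a regular file with the given name on the LOCAL filesystem.
# find_local_file is a hypothetical helper name; the default root is an assumption.
find_local_file() {
  name="$1"
  root="${2:-/tmp}"
  # -type f skips directories that merely share the name.
  find "$root" -type f -name "$name" 2>/dev/null
}

# Example:
#   find_local_file riskfactor1.csv /tmp
# No output means there is no such file under /tmp on the Linux side,
# so copyFromLocal has nothing to copy from that location.
```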

Created on 12-16-2019 05:53 AM - edited 12-16-2019 05:55 AM

Thanks for sharing the screenshot. I can see from it that riskfactor and riskfactor1 are directories, not files!

Can you double-click on either of them and check the contents?

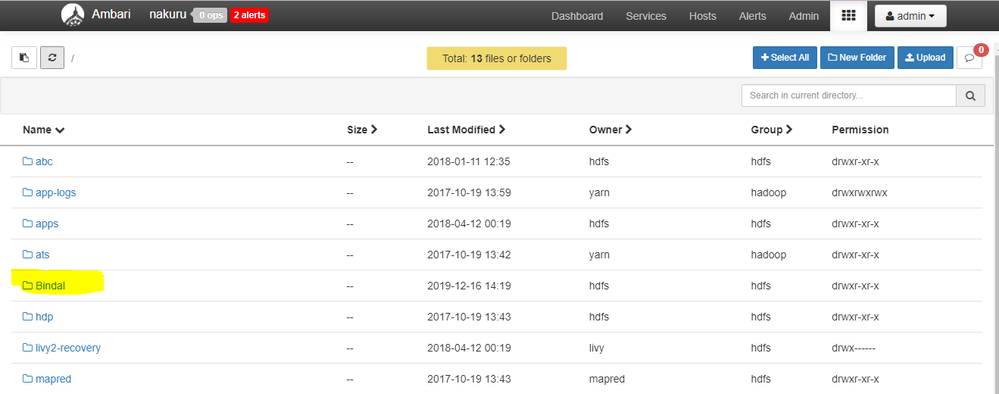

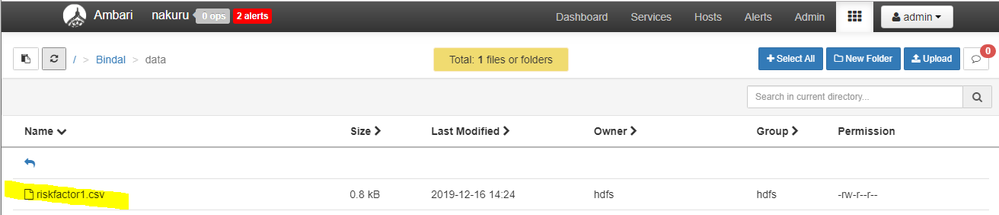

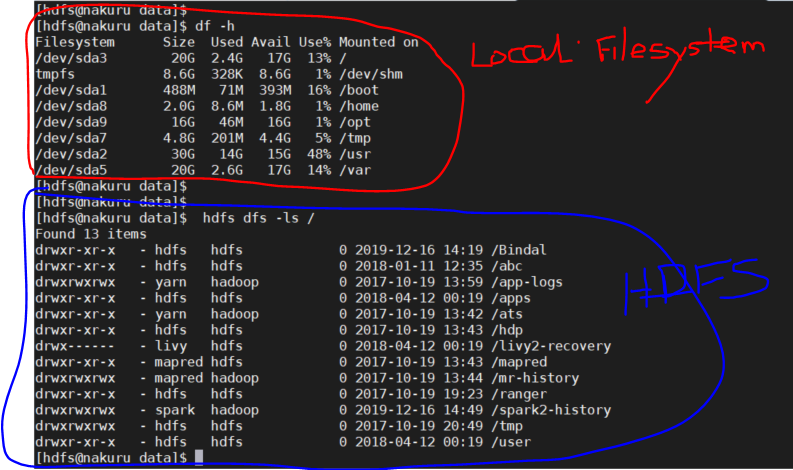

I have mounted an old HDP 2.6.x for illustration. Whatever filesystem you see under the Ambari view is HDFS.

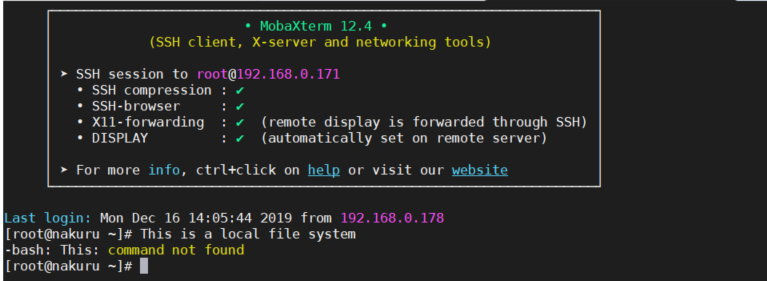

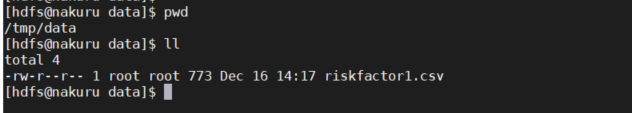

Here is the local filesystem

My Ambari view before the creation of /Bindal/data, the equivalent of /tmp/data:

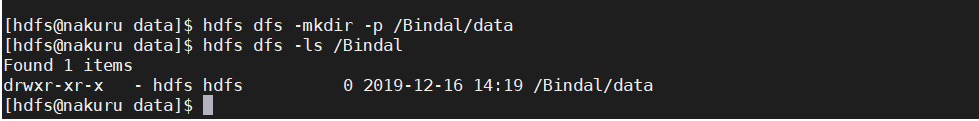

I created a directory in hdfs

Make the directory; this is the local filesystem

Copy riskfactor1.csv from the local filesystem /tmp/data to HDFS

Check the copied file in hdfs

To walk through it: from the Linux CLI as the root user, I created the directory /tmp/data and placed riskfactor1.csv in it, then created the directory /Bindal/data/ in HDFS.

I then copied the file from the local Linux box to HDFS. I hope that explains the difference between the local filesystem and HDFS.

Below is again a screenshot to show the difference

Once the file is in HDFS, your Zeppelin job should run successfully. To reiterate: in the screenshot you shared, riskfactor and riskfactor1 are directories, so double-click them and compare what you see with my screenshots.
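The same file-versus-directory check can be done from the CLI instead of the Ambari view. This is a rough sketch, assuming the `hdfs` client is on the PATH; `hdfs_path_kind` is a made-up helper name, and it relies on the `-d` (is a directory) and `-f` (is a file) options of `hdfs dfs -test`.

```shell
# Sketch: report whether an HDFS path is a directory, a file, or missing.
# hdfs_path_kind is a hypothetical helper name, not part of Hadoop.
hdfs_path_kind() {
  path="$1"
  if hdfs dfs -test -d "$path"; then
    echo "directory"
  elif hdfs dfs -test -f "$path"; then
    echo "file"
  else
    echo "missing"
  fi
}

# Example:
#   hdfs_path_kind /tmp/data/riskfactor1
#   hdfs_path_kind /tmp/data/riskfactor1.csv
```

Spark's load("hdfs:///tmp/data/riskfactor1.csv") needs that exact path to exist in HDFS, so "missing" for the .csv path matches the "Path does not exist" error.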

HTH

Created 12-16-2019 07:27 PM

You are awesome. You are trying your best to help me, but I am just a beginner, so I am missing the finer details.

I followed the steps you provided, but I still have the same issue. Please see the screenshot. I want to gain a pinch of what you have mastered. Thanks.
