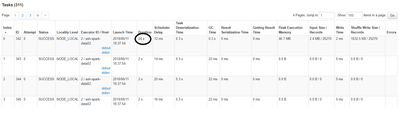

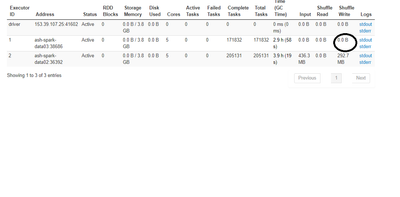

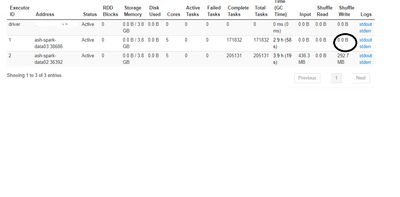

Why is the Spark shuffle stage so slow for a 1.6 MB shuffle write and 2.4 MB of input? Also, why is the shuffle write happening on only one executor? I am running a 3-node cluster with 8 cores per node.

Please see my code and the Spark UI screenshots.

Code:

<code>JavaPairRDD<String, String> javaPairRDD = c.mapToPair(new PairFunction<String, String, String>() {
    @Override
    public Tuple2<String, String> call(String arg0) throws Exception {
        // TODO Auto-generated method stub
        try {
            if (org.apache.commons.lang.StringUtils.isEmpty(arg0)) {
                return new Tuple2<String, String>("", "");
            }
            Tuple2<String, String> t = new Tuple2<String, String>(getESIndexName(arg0), arg0);
            return t;
        } catch (Exception e) {
            e.printStackTrace();
            System.out.println("******* exception in getESIndexName");
        }
        return new Tuple2<String, String>("", "");
    }
});

java.util.Map<String, Iterable<String>> map1 = javaPairRDD.groupByKey().collectAsMap();