Using a NiFi flow, I cannot write to an HBase table

- Labels: Apache HBase, Apache NiFi

Created on 05-08-2019 02:23 PM - edited 08-17-2019 03:31 PM

Dear all,

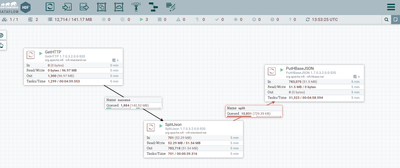

I have a flow in NiFi as the photo shows.

I'm getting data from a URL and trying to put it into an HBase table that I created through the HBase shell.

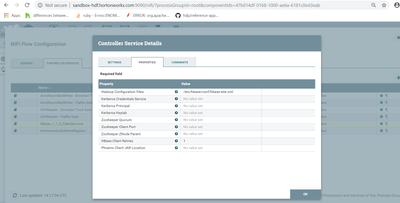

Here is my PutHBaseJSON config:

The hbase-site.xml file already exists at the path that I gave to the HBase controller service.

I do not know what is wrong.

I am running the HDP sandbox in VirtualBox, with HDF activated on it using CDA. I have allocated around 12 GB to the VM, and I allocated virtual memory to my system from the disk in my computer. My computer has 16 GB of RAM. HBase, HDFS, and NiFi are all active in Ambari.

Thanks,

Best regards,

Created 05-08-2019 03:45 PM

Based on the first screenshot you provided, it appears the PutHBaseJSON processor is processing FlowFiles.

The stats indicate that ~783,000 FlowFiles were pulled from the inbound connection queue in the last 5 minutes. The PutHBaseJSON processor also shows that it had an active thread (1) at the time of the screenshot.

It appears you are auto-terminating both the success and failure outbound relationships on your PutHBaseJSON processor. If FlowFiles were being auto-terminated on the failure relationship, you should be seeing a red square (bulletin) on the processor, which your screenshot does not show. A bulletin indicates that an error log entry was produced. Since no bulletins are being produced, I can only assume that FlowFiles are being auto-terminated via the success relationship.

Have you verified that nothing is written to HBase?

Have you verified that all your HBase configurations are correct?

The flow above indicates the issue is more of a throughput problem. The SplitJson processor produces large batches of output FlowFiles at one time (that is how that processor works). Back pressure is then applied on the connection between the SplitJson and PutHBaseJSON processors (the connection is highlighted red to indicate that back pressure is being applied). SplitJson will not be allowed to execute again until the queue drops below the configured back pressure threshold. The PutHBaseJSON processor continues to execute against the FlowFiles in the connection queue.
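The interaction described above can be illustrated with a toy model. This is a minimal sketch and not NiFi code: the function `simulate` and its parameters (`split_batch`, `put_batch`, `threshold`) are hypothetical names, and the numbers are made up for illustration. It assumes back pressure acts as a soft limit, meaning the upstream processor is only scheduled while the queue is below the threshold, but once it runs it emits its whole batch.

```python
def simulate(ticks, split_batch, put_batch, threshold):
    """Toy model of one connection between a splitter and a consumer.

    Returns a list of (queue_depth, upstream_ran) tuples, one per tick.
    """
    queue = 0
    history = []
    for _ in range(ticks):
        # Upstream is scheduled only while the queue is below the threshold,
        # but when it runs it pushes its entire batch into the queue.
        upstream_ran = queue < threshold
        if upstream_ran:
            queue += split_batch
        # Downstream drains at most one batch per tick.
        queue -= min(queue, put_batch)
        history.append((queue, upstream_ran))
    return history


# Hypothetical numbers: the splitter emits 50 FlowFiles per run,
# the consumer handles 10 per tick, and the threshold is 20.
for depth, ran in simulate(ticks=6, split_batch=50, put_batch=10, threshold=20):
    print(f"queue={depth:3d} upstream_ran={ran}")
```

In this model the upstream side sits idle for most ticks while the consumer works through the backlog, which matches the red connection seen in the screenshot: the downstream processor's rate, not the splitter, limits throughput.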

Have you tried the following to see whether the throughput of the PutHBaseJSON processor improves?

1. Increase the configured batch size. The default is 25. Keep in mind that the content of every FlowFile in a batch is held in heap memory. Setting this value too high can lead to an Out Of Memory (OOM) condition in NiFi's JVM, so increase it slowly.

2. Increase the configured concurrent tasks. The default is 1. One concurrent task allows this processor to execute on one batch at a time; until that batch put is complete, the next batch cannot be processed. Adding concurrent tasks allows this processor to execute on multiple batches of FlowFiles at the same time. Keep in mind that your system has a limited number of cores, and setting this value too high can saturate your CPU. The maximum number of threads that all processors on the canvas must share is set via "Controller Settings", found in the global menu in the upper right corner of the NiFi UI. The general rule of thumb is to set the "Maximum Timer Driven Thread Count" to a value equal to 2 to 4 times the number of cores on your NiFi server.
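As a quick way to apply the rule of thumb above, the following sketch computes the 2x to 4x range from the core count. The helper name `suggested_thread_range` is hypothetical, and the script only reports the cores of the machine it runs on, so it would need to be run on the NiFi server itself (inside the sandbox VM in this case, not on the host computer).

```python
import os


def suggested_thread_range(cores):
    """Return (low, high): 2x and 4x the given core count."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return 2 * cores, 4 * cores


# os.cpu_count() may return None if the count cannot be determined.
cores = os.cpu_count() or 1
low, high = suggested_thread_range(cores)
print(f"{cores} cores: Maximum Timer Driven Thread Count between {low} and {high}")
```

The result is only a starting range. The concurrent tasks configured on individual processors all draw from this shared pool.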

Thank you,

Matt

If you found this answer addressed your question, please take a moment to log in and click the "ACCEPT" link.

Created on 05-10-2019 05:11 AM - edited 08-17-2019 03:31 PM

Dear Matt, I do not know what happened. It started working and sent the data to my table 😄

Now my question is: if I want to run this flow in a local NiFi (without HDF) and send the data to a remote HBase, what changes should I make? Another person and I have already asked this question in other threads but did not get an answer:

https://community.hortonworks.com/questions/64155/can-i-connect-nifi-to-a-remote-hbase-service.html

We changed the following items in hbase-site.xml:

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://IPofHDP:8020/hbase</value>
      </property>
      <property>
        <name>hbase.zookeeper.quorum</name>
        <value>IPofHDP</value>
      </property>
      <property>
        <name>hbase.rootdir</name>
        <value>IPofHDP:8020/apps/hbase/data</value>
      </property>
      <property>
        <name>hbase.master.info.bindAddress</name>
        <value>IPofHDP</value>
      </property>
      <property>
        <name>hbase.regionserver.info.bindAddress</name>
        <value>192.168.43.34</value>
      </property>
    </configuration>
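A hand-edited site file like this can be sanity-checked before NiFi loads it. The following is a minimal sketch using only Python's standard library. The function name `check_site_xml` and the sample host `example-host` are hypothetical. It confirms that the XML is well-formed (parsing raises an error otherwise) and lists property names that appear more than once, such as a repeated `hbase.rootdir`.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def check_site_xml(text):
    """Parse a Hadoop-style *-site.xml string.

    Returns (pairs, duplicates): the (name, value) pairs in document order
    and a sorted list of property names that occur more than once.
    Raises xml.etree.ElementTree.ParseError if the XML is not well-formed.
    """
    root = ET.fromstring(text)
    pairs = [
        (
            prop.findtext("name", default="").strip(),
            prop.findtext("value", default="").strip(),
        )
        for prop in root.iter("property")
    ]
    counts = Counter(name for name, _ in pairs)
    duplicates = sorted(name for name, n in counts.items() if n > 1)
    return pairs, duplicates


# Hypothetical sample with one repeated property name.
sample = """<configuration>
  <property><name>hbase.rootdir</name><value>hdfs://example-host:8020/hbase</value></property>
  <property><name>hbase.zookeeper.quorum</name><value>example-host</value></property>
  <property><name>hbase.rootdir</name><value>hdfs://example-host:8020/apps/hbase/data</value></property>
</configuration>"""

pairs, duplicates = check_site_xml(sample)
print("duplicate property names:", duplicates)
```

To check a real file, read its contents into a string and pass that to `check_site_xml`.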

But we still get the following error:

It seems I need to make some changes on my HDP machine, but there are not many good tutorials on this.

Thanks a lot for your help and kind consideration.

Best regards,

Created 05-10-2019 07:55 PM

I strongly recommend starting a new question in the community rather than adding new questions and setup scenarios under an existing question. Your current "Answer" is a new question that is not directly related to the issue expressed in the original question of this post. Creating a new question exposes it to more users of this forum.

I am not an HBase Subject Matter Expert (SME).

As far as NiFi is concerned, the HBase processors in HDF's NiFi and in Apache NiFi are no different.

The error shown in your screenshot states that the controller service (CS) is unable to resolve the hostname "sandbox-hdp.hortonworks.com", which means it cannot figure out what the IP address is. If it cannot resolve the hostname, it is not going to be able to communicate with that endpoint.

While the configuration comes from the site.xml files provided to the CS, there may be challenges accessing ports within the sandbox from an external resource.
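Both points can be checked from the machine running NiFi before touching the flow. The following is a minimal sketch using only Python's standard library. The helper names `resolve` and `port_open` are hypothetical, and port 2181 is an assumption (ZooKeeper's default client port); your cluster may use a different one.

```python
import socket


def resolve(hostname):
    """Return the IPv4 address for hostname, or None if it cannot be resolved."""
    try:
        return socket.gethostbyname(hostname)
    except socket.gaierror:
        return None


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def main():
    host = "sandbox-hdp.hortonworks.com"
    zk_port = 2181  # assumption: ZooKeeper default client port
    ip = resolve(host)
    if ip is None:
        print(f"{host} does not resolve from this machine")
        return
    print(f"{host} resolves to {ip}")
    print(f"port {zk_port} reachable: {port_open(ip, zk_port)}")


if __name__ == "__main__":
    main()
```

If the name does not resolve, one common remedy is a hosts-file entry on the NiFi machine that maps the hostname to the sandbox VM's IP address. Whether the required ports are forwarded out of the VM is a separate question that the second check helps answer.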

I am also not an expert with the HDP sandbox.

If the above tips do not help, the best option is to start a new question that will engage more forum users.

Thank you,

Matt