Support Questions - Cloudera Community


webHDFS 403 error

Created 01-16-2019 03:40 PM


Hello all,

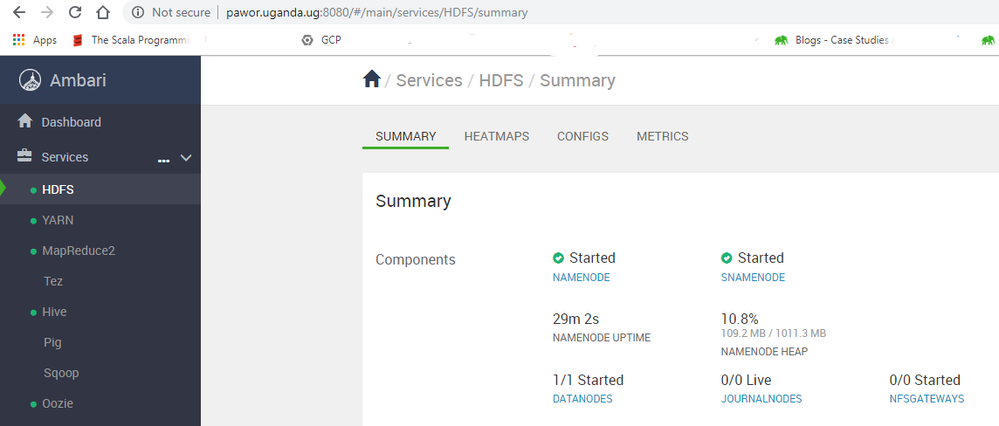

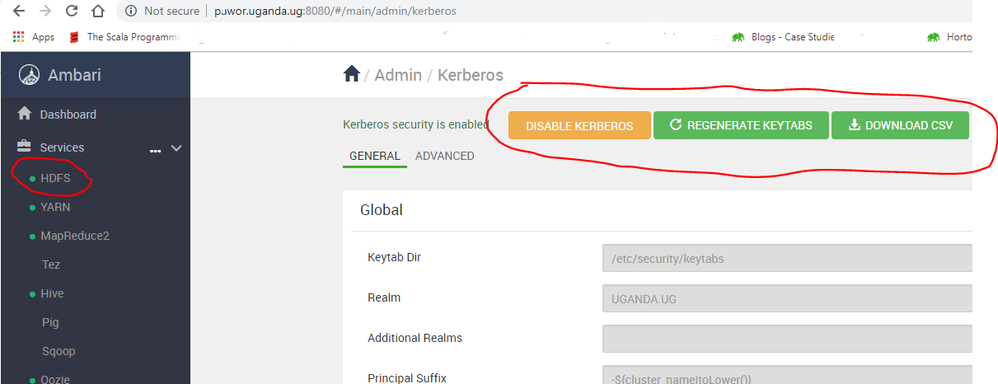

After a fresh kerberization of an Ambari 2.7.3 / HDP 3 cluster, the HDFS NameNode isn't able to start because the hdfs user can't talk to WebHDFS. The following error is returned:

GSSException: Failure unspecified at GSS-API level (Mechanism level: Checksum failed)

It is not only Ambari: I can reproduce this error with a simple curl call as the hdfs user:

```
su - hdfs
curl --negotiate -u : "http://datanode:50070/webhdfs/v1/tmp?op=GETFILESTATUS"
```

which returns:

```
</head>
<body><h2>HTTP ERROR 403</h2>
<p>Problem accessing /webhdfs/v1/tmp. Reason:
<pre>    GSSException: Failure unspecified at GSS-API level (Mechanism level: Checksum failed)</pre></p>
</body>
</html>
```

Overall permissions for this user should be intact, since I'm able to run HDFS operations from the shell and kinit without problems. What could be the problem?

I've tried recreating the keytabs several times and fiddling with the ACL settings in the config, but nothing works. Which principal is WebHDFS expecting? I get the same result when I access it with the HTTP/host@EXAMPLE.COM principal.

NB: I'll add that there's nothing fancy in the HDFS settings, mainly stock/default config.

NB2: I've also added every encryption type I could find to krb5.conf, but none of these helped:

```
default_tkt_enctypes = aes256-cts-hmac-sha1-96 aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
default_tgs_enctypes = aes256-cts-hmac-sha1-96 aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
permitted_enctypes = aes256-cts-hmac-sha1-96 aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
```
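For reference, "Checksum failed" at the GSS-API level usually means the server could not decrypt the client's service ticket with the key in its keytab. For WebHDFS that is the SPNEGO principal set by `dfs.web.authentication.kerberos.principal` (typically `HTTP/_HOST@REALM`), read from the keytab named by `dfs.web.authentication.kerberos.keytab`. A frequent cause after keytabs are recreated several times is a key version number (kvno) in the server's keytab that no longer matches the KDC's. The sketch below is a minimal, hypothetical helper that compares MIT Kerberos `klist -kte <keytab>` output with `kvno <principal>` output, assuming the standard MIT output formats; the function names, sample hostnames, and keytab path are illustrative only, and `kvno` needs a valid TGT (run `kinit` first).

```python
import re

# A `klist -kte <keytab>` entry, e.g.
#    2 01/16/2019 15:00:00 HTTP/nn1.example.com@EXAMPLE.COM (aes256-cts-hmac-sha1-96)
KEYTAB_ENTRY = re.compile(r"^\s*(\d+)\s+\S+\s+\S+\s+(\S+)\s+\(([^)]+)\)\s*$")
# `kvno <principal>` output, e.g. "HTTP/nn1.example.com@EXAMPLE.COM: kvno = 3"
KDC_KVNO = re.compile(r"^(\S+): kvno = (\d+)$")


def parse_keytab_listing(text):
    """Return {principal: {kvno: [enctypes]}} from `klist -kte` output."""
    entries = {}
    for line in text.splitlines():
        m = KEYTAB_ENTRY.match(line)
        if m:
            kvno, principal, enctype = int(m.group(1)), m.group(2), m.group(3)
            entries.setdefault(principal, {}).setdefault(kvno, []).append(enctype)
    return entries


def parse_kdc_kvno(text):
    """Return {principal: kvno} from one or more `kvno <principal>` lines."""
    result = {}
    for line in text.splitlines():
        m = KDC_KVNO.match(line.strip())
        if m:
            result[m.group(1)] = int(m.group(2))
    return result


def diagnose(keytab_text, kvno_text):
    """List keytab/KDC mismatches that commonly surface as 'Checksum failed'."""
    keytab = parse_keytab_listing(keytab_text)
    problems = []
    for principal, kdc_kvno in parse_kdc_kvno(kvno_text).items():
        versions = keytab.get(principal)
        if not versions:
            problems.append(f"{principal}: not present in keytab")
        elif kdc_kvno not in versions:
            problems.append(f"{principal}: KDC kvno {kdc_kvno}, keytab has {sorted(versions)}")
    return problems


if __name__ == "__main__":
    # Hypothetical sample output; replace with the real command output.
    keytab_out = """\
Keytab name: FILE:/etc/security/keytabs/spnego.service.keytab
KVNO Timestamp           Principal
---- ------------------- ------------------------------------------------------
   2 01/16/2019 15:00:00 HTTP/nn1.example.com@EXAMPLE.COM (aes256-cts-hmac-sha1-96)
   2 01/16/2019 15:00:00 HTTP/nn1.example.com@EXAMPLE.COM (aes128-cts-hmac-sha1-96)
"""
    kvno_out = "HTTP/nn1.example.com@EXAMPLE.COM: kvno = 3\n"
    print(diagnose(keytab_out, kvno_out))
    # ['HTTP/nn1.example.com@EXAMPLE.COM: KDC kvno 3, keytab has [2]']
```

The principal passed to `kvno` should be the one the client actually requests, i.e. `HTTP/` plus the hostname used in the URL as the Kerberos library resolves it, so a short name such as `datanode` versus the FQDN in the keytab is worth checking as well.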

Created 01-16-2019 05:17 PM


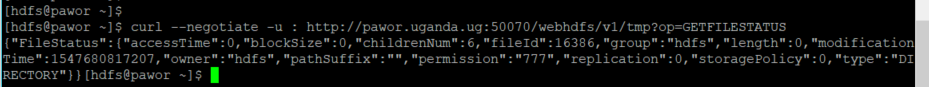

I just ran the command against a kerberized HDP 2.6.5 cluster and all is OK:

```
$ curl --negotiate -u : http://panda:50070/webhdfs/v1/tmp?op=GETFILESTATUS
{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":7,"fileId":16386,"group":"hdfs","length":0,"modificationTime":1539249388688,"owner":"hdfs","pathSuffix":"","permission":"777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}
```

Let me spin up an HDP 3.x cluster matching your spec, kerberize it, and get back to you. Are you using Active Directory for Kerberos authentication?
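The JSON shown above is what a successful GETFILESTATUS call returns, while the failure in the question is an HTML error page produced before the request reaches HDFS. When scripting a health check it can help to tell these apart, and also to recognize the `RemoteException` JSON that WebHDFS returns for server-side errors. A minimal sketch; `summarize_webhdfs_response` is a hypothetical helper, and the regular expressions assume the Jetty error-page layout seen in this thread:

```python
import json
import re

# Assumes the Jetty error-page layout seen in this thread:
# status in "HTTP ERROR nnn", reason inside <pre> after "Reason:".
HTML_STATUS = re.compile(r"HTTP ERROR (\d{3})")
HTML_REASON = re.compile(r"Reason:\s*<pre>\s*(.*?)\s*</pre>", re.S)


def summarize_webhdfs_response(body):
    """Classify a WebHDFS GETFILESTATUS response body as success or failure."""
    try:
        doc = json.loads(body)
    except ValueError:  # not JSON, e.g. an HTML error page
        doc = None
    if isinstance(doc, dict) and "FileStatus" in doc:
        status = doc["FileStatus"]
        return {"ok": True, "type": status.get("type"),
                "owner": status.get("owner"), "permission": status.get("permission")}
    if isinstance(doc, dict) and "RemoteException" in doc:
        exc = doc["RemoteException"]  # WebHDFS reports server-side errors as JSON
        return {"ok": False, "error": exc.get("exception"), "reason": exc.get("message")}
    # Anything else is treated as an HTML error page (authentication failures land here).
    code = HTML_STATUS.search(body)
    reason = HTML_REASON.search(body)
    return {"ok": False,
            "error": f"HTTP {code.group(1)}" if code else None,
            "reason": reason.group(1) if reason else None}
```

An `HTTP 401`/`403` with a `GSSException` reason points at SPNEGO authentication, whereas a `RemoteException` means authentication succeeded and HDFS itself rejected the operation.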

Created 01-17-2019 12:29 AM


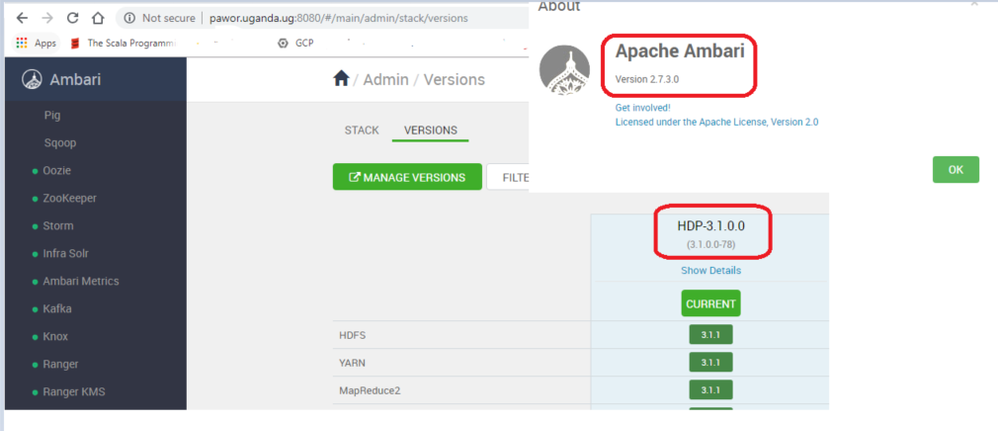

I have just completed the kerberization of a single-node CentOS 7 HDP 3.1.0.0 cluster running Ambari 2.7.3.0. Everything went as planned, with no hiccups.

See the attached screenshots.

krb5.conf

```
[libdefaults]
  renew_lifetime = 7d
  forwardable = true
  default_realm = UGANDA.UG
  ticket_lifetime = 24h
  dns_lookup_realm = false
  dns_lookup_kdc = false
  default_ccache_name = /tmp/krb5cc_%{uid}
  #default_tgs_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5
  #default_tkt_enctypes = aes des3-cbc-sha1 rc4 des-cbc-md5

[domain_realm]
  .uganda.ug = UGANDA.UG
  uganda.ug = UGANDA.UG

[logging]
  default = FILE:/var/log/krb5kdc.log
  admin_server = FILE:/var/log/kadmind.log
  kdc = FILE:/var/log/krb5kdc.log

[realms]
  UGANDA.UG = {
    admin_server = pawor.uganda.ug
    kdc = pawor.uganda.ug
  }
```

kadm5.acl

```
*/admin@UGANDA.UG *
```

kdc.conf

```
[kdcdefaults]
  kdc_ports = 88
  kdc_tcp_ports = 88

[realms]
  UGANDA.UG = {
    #master_key_type = aes256-cts
    acl_file = /var/kerberos/krb5kdc/kadm5.acl
    dict_file = /usr/share/dict/words
    admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
    supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
  }
```

To help with the diagnosis, can you align your configuration with mine (mapping in your own REALM and server values) and retest? Can you also try starting the services manually? Run the start command for the DataNodes on all the DataNodes:

```
su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-namenode/../hadoop/sbin/hadoop-daemon.sh start secondarynamenode"
su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-datanode/../hadoop/sbin/hadoop-daemon.sh start datanode"
```

Take a keen interest in the supported_enctypes and compare them with your cluster's. I don't think adding all the encryption types will help :-)
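One way to follow the suggestion to compare configurations is to flatten both files into `section.key` pairs and diff them. A minimal sketch, assuming a simplified krb5.conf/kdc.conf grammar (`[section]` headers, `key = value` lines, and one level of `NAME = { ... }` stanzas); `parse_krb5_style` and `diff_configs` are hypothetical helpers, not part of any Kerberos tooling:

```python
import re

SECTION = re.compile(r"^\[(\w+)\]$")


def parse_krb5_style(text):
    """Flatten a krb5.conf/kdc.conf-style file into {"section.key": value}.

    Simplified grammar (an assumption): nested `NAME = { ... }` stanzas become
    "section.NAME.key", repeated keys keep the last value, and lines starting
    with '#' or ';' are comments.
    """
    values, section, stanza = {}, "", None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        m = SECTION.match(line)
        if m:
            section, stanza = m.group(1), None
            continue
        if line == "}":
            stanza = None
            continue
        if "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if value == "{":
            stanza = key  # entering e.g. "UGANDA.UG = {"
            continue
        prefix = f"{section}.{stanza}." if stanza else f"{section}."
        values[prefix + key] = value
    return values


def diff_configs(mine, theirs, keys=None):
    """Return {key: (mine, theirs)} for keys whose values differ."""
    a, b = parse_krb5_style(mine), parse_krb5_style(theirs)
    wanted = keys if keys is not None else sorted(set(a) | set(b))
    return {k: (a.get(k), b.get(k)) for k in wanted if a.get(k) != b.get(k)}
```

Because realm names appear in the flattened keys (for example `realms.UGANDA.UG.supported_enctypes`), replace the realm name in one file's text with the other's before diffing, in line with the advice to map your REALM and server values.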

Created 01-17-2019 08:19 AM


Resend the LinkedIn invitation deleted by mistake.

Created 01-17-2019 08:58 AM


Thanks, I think I solved it.

Do you know what the problem was? Ambari wasn't creating (or re-creating) the principals and keytabs for HTTP/_HOST@DOMAIN.COM, so I had to do that by hand, with the correct encryption types.

Thank you for your help!

Out of interest: did you have to create the HTTP/_HOST principal yourself, or did Ambari create it automatically for you? If Ambari did, I wonder why it didn't on my machine. By the way, I'm using OpenLDAP as the LDAP/Kerberos database.
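Creating the SPNEGO principal by hand comes down to one `HTTP/<fqdn>@REALM` principal per host, where `<fqdn>` is what Hadoop substitutes for `_HOST` (the host's fully qualified name, lowercased). A minimal sketch that builds the `kadmin.local` queries; the keytab path (`/etc/security/keytabs/spnego.service.keytab`, Ambari's usual SPNEGO keytab location) and the per-host file suffix are assumptions, and both function names are hypothetical. Note that `ktadd` (alias `xst`) generates new random keys unless `-norandkey` is given (supported only by `kadmin.local`), which bumps the kvno and invalidates any keytab previously exported for that principal; the key types created follow the KDC's `supported_enctypes` unless `-e` is passed.

```python
def expand_host(principal_pattern, fqdn):
    """Substitute Hadoop's _HOST placeholder with the lowercased host FQDN."""
    service, sep, rest = principal_pattern.partition("/")
    if not sep:
        return principal_pattern  # no host component to substitute
    host, at, realm = rest.partition("@")
    if host == "_HOST":
        host = fqdn.lower()
    return f"{service}/{host}{at}{realm}"


def kadmin_spnego_queries(fqdns, realm,
                          keytab="/etc/security/keytabs/spnego.service.keytab"):
    """Build kadmin.local queries creating one HTTP/<fqdn> principal per host.

    The keytab path and the ".<fqdn>" suffix for per-host files are
    assumptions; copy each file to its host under the configured name.
    """
    queries = []
    for fqdn in fqdns:
        principal = expand_host(f"HTTP/_HOST@{realm}", fqdn)
        queries.append(f"addprinc -randkey {principal}")
        # ktadd (alias xst) re-randomizes the keys and bumps the kvno,
        # so any keytab exported earlier for this principal stops working.
        queries.append(f"ktadd -k {keytab}.{fqdn.lower()} {principal}")
    return queries


if __name__ == "__main__":
    for query in kadmin_spnego_queries(["nn1.example.com"], "EXAMPLE.COM"):
        print(f'kadmin.local -q "{query}"')
```

Each resulting keytab then has to be copied to its host with ownership and permissions that let the Hadoop services read it.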

Created 01-17-2019 10:34 AM


Glad to know your case was resolved. No, everything was generated automatically by running the Kerberos Wizard.

Created 01-17-2019 09:20 AM


Important question (should I post it as a new question? It follows on from your latest comment, so I'm posting it here): what should "default_tkt_enctypes", "default_tgs_enctypes" and "permitted_enctypes" ideally look like for a normal HDP cluster (not a test sandbox), so that they work 100% of the time and also provide a high level of security?

1. When I tried the default suggested settings of "des3-cbc-sha1 des3-hmac-sha1 des3-cbc-sha1-kd", I got errors saying the security level was too low. I then added "aes256-cts-hmac-sha1-96", but it seems more than one decent enctype is required for proper encryption?

2. The default Kerberos settings suggested by Ambari also list "des3-cbc-sha1 des3-hmac-sha1 des3-cbc-sha1-kd", but comment them out, so I guess Kerberos ends up using its built-in defaults, which doesn't seem stable (what if the defaults change over time or with a new version of Kerberos?).

3. Now I've added every possible value, "aes256-cts-hmac-sha1-96 aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal", but when I run ``xst -k`` in ``kadmin``, it exports only around 2-3 keytab entries with different encryption types, not all 8+. This suggests that only some of the types actually matter.
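Some background on the three points. On point 1: `des3-cbc-sha1`, `des3-hmac-sha1` and `des3-cbc-sha1-kd` are MIT Kerberos aliases for the same Triple DES enctype, so that default list really contains a single enctype, and RFC 8429 deprecates both Triple DES and RC4 (`arcfour-hmac`). On point 3: a `:normal` suffix is the key:salt syntax of kdc.conf `supported_enctypes`, not an enctype name for krb5.conf lists, and `xst`/`ktadd` exports one entry per key the principal actually has, which the KDC decides from `supported_enctypes` (or `-e`) when the keys are generated, not from krb5.conf. A minimal sketch that reviews an enctype list; the alias table covers only common names and `review_enctypes` is a hypothetical helper:

```python
# Common MIT Kerberos enctype names mapped to their aliases (not exhaustive).
ALIASES = {
    "aes256-cts-hmac-sha1-96": {"aes256-cts", "aes256-sha1"},
    "aes128-cts-hmac-sha1-96": {"aes128-cts", "aes128-sha1"},
    "des3-cbc-sha1": {"des3-hmac-sha1", "des3-cbc-sha1-kd"},
    "arcfour-hmac": {"rc4-hmac", "arcfour-hmac-md5"},
    "camellia256-cts-cmac": {"camellia256-cts"},
    "camellia128-cts-cmac": {"camellia128-cts"},
}
SINGLE_DES = {"des-cbc-crc", "des-cbc-md5", "des-hmac-sha1"}  # weak
DEPRECATED = {"des3-cbc-sha1", "arcfour-hmac"}  # RFC 8429


def canonical(name):
    """Map an enctype alias to one canonical name (unknown names pass through)."""
    for canon, aliases in ALIASES.items():
        if name == canon or name in aliases:
            return canon
    return name


def review_enctypes(value):
    """Review a krb5.conf enctype list such as default_tkt_enctypes.

    Returns (distinct canonical enctypes in order, list of notes).
    """
    distinct, notes = [], []
    for token in value.split():
        name, _, salt = token.partition(":")
        if salt:
            notes.append(f"{token}: ':{salt}' is kdc.conf key:salt syntax")
        canon = canonical(name)
        if canon in SINGLE_DES:
            notes.append(f"{name}: single DES (weak, off by default)")
        elif canon in DEPRECATED:
            notes.append(f"{name}: deprecated by RFC 8429")
        if canon not in distinct:
            distinct.append(canon)
    return distinct, notes


if __name__ == "__main__":
    print(review_enctypes("des3-cbc-sha1 des3-hmac-sha1 des3-cbc-sha1-kd"))
```

An AES-only list such as `aes256-cts-hmac-sha1-96 aes128-cts-hmac-sha1-96` avoids the deprecated types, assuming every client, including the cluster's JVMs, supports AES-256 (older JDK 8 updates needed the JCE Unlimited Strength policy files for that).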

Created 08-02-2021 01:38 AM


When I call the API below from Windows 2016, it fails, but the same call succeeds from Linux. Any idea how to resolve this?

```
C:\Users\atnadmin>D:\CDC\curl\curl -v -i --negotiate -u : "http://xxxx:50070/webhdfs/v1/?op=GETFILESTATUS"
* Trying 10.163.33.97...
* TCP_NODELAY set
* Connected to xxxx (xx.xx.xx.xx) port 50070 (#0)
> GET /webhdfs/v1/?op=GETFILESTATUS HTTP/1.1
> Host: xxxx:50070
> User-Agent: curl/7.54.1
> Accept: */*
>
< HTTP/1.1 401 Authentication required
HTTP/1.1 401 Authentication required
< Date: Mon, 02 Aug 2021 08:36:03 GMT
Date: Mon, 02 Aug 2021 08:36:03 GMT
< Date: Mon, 02 Aug 2021 08:36:03 GMT
Date: Mon, 02 Aug 2021 08:36:03 GMT
< Pragma: no-cache
Pragma: no-cache
< X-FRAME-OPTIONS: SAMEORIGIN
X-FRAME-OPTIONS: SAMEORIGIN
< WWW-Authenticate: Negotiate
WWW-Authenticate: Negotiate
< Set-Cookie: hadoop.auth=; Path=/; HttpOnly
Set-Cookie: hadoop.auth=; Path=/; HttpOnly
< Cache-Control: must-revalidate,no-cache,no-store
Cache-Control: must-revalidate,no-cache,no-store
< Content-Type: text/html;charset=iso-8859-1
Content-Type: text/html;charset=iso-8859-1
< Content-Length: 271
Content-Length: 271
<
* Ignoring the response-body
* Connection #0 to host xxxx left intact
* Issue another request to this URL: 'http://xxxx:50070/webhdfs/v1/?op=GETFILESTATUS'
* Found bundle for host slbdpapgr0b.ge.net: 0x224afe49300 [can pipeline]
* Re-using existing connection! (#0) with host xxxx
* Connected to xxxx (xx.xx.xx.xx) port 50070 (#0)
* Server auth using Negotiate with user ''
> GET /webhdfs/v1/?op=GETFILESTATUS HTTP/1.1
> Host: slbdpapgr0b.ge.net:50070
> Authorization: Negotiate TlRMTVNTUAABAAAAt4II4gAAAAAAAAAAAAAAAAAAAAAKADk4AAAADw==
> User-Agent: curl/7.54.1
> Accept: */*
>
< HTTP/1.1 403 java.lang.IllegalArgumentException
HTTP/1.1 403 java.lang.IllegalArgumentException
< Date: Mon, 02 Aug 2021 08:36:03 GMT
Date: Mon, 02 Aug 2021 08:36:03 GMT
< Date: Mon, 02 Aug 2021 08:36:03 GMT
Date: Mon, 02 Aug 2021 08:36:03 GMT
< Pragma: no-cache
Pragma: no-cache
< X-FRAME-OPTIONS: SAMEORIGIN
X-FRAME-OPTIONS: SAMEORIGIN
< Set-Cookie: hadoop.auth=; Path=/; HttpOnly
Set-Cookie: hadoop.auth=; Path=/; HttpOnly
< Cache-Control: must-revalidate,no-cache,no-store
Cache-Control: must-revalidate,no-cache,no-store
< Content-Type: text/html;charset=iso-8859-1
Content-Type: text/html;charset=iso-8859-1
< Content-Length: 293
Content-Length: 293
<
<html>
<head>
<meta http-equiv="Content-Type" content="text/html;charset=utf-8"/>
<title>Error 403 java.lang.IllegalArgumentException</title>
</head>
<body><h2>HTTP ERROR 403</h2>
<p>Problem accessing /webhdfs/v1/. Reason:
<pre>    java.lang.IllegalArgumentException</pre></p>
</body>
</html>
* Closing connection 0

C:\Users\atnadmin>
```

Thanks

Kamal

Created 08-04-2021 05:31 AM


@Kamalakannan I think curl works differently on Windows than it does on Linux. I found something that could help:

https://stackoverflow.com/questions/62912236/webhdfs-curl-negotiate-on-windows
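The token in the Windows trace can be checked directly: `TlRMTVNTUAAB...` decodes to bytes beginning with `NTLMSSP\0` followed by message type 1, i.e. curl (through Windows SSPI) sent an NTLM negotiate message rather than a Kerberos/SPNEGO token. Hadoop's SPNEGO authentication expects Kerberos, so the 403 looks consistent with the NTLM fallback that SSPI uses when it cannot obtain a Kerberos ticket for `HTTP/<host>` (for example when the cluster's realm is not trusted by the Windows logon domain). A minimal sketch that classifies the value of an `Authorization: Negotiate` header; `classify_negotiate_header` is a hypothetical helper:

```python
import base64

NTLM_SIGNATURE = b"NTLMSSP\x00"  # every NTLM message starts with this


def classify_negotiate_header(value):
    """Classify the token in an 'Authorization: Negotiate <base64>' header value."""
    scheme, _, token = value.strip().partition(" ")
    if scheme.lower() != "negotiate" or not token.strip():
        return "not a Negotiate token"
    raw = base64.b64decode(token.strip())
    if raw.startswith(NTLM_SIGNATURE):
        # Bytes 8-11 hold the little-endian NTLM message type (1 = NEGOTIATE).
        msg_type = int.from_bytes(raw[8:12], "little")
        return f"NTLM (message type {msg_type})"
    if raw[:1] == b"\x60":
        # GSS-API InitialContextToken (RFC 2743), as sent for SPNEGO/Kerberos.
        return "GSS-API initial token (SPNEGO/Kerberos)"
    return "unknown"
```

Given the `Negotiate TlRMTVNTUAAB...` value from the trace, it reports an NTLM message of type 1; a Kerberos attempt instead starts with the ASN.1 byte `0x60`, which typically shows up in base64 as `YI...`.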

Created 08-08-2021 10:21 PM


@Kamalakannan, has the reply helped resolve your issue? If so, please mark the appropriate reply as the solution, as it will make it easier for others to find the answer in the future.

Regards,

Vidya Sargur, Community Manager
