- Benchmarking Hadoop with TeraGen, TeraSort, and Te...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Created on 12-13-2016 03:50 AM - edited 08-17-2019 07:22 AM

I have written articles in the past benchmarking Hadoop cloud environments such as BigStep and AWS. What I didn't dive into in those articles is how I ran the benchmarks. I built scripts to rapidly launch TeraGen, TeraSort, and TeraValidate. Why? I found myself running the same commands over and over again, so why not make it easier by simply executing a shell script?

All scripts I mentioned are located here.

Grab the following files

- teragen.sh

- terasort.sh

- validate.sh

To run TeraGen, TeraSort, and TeraValidate, you first need to decide on the volume of data and the number of records. For example, you can generate 500 GB of data with 5000000000 rows (each TeraGen row is 100 bytes).
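The relationship between size and rows can be sketched as a small helper. This is only an illustration: `rows_for_size` is a hypothetical function name, not part of the scripts, and it assumes decimal units (1G = 10^9 bytes), which is what the size and row pairs in this article work out to.

```bash
#!/usr/bin/env bash
# TeraGen writes fixed-size 100-byte rows, so rows = bytes / 100.
# Assumption: sizes use decimal units (1G = 10^9 bytes, 1T = 10^12 bytes).
rows_for_size() {
  local size="$1"
  local number="${size%[GT]}"   # numeric part, with a trailing G or T removed
  local unit="${size: -1}"      # last character of the argument
  local bytes_per_unit
  case "$unit" in
    G) bytes_per_unit=1000000000 ;;
    T) bytes_per_unit=1000000000000 ;;
    *) echo "unsupported unit in '$size' (use G or T)" >&2; return 1 ;;
  esac
  echo $(( number * bytes_per_unit / 100 ))
}

rows_for_size 500G   # prints 5000000000
rows_for_size 1T     # prints 10000000000
```

This is handy if you want a dataset size that is not one of the predefined pairs.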

The script comes with the following predefined sets

    #SIZE=500G
    #ROWS=5000000000
    #SIZE=100G
    #ROWS=1000000000
    #This will be used as it only value uncommented out
    SIZE=1T
    ROWS=10000000000
    # SIZE=10G
    #ROWS=100000000
    # SIZE=1G
    # ROWS=10000000

Above, SIZE=1T (one terabyte) and ROWS=10000000000 are the only uncommented values, meaning this script will generate 1 TB of data with 10000000000 rows. If you want a different dataset size, comment out that pair and uncomment the SIZE and ROWS pair you want. Only one SIZE and one ROWS should be set (uncommented) at a time. This applies to all three scripts (teragen.sh, terasort.sh, validate.sh), and all of them must have the same SIZE and ROWS settings.
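Since a mismatch between the three scripts is easy to miss, a quick check along these lines may help. It is a sketch, not part of the original scripts: the function names are hypothetical, and it assumes each setting sits on its own line with nothing after the value.

```bash
#!/usr/bin/env bash
# Print the active (uncommented) settings of one script as "SIZE=... ROWS=...".
# Fails unless the file has exactly one active SIZE line and one active ROWS line.
active_settings() {
  local file="$1"
  [ -r "$file" ] || { echo "$file: cannot read file" >&2; return 1; }
  local size_count rows_count
  size_count=$(grep -cE '^[[:space:]]*SIZE=' "$file")
  rows_count=$(grep -cE '^[[:space:]]*ROWS=' "$file")
  if [ "$size_count" -ne 1 ] || [ "$rows_count" -ne 1 ]; then
    echo "$file: expected one active SIZE and ROWS, found $size_count and $rows_count" >&2
    return 1
  fi
  local size_line rows_line
  size_line=$(grep -E '^[[:space:]]*SIZE=' "$file" | tr -d '[:space:]')
  rows_line=$(grep -E '^[[:space:]]*ROWS=' "$file" | tr -d '[:space:]')
  echo "$size_line $rows_line"
}

# Succeed only if every script passed in has the same active settings.
check_scripts_agree() {
  local first="" current file
  for file in "$@"; do
    current=$(active_settings "$file") || return 1
    if [ -z "$first" ]; then
      first="$current"
    elif [ "$current" != "$first" ]; then
      echo "$file: '$current' differs from '$first'" >&2
      return 1
    fi
  done
  echo "$first"
}

# Usage, from the directory holding the scripts:
#   check_scripts_agree teragen.sh terasort.sh validate.sh
```

Lines such as `#SIZE=500G` or `# SIZE=10G` start with `#`, so they are not counted as active.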

Logs

A logs directory is created based on where you run the script. Run output and stats are stored in this logs directory.

For example, if you run /home/sunile/teragen.sh, it will create the logs directory at /home/sunile/logs. All the logs from TeraGen, TeraSort, and TeraValidate will reside there.
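For readers curious how a script can place its logs this way, here is one possible sketch. It assumes the logs directory sits beside the script file itself, which matches the /home/sunile example but may not be exactly how the actual scripts resolve the path. `make_log_dir` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Assumption: logs go in a "logs" directory next to the script file.
# Creates the directory if needed and prints its absolute path.
make_log_dir() {
  local script_path="$1"
  local script_dir
  script_dir=$(cd "$(dirname "$script_path")" && pwd) || return 1
  mkdir -p "$script_dir/logs" || return 1
  echo "$script_dir/logs"
}

# Inside a script you might call:
#   LOG_DIR=$(make_log_dir "$0")
```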

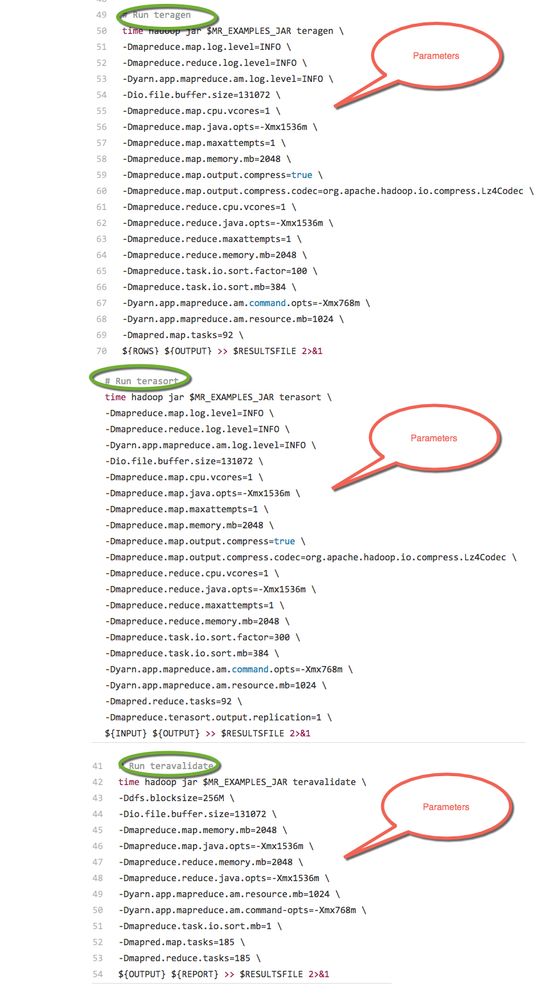

Parameters

This is an important piece for tuning. To benchmark your environment, parameters should be configured. Much of this is trial and error, and experience helps here, i.e., knowing how each parameter impacts a MapReduce job. Get help here.

For tuning change/add parameters here:

For ease of first-time execution, use the parameters set in the script. Run it as is and grab your stats. If the stats are acceptable, then move on. What is acceptable? Take a look at the articles I published on BigStep and AWS. If the stats are not acceptable, start tuning.
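As a rough illustration of what tuning looks like on the command line, MapReduce properties can be passed to TeraGen as `-D` options ahead of the row count and output directory. The sketch below only builds and prints the command so you can inspect it before running it. The jar path, output directory, and property values are placeholders and not the settings from the scripts; `build_teragen_command` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Placeholder values: adjust all of these for your own cluster.
EXAMPLES_JAR="${EXAMPLES_JAR:-/path/to/hadoop-mapreduce-examples.jar}"
ROWS=10000000000
OUTPUT_DIR="/tmp/teragen-1T"   # hypothetical HDFS output directory

# Build a teragen command line: jar, rows, output dir, then key=value properties.
build_teragen_command() {
  local jar="$1" rows="$2" out="$3"
  shift 3
  local cmd=(hadoop jar "$jar" teragen)
  local param
  for param in "$@"; do
    cmd+=("-D$param")          # each property becomes a -Dkey=value option
  done
  cmd+=("$rows" "$out")
  printf '%s\n' "${cmd[*]}"
}

# Illustrative values only, not recommendations.
build_teragen_command "$EXAMPLES_JAR" "$ROWS" "$OUTPUT_DIR" \
  mapreduce.job.maps=200 \
  mapreduce.map.memory.mb=2048
```

Change one parameter at a time between runs so you can tell which change moved the numbers.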

Run the jobs in the following order

- TeraGen (teragen.sh)

- TeraSort (terasort.sh)

- TeraValidate (validate.sh)
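The order matters because TeraSort sorts the data TeraGen produced, and TeraValidate checks the output of TeraSort. If you want to chain the three, a small wrapper like the following could work. It is a sketch: `run_benchmark` is a hypothetical function, and it assumes all three scripts sit in the same directory and return a non-zero exit code on failure.

```bash
#!/usr/bin/env bash
# Run the three stages in order and stop at the first one that fails.
run_benchmark() {
  local script_dir="$1"
  local script
  for script in teragen.sh terasort.sh validate.sh; do
    echo "Running $script"
    if ! bash "$script_dir/$script"; then
      echo "$script failed; stopping" >&2
      return 1
    fi
  done
  echo "All three stages finished"
}

# Usage:
#   run_benchmark /home/sunile
```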

Hope these scripts help you quickly benchmark your environment. Now go build some cool stuff!

Created on 06-02-2017 04:20 PM

Hi @smanjee. Thank you for the steps and explaining how to use the scripts.

I'm new to this kind of testing. Can you explain what points I should observe? I want to learn how to benchmark an environment.

Thanks.

Created on 06-06-2017 08:35 AM

This is helpful. Thanks a lot.

Created on 03-19-2019 11:16 AM

Thank you for posting this!

I am running a 4-node Ambari 2.7.3 cluster and I would like to run your tests.

When I look for hadoop-mapreduce-examples.jar on my cluster, I find it in /hdp/apps/3.1.0.0-78/mapreduce/mapreduce.tar.gz

So, please help me make a workflow to execute each of these scripts.

Created on 11-25-2019 12:02 AM - last edited on 11-26-2019 08:11 PM by ask_bill_brooks

@sunile_manjee Your article is very good and informative. I was searching for Benchmarking Hadoop with TeraGen, TeraSort, and TeraValidate with ease and found exactly this article. Thank you for sharing it. The way you have written it is also good; you covered all the points I was searching for, and I am impressed. Keep writing and sharing educational articles like this, which help us grow our knowledge.

Regards : Sevenmentor